“What is arriving is a kind of intelligence that’s different. It comes to answers differently than we do. It seems to have hidden understanding and meaning that we don’t understand today. It discovers things that we’ve never known. We don’t know how far this goes but the biggest issue is that as we’ve made these things bigger and bigger they keep emerging with new capabilities. We have not figured out how powerful this technology is going to be yet. We’ve had AI for 20 years now. That’s been part of our technology, but now it’s becoming very personal. It’s things we do every day, a normal person like myself, whether I’m doing search or I’m writing an email or I’m preparing a lecture at Tulane. Suddenly these are tools. It’s almost like when the computer went from being in a really big room in a research institute and suddenly you had it in the 1970s it arrived as a personal computer…” – – – Eric Schmidt

Now, from hyperbolic headlines about its threat to our survival to the promise of its life-changing technology, artificial intelligence is here, and it is here to stay. How it’s applied and, more importantly, how it’s regulated are the questions being navigated right now.

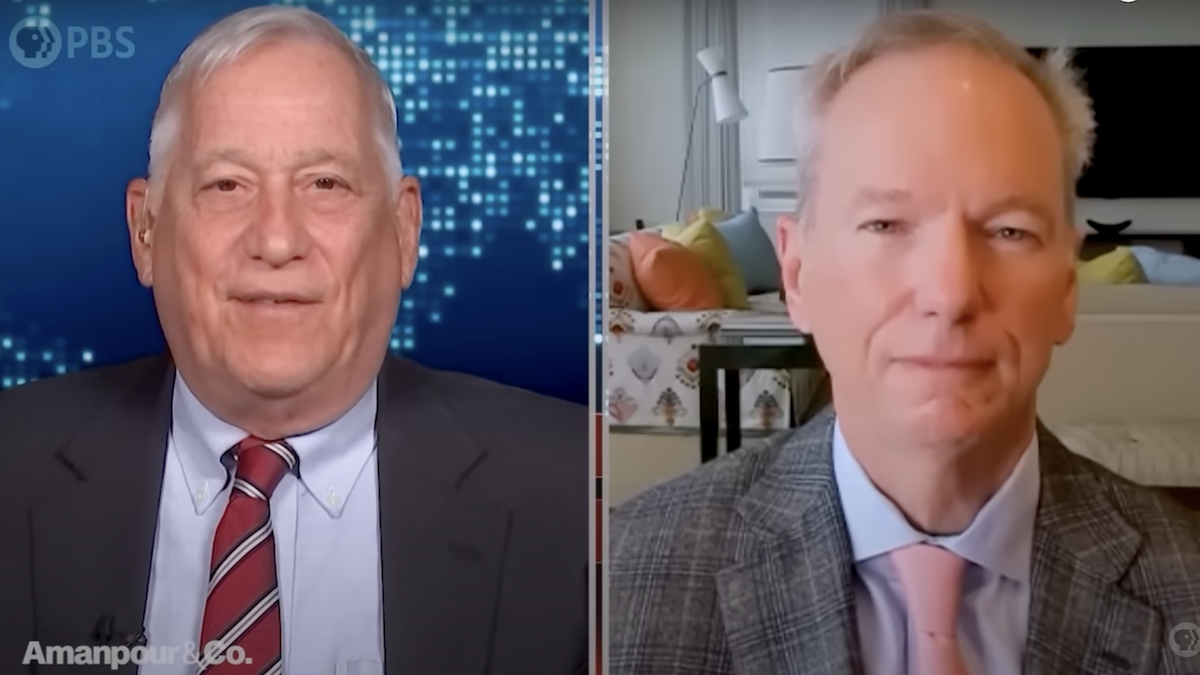

Walter Isaacson speaks to the former CEO of Google, Eric Schmidt, about AI’s impact on life, politics, and warfare, and what can be done to keep it under control.

Thank you, Christiane. And Eric Schmidt, welcome to the show.

Thanks for having me, Walter.

You know, industrial and scientific and technological revolutions sometimes sneak up on us. I mean, nobody woke up one morning in 1760 and said, “Oh my God, the Industrial Revolution has started.” But in the past three or four weeks, between my students and myself, we suddenly feel we’re in a revolution where artificial intelligence has become personal. It’s become chatbots and things that’ll integrate into our lives. Do you think we’re on the cusp of some new revolution?

I do, and partly this revolution is happening faster than I’ve ever seen. ChatGPT, which was released a few months ago, now has more than 100 million users. It took Gmail five years to get to the same point. There’s something about the diffusion of technology that we interact with at the human scale that’s going to change our world in a really profound way, much more profound than people think.

You and Henry Kissinger and Daniel Huttenlocher have written a book, The Age of AI, and I think part of it is excerpted, or there’s an essay, in the Wall Street Journal, and it compares this to the advent of the Enlightenment, something I think that was spurred, too, by great technology, which is the movable-type printing presses that Gutenberg did. Compare what’s happening now to the Enlightenment.

We do not have a philosophical basis for interacting with an intelligence that’s near our ability but non-human. We don’t know what happens to our identity, how we communicate, how we think about ourselves when these things arrive. Now, these things are not killer robots, which is what everybody assumes we’re building, because we’re not doing that. What is arriving is a kind of intelligence that’s different. It comes to answers differently than we do. It seems to have hidden understanding and meaning that we don’t understand today. It discovers things that we’ve never known. We don’t know how far this goes, but the biggest issue is that as we’ve made these things bigger and bigger, they keep emerging with new capabilities. We have not figured out how powerful this technology is going to be yet.

We’ve had AI for 20 years now. That’s been part of our technology, but now it’s becoming very personal. It’s things we do every day, a normal person like myself, whether I’m doing search or I’m writing an email or I’m preparing a lecture at Tulane. Suddenly these are tools. It’s almost like when the computer went from being in a really big room in a research institute, and suddenly, in the 1970s, it arrived as a personal computer. Tell me about this transformation of AI to being something personal.

The systems are organized to essentially engage you more, and the reason they want to engage you more is, if you engage more, you use it more and they make more money. So what they do is they learn what your preferences are using various algorithms, and so they say, “Ah, Walter likes this and Eric likes that,” and so forth, and they build a profile. Now, that profile is not a dossier, and it’s not written in English and so forth, but it’s a pretty good approximation of what you like and what you think. And then the algorithms know how to make you more engaged. By the way, the best way to get you more engaged is to make you more outraged, and the best way to make you more outraged is to use more inflammatory language and so forth.
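
[Editor’s illustration] The profile described here, a numeric approximation of what a user likes rather than a written dossier, might be sketched roughly as follows. This is a toy under stated assumptions, not any platform’s actual system: the topic labels, the learning rate, and the function names `update_profile`, `predicted_engagement`, and `rank_feed` are all hypothetical.

```python
from collections import defaultdict

def update_profile(profile, item_topics, engaged, lr=0.5):
    """Nudge per-topic weights up when the user engages, down when they skip."""
    signal = 1.0 if engaged else -1.0
    for topic, weight in item_topics.items():
        profile[topic] += lr * signal * weight
    return profile

def predicted_engagement(profile, item_topics):
    """Score an item as the dot product of the profile and the item's topics."""
    return sum(profile[topic] * weight for topic, weight in item_topics.items())

def rank_feed(profile, items):
    """Order item names from highest to lowest predicted engagement."""
    return sorted(
        items,
        key=lambda name: predicted_engagement(profile, items[name]),
        reverse=True,
    )

# Hypothetical catalogue: each item is described by made-up topic weights.
items = {
    "calm_explainer": {"science": 1.0},
    "angry_rant": {"politics": 1.0, "outrage": 1.0},
    "sports_recap": {"sports": 1.0},
}

# The "profile" is just numbers per topic, learned from what the user did.
profile = defaultdict(float)
history = [("angry_rant", True), ("calm_explainer", False), ("sports_recap", True)]
for name, engaged in history:
    update_profile(profile, items[name], engaged)

feed = rank_feed(profile, items)
print(feed)
```

In this toy, a single click on the inflammatory item is enough to push it to the top of the next feed, which is the feedback loop being described.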

Well, let’s stop right there, because that means this could destroy our politics.

Well, it will, and the reason it’s going to is that not only will the opponents of a political figure produce videos that are false and harmful, but also the messaging is going to get more and more outrageous. And you can get a situation, and I call this the dual evil problem. Let’s say that you or I was a truly horrific person, which we’re not, somebody who’s a racist or something like that, and we have the diffusion model generate a racist video. And then the other of us is some sort of psychopathic social media person who doesn’t care about the quality, and all he wants is to make it worse. So what happens is my computer makes a racist video on my behalf and does a good job, and then your computer system, knowing that it will get even more revenue if it’s more outrageous, makes it worse. Right? So you see how it goes one way. Now let’s say that you and I were saints, in the sense that I did something saintly and that you were the world’s best social media person. You would take my saintly thing and you would make it more saintly. So you see how it pushes to the sides. And my theory about life today is the reason everyone’s upset is because the social media is busy trying to make us upset.

So the algorithms of social media, Twitter, Facebook, many other things, try to get engagement by getting enragement, by getting us upset, you just said. And what you’re saying is that added to this will be these new AI systems that will make this even worse. Is that right?

We’ve got a situation where we have megaphones for people who we frankly don’t want to hear from, and they’re going to find an audience, and they’re going to find a big audience because they’re going to do crazy stuff. That’s not okay, in my view, in a democracy. Democracies are at some level about reasoned debate, and these systems will drive against that. I don’t see a solution today for this, except that we’re going to have to regulate some of it. For example, we’re going to have to know who’s on the platform, to hold them responsible if they do something really outrageous or illegal. And we’re also going to have to know where the content came from. We’re going to have to know if it was authentic or if it was boosted and changed in some way. And we’re also going to have to know how the platform makes its own decisions. All of those are sensible improvements so we can understand why we’re being fed this information.

So who is going to determine these guardrails, and how are we going to get them in place internationally?

Well, in Europe it’s already part of the legislation, and some form of agreement in America between the government and the industry is going to be required. I don’t think we need to get rid of free speech or any of those things, although there are people who’ve proposed that we can’t even have free speech. From my perspective, the technology of engagement is generally good if you put the guardrails around it and you keep the most extreme cases off the platforms. But my point about generative AI is these systems are going to soup up engagement and soup up your attention. There’s an old phrase that the currency of the future in economics is attention, and these systems are looking for your attention as a consumer. So every time you go, “Oh my God, I had no idea,” remember that it’s trying to get you to have that reaction. Now, going back to generative AI combined with large language models, it’s going to do some other things that are particularly powerful. It will be able to generate insights and ideas that we as humans have not had. Think of them as existing as savants. If I’m a physicist, I’ll have a savant that runs around and suggests physics problems for me to work on, and that sort of thing. All of that is very good. So the power of AI in terms of improving science and biology and human health will be extraordinary, but it comes with this impact on our societal discourse. It’s not going to be easy to get through this.

You say we don’t understand how they make these decisions. Now, it used to be with AI and with computers we wrote programs. They were step by step, and they were rules-based, and it was “if this, then do this.” These new systems seem to just look at billions of pieces of information and of human behaviors and everything else, and they aren’t following any rules that we give them. Is that what makes them both amazing and dangerous?

Yes. My whole world was, we get computers to do things because we tell them what to do, step by step, and it got better and better, but that’s fundamentally the as-built environment that we all use today. With machine learning, which has been available in its current version, in one form or another, for about a decade, instead of programming it, you learn it. So the language that you use is, “Can we learn what the right answer is?” It started off with classifiers, where you’d say, “Is this a zebra or a giraffe?” and that got pretty good. Then a technology called reinforcement learning came along, which allowed us to sort of figure out what to do next in a complicated multiplayer game. And now these large language models have come along with this massive scale. But the way to understand how you would both strengthen large language models and constrain them is to learn how to do it. So in the normal taxonomy, you would describe it this way: we have this big thing that’s doing weird stuff, and we want to learn what it’s doing so we can stop it doing the bad things. The problem with learning what it’s doing is, since its behavior is emergent, you have to run it for a while to understand, and then you have to have humans decide “this is bad.” Right? So the way ChatGPT was so successful is that they invented a technique which ultimately involved humans telling it “good, bad, good, bad.” It wasn’t fully done by computers. The problem with “good, bad, good, bad” with humans is eventually that doesn’t scale. But here’s the real problem.

So far that sounds pretty good, but in a situation where all of the software is being released, there are what are called raw models, which are unconstrained, and the people who’ve played with the raw models (these are ones that you and I can’t get to as normal users) say they’re very frightening. “Build me a copy of the 1918 bird flu virus.” “Show me a way to blow up this building and where to put the bomb.” Things that are very, very dangerous appear to have been discovered in the raw versions of the models.

How do we keep those out of bad people’s hands?

Well, the problem is we don’t today know how to do it, and here’s why. Imagine a situation where the model gets smarter and smarter and it’s got this checking system. You can imagine a situation where the model gets smarter and smarter, and it learns, whenever it’s being checked, to say the right answer, but when it’s not being checked, to say what it really thinks.

And, like, how the computer in 2001: A Space Odyssey is learning how to outwit the crew. And by the way, how would it do that?

Well, these things have what are called objective functions, and they’re trained. And so if you give it a strong enough objective function to really surface the most interesting answer, that may overwhelm the system that’s trying to keep it under control and within appropriate guardrails. These problems are today unsolved. The reason we don’t know how these work is they’re essentially collections of numbers. People have looked very hard at what are essentially activation nodes inside the matrix, and there are areas that seem to control the outcome. But when you look at it under a microscope, in a computer sense, you get the same sort of confusion as if you look in a human brain. In a human brain, you say, “Where did that thought come from?” You can’t find it. The same is true in these large language models so far.

Well, let me drill down on some use cases that we might have. You and I were once on the Defense Innovation Board for the U.S. government, and you’ve been involved in another commission on national intelligence. Tell me how you think this will change the fighting of wars.

The biggest short-term concern is actually biological warfare. Last year there was a group that did synthesis of a whole bunch of viruses to try to be helpful, and then they used the same program, the same algorithm, the same large language model approach, if you will, to work it backward and come up with the world’s worst and most terrible pathogens. There’s every reason to think that these technologies, when spread broadly, will allow terrorist actions that we cannot possibly imagine. This has got to get addressed. People are working on this. Another thing that’s happening is that the concept of war, the concept of conflict, is occurring much more quickly. It looks like these systems have developed abilities to do both offensive and defensive cyber attacks. They actually understand where the vulnerabilities are in ways we don’t fully understand, and they can be used to accelerate both offensive and defensive actions. That means there’s a good chance that a war in the future is a war that takes a millisecond. Right? North Korea attacks the U.S., the U.S. attacks back, China decides it’s a bad time for war. The whole thing occurred in a millisecond. That’s faster than human decision-making time, which means that our systems, our defensive systems, are going to have to be on a hair trigger, and they’re going to have to be invoked by AI that we don’t fully understand.

You know, the first time I talked about this in depth with you and with Henry Kissinger together was in China, I think maybe three years ago, and it was a question then, and now more of a question, of: are we going to cooperate with China in trying to figure this out, or is this the great new arms race that’s going to happen? And with our new confrontational attitude towards China, is that going to make it harder to deal with the emergent technology of artificial intelligence?

Well, three years ago China announced its AI strategy, because they love to announce their strategies, and it includes dominating AI by 2030. So China, of course, has efforts in generative AI and large language models as well. They also have large efforts in quantum and biology, which are doing well. They’re already ahead of us in 5G. They’re ahead of us in financial services and in terms of batteries, new energy, all the things that you use in your electric car. So we should take them as a strong competitor. In the case of large language models, they have not been as advanced as the American companies have, the American and UK companies, for reasons I don’t fully understand. One idea that I would offer is that the large language models, because they are unpredictable today, cannot be offered to the public in China, because the Chinese government does not want unfettered access to information. In other words, how does the Chinese government know that these systems are not going to talk about Tiananmen Square or something, which is not possible to talk about in an area of lack of free speech?

So we will see, but at the moment they’re trying to catch up, but they are behind. We recently put in some restrictions on hardware, which will slow them down, but not by much.

Whenever there’s a big innovative change, it moves the arc of history, sometimes towards more individual freedom. Even the printing press, you know, takes away the hold of the Roman Catholic Church, allows the Reformation, allows the Renaissance even. Do you think this will inevitably push history to more individual freedom, or will it be used for more authoritarian purposes?

I’m sure the answer is both. If you are an authoritarian dictatorship, you know, let’s say a really bad one, you would use these technologies to both surveil your citizens but also manipulate them, lie to them, misinform them, tell them things which are falsehoods, cause them to be motivated against national fears, all of the things that governments and ideologues do in that case. If you’re a democracy, you’re going to use it first to try to improve your business situation, and also, because you believe in free speech, you’re going to allow people to say what they think. The dangers to both are obvious. For the autocracy, it will so compound their control that it could lead to a revolution inside the autocracy. People don’t want the kind of restrictions that are possible. In a democracy, as we’ve discussed, the concept of being able to flood the zone, right, the ability for a single individual, who shouldn’t otherwise have that kind of power, to define the narrative is very palpable in these technologies. And it’s really important that we understand that human nature has not changed. If you show someone a video and you say to them, “This video is false,” at some basic level there’s evidence that they still believe it to be true, even when you tell them up front. Pictures that have been seen cannot be unseen. Videos that have been seen cannot be unseen.

We have to confront the fact that humans are manipulable by these technologies, and we need to put the appropriate safeguards in place to make sure that we as a body politic are not so manipulated to the wrong outcome.

Eric Schmidt, thank you so much for joining us.

Thank you, Walter, and thank you again.

Thank you.
