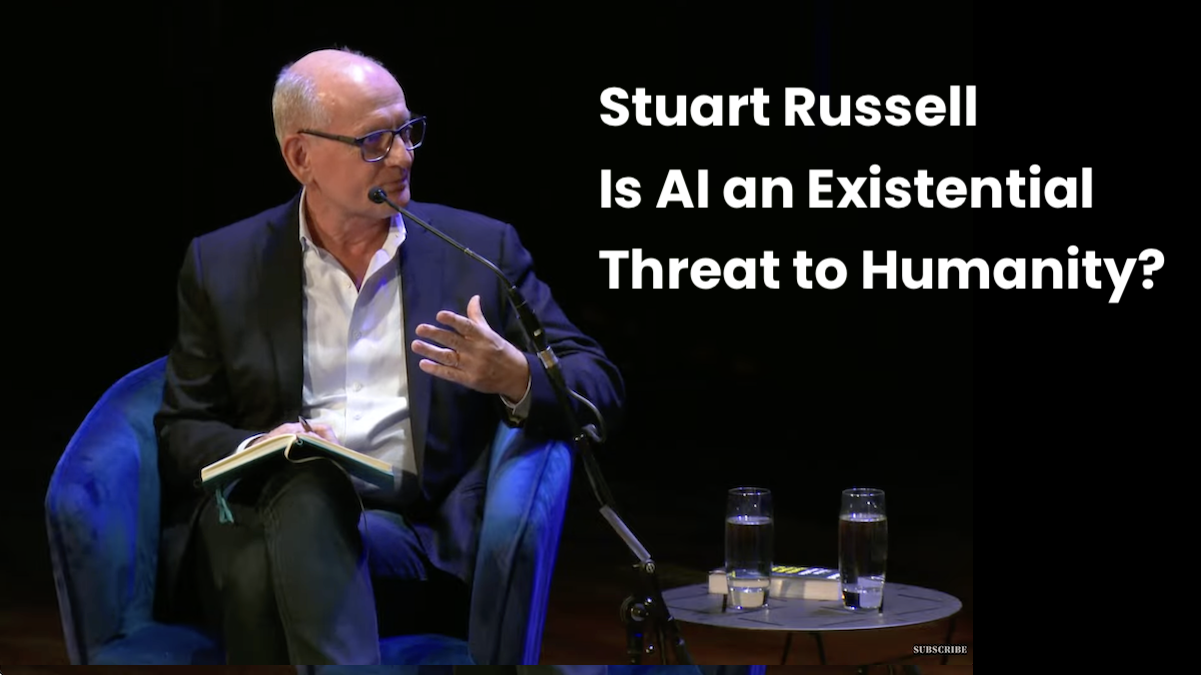

Stuart Russell on AI and Jobs: By the end of this decade, AI may exceed human capabilities in every dimension and perform work for free, so there may be more employment; it just won't be employment of humans.

Stuart Russell: Saying AI is like the calculator is a [...]