STANFORD HAI. HUMAN-CENTERED ARTIFICIAL INTELLIGENCE.

Artificial Intelligence Index Report 2023.

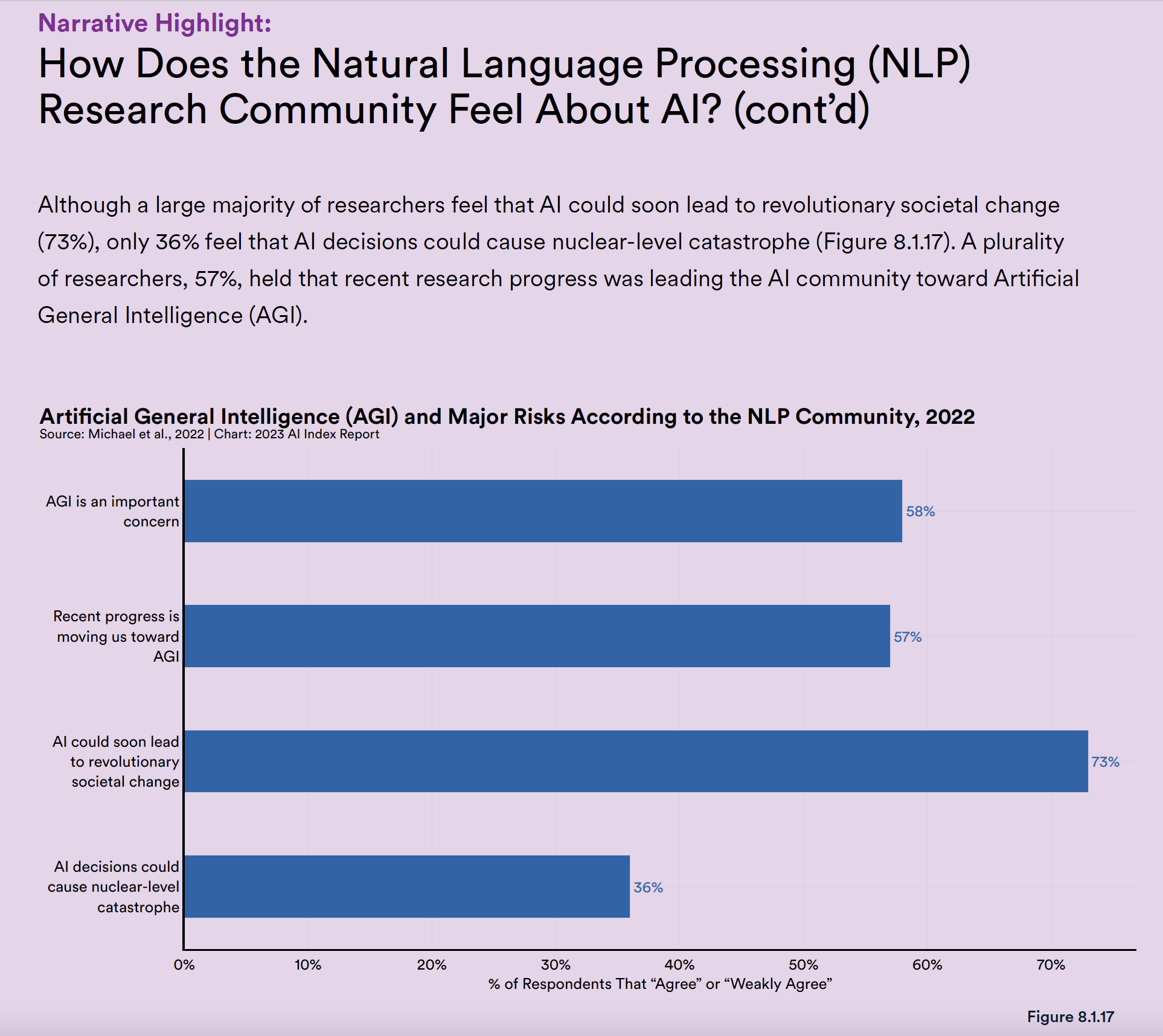

Although a large majority of researchers feel that AI could soon lead to revolutionary societal change (73%), only 36% feel that AI decisions could cause nuclear-level catastrophe (Figure 8.1.17). A majority of researchers, 57%, held that recent research progress was leading the AI community toward Artificial General Intelligence (AGI). Page 337.


- FORTUNE. A.I. could lead to a ‘nuclear-level catastrophe’ according to a third of researchers, a new Stanford report finds. 10 April 2023.

By Tristan Bove

It was a blockbuster 2022 for artificial intelligence. The technology made waves, from Google’s DeepMind predicting the structure of almost every known protein to the successful launches of OpenAI’s generative A.I. tools DALL-E and ChatGPT. The sector now looks to be on a fast track towards revolutionizing our economy and everyday lives, but many experts remain concerned that changes are happening too fast, with potentially disastrous implications for the world.

Many experts in A.I. and computer science say the technology is likely a watershed moment for human society. But not all of them mean that as a positive: 36% of researchers warn that decisions made by A.I. could lead to “nuclear-level catastrophe,” according to a survey cited in an annual report on the technology by Stanford University’s Institute for Human-Centered A.I., published earlier this month.

Almost three quarters of researchers in natural language processing, the branch of computer science concerned with enabling computers to understand and generate human language, say the technology might soon spark “revolutionary societal change,” according to the report. And while an overwhelming majority of researchers say the future net impact of A.I. and natural language processing will be positive, concerns remain that the technology could soon develop potentially dangerous capabilities, while A.I.’s traditional gatekeepers are no longer as powerful as they once were.

“As the technical barrier to entry for creating and deploying generative A.I. systems has lowered dramatically, the ethical issues around AI have become more apparent to the general public. Startups and large companies find themselves in a race to deploy and release generative models, and the technology is no longer controlled by a small group of actors,” the report said.

A.I. fears over the past few months have mostly been confined to the technology’s disruptive implications for society. Companies including Google and Microsoft are locked in an arms race over generative A.I., systems trained on troves of data that can generate text and images from simple prompts. But as OpenAI’s ChatGPT has already shown, these technologies could quickly threaten livelihoods. If generative A.I. lives up to its potential, the equivalent of up to 300 million full-time jobs worldwide could be exposed to automation, according to a Goldman Sachs research note last month, with legal and administrative professions the most exposed.

Goldman researchers noted that A.I.’s labor market disruption could be offset in the long run by new job creation and improved productivity, but generative A.I. has also sparked fears over the technology’s tendency to be inaccurate. Both Microsoft’s and Google’s A.I. offerings have frequently made untrue or misleading statements, with one recent study finding that Google’s Bard chatbot can create false narratives on nearly eight out of 10 topics. A.I.’s imprecision, along with its tendency to veer into disturbing conversations during long sessions, has pushed developers and experts to warn that the technology should not be used to make major decisions just yet.

But the fast pace of A.I. development means that companies and individuals who don’t take risks with it could be left behind, and the technology could soon advance so much that we may not have a choice.

According to 57% of the researchers in the survey cited by Stanford, recent research progress is leading the field toward artificial general intelligence. Artificial general intelligence, or AGI, is an A.I. system that can accurately mimic or even outperform the capabilities of a human brain. There is very little consensus over when AGI could happen, with some experts claiming it will take 50 years and others hundreds, while some researchers question whether true AGI is possible at all.

But if AGI does become reality, it would likely represent a seminal moment in human history and development, with some even fearing it could represent a technological singularity, a hypothetical future moment when humans lose control of technological growth and their creations gain above-human intelligence. Around 58% of the researchers surveyed called AGI an “important concern.”

The survey found that experts’ most pressing concern is that current A.I. research focuses too much on scaling and optimizing benchmark performance, while failing to include insights from other research fields. Other experts have raised similar concerns, calling for major developers to slow the pace of A.I. rollout as ethics research continues. Elon Musk and Steve Wozniak were among the 1,300 signatories of an open letter last month calling for a six-month pause on training A.I. systems more powerful than GPT-4 as research continues into the technology’s larger implications.