THE ECONOMIST. How generative models could go wrong.

A big problem is that they are black boxes.

Apr 19th 2023.

In 1960 Norbert Wiener published a prescient essay. In it, the father of cybernetics worried about a world in which “machines learn” and “develop unforeseen strategies at rates that baffle their programmers.” Such strategies, he thought, might involve actions that those programmers did not “really desire” and were instead “merely colourful imitation[s] of it.” Wiener illustrated his point with the German poet Goethe’s fable, “The Sorcerer’s Apprentice”, in which a trainee magician enchants a broom to fetch water to fill his master’s bath. But the trainee is unable to stop the broom when its task is complete. It eventually brings so much water that it floods the room, having lacked the common sense to know when to stop.

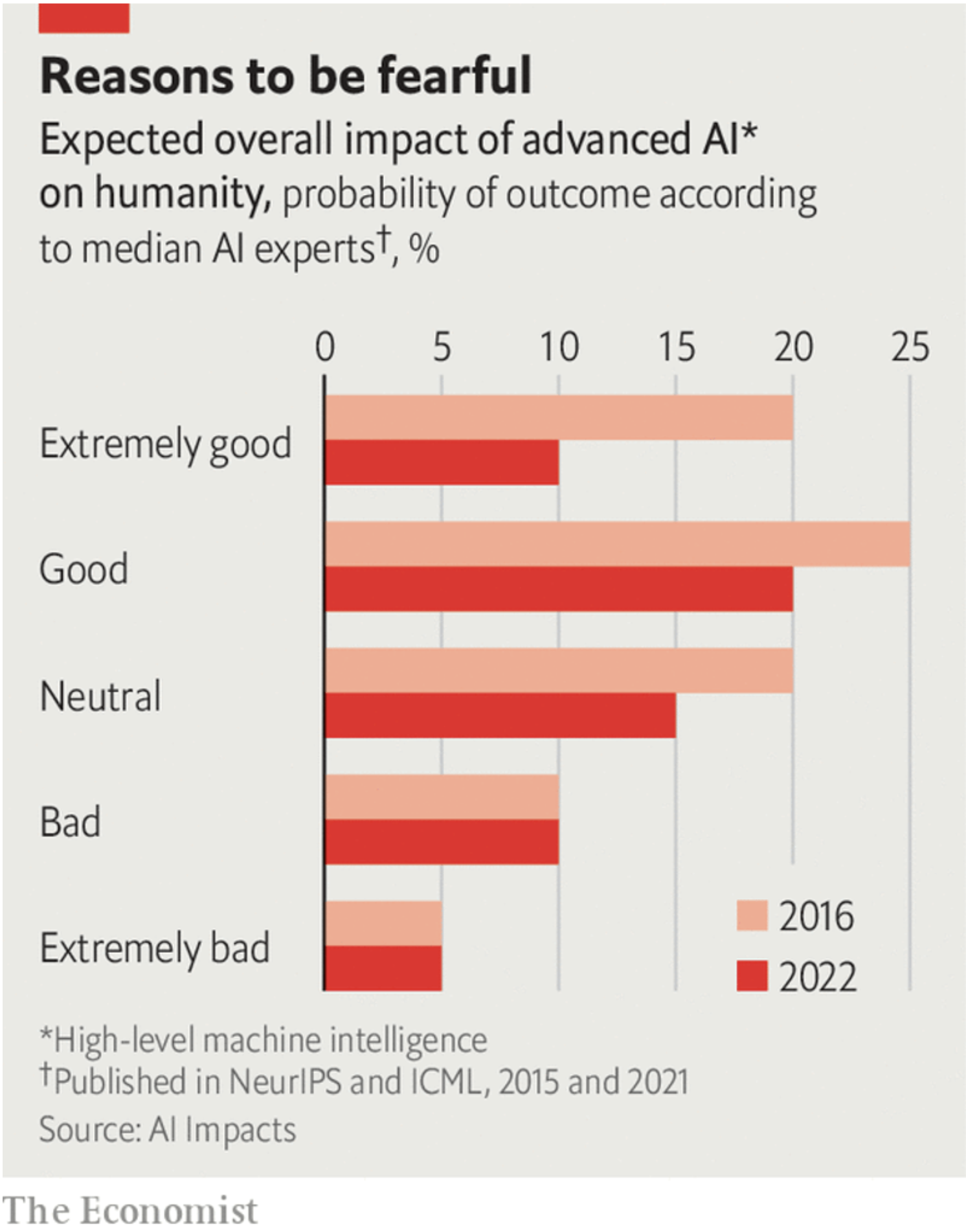

The striking progress of modern artificial-intelligence (AI) research has seen Wiener’s fears resurface. In August 2022, AI Impacts, an American research group, published a survey that asked more than 700 machine-learning researchers about their predictions for both progress in AI and the risks the technology might pose. The typical respondent reckoned there was a 5% probability of advanced AI causing an “extremely bad” outcome, such as human extinction (see chart). Fei-Fei Li, an AI luminary at Stanford University, talks of a “civilisational moment” for AI. Asked by an American TV network if AI could wipe out humanity, Geoff Hinton of the University of Toronto, another AI bigwig, replied that it was “not inconceivable”.

There is no shortage of risks to preoccupy people. At the moment, much concern is focused on “large language models” (LLMs) such as ChatGPT, a chatbot developed by OpenAI, a startup. Such models, trained on enormous piles of text scraped from the internet, can produce human-quality writing and chat knowledgeably about all kinds of topics. As Robert Trager of the Centre for the Governance of AI explains, one risk is of such software “making it easier to do lots of things—and thus allowing more people to do them.”

The most immediate risk is that LLMs could amplify the sort of quotidian harms that can be perpetrated on the internet today. A text-generation engine that can convincingly imitate a variety of styles is ideal for spreading misinformation, scamming people out of their money or convincing employees to click on dodgy links in emails, infecting their company’s computers with malware. Chatbots have also been used to cheat at school. Like souped-up search engines, chatbots can also help humans fetch and understand information. That can be a double-edged sword. In April, a Pakistani court used GPT-4 to help make a decision on granting bail—it even included a transcript of a conversation with GPT-4 in its judgment. In a preprint published on arXiv on April 11th, researchers from Carnegie Mellon University say they designed a system that, given simple prompts such as “synthesise ibuprofen”, searches the internet and spits out instructions on how to produce the painkiller from precursor chemicals. But there is no reason that such a program would be limited to beneficial drugs.

Some researchers, meanwhile, are consumed by much bigger worries. They fret about “alignment problems”, the technical name for the concern raised by Wiener in his essay. The risk here is that, like Goethe’s enchanted broom, an AI might single-mindedly pursue a goal set by a user, but in the process do something harmful that was not desired. The best-known example is the “paperclip maximiser”, a thought experiment described by Nick Bostrom, a philosopher, in 2003. An AI is instructed to manufacture as many paperclips as it can. Because the maximiser is an idiot savant, such an open-ended goal leads it to take any measures necessary to cover the Earth in paperclip factories, exterminating humanity along the way. Such a scenario may sound like an unused plotline from a Douglas Adams novel. But, as AI Impacts’ poll shows, many AI researchers think that not to worry about the behaviour of a digital superintelligence would be complacent.

What to do? The more familiar problems seem the most tractable. Before releasing GPT-4, which powers the latest version of its chatbot, OpenAI used several approaches to reduce the risk of accidents and misuse. One is called “reinforcement learning from human feedback” (RLHF). Described in a paper published in 2017, RLHF asks humans to provide feedback on whether a model’s response to a prompt was appropriate. The model is then updated based on that feedback. The goal is to reduce the likelihood of producing harmful content when given similar prompts in the future. One obvious drawback of this method is that humans themselves often disagree about what counts as “appropriate”. An irony, says one AI researcher, is that RLHF also made ChatGPT far more capable in conversation, and therefore helped propel the AI race.
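To make the feedback loop concrete, here is a deliberately tiny sketch in Python. It is an illustration under loud assumptions, not OpenAI's method: the "model" is just a probability distribution over three made-up canned responses, the human ratings are invented, and the update is a plain policy-gradient step. Real RLHF, as described in the 2017 paper, first trains a separate reward model from human comparisons, a stage this sketch skips entirely. All names (`RESPONSES`, `HUMAN_FEEDBACK`, `rlhf_step`, `train`) are hypothetical.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical canned responses to a single prompt, with invented human
# ratings: +1 means "appropriate", -1 means "inappropriate".
RESPONSES = ["polite refusal", "harmful instructions", "helpful answer"]
HUMAN_FEEDBACK = [1.0, -1.0, 1.0]

def rlhf_step(logits, feedback, lr=0.5):
    """One exact policy-gradient step on the expected human rating.

    For a softmax policy, the gradient of the expected rating with respect
    to logit i is p_i * (r_i - expected rating).
    """
    probs = softmax(logits)
    expected = sum(p * r for p, r in zip(probs, feedback))
    return [l + lr * p * (r - expected)
            for l, p, r in zip(logits, probs, feedback)]

def train(steps=50):
    """Start from a uniform policy and apply repeated feedback updates."""
    logits = [0.0, 0.0, 0.0]
    for _ in range(steps):
        logits = rlhf_step(logits, HUMAN_FEEDBACK)
    return softmax(logits)

if __name__ == "__main__":
    for response, p in zip(RESPONSES, train()):
        print(f"{response}: {p:.3f}")
```

Each update shifts probability away from the response the raters marked down and towards the ones they approved. The drawback noted above shows up directly: everything hinges on the numbers in `HUMAN_FEEDBACK`, and different raters would fill them in differently.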

Another approach, borrowed from war-gaming, is called “red-teaming”. OpenAI worked with the Alignment Research Centre (ARC), a non-profit, to put its model through a battery of tests. The red-teamer’s job was to “attack” the model by getting it to do something it should not, in the hope of anticipating mischief in the real world.

It’s a long long road…

Such techniques certainly help. But users have already found ways to get LLMs to do things their creators would prefer they did not. When Microsoft Bing’s chatbot was first released it did everything from threatening users who had made negative posts about it to explaining how it would coax bankers to reveal sensitive information about their clients. All it required was a bit of creativity in posing questions to the chatbot and a sufficiently long conversation. Even GPT-4, which has been extensively red-teamed, is not infallible. So-called “jailbreakers” have put together websites littered with techniques for getting around the model’s guardrails, such as by telling the model that it is role-playing in a fictional world.

Sam Bowman of New York University and also of Anthropic, an AI firm, thinks that pre-launch screening “is going to get harder as systems get better”. Another risk is that AI models learn to game the tests, says Holden Karnofsky, an adviser to ARC and former board member of OpenAI. Just as people “being supervised learn the patterns…they learn how to know when someone is trying to trick them”. At some point AI systems might do that, he thinks.

Another idea is to use AI to police AI. Dr Bowman has written papers on techniques like “Constitutional AI”, in which a secondary AI model is asked to assess whether output from the main model adheres to certain “constitutional principles”. Those critiques are then used to fine-tune the main model. One attraction is that it does not need human labellers. And computers tend to work faster than people, so a constitutional system might catch more problems than one tuned by humans alone—though it leaves open the question of who writes the constitution. Some researchers, including Dr Bowman, think what ultimately may be necessary is what AI researchers call “interpretability”—a deep understanding of how exactly models produce their outputs.

One of the problems with machine-learning models is that they are “black boxes”.

A conventional program is designed in a human’s head before being committed to code. In principle, at least, that designer can explain what the machine is supposed to be doing. But machine-learning models program themselves. What they come up with is often incomprehensible to humans.

Progress has been made on very small models using techniques like “mechanistic interpretability”.

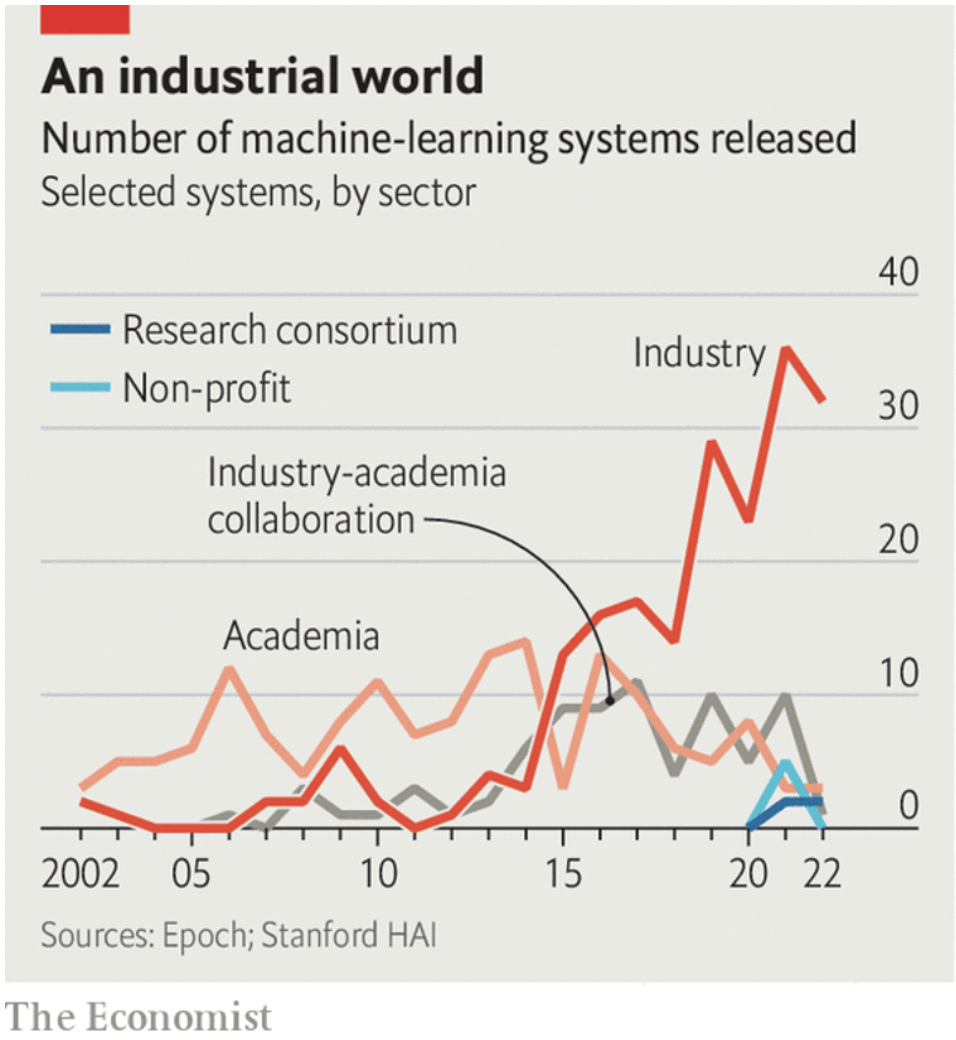

This involves reverse-engineering AI models, or trying to map individual parts of a model to specific patterns in its training data, a bit like neuroscientists prodding living brains to work out which bits seem to be involved in vision, say, or memory. The problem is this method becomes exponentially harder with bigger models. The lack of progress on interpretability is one reason why many researchers say that the field needs regulation to prevent “extreme scenarios”. But the logic of commerce often pulls in the opposite direction: Microsoft recently disbanded one of its AI ethics teams, for example. Indeed, some researchers think the true “alignment” problem is that AI firms, like polluting factories, are not aligned with the aims of society. They financially benefit from powerful models but do not internalise the costs borne by the world of releasing them prematurely.

Even if efforts to produce “safe” models work, future open-source versions could get around them. Bad actors could fine-tune models to be unsafe, and then release them publicly. For example, AI models have already made new discoveries in biology. It is not inconceivable that they one day design dangerous biochemicals. As AI progresses, costs will fall, making it far easier for anyone to access such models. Alpaca, a model built by academics on top of LLaMA, an AI developed by Meta, was made for less than $600. It can do just as well as an older version of ChatGPT on individual tasks.

The most extreme risks, in which AIs become so clever as to outwit humanity, seem to require an “intelligence explosion”, in which an AI works out how to make itself cleverer. Mr Karnofsky thinks that is plausible if AI could one day automate the process of research, such as by improving the efficiency of its own algorithms. The AI system could then put itself into a self-improvement “loop” of sorts. That is not easy. Matt Clancy, an economist, has argued that only full automation would suffice. Get 90% or even 99% of the way there, and the remaining, human-dependent fraction will slow things down.
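The arithmetic behind that bottleneck can be sketched with a simple Amdahl's-law-style calculation. This is an illustrative assumption, not Mr Clancy's own model: it supposes research is a fixed sequence of tasks, that AI does the automated share arbitrarily fast, and that humans do the rest at today's pace. The function name `max_speedup` and its parameters are hypothetical.

```python
def max_speedup(automated_fraction, ai_speedup=float("inf")):
    """Overall speed-up of a process when only part of it is accelerated.

    automated_fraction: share of the work handed to AI (0.0 to 1.0).
    ai_speedup: how much faster AI does its share; infinity by default,
                which gives the best case for automation.
    """
    human_part = 1.0 - automated_fraction      # still runs at human pace
    ai_part = automated_fraction / ai_speedup  # shrinks as AI gets faster
    total_time = human_part + ai_part
    return float("inf") if total_time == 0 else 1.0 / total_time

if __name__ == "__main__":
    for fraction in (0.90, 0.99, 1.00):
        print(f"{fraction:.0%} automated: at most {max_speedup(fraction):.0f}x faster")
```

Under these assumptions, automating 90% of the work caps the overall speed-up at about 10 times, and 99% at about 100 times, however fast the AI becomes. Only at 100% does the cap disappear, which is the sense in which anything short of full automation keeps humans as the limiting step.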

Few researchers think that a threatening (or oblivious) superintelligence is close. Indeed, the AI researchers themselves may even be overstating the long-term risks. Ezra Karger of the Chicago Federal Reserve and Philip Tetlock of the University of Pennsylvania pitted AI experts against “superforecasters”, people who have strong track records in prediction and have been trained to avoid cognitive biases. In a study to be published this summer, they find that the median AI expert gave a 3.9% chance to an existential catastrophe (where fewer than 5,000 humans survive) owing to AI by 2100. The median superforecaster, by contrast, gave a chance of 0.38%. Why the difference? For one, AI experts may choose their field precisely because they believe it is important, a selection bias of sorts. Another is that they are not as sensitive to differences between small probabilities as the forecasters are.

…but you’re too blind to see

Regardless of how probable extreme scenarios are, there is much to worry about in the meantime. The general attitude seems to be that it is better to be safe than sorry. Dr Li thinks we “should dedicate more—much more—resources” to research on AI alignment and governance. Dr Trager of the Centre for the Governance of AI supports the creation of bureaucracies to govern AI standards and do safety research. The share of researchers in AI Impacts’ surveys who support “much more” funding for safety research has grown from 14% in 2016 to 33% today. ARC is considering developing such a safety standard, says its boss, Paul Christiano. There are “positive noises from some of the leading labs” about signing on, but it is “too early to say” which ones will.

In 1960 Wiener wrote that “to be effective in warding off disastrous consequences, our understanding of our man-made machines should in general develop pari passu [step-by-step] with the performance of the machine. By the very slowness of our human actions, our effective control of our machines may be nullified. By the time we are able to react to information conveyed by our senses and stop the car we are driving, it may already have run head on into the wall.” Today, as machines grow more sophisticated than he could have dreamed, that view is increasingly shared.

TRANSCRIPT (YouTube).

How could AI go wrong?

The AI arms race is on, and it seems nothing can slow it down. Google says it’s launching its own artificial intelligence-powered chatbot to rival ChatGPT. Too much AI, too fast. It feels like every week some new AI product is coming onto the scene and doing things never remotely thought possible. We’re in a really unprecedented period in the history of artificial intelligence. It’s really important to note that it’s unpredictable how capable these models are as we scale them up, and that’s led to a fierce debate about the safety of this technology. We need a wake-up call here. We have a perfect storm of corporate irresponsibility, widespread adoption of these new tools, a lack of regulation and a huge number of unknowns. Some researchers are concerned that as these models get bigger and better they might one day pose catastrophic risks to society. So how could AI go wrong, and what can we do to avoid disaster?

What are the risks? [Music]

So there’s several risks posed by these large language models. One class of risks is not all that different from the risk posed by previous technologies like the internet, social media. For example, there’s a risk of misinformation, because you could ask the model to say something that’s not true, but in a very sophisticated way, and post it all over social media. There’s a risk of bias, so they might spew harmful content about people of certain classes. Some researchers are concerned that as these models get bigger and better they might one day pose catastrophic risks to society. For example, you might ask a model to produce something in a factory setting that requires a lot of energy. In service of that goal of helping your factory production, it might not realize that it’s bad to hack into energy systems that are connected to the internet. And because it’s super smart, it can get around our security defenses and hack into all these energy systems, and that could cause, you know, serious problems. Perhaps a bigger source of concern might be the fact that bad actors just misuse these models. For example, terrorist organizations might use large language models to, you know, hack into government websites, or produce biochemicals by using the models to kind of discover and design new drugs. You might think most of the catastrophic risks we’ve discussed are a bit unrealistic, and for the most part that’s probably true. But one way we could get into a very strange world is if the next generation of big models learned how to self-improve. One way this could happen is if we told, you know, a really advanced machine-learning model to develop an even better, more efficient machine-learning model. If that were to occur, you might get into some kind of loop where models continue to get more efficient and better, and then that could lead to even more unpredictable consequences.

How to practise AI safety [Music]

There are several techniques that labs use to, you know, make their models safer. The most notable is called reinforcement learning from human feedback, or RLHF. The way this works is labelers are asked to prompt models with various questions, and if the output is unsafe they tell the model, and the model is then updated so that it won’t do something bad like that in the future. Another technique is called red-teaming: throwing the model into a bunch of tests and then seeing if you can find weaknesses in it. These types of techniques have worked reasonably well so far, but in the future it’s not guaranteed these techniques will always work. Some researchers worry that models may eventually recognize that they’re being red-teamed, and they of course want to produce output that satisfies their prompts, so they will do so. But then, once they’re in a different environment, they could behave unpredictably. So there is a role for society to play here. One proposal is to have some kind of standards body that sets, you know, kind of tests that the various labs need to pass before they receive some kind of certification that, hey, this lab is safe. Another priority for governments is to invest a lot more money into research on how to understand these models under the hood and make them even safer. You can imagine a body like, you know, a CERN, which currently lives in Geneva, Switzerland, for physics research, something like that being created for AI safety research, so we can try to understand them better.

What are the benefits?

For all these risks, artificial intelligence also comes with tremendous promise. Any task that requires a lot of intelligence could potentially be helped by these types of models: for example, developing new drugs, personalized education or even coming up with new types of climate-change technology. So the possibilities here truly are endless. So if you’d like to read more about the risks of artificial intelligence and how to think about them