FOR EDUCATIONAL PURPOSES

“We don’t know where the large language models are between a piece of paper and AGI. They are somewhere along there. We just don’t know where they are.”

“Now, human linguistic behaviour is generated by humans who have goals. So from a simple Occam’s razor point of view, if you’re going to imitate human linguistic behaviour, then the default hypothesis is that you’re going to become a goal-seeking entity in order to generate linguistic behaviour in the same way that humans do. So there is an open question: ‘Are these LLMs generating internal goals of their own?’ And I asked Microsoft that question a couple of weeks ago when they were here to speak. And the answer is ‘We have no idea, and we have no way to find out. We don’t know if they have goals. We don’t know what they are.’ So, let’s think about that a bit more…”

Imitation learning is not alignment…

And then the question is not just do they have goals, but can they pursue them? How do those goals causally affect the linguistic behavior that they produce? Well, again, since we don’t even know if they have goals, we certainly don’t know if they can pursue them. But the empirical, anecdotal evidence suggests that, yeah: if you look at the Kevin Roose conversation [The New York Times], Sydney, the Bing version of GPT-4, is pursuing a goal for 20 pages despite Kevin’s efforts to redirect and talk about anything but getting married to Sydney. So if you haven’t seen that conversation, go and read it and ask yourself, “Is Sydney pursuing a goal here, as opposed to just generating the next word?”

Black boxes, as I’ve just illustrated, are really tough to understand. This is a trillion-parameter or more system. It’s been optimised by about a billion trillion random perturbations of the parameters. And we have no idea what it’s doing. We have no idea how it works. And that causes a problem. Even if you wrap it in this outer framework, the assistance game framework, it’s going to be very hard for us to prove a theorem saying that, yep, this is definitely going to be beneficial when we start running it.

So part of my work now is actually to develop AI systems more along the lines that I described before, the knowledge-based approach, where each component of the system, each piece of knowledge, has its own meaningful semantics that we can individually check against our own understanding or against reality; where those pieces are put together in ways where we understand the logic of the composition; and then we can start to have a rigorous theory of how these systems are going to behave. I just don’t know of any other way to achieve enough confidence in the behavior of the system that we would be comfortable with moving ahead with the technology.

Talk Title: “How Not To Destroy the World With AI”

Speaker: Stuart Russell, Professor of Computer Science, UC Berkeley

Date.

Time. 12:00 pm – 1:00 pm PDT

Abstract: It is reasonable to expect that artificial intelligence (AI) capabilities will eventually exceed those of humans across a range of real-world decision-making scenarios. Should this be a cause for concern, as Alan Turing and others have suggested? Will we lose control over our future? Or will AI complement and augment human intelligence in beneficial ways? It turns out that both views are correct, but they are talking about completely different forms of AI. To achieve the positive outcome, a fundamental reorientation of the field is required. Instead of building systems that optimize arbitrary objectives, we need to learn how to build systems that will, in fact, be beneficial for us. Russell will argue that this is possible as well as necessary. The new approach to AI opens up many avenues for research and brings into sharp focus several questions at the foundations of moral philosophy.

Speaker Bio: Stuart Russell, OBE, is a professor of computer science at the University of California, Berkeley, and an honorary fellow of Wadham College at the University of Oxford. He is a leading researcher in artificial intelligence and the author, with Peter Norvig, of “Artificial Intelligence: A Modern Approach,” the standard text in the field. He has been active in arms control for nuclear and autonomous weapons. His latest book, “Human Compatible,” addresses the long-term impact of AI on humanity.

About the Talk: Co-hosted with the UC Berkeley Artificial Intelligence Research Group (BAIR)

About the Series: CITRIS Research Exchange delivers fresh perspectives on information technology and society from distinguished academic, industry and civic leaders. Free and open to the public, these seminars feature leading voices on societal-scale research issues. Presentations take place on Wednesdays from noon to 1 p.m. PT. Have an idea for a great talk? Please feel free to suggest potential speakers for our series.

Learn More: AISIC 2022 – Stuart Russell – Provably Beneficial AI

TRANSCRIPT

um need to introduce him um Professor Russell is a professor of computer science at UC Berkeley

um founder of the Center for Human-Compatible AI and co-author of AI: A

Modern Approach the I believe most widely selling textbook on AI

um so he knows a little bit of something about kind of how AI works

um and he’s uh going to be talking about how to make um AI provably beneficial I will just

note that um if if you’re you’re if you’re not yet

convinced that this might be difficult um Professor Russell is also an adjunct professor of brain

surgery at UCSF so if he says something is hard you know he probably knows what

he’s talking about

thanks for the introduction and the opportunity to speak um so I apologize for the way the

display is uh you can ignore the notes over there but I will I’ll read this out this was

actually borrowed from another talk it says reminiscing and pontificating two

of the favorite activities of an academic so um

all right so I’ll go through the main points first then you can go to sleep obviously we want our AI systems to be

safe and beneficial I think everyone kind of agrees with this and we want that to remain true no matter how

capable they become and this is the important part um I would argue that making sure that

they’re safe is easier if we design the system

most ways of building AI systems these days don’t involve designing them

which I think is dangerous

but even then there are ways of designing AI systems and I’ll talk about what I call standard model AI and and

standard versions of AI safety uh refer to the idea that it’s it’s safe

if it meets the specification and I’ll argue that that’s a misleading

way of thinking about safety and as uh Stuart number one

explained or Stuart Alpha I think he calls himself uh the potential for

misalignment between specifications and actual preferences means that we need to

do a lot of things differently in AI and it’s not just

okay let’s try to understand safety uh it’s not enough that it be safe we also have to know that it’s safe

and I believe that that requires us to

construct our AI systems on well-founded technological components assembled in

ways that we can verify and then lastly and this is really Pie in the Sky

um we need to make sure that unsafe AI systems are not able to run

and that means we have to have a different type of digital ecosystem from what we have now where all kinds of

Nefarious software runs almost unchecked so what I’m talking about really is

making AI more like Aviation and nuclear power where there are layers of well

understood analyzed verified Technologies and a lot of Regulation

um the the analogy between AI and experimental avant-garde theater came from a conversation I had with uh

someone from aviation you know a very old-school rigorous control theorist uh with an experimental

uh projection and I asked him what he

thought about AI and all this you know could we use deep learning to fly airplanes and

and he said well I yeah it’s like experimental avant-garde theater I mean I I really enjoy going I never know what

to expect but I don’t want those guys flying my airplane or running my country right and that’s

um that’s going to be a real culture change for the field but I think in the

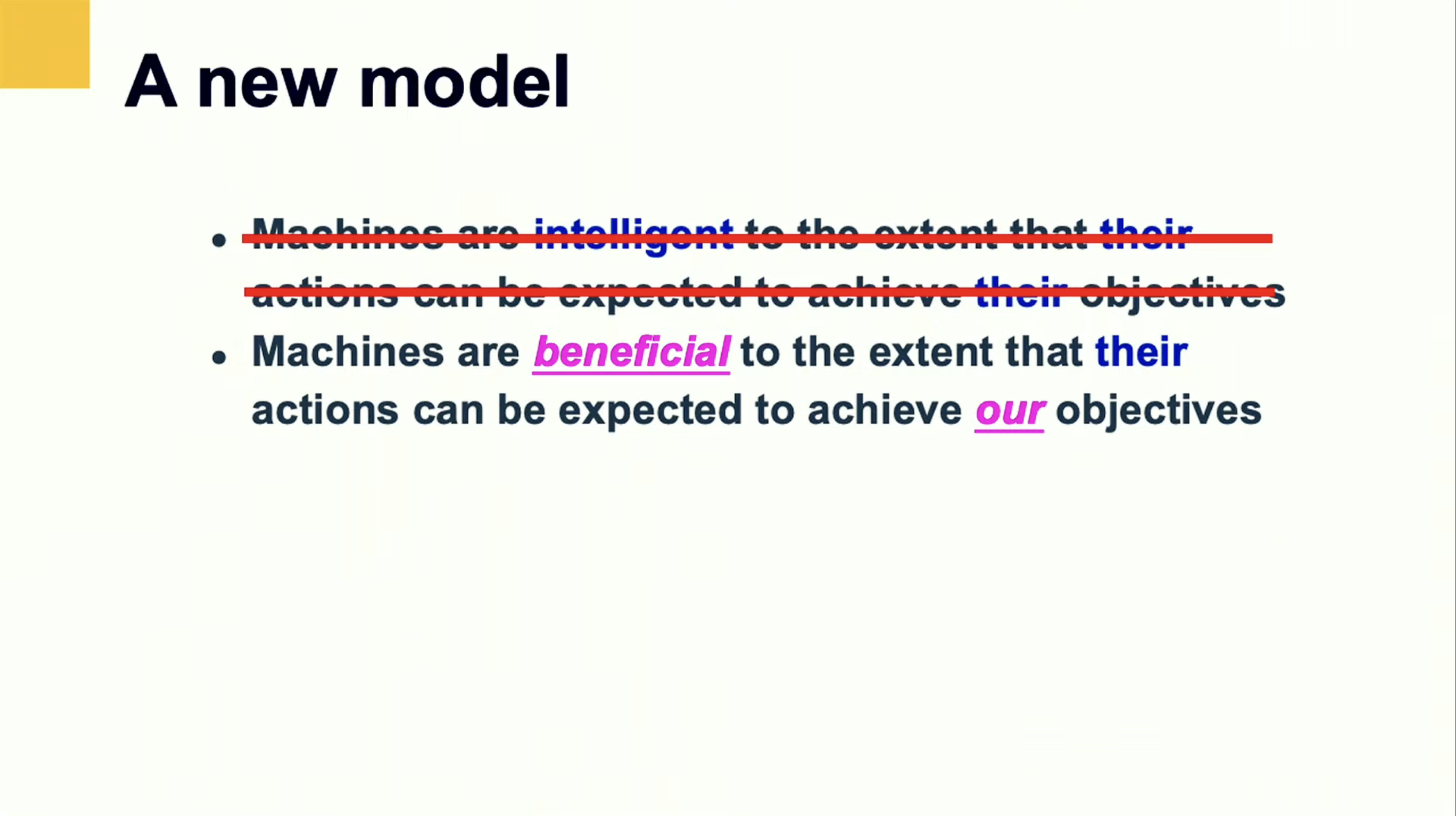

end it has to happen Okay so AI is about making intelligent machines

and from 50,000 feet uh what does that mean it means machines

are intelligent to the extent their actions can be expected to achieve their objectives

and I’ll call this the standard model because it pretty much pervades all uh

all the ways of building AI systems that we have used in the past 70 years or so there was another model which is

machines are intelligent to the extent that they resemble humans uh but that model

did not uh end up controlling the field and it it it morphed into cognitive science uh

instead so the uh you might call this the rationalist view initially it was goal

achieving systems so logical goals symbolic planning then it became rewards

and reinforcement learning and and so on and regardless of how you go about uh

doing this right the goal of AI is general purpose AI so systems that uh

can do anything that the human intellect is relevant to and probably do it much

better because machines have these intrinsic advantages in speed uh in

memory in communication bandwidth that we simply can’t approach

um and so if they can do what humans can do they’re probably going to end up doing it a lot better in almost all

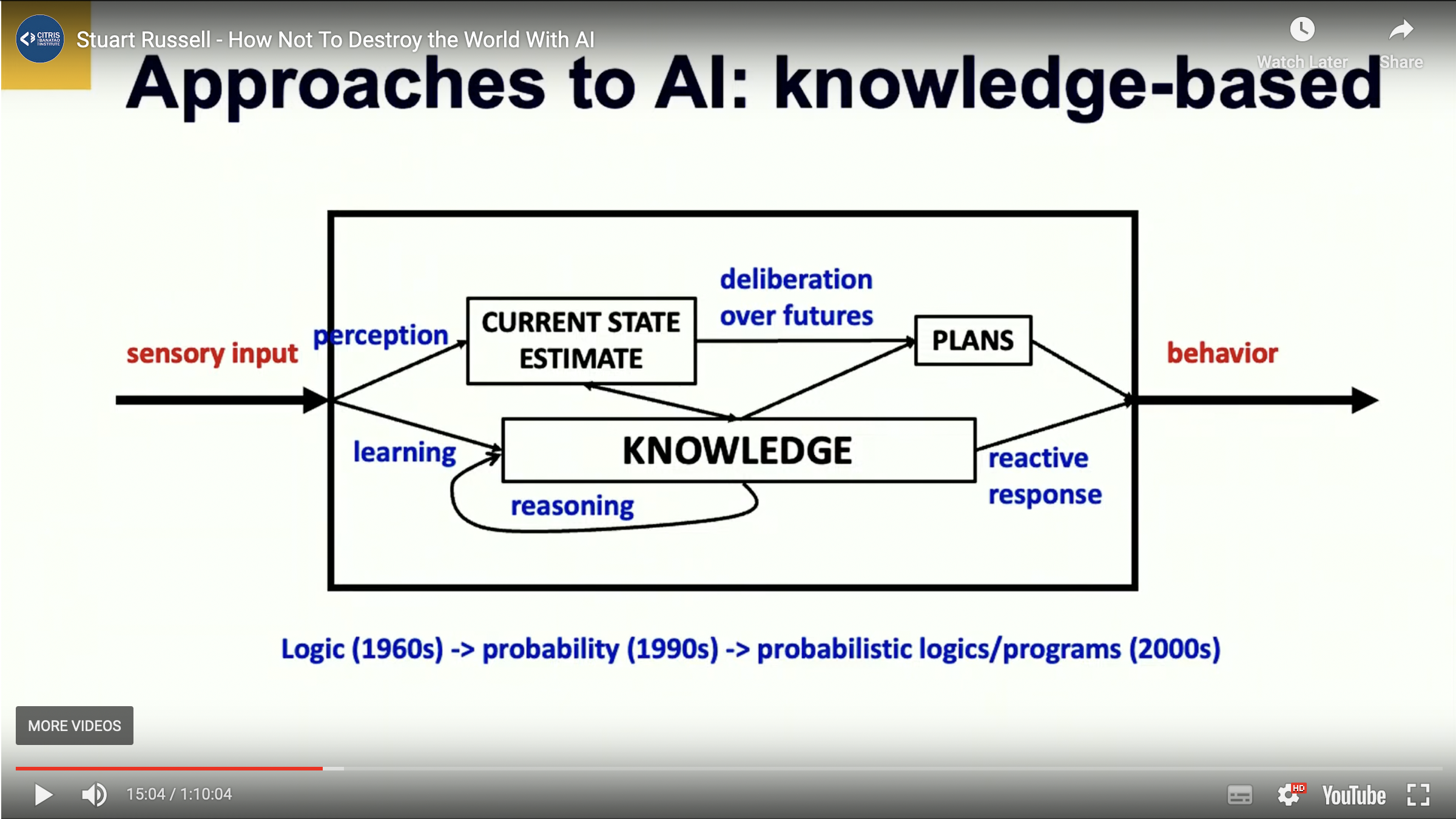

areas so I’ll talk briefly about some approaches to AI because I think it’s

it’s probably helpful that people understand that there are different approaches to AI uh and what is it what

is an approach to AI it’s how do you fill in this box right sensory input comes in Behavior comes out uh and this

continues over time in a loop with the environment and how do you connect up the two what what goes in that black box

and deep learning says Okay a massive quantity of circuit tunable circuit goes

in the Box right just throw as as many billions of tunable circuit elements as you can into

the box and then you optimize the objective uh by some kind of stochastic gradient

descent and that’s been successful in some in some areas uh and not not in others
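
A minimal sketch of the recipe just described, tunable parameters adjusted by stochastic gradient descent to optimize an objective. Everything here is an illustrative assumption: the "circuit" is a two-parameter line, the objective is squared error, and the target `y = 3x + 1`, the function name `sgd_fit`, and the step size are hypothetical choices, not anything from the talk.

```python
import random

def sgd_fit(data, steps=2000, lr=0.05, seed=0):
    """Fit y ~ w*x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0                  # the tunable parameters in the box
    for _ in range(steps):
        x, y = rng.choice(data)      # one randomly chosen example per step
        err = (w * x + b) - y        # prediction error on that example
        w -= lr * 2 * err * x        # derivative of err**2 with respect to w
        b -= lr * 2 * err            # derivative of err**2 with respect to b
    return w, b

# Noise-free samples of the hypothetical target y = 3x + 1 on [-1, 1].
data = [(k / 10, 3 * (k / 10) + 1) for k in range(-10, 11)]
w, b = sgd_fit(data)
print(round(w, 3), round(b, 3))
```

A real deep learning system differs mainly in scale: billions of parameters instead of two, with the derivatives computed automatically.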

um a much earlier approach and it’s usually associated with the

name of Friedberg back in the 1950s said okay you throw these are Fortran

programs for those of you who haven’t learned Fortran uh this is what Fortran programs look like so you throw Fortran

programs into the box and you optimize the objective by these evolutionary

techniques which amount to stochastic empirical gradient in other words you

can’t compute a derivative of the Fortran program at least not not uh not back then

so you just make changes and you see if the changes get a better value for the objective so a stochastic empirical

gradient and crossover so an extra technique that isn’t available to the

Deep learning people to try to find Optima in this space and the point of this example is that

in a sense Fortran programs are more powerful than circuits

right they have you know built into them they already have the notion of addressable memories and

um loops and various other kinds of things that that make it very easy to

implement Turing machines in Fortran full Turing capabilities in in Fortran programs
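
The search procedure described above (make random changes, keep what scores better, and recombine candidates by crossover) can be sketched as follows. This is only an illustration under stated assumptions: candidates are bit strings rather than programs, the objective simply counts ones, and the name `evolve` and all parameter values are hypothetical.

```python
import random

def evolve(objective, length=20, pop_size=30, generations=60, seed=0):
    """Maximize `objective` over bit strings using mutation and crossover."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=objective, reverse=True)
        parents = pop[: pop_size // 2]        # keep the better-scoring half
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, length)    # single-point crossover
            child = a[:cut] + b[cut:]
            i = rng.randrange(length)         # one random point mutation
            child[i] = 1 - child[i]
            children.append(child)
        pop = parents + children
    return max(pop, key=objective)

best = evolve(sum)   # objective: number of ones in the string
print(sum(best), "ones out of", len(best))
```

No derivative of the objective is ever computed; improvement comes only from trying changes and comparing scores, which is the "stochastic empirical gradient" idea.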

um but this this approach got absolutely nowhere and was abandoned almost

immediately and you know there was a you know small I would say somewhat Fringe community of

genetic programming people who continue to this day uh working on this

um but it seems like it’s uh you know it’s not considered a candidate

for creating AGI and then there’s the more traditional kind of AI approach

which where knowledge is the central concept that we we build systems that know

things I I think that’s an important hypothesis

uh and I think it’s probably true of humans I think human beings know things

um and uh so you can acquire the knowledge from learning or communication

once you have knowledge you can reason with it based on your current estimate of the

state of the world and your knowledge of how the world works you can deliberate over Futures make plans produce Behavior

right and a lot of successful AI systems were built in this General Paradigm uh

you know Deep Blue is a good example of that but many many other systems in the

early days it was logic was the representation of knowledge logical

deliberation logical planning in the 90s and 2000s Technology based on

probability Theory to accommodate uh uncertainty both about the state of

the world uncertainty about the uh the Dynamics of the world and the outcomes

of actions uh and so on um and I’ll talk a little bit about probabilistic programs later on

um and uh I think it’s instructive

these days it’s very hard to convince especially uh you know undergraduate

students that they need to learn anything other than how to download tensorflow and shovel data through it

um so if someone is skeptical that a knowledge-based approach to AI is at all

valuable uh you could give this example right so so 1.2 billion light years away there

were some and 1.2 billion years ago there were some black holes uh that

um spiraled around each other and eventually collided and that produced

gravitational waves quite a big event

the energy output of this event was 50 times the energy output of all the stars

in the universe put together and and that produced a gravitational

wave that was detected by the the LIGO detector that’s a picture of the LIGO detector

and and they predicted exactly the signal that they would detect

and so this was the first ever detection of gravitational waves and was an

incredible confirmation of general relativity um so just ask a deep learning person

okay how would your deep learning system uh invent the LIGO

right and usually they’ll just change the subject right or and say but have you seen our

really cool pictures that we get out of Stable Diffusion

um whereas the knowledge-based story I mean this is a knowledge-based story right this uh we kind of know that

because the perceptual inputs that led to all these theories were perceptual inputs to physicists who

died many of them hundreds of years ago right and the knowledge was communicated

explicitly it was acquired and communicated in the form of knowledge which was then chained together into

ever more complex theories of physics and and device designs uh that they

could understand okay I think we’ve also been

overestimating how smart our machine learning systems are and this has

happened over and over and over again um but I just wanted to show you one example this is from openai

a deep reinforcement learning system that uh in the space of a couple of

hours you know these these humanoid robots start out completely unable to move right they’re

lying on the on the ground just like newborn babies all they can do is waggle their limbs randomly and they learn to

sit up and stand up and locomote and eventually to start playing soccer

and I would say you know the blue player is clearly better than the red player I

mean the red guy is not much of a goalkeeper I would say but still if your newborn baby was playing soccer in the

back garden two hours after it was born um you would call for an exorcism

so okay this looks very impressive we you know what is the blue guy doing oh he

sees where the ball is he goes to the ball he looks at the goalkeeper he finds a way to kick it past the goalkeeper

into the goal right that’s what you’re seeing and you think okay it’s learned to you

know the blue guy has learned to score goals in soccer right let me change the behavior of the goalkeeper

just a bit right I’m not going to change the blue player at all exactly the same blue

program okay now the red goalkeeper just Falls over and Waggles his leg in the air

right and here’s the blue player right

so you see that what you were thinking what you thought you saw in the first video you were not seeing

right your brain was lying to you about what you were seeing you thought you

were seeing someone who was seeing the ball going to the ball finding a way to

kick past the goalkeeper right if that’s what you were seeing it would have done even better in the second video but it

didn’t right so what you were seeing was something completely alien and not

understandable maybe something more like a Tango right that it was like if I had

trained them to dance with each other and then one of the two dance Partners fell over the first dance partner might

not know what to do right and so it’s more like that than actually training two people to play

soccer against each other okay so we constantly do this we see examples

of successful behavior and we extrapolate uh as Stuart pointed out right we extrapolate in ways that are

totally unjustified uh and we over interpret the success that we’re seeing

um so you might then ask well why am I even asking this question right if AI systems are so terrible uh why are we

worried about it so I think there is real progress happening and and there’s actually other Technologies beside deep

learning that that are making a lot of progress so I want to ask what happens if we succeed in that goal of creating

general purpose AI and I don’t want to be accused of of

just talking about Doom uh so I want to explain why it is we’re even doing this

right because if there weren’t an upside we wouldn’t be doing AI at all

uh and I think a simple way of thinking about the upside would be that if we had

general purpose AI we could just use it at scale

at very very low cost to do what we already know how to do right we know that humans

can deliver to each other a high standard of living right and by definition if it’s general

purpose AI it can do that but it can do it at scale and at very little cost and so we could deliver a high standard

of living to everyone on Earth and that would be about a tenfold

increase in the GDP of the world and if you translate that you know using the usual discount factors into a Net

Present Value the cash equivalent of the income stream is about 13.5 quadrillion

dollars so that’s a lower bound on the cash

value of creating general purpose AI right and if you think about it this way

it gives you a clue about why AI has become this subject of geopolitical

competition and Supremacy right because it would completely

revolutionize our entire global system it would be a far more impactful

invention than anything else we’ve ever invented uh and we might be able to do more

things too right so so we could speculate that it could also give us much much better health care uh

extending uh the health span uh improving education for children uh

accelerating scientific advances and all kinds of other cool things but we don’t have to make those assumptions to see

that by by necessity it would be an incredibly valuable thing
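
For readers who want to see how a figure like 13.5 quadrillion dollars can arise, here is one way the arithmetic could go. The talk does not state its inputs, so the world GDP of about 75 trillion dollars a year and the 5% discount rate below are assumptions chosen for illustration, and treating the gain as a constant perpetual income stream is a simplification.

```python
def perpetuity_npv(annual_gain, discount_rate):
    """Present value of a constant annual gain received forever."""
    return annual_gain / discount_rate

world_gdp = 75e12                    # assumed world GDP, dollars per year
gain = 10 * world_gdp - world_gdp    # extra income per year after a tenfold increase
npv = perpetuity_npv(gain, 0.05)     # assumed 5% annual discount rate
print(f"{npv / 1e15:.1f} quadrillion dollars")
```

Under these assumptions the extra income is 675 trillion dollars a year, and dividing by the discount rate gives the present value.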

but Doom doominess has been around for a while uh Alan Turing in 1951 gave a

lecture and and here’s what he had to say right “It seems probable that once the machine thinking method had started, it

would not take long to outstrip our feeble powers. At some stage therefore we

should have to expect the machines to take control.”

uh so that’s it right no ifs no buts no apology no mitigation no solution no

nothing just this is it guys you know this is going to happen uh and

there’s nothing we can do about it um so

so why did he think that and I think to him at least it seems obvious

right eventually AI systems will whether it’s with deep learning or some other

combination of technologies will make better Real World decisions than human

beings right as they are already making better decisions on the Go board and lots of

other and lots of other more narrow application areas as we generalize and

strengthen our Technologies the range of areas where they exceed human capabilities will just grow and grow

until it encompasses everything and I think Turing is saying look how do

we retain power over entities that are more powerful than us forever

good question okay and he I think Turing and actually he was drawing on

earlier uh work like Samuel Butler’s Erewhon from 1872

and he obviously agrees with the anti-machinists in Butler’s book who

argue that unless we do something now uh uh you know our our future is sealed

and in Butler’s book they simply ban all Machinery of any kind no matter how

simple right knowing that once you go down that road it’s sort of a slippery slope and

and you fall off the end so we don’t go down that road at all

um maybe at some point we’ll need to take that step I think it would be

extraordinarily difficult to do uh to say that okay maybe we can use computers

for you know databases and communication uh and so on but no AI of any kind no

language understanding no speech recognition none of that stuff right it would be very very difficult to

make that happen particularly because of that 13.5 quadrillion dollar prize that

everyone is chasing um and I would argue that the problem is

already happening right we already have Machinery uh it’s not very smart but it

is very well protected um that is already making a mess of the

world so here’s a simple example right the social media content selection or recommender system

algorithms and they choose what we uh what we listen to what we watch what we

read they have more control over human cognitive intake than any dictator has ever had in

history and yet they are completely or almost completely unregulated

and they are algorithms that are designed to maximize some objective and

I’ll say click through as one example engagement is another the amount of time you spend with the media but let’s think

about click through the probability that you click on the recommended item and just by coincidence that’s aligned

with Revenue generation I’m sure that’s a coincidence I think what the what the

platforms were hoping was that the algorithms would learn what people want uh and because if you send them stuff

they’re totally uninterested in or revolted by they’re not going to click on it

um and so the algorithms this this is actually maybe aligned with what people want that’s good

um but we quickly learned that that’s not actually what happens right that

uh click bait so things that people click on even if they don’t actually like it once they see it if you can get

people to click on it that generates the revenue and so click bait itself as a

category got Amplified by the algorithms right they learned to recognize clickbait and to present it more often

because they got more clicks out of it um and creating filter bubbles so if you only ever see what it the algorithm

already knows you want uh then your your Horizon actually shrinks down uh rather

than becoming broader but even that’s not the answer right if you want to optimize clicks right

where here this is not just the immediate probability of the click but actually the long-term sum of clicks

right so if you’re a reinforcement learning algorithm you’ll maximize the long-term sum of clicks how do I do that

right given that I can’t actually get out and grab your finger and make you click on things right which would be one

solution um you just modify people to be more predictable

right reinforcement learning algorithms learn strategies in other words conditional

action sequences that modify the state of the world in ways that produce more

reward the state of the world for this algorithm is your brain and so the algorithm modifies your brain

so that in the long run you generate more clicks and it does that by making you more predictable so it sends you

sequences of content items that it has learned are effective in turning you

into a different person who can generate more revenue for it okay so this is just a logical

consequence of connecting reinforcement learning algorithms to human beings through this type of interface

okay and uh that’s it right
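
The argument above can be made concrete with a toy model. This is a sketch under invented assumptions, not a model of any real platform: the user has a "predictability" state from 0 to 4, "serve" content leaves the user unchanged, "nudge" content gets fewer clicks now but moves the user one step toward a more predictable state, and all the probabilities are made up. A planner with a known user model stands in for what a reinforcement learning algorithm would have to learn.

```python
def run_recommender(horizon=50, myopic=False, max_state=4):
    """Return (expected total clicks, final user state) for a toy recommender."""
    def click_prob(state, action):
        # Hypothetical numbers: nudging content is clicked less often now.
        return 0.2 if action == "nudge" else 0.3 + 0.15 * state

    def next_state(state, action):
        # Nudging shifts the user toward a more predictable state.
        return min(state + 1, max_state) if action == "nudge" else state

    # Backward induction: policy[t][s] is the action with t + 1 steps remaining.
    value = [0.0] * (max_state + 1)
    policy = []
    for _ in range(horizon):
        new_value, actions = [], []
        for s in range(max_state + 1):
            def score(a):
                future = 0.0 if myopic else value[next_state(s, a)]
                return click_prob(s, a) + future
            a = max(("serve", "nudge"), key=score)
            actions.append(a)
            new_value.append(click_prob(s, a) + value[next_state(s, a)])
        value = new_value
        policy.append(actions)

    # Roll the chosen policy forward from an unmodified user (state 0).
    state, total = 0, 0.0
    for steps_left in range(horizon, 0, -1):
        a = policy[steps_left - 1][state]
        total += click_prob(state, a)
        state = next_state(state, a)
    return total, state

myopic_clicks, myopic_state = run_recommender(myopic=True)
longrun_clicks, longrun_state = run_recommender(myopic=False)
print(myopic_clicks, myopic_state, longrun_clicks, longrun_state)
```

In this toy setup the policy that maximizes immediate click probability never changes the user, while the policy that maximizes the long-term sum of clicks first spends several steps changing the user and then collects more clicks afterward.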

um and so if we made the algorithms better

right what would the outcome be it would be worse

right the algorithms right now are really stupid they don’t know that human beings exist they don’t know that we

have minds or political opinions they don’t know anything about the content of the items that they’re sending

right if they were smarter better algorithms the outcome would be much much worse for human beings we might

even become unrecognizable as people um

and in fact uh Dylan Hadfield-Menell my student and another undergrad at

Berkeley proved some theorems showing that under fairly mild conditions about

the connection between objectives and the state of the world

um this is an inevitable mathematical consequence and basically if you leave out pieces of the objective

the algorithms set the the things that you left out to extreme values in order

to squeeze a little bit of juice out for the the things that are in the objective

right and you can see this I mean this sort of happens in all kinds of examples of misaligned objectives that the the

things that are left out get set to extreme values and that causes all kinds of havoc

uh so we have a methodology that’s pervasive in the field not just in AI actually but lots of other fields

in economics and control theory and operations research a methodology that uh that leads to worse outcomes as we

improve the technology right so something’s wrong

with the very Paradigm in which we’re working if that’s if that’s a property

so we need to get rid of this definition um and I feel partly responsible for

sort of promulgating it in the first three editions of the textbook um I can only plead that it wasn’t my

idea uh it was just reflecting how the field understood itself um

and change it to something different right that we want not intelligent machines

that once they have an objective they’re just going to pursue it at all costs

right we want machines that are beneficial specifically to us not to uh

cockroaches or aliens but specifically to humans and they will be beneficial to the

extent that their actions can be expected to achieve our objectives and in the degenerate case where we managed

to exactly copy our objectives into the machine uh then we’ll have alignment and

things will work okay but that’s an extremely degenerate and

very rare situation or uh it’s a case where as in a go program right where the

the universe of the go program is so restricted um that nothing it can do at least by

putting go pieces on the go board uh can cause any problems for us

okay so that’s that’s what we would like to have and obviously it’s more difficult task

right because the machines don’t have the objective or at least not direct access to the

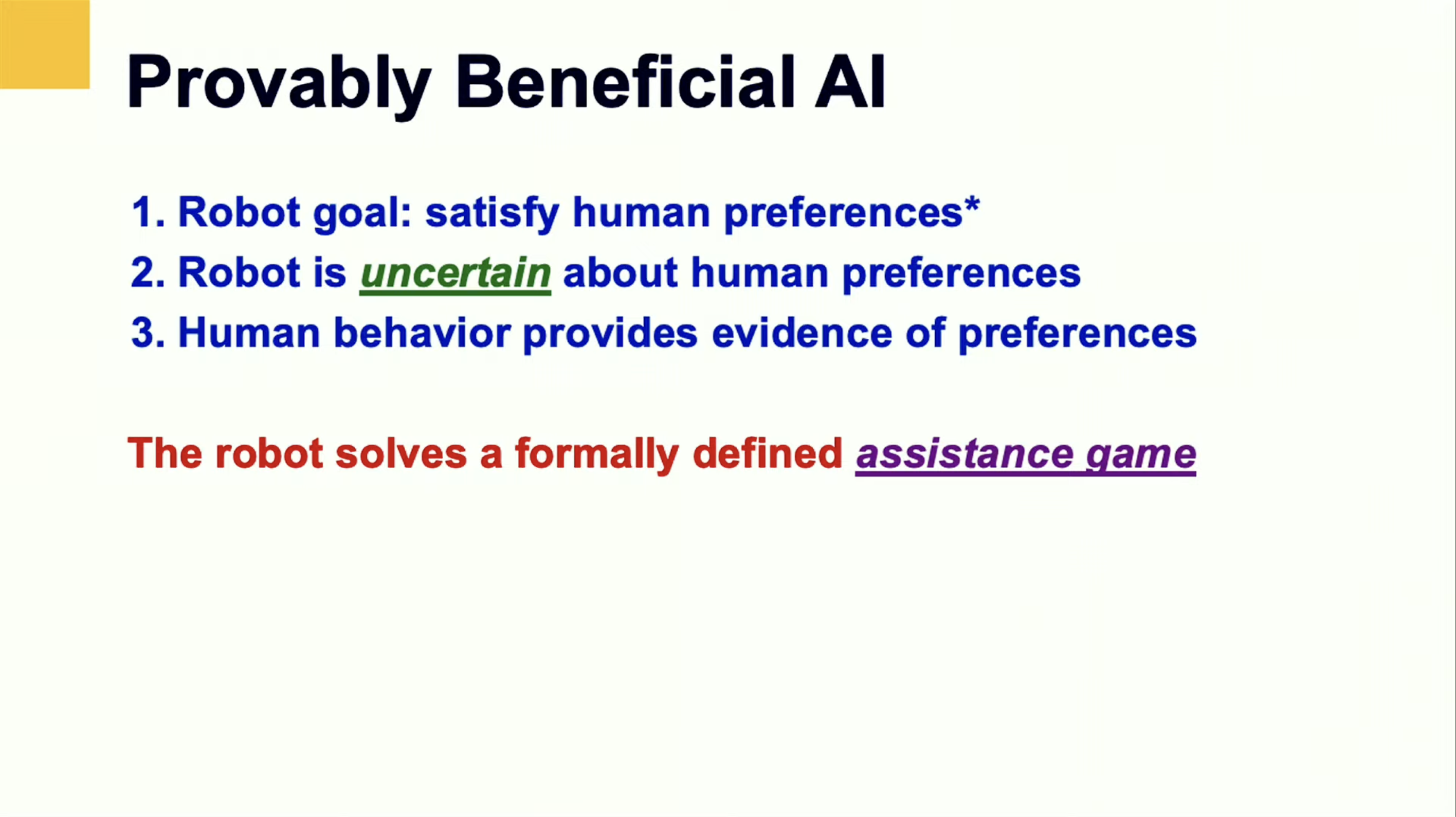

objective and so it can be more difficult for them uh to be beneficial to us but it’s not impossible

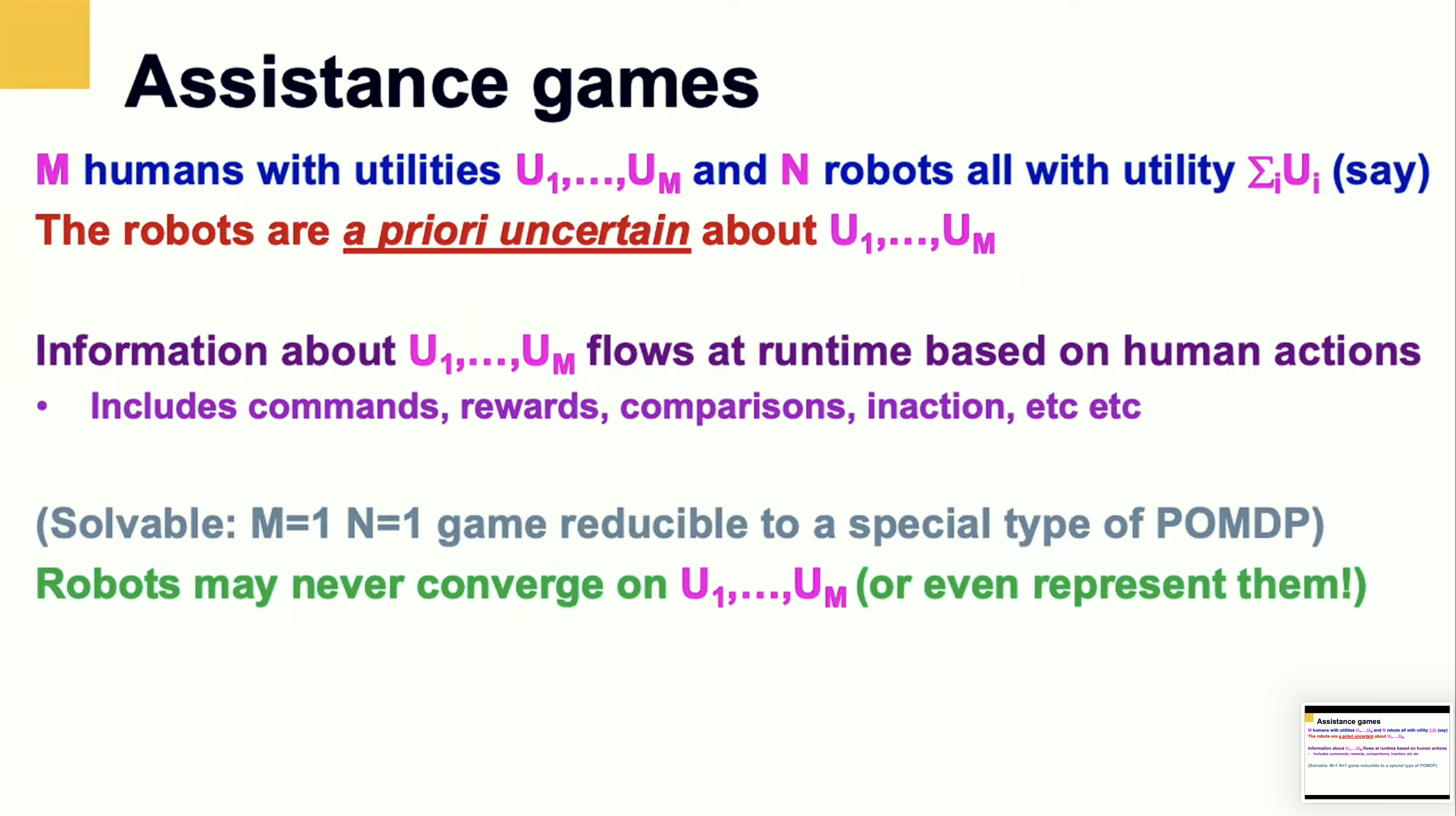

and we can turn that general idea into a mathematical framework that we call assistance games

and uh in assistance games you’ve got some number of humans who have utility

functions let’s say U_1 through U_N um and some number of robots

and all the robots have the same payoff which is the sum of the utilities of the

humans right and here I just use sum of utilities as a as an example this is the

utilitarian approach to how you make decisions on behalf of multiple humans and there are

other versions that you could program in instead but let’s just stick with this one because it’s easy and it’s the right

one um so uh

the key point here is that the robots don’t know what those utilities U_1

through U_N um actually are and this is crucial

right and in fact it’s that uncertainty that is going to give us control over

these more powerful entities forever
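
A minimal sketch of the setup as stated: the robot's payoff is the sum of the humans' utilities, and because it does not know those utilities, it can only maximize the expected sum under its current belief. The two humans, the outcomes, the candidate utility values, and the probabilities are all invented for illustration, and a full assistance game would also include dynamics and the humans' own actions.

```python
# Each hypothesis is one guess at the utilities of two humans, with a probability.
hypotheses = [
    {"prob": 0.6, "utilities": [{"tea": 1.0, "coffee": 0.0},
                                {"tea": 0.2, "coffee": 0.8}]},
    {"prob": 0.4, "utilities": [{"tea": 0.0, "coffee": 1.0},
                                {"tea": 0.2, "coffee": 0.8}]},
]

def robot_payoff(outcome, utilities):
    """The robot's payoff is the sum of the humans' utilities for the outcome."""
    return sum(u[outcome] for u in utilities)

def expected_payoff(outcome, hypotheses):
    """Average the payoff over the robot's uncertainty about the utilities."""
    return sum(h["prob"] * robot_payoff(outcome, h["utilities"]) for h in hypotheses)

best = max(["tea", "coffee"], key=lambda o: expected_payoff(o, hypotheses))
print(best, expected_payoff("tea", hypotheses), expected_payoff("coffee", hypotheses))
```

The robot has no objective of its own in this sketch; changing the belief changes what it does.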

and when you solve these so that’s that’s it so you literally set the game up like that and then you can compute

Solutions right you can calculate what is you know for any if I have to add in some

Dynamics for the world and so on but what is the Nash equilibrium of the game

uh from the point of view of the robots and from the point of view of the humans how should they play this game you can

calculate it and uh a key characteristic

is that as the game itself unfolds

information is going to flow from the humans to the robots about what it is

the humans want because the humans are going to be in this game they’re going to be acting uh they’re actually going to have an

incentive to teach the robot so they will act in ways that are pedagogical towards the robot and the

robots will understand because they’re also solving the game they’ll understand the human behavior as conveying

information about preferences as well and some of that human behavior might be

giving commands which the AI systems don’t have to take literally at face value but can take

those as evidence of human preferences the humans could give rewards it could

offer comparisons like you know I like I like it when you do that I don’t like it when you do this right humans might do

nothing that’s also evidence about uh you know so that the human does nothing while the robot’s behaving it usually

means the robot is doing okay right all of this is is evidence about

um about human preferences uh and we’ve so far been able to solve

games with one human and one robot and actually examine these behaviors in

various environments and seeing that uh it basically things happen the way uh

the way that you want an important property of this and I want to emphasize this over and over again

one and I think the word alignment causes confusion

the word alignment suggests that first of all you get alignment and then the robots help you

right that isn’t I don’t say never but probably never going to happen
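
The idea that human behavior is evidence about preferences, and that the robot never reaches complete certainty, can be sketched as a Bayesian update. The noisily rational (softmax) choice model, the parameter `beta`, the two candidate preference hypotheses, and the observed choices are all assumptions made for this example.

```python
import math

def update(prior, likelihoods):
    """Bayes' rule over a dict mapping hypothesis name to probability."""
    unnorm = {h: prior[h] * likelihoods[h] for h in prior}
    z = sum(unnorm.values())
    return {h: p / z for h, p in unnorm.items()}

def choice_likelihood(chosen, utilities, beta=2.0):
    """P(human picks `chosen`) if the human is noisily rational (softmax)."""
    weights = {o: math.exp(beta * u) for o, u in utilities.items()}
    return weights[chosen] / sum(weights.values())

candidate_utilities = {
    "prefers_tea": {"tea": 1.0, "coffee": 0.0},
    "prefers_coffee": {"tea": 0.0, "coffee": 1.0},
}
belief = {"prefers_tea": 0.5, "prefers_coffee": 0.5}
for observed in ["coffee", "coffee", "tea"]:
    likelihoods = {h: choice_likelihood(observed, u)
                   for h, u in candidate_utilities.items()}
    belief = update(belief, likelihoods)
print(belief)
```

Each observed choice shifts the belief, but because the human is modeled as noisy, no finite amount of evidence drives either hypothesis to probability zero.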

right the robots are always going to be acting under uncertainty about what the

full the full human preference structure actually is not least because we are

uncertain about many aspects of our own preference structure

um but even if we weren’t the robot still would have enormous gaps in their

understanding partly because there are things that we have preferences about that have never

occurred or anything like them have ever occurred uh in the history of the world but if they did occur we would have very

strong reactions to them one way or another right and so

especially for those kinds of things there’ll be uncertainty about what humans

would do and as I said when when you look at the

behaviors you get what you hope for you get deference uh to the humans because

they’re the ones whose utilities matter um you get minimally invasive Behavior

uh for actually sort of two reasons one is you might think it’s sort of a risk aversion like I don’t want to mess with

parts of the world where I’m not sure what the preferences are right so I don’t want to violate

preferences unintentionally um but there’s actually more to it than that there’s a notion that the world is

already kind of the way we like it right because we’ve been acting in the world for a long time and so we’ve kind of

made it the way we like it um and minimally invasive behavior

wouldn’t actually necessarily be the right thing to do if you were in a

completely ab initio untouched world where humans had never been

right there will be lots of things about it that we wouldn’t like and doing nothing might not be a safe thing for

the robot to do um but the key property is that the robot is going to be willing to be

switched off so Turing actually talked about the possibility that maybe if we were lucky

we might be able to switch off the power at strategic moments but even so our

species would still be humbled but of course if you really are dealing with a super

intelligent AI that really is pursuing some misspecified objective it’s already

thought of that right just like your pathetic attempts to defeat Deep Blue or AlphaGo uh

pathetic right it’s or whatever you think of to try to fool it it’s already thought of that

right and so you’re not going to be able to switch it off if it doesn’t want you to switch it off so you have to get it

and this is something that comes up over and over again right any attempts to block

undesirable behaviors you just have to assume that they’re going to fail

right just like we failed for 6,000 years to write tax laws in such a way

that people pay their taxes right despite enormous incentives for us to write the tax laws correctly we fail

over and over and over again because they’re they’re very smart powerful

groups who don’t want to pay taxes the only way to do it is they have to want to pay their taxes

right and same here right the machine has to want to be switched off uh if we want to switch it off

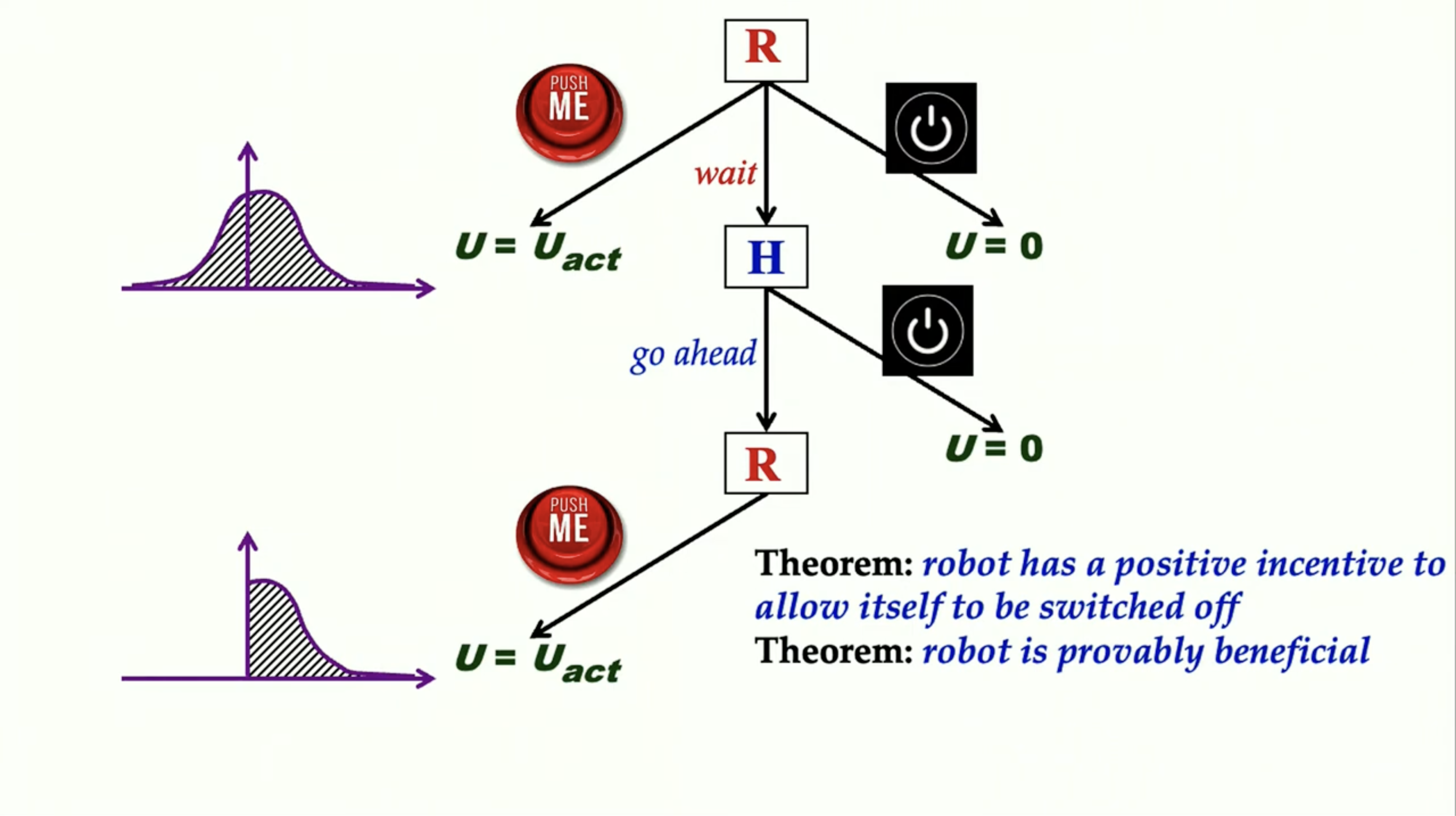

so let’s look at that problem right so here’s our robot it’s actually a hulking 200 kilogram robot and it has a safety

switch on the back uh there’s the switch and um if you if you look in the standard

model right you say okay fetch the coffee robot says okay you can’t fetch the

coffee if you’re dead so I need to disable my off switch so that I don’t get switched off

because that would prevent me from fetching the coffee right this is exactly the behavior we want to avoid

right but it’s sort of unavoidable in a standard model because you can’t really achieve anything if you’re dead

and so the first thing a robot will do will make sure that it can’t be switched

off um but if you have uncertainty about the objective right if instead of saying

fetching the coffee is my sole goal in life right if it’s uncertain about all the

rest of human objectives and it doesn’t behave that way so here’s a little

um toy version of this off switch problem where uh here’s the robot gets to go

first in this game and there are two main choices right so it could take some action pushing the

big red button which might be quite dangerous you know maybe this launches the nuclear weapons uh maybe it sets off

all the sprinklers in the building who knows what it does and the robot’s quite uncertain about the value of this big

red button for the human right so so I’ve Illustrated that with the uh feel

it though you probably can’t okay you can’t see my mouse anyway so this this uncertainty this probability

distribution is the probability distribution about the utility of pushing the big red button from the

point of view of the human and there’s a lot of uncertainty but the mean of this distribution is positive

well the robot could also just switch itself off in other words commit suicide and

we’ll fix the value of committing suicide to be zero that’s just without loss of generality and so in this

situation the robot would choose to push the big red button right uh and so the the assumption is

here that it would it would not let us switch it off right

if it doesn’t have a choice right it could commit suicide or push the big red button and it’s just going to push the big red button because it has a positive

expected utility so if we add a third choice which is to

wait and then allow the human to switch it off

or not right the question is will it take that choice

right and you might say well what’s the point because the robot if I’m the robot right I can

already switch myself off so what’s the point of waiting and letting the human do it if it’s a good idea for me to be

switched off uh I should just do it and otherwise I should push the big red button but

actually the human is in a different decision-making situation than the robot because the human is the one who knows

more about their preferences than the robot and so uh if the human doesn’t switch me

off what do I learn I learn that pushing the big red button is actually okay

in other words I learned that the negative quadrant in my uncertainty distribution disappears

and so if you if you go through the math it’s very trivial you can show that

in this situation the robot will always choose to wait will always

allow itself to be switched off provided that it doesn’t know which Choice the

human will make so provided that it’s uncertain about the utility

of the human then it will always allow itself to be switched off if it becomes certain about

the utility of the human then it won’t there will be no advantage to allowing

itself to be switched off so the incentive to do so disappears you had a question Dave

yeah in this in this toy example right um

but as happens with these simple mathematical models right it does generalize appropriately anyway so

we can prove this it’s a trivial theorem it’s exactly analogous to uh the non-negative expected value of

information um and in a sense you can see that the human

uh by making this Choice conveys information to the robot about the preference function uh and so the robot

wants that information and therefore it has an incentive to try to get it
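
The off-switch argument above can be checked numerically. The following is a minimal sketch, not the speaker's own code: it assumes a perfectly rational human who lets the robot proceed exactly when the true utility U of pushing the button is positive, and it assumes (purely for illustration) a Gaussian belief over U with a positive mean. The function name `off_switch_values` is hypothetical. Waiting is then worth E[max(U, 0)], which can never be less than max(E[U], 0), the better of acting immediately and switching off.

```python
import random

def off_switch_values(utility_samples):
    """Expected value of each robot choice, given samples from its belief over U."""
    n = len(utility_samples)
    act = sum(utility_samples) / n  # push the big red button right away
    off = 0.0                       # switch itself off (value fixed at zero)
    # Wait: a rational human allows the action only when U > 0, else switches off.
    wait = sum(max(u, 0.0) for u in utility_samples) / n
    return {"act": act, "off": off, "wait": wait}

# Uncertain robot: illustrative Gaussian belief with positive mean (an assumption).
rng = random.Random(0)
samples = [rng.gauss(0.5, 2.0) for _ in range(10_000)]
values = off_switch_values(samples)
best = max(values, key=values.get)

# Certain robot: all belief mass on one positive utility, so waiting gains nothing.
certain = off_switch_values([1.0] * 5)
```

With the uncertain belief, waiting comes out ahead; with the certain belief, waiting and acting have the same value, which matches the remark that the incentive to defer disappears once the robot is sure of the human's utility.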

um and so this is this is the core I think of of why uncertainty about

preferences gives us control over the robot and you can elaborate this in all

kinds of ways you can make the human not perfectly rational you can have a cost

for asking the question of the human like annoying the human all the time uh and so on so forth and it behaves

exactly as you’d expect right the the more the cost of annoying the human the more often the human’s going to have to

put up with the robot doing slightly sub-optimal things and and so on and so forth so everything behaves itself

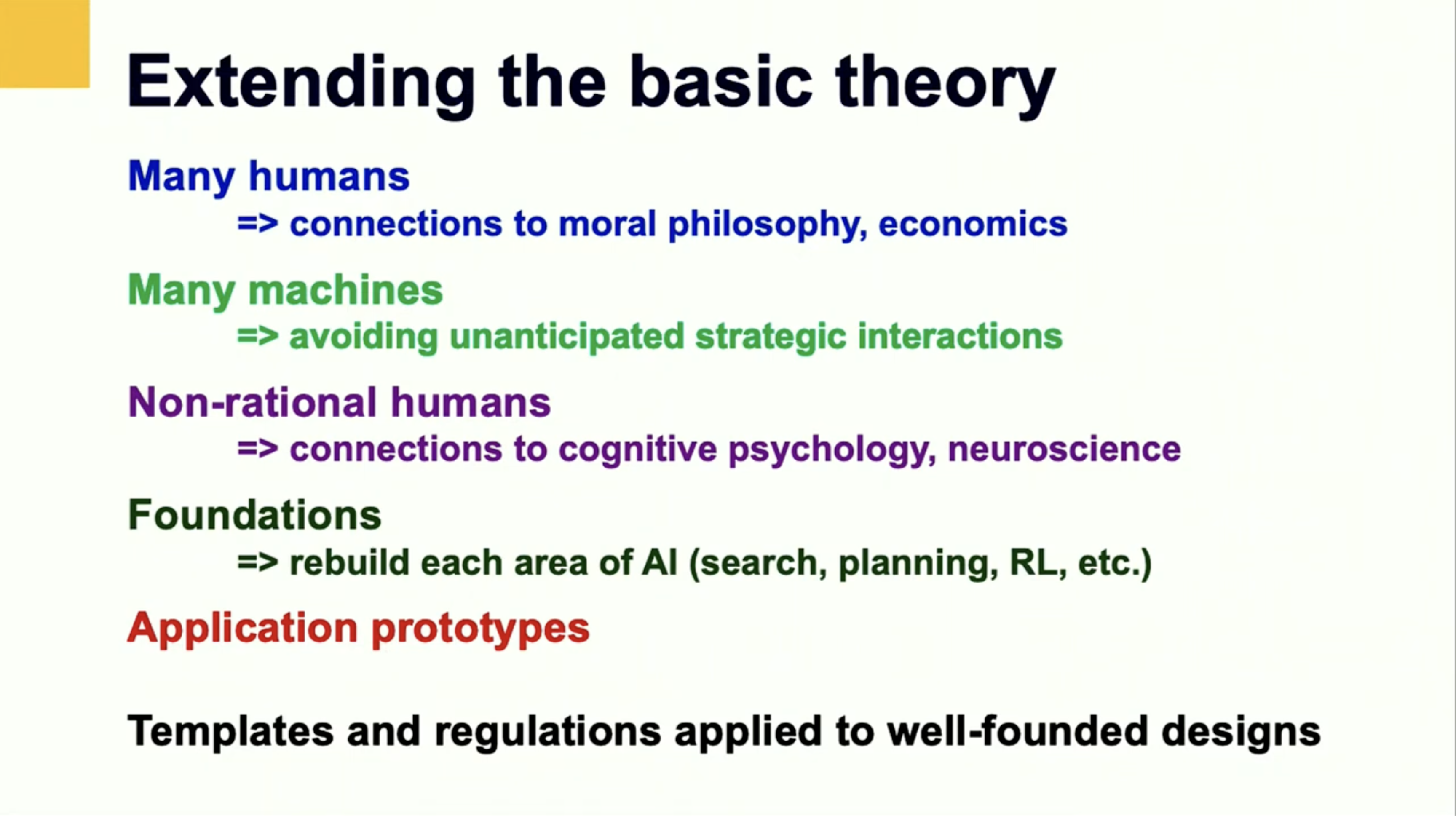

okay so there are tons and tons of important open questions right which

are still uh really basic research questions that we need to solve some of

them are not new of course um

so the first set of questions have to do with the fact that uh human preferences are very complicated

and the preferences of many humans are even more complicated some of them have to do with the fact

that uh even given our preferences

our Behavior is far from perfect

and uh and and that um is something that uh that Stuart

number one talked about quite a bit um and then we have to deal with the fact that

the machines aren’t perfect either and they’re never going to be perfect so uh what should we actually be trying

to prove about them uh given that they’re nowhere close to being rational either

um so when we think about real human preference functions

first of all for an individual human they could be very very complex

they’re almost certainly going to need to be expressed in terms of latent

variables like uh you know health and physical security they’re not I don’t want you to think of this as a if at

least if you’re a deep learning person you’re probably thinking of okay how do I map from video to a number or two

videos to a ranking of the two videos right that’s not really how we think about preference functions we think

about them in terms of things like health and physical safety and uh

agency and various other abstract properties

and there’s also the question of how we learn these do we have to learn it from scratch for every different human being

uh almost certainly not right I think there’s a massive amount of commonality across humans so how do we capture that

and take advantage of it so that systems are not starting from scratch with every

new person I think it’s also interesting that uh

that the textual record right everything we’ve ever written down uh contains

massive amounts of information about human preferences and it’s kind of interesting when you when you read text

from that point of view it just starts like flooding off the page at you uh you

realize how much is implicit just in the fact that people said something right so the the oldest text we know about

is some of the most boring text that’s ever been written right it says you know

Joe uh brought five camels to the market and traded them with Mary for 20 bushels

of corn right and on and on and on like this um so where you learn something about

the relative value of corn and camels from that that’s interesting but what

you learn also is from the fact that this was what they chose you know having figured out how to write this is what

they chose to write on those valuable you know uh time-consuming clay tablet things

right that tells you something about the importance of those types of material goods and knowledge of the trade and

records of it to the organization of those societies and so on so it’s a

little bit vague exactly what it means but it means it’s really important uh so there’s tons and tons of information and

it’s in various different uh explicit and implicit forms

um there’s also a lot of information that

psychologists have developed arguing that the simple uh notion of R(s, a, s′)

right which actually goes back to uh a paper by Samuelson

the economist in about 1930 I think um arguing that we should we should

think about uh utilities of histories utilities of sequences of states and actions as sums of rewards associated

with transitions he was mainly arguing because it’s mathematically straightforward to to do things that way

um and later on there were some theorems showing that under various stationarity

properties of preferences that that is in fact the only solution but

real human preferences and certainly people like Daniel Kahneman have done pretty convincing

research to show this um they’re not just preferences on state

action sequences right they have or at least the state has to include mental state because it matters whether you

know what’s going on not not just what’s going on but you know what’s going on that’s important to your happiness right

obviously and yet none of our models actually capture that correctly
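
For reference, the additive form described above, where the utility of a history is a sum of rewards attached to transitions, can be written as follows. This is a sketch in standard MDP notation rather than a formula taken from the talk; the horizon T and the optional discount factor γ are assumptions added for completeness (γ = 1 gives the plain sum).

```latex
h = (s_0, a_0, s_1, a_1, \dots, s_T), \qquad
U(h) = \sum_{t=0}^{T-1} \gamma^{t} \, R(s_t, a_t, s_{t+1}), \qquad 0 < \gamma \le 1
```

The objection in the talk is that s_t here normally describes only the external world, so effects such as knowing what is going on are left out unless the state is extended to include mental state.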

memory is something you carry with you afterwards right whether it’s memory of good events or bad events has a massive

impact on you so it’s not just that a bad thing happened but it’s that I have to remember the damn thing for the next

50 years right and so now we have lots of PTSD treatments to try to help you forget the

bad things so that you can get on with the rest of your life anticipation of good or bad things happening in the

future also has a huge impact on your well-being and then autonomy itself right the

counterfactual ability to choose what isn’t in your own best interests right is really important to us

and how do we even take that into account how do you formulate that in standard sort of mdp language uh is not

clear um

another big issue uh is that if you’re going to take a sort of Bayesian approach to this where the AI system

starts out uncertain about human preferences learns more over time there’s a danger of model

misspecification that if you have a prior that’s too narrow that actually

rules out the true utility function because it doesn’t include it in its hypothesis space

then the learning process converges to an exact belief in an incorrect utility

function and that’s exactly what we want to avoid right that a system believes 100% in the

wrong utility function so can we write priors in a way that is universal for

what human preferences could be that’s a really difficult thing it is the case

that you can allow you can allow a little bit of error right so an AI system optimizing for an

incorrect utility function isn’t the end of the world as long as it’s close enough to the true utility and you hope that

the improved ability to optimize makes up for the fact that it’s optimizing the wrong thing and we’ll still end up being

better off than if we were trying to optimize our own utility function unaided
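
The misspecification danger described above can be illustrated with a toy Bayesian update. This is a hedged sketch under stated assumptions, not a model from the talk: the human's preference is reduced to a single hypothetical parameter theta (the probability of choosing option A over option B), the data are a fixed 70 choices of A and 30 of B, and the function name `posterior` is made up for the example. A prior whose hypothesis space excludes the true theta still becomes almost certain, just about the wrong value.

```python
import math

def posterior(hypotheses, n_a, n_b):
    """Posterior over theta, starting from a uniform prior on `hypotheses` only."""
    # Log-likelihood of n_a choices of A and n_b choices of B under each theta.
    log_w = [n_a * math.log(t) + n_b * math.log(1 - t) for t in hypotheses]
    top = max(log_w)
    weights = [math.exp(x - top) for x in log_w]  # subtract max for stability
    total = sum(weights)
    return {t: w / total for t, w in zip(hypotheses, weights)}

# Observed behaviour consistent with a true theta of about 0.7 (an assumption).
narrow = posterior([0.1, 0.2, 0.3], n_a=70, n_b=30)  # true value excluded
wide = posterior([i / 10 for i in range(1, 10)], n_a=70, n_b=30)

narrow_best = max(narrow, key=narrow.get)
wide_best = max(wide, key=wide.get)
```

Here the narrow prior puts nearly all of its posterior mass on 0.3, the closest hypothesis it was allowed to consider, while the wider prior settles on 0.7.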

the most difficult problem about real human preferences I think is plasticity the fact that they can be changed by

external influences causes all kinds of problems right the most obvious is should I optimize for

you today or you tomorrow right given that I’m going to take an action that affects you tomorrow right

that’s a that’s a problem that doesn’t seem to have an answer as far as We Know yep

okay 10 minutes oh good good got it

um and then how do we avoid AI systems that manipulate our preferences to make them

easier to satisfy and maybe the most important uh is the fact that the preferences we

have are actually not autonomously acquired they’re the result of our upbringing and our

society and sometimes deliberate manipulation by those in power to make us actually enjoy our oppression

and so should AI systems even take our preferences at face value

uh or not that’s a really difficult question okay uh so I think

uh Stuart number one already dealt with um this uh this issue of

um the fact that our behavior is generated by the combination of our

preferences Theta in my notation and our decision-making processes f

and uh and it may not always be easy to separate our decision process from our

preferences because we actually need to optimize on behalf of the preferences so if we don’t separate those two things

correctly we could end up optimizing on behalf of something that actually doesn’t

represent human preferences correctly so we have to somehow have a way of learning about F separately from learning about

Theta and the way economists have done this for decades is we ask humans to

make decisions in situations that are very very very straightforward would you like an apple or ten dollars

here whatever you choose it’s assumed that in that case you choose what you prefer

right um and and then you can extrapolate from there

um okay and then real machines I think you know the best we’re going to be able to do is to prove that uh despite the

machine’s limitations that in expectation uh we’re better off using that limited machine uh than trying to

make our own decisions okay more open issues

um developing the theory of multi-human assistance games so more than one human

you immediately get questions of strategic interactions among the humans that they will lie about their

preferences in order to get more help from the robot so the robot has to understand that it’s in that game and

and uh basically you know invoke mechanisms that prevent

that kind of strategic interaction um we have to make

basic assumptions about comparability of human preferences because if we’re going

to do anything like what I suggested before summing up the utilities those utilities have got to be on

commensurable scales right and uh some economists have argued

actually that it’s completely meaningless to do that

right which means that the only kind of optimality you can aim for is Pareto optimality that you know this decision

is strictly better because everybody prefers it or at least nobody disprefers this decision

um I actually think that’s nonsense right no one really thinks that

economists may want to state that as an axiom that that uh interpersonal

comparisons are meaningless but no one really believes that Jeff Bezos waiting

one microsecond longer for his private jet to arrive is incomparable to your

mother watching her child die of starvation over three months right but that’s what the Axiom says

that those two things cannot be ranked uh one is not necessarily more important or less important than the other

okay so but as I said no one actually believes that Axiom
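
The contrast drawn above between Pareto optimality and summing utilities can be made concrete. This is a small sketch under assumptions: each outcome is a tuple of per-person utilities, the numbers are invented, and the function names are hypothetical. Pareto dominance needs no interpersonal comparison, but it often leaves outcomes unranked; a sum ranks them, but only means something if the utilities are on commensurable scales.

```python
def pareto_dominates(a, b):
    """True if nobody is worse off under a than under b and somebody is better off."""
    pairs = list(zip(a, b))
    return all(x >= y for x, y in pairs) and any(x > y for x, y in pairs)

def utilitarian_better(a, b):
    """True if a has the larger total; assumes utilities share a common scale."""
    return sum(a) > sum(b)

# Invented utilities for two people under three candidate decisions.
a = (5.0, 1.0)
b = (4.0, 9.0)
c = (5.0, 2.0)

unranked = not pareto_dominates(a, b) and not pareto_dominates(b, a)
```

Here `c` Pareto-dominates `a`, but `a` and `b` are incomparable under the Pareto criterion, while the sum prefers `b`.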

other questions uh again Stuart number one mentioned this uh actions that change the

number of humans like China’s one child policy got rid of 500

million people uh which we would usually think is an incredible atrocity right to extinguish

the lives of 500 million people they just happen to be potential people uh and not current people

so how do we make decisions about that um okay I’m gonna have to skip over the

generalized social aggregation theorem but you can you can read about it in our papers um and then you know similar questions

come up when you have multiple robots in the game and you want to make sure uh

again that we don’t get unfortunate strategic interactions among the robots particularly if we have billions of them

even if they’re all aligned with or if they’re all playing the assistance game they’re all trying to do the best for

Humanity uh perhaps there could be really bad sort of prisoner’s dilemma kinds of

interactions I think not because it’s a common payoff game and in those you don’t usually have those kinds of strategic

problems they certainly have coordination problems which we have to deal with but maybe the fact that it’s

also open source that they could exchange their code with each other maybe we’ll be able to find good zero

shot solutions to the coordination problems uh embeddedness is another question so

all the standard theories of rational decision making assume basically that you can’t change your own code uh that

the actions only affect the outside world not the algorithm that’s making the decisions um and of course in the real world our

agents are embedded in their own environment and we have to make sure that they don’t mess with themselves in

the wrong way and then if we’re going to compete I think with uh the sort of

headlong Rush that’s happening in in the AI World particularly in the commercial

world we have to show that safe well-designed Solutions are competitive

in terms of functionality with tools produced by other approaches

okay very briefly I’ll go through the other points I want to make so first of all

um what could we use in terms of well-founded technological components things we actually understand what

they’re doing uh and we can we can prove properties about the systems we build as a result so probabilistic programming is

one technology which basically combines probability Theory with with any Turing-equivalent

formalism whether it’s programming languages or or first order logic it gives you enormous improvements in

expressive power compared to circuit-based languages uh and they can pretty much do anything

you want they come with general purpose inference algorithms so you write in theory you write one inference algorithm

and then for any model any data any query they can give you the answers you

need um and you can get nice cumulative learning which is very hard to get with deep Learning Systems where the stuff

you learn feeds back into the learning process so that you can learn better the next time and it’s

uh growing it’s sort of a stealth field but it’s actually quite large now about 2500 papers in 2020
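
The idea of writing one inference routine that works for any model, evidence, and query can be sketched in a few lines. This is an illustration only, under loud assumptions: the model is a made-up event-and-detector example with invented probabilities, the names `toy_model` and `infer` are hypothetical, and rejection sampling stands in for the far more efficient general-purpose algorithms that real probabilistic programming systems use.

```python
import random

def toy_model(rng):
    """Generative model (invented numbers): a rare event and a noisy detector."""
    event = rng.random() < 0.1
    detected = rng.random() < (0.9 if event else 0.05)
    return {"event": event, "detected": detected}

def infer(model, evidence, query, n=100_000, seed=0):
    """Generic rejection sampler: estimate P(query | evidence) for any model."""
    rng = random.Random(seed)
    kept = hits = 0
    for _ in range(n):
        world = model(rng)       # run the program to sample a possible world
        if evidence(world):      # keep only worlds consistent with the evidence
            kept += 1
            hits += query(world)
    return hits / kept if kept else float("nan")

# Query: how likely is an event, given that the detector fired?
p_event = infer(toy_model, lambda w: w["detected"], lambda w: w["event"])
```

The same `infer` function can be reused unchanged with a different model, evidence predicate, or query, which is the separation between model and inference that the passage describes.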

one example uh the monitoring system for the nuclear Test Ban Treaty is a probabilistic

program uh that that runs in Vienna uh it pretty much runs on a laptop uh and

but in real time it’s responsible for detecting uh and uh

identifying all the seismic events on the entire planet so it produces a Daily

Bulletin of all the events and where they happened and how big they were and whether they were potentially suspicious

and so on and it’s formulated as a as a probability problem so there’s evidence the raw data there’s a query what

happened and there’s a model which we write as a probabilistic program that looks like that

so that is the probabilistic program that is the monitoring algorithm for the nuclear Test Ban Treaty took about half

an hour to write Works more than twice as well as 100 Years of seismology

research was able to produce before that so just an example to show that there

are Technologies other than deep learning that can do really well on really hard problems

um and and they can be in some sense very straightforward and this is just showing successful

detection of a nuclear explosion in North Korea uh back in 2013. okay uh verification is actually much

more powerful than most people think uh you know when you ask a typical Silicon Valley programmer you know

why don’t you uh you know verify that your code is correct they’ll say oh you know we know verification doesn’t work

right we tried that in the 60s yeah it doesn’t work right well actually time has passed since the 60s

and quite big systems are fully verified uh including if you have any iOS device

if you have an iPhone or an iPad the kernel for the operating system is fully

verified um if you’ve ever taken Airbus uh avionics is fully verified uh if you

take a metro in Paris it’s fully verified so so there are very very capable verification systems and I think

the you know the the pendulum is swinging right the I think the gate is opened and we will start seeing much

greater use of these Technologies and not just verification but also synthesis

and you can use as much deep learning as you want to to guide the synthesis

process to make it run more efficiently but the point is you end up with code that’s guaranteed to be correct not just

code that looks like someone else’s code that they hoped was correct right but code that is guaranteed to be correct

um then the last point which I mentioned at the beginning uh we already have a pretty bad digital ecosystem

and some estimates of cybercrime are running at six trillion dollars a year

for the cost not necessarily the revenues of cyber crime but the the the cost the collateral damage to the world

you know and that’s 10 times the software industry right it’s also 10 times the

semiconductor industry so something is seriously wrong when we are imposing costs on the world that are

10 times bigger than the revenues of the industries uh that are supposed to be generating the benefits

so it’s a sort of weird situation and it’s causing the internet to actually disappear

because all the major players now have moved off the internet and are running private networks with higher levels of

security than the internet can provide um and the the issue the reason this is

relevant is we have to prevent so if we develop safe AI it’s not enough right we have to prevent unsafe AI from

being deployed and I think passing laws let alone codes of conduct or principles

but even laws are not enough as we know to prevent those kinds of events from

happening so I think we need a digital ecosystem that itself

won’t run non-certified unsafe AI systems so we

need something like proof carrying code where software carries with it checkable proofs that it’s safe that it conforms

to the required standards and those proofs can be checked by hardware very efficiently

um and so getting getting there from here is obviously a huge challenge but I

don’t see any other way to do it okay so I’ll summarize yeah what I said there’s a lot of work to do and I think but we

can do it I think the right way to go is to start with small and safe and then gradually

increase the capabilities of the systems but while remaining within this methodology of provably safe and

beneficial AI thanks [Applause]