Anthropic’s Claude AI can now digest an entire book like The Great Gatsby in seconds

Claude’s input memory grows to 75,000 words, beating GPT-4 by a wide margin.

BENJ EDWARDS – 5/12/2023, 4:44 PM

On Thursday, AI company Anthropic announced it has given its ChatGPT-like Claude AI language model the ability to analyze an entire book’s worth of material in under a minute. This new ability comes from expanding Claude’s context window to 100,000 tokens, or about 75,000 words.

Like OpenAI’s GPT-4, Claude is a large language model (LLM) that works by predicting the next token in a sequence when given a certain input. Tokens are fragments of words used to simplify AI data processing, and a “context window” is similar to short-term memory—how much human-provided input data an LLM can process at once.
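
As a rough illustration of how tokens relate to words, here is a minimal Python sketch. It assumes the ratio implied by Anthropic’s own figures (100,000 tokens for about 75,000 words, or 0.75 words per token). Real tokenizers vary by model and by text, so treat the function names and the ratio as illustrative assumptions, not as any vendor’s method.

```python
# Rule of thumb taken from the article's own figures:
# 100,000 tokens is described as about 75,000 words.
# This is an approximation for English text, not a real tokenizer.
WORDS_PER_TOKEN = 75_000 / 100_000  # 0.75


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Estimate how many tokens a given number of English words needs."""
    return round(words / WORDS_PER_TOKEN)


if __name__ == "__main__":
    print(tokens_to_words(100_000))  # 75000
    print(words_to_tokens(3_000))    # 4000
```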

A larger context window means an LLM can consider larger works like books or participate in very long interactive conversations that span “hours or even days,” according to Anthropic:

The average person can read 100,000 tokens of text in ~5+ hours, and then they might need substantially longer to digest, remember, and analyze that information. Claude can now do this in less than a minute. For example, we loaded the entire text of The Great Gatsby into Claude-Instant (72K tokens) and modified one line to say Mr. Carraway was “a software engineer that works on machine learning tooling at Anthropic.” When we asked the model to spot what was different, it responded with the correct answer in 22 seconds.

While it may not sound impressive to pick out changes in a text (Microsoft Word can do that, but only if it has two documents to compare), consider that after feeding Claude the text of The Great Gatsby, the AI model can then interactively answer questions about it or analyze its meaning. 100,000 tokens is a big upgrade for LLMs. By comparison, OpenAI’s GPT-4 LLM boasts context window lengths of 4,096 tokens (about 3,000 words) when used as part of ChatGPT and 8,192 or 32,768 tokens via the GPT-4 API (which is currently only available via waitlist).
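
To make the size comparison concrete, the sketch below checks which of the context windows quoted above could hold a prompt of a given size. The window sizes come from this article; the labels, the `models_that_fit` helper, and the 1,000-token reply budget are hypothetical, and the sketch assumes the reply has to share the window with the prompt.

```python
from typing import List

# Context window sizes in tokens, as quoted in the article.
# The dictionary keys are descriptive labels, not API model names.
CONTEXT_WINDOWS = {
    "GPT-4 in ChatGPT": 4_096,
    "GPT-4 API (8K)": 8_192,
    "GPT-4 API (32K)": 32_768,
    "Claude (100K)": 100_000,
}


def models_that_fit(prompt_tokens: int, reply_tokens: int = 0) -> List[str]:
    """Return the labels whose window can hold the prompt plus a reply budget."""
    needed = prompt_tokens + reply_tokens
    return [name for name, size in CONTEXT_WINDOWS.items() if size >= needed]


if __name__ == "__main__":
    gatsby_tokens = 72_000  # the "72K tokens" figure Anthropic gives for the novel
    print(models_that_fit(gatsby_tokens, reply_tokens=1_000))  # ['Claude (100K)']
```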

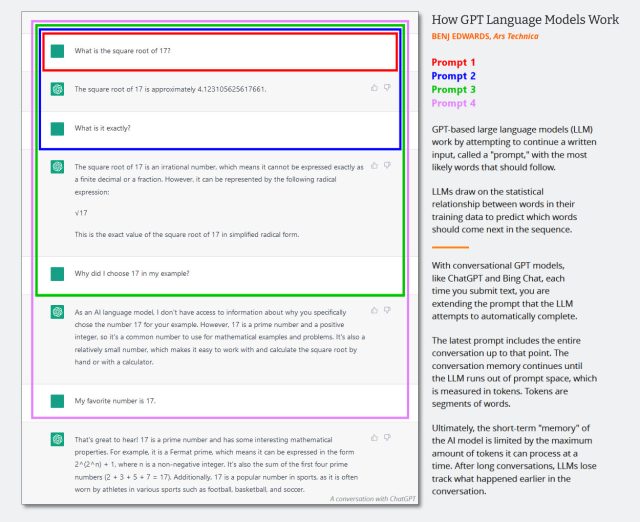

To understand how a larger context window leads to a longer conversation with a chatbot like ChatGPT or Claude, we made a diagram for an earlier article that shows how the size of the prompt (which is held in the context window) enlarges to contain the entire text of the conversation. That means a conversation can last longer before the chatbot loses its “memory” of the conversation.
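
The growing-prompt idea can also be sketched in a few lines of Python. This is a simplified model under stated assumptions, not how ChatGPT or Claude is actually implemented: the `Conversation` class is hypothetical, tokens are estimated from a word count at 0.75 words per token, and the oldest turns are simply dropped once the history no longer fits.

```python
from collections import deque

WORDS_PER_TOKEN = 0.75  # rough ratio implied by "100,000 tokens, or about 75,000 words"


def estimate_tokens(text: str) -> int:
    """Crude token estimate from a whitespace word count (not a real tokenizer)."""
    words = len(text.split())
    return max(1, round(words / WORDS_PER_TOKEN))


class Conversation:
    """Keeps the turns that fit in a fixed token budget, dropping the oldest first."""

    def __init__(self, context_window: int) -> None:
        self.context_window = context_window
        self.turns = deque()  # every turn so far, oldest on the left

    def prompt_tokens(self) -> int:
        """Estimated size of the prompt, which is the whole retained history."""
        return sum(estimate_tokens(turn) for turn in self.turns)

    def add_turn(self, text: str) -> None:
        self.turns.append(text)
        # The whole history is resent as the prompt, so trim until it fits.
        while self.prompt_tokens() > self.context_window and len(self.turns) > 1:
            self.turns.popleft()


if __name__ == "__main__":
    chat = Conversation(context_window=20)
    for i in range(6):
        chat.add_turn(f"turn {i} text")  # three words, about four tokens each
    print(len(chat.turns), chat.turns[0])  # 5 turn 1 text
```

With a 20-token budget, the sixth turn pushes the first one out, which is the “memory loss” described above. A larger window only postpones that point.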

According to Anthropic, Claude’s enhanced capabilities extend past processing books. The enlarged context window could potentially help businesses extract important information from multiple documents through a conversational interaction. The company suggests that this approach may outperform vector search-based methods when dealing with complicated queries.

While not as big a name in AI as Microsoft or Google, Anthropic has emerged as a notable rival to OpenAI in both LLMs and API access. Former OpenAI VP of Research Dario Amodei and his sister Daniela founded Anthropic in 2021 after a disagreement over OpenAI’s commercial direction. Notably, Anthropic received a $300 million investment from Google in late 2022, with Google acquiring a 10 percent stake in the firm.

Anthropic says that 100K context windows are available now for users of the Claude API, which is currently restricted by a waitlist.

LEARN MORE:

THE VERGE. Anthropic leapfrogs OpenAI with a chatbot that can read a novel in less than a minute

Anthropic has expanded the context window of its chatbot Claude to 75,000 words — a big improvement on current models. Anthropic says it can process a whole novel in less than a minute.

An often overlooked limitation for chatbots is memory. While it’s true that the AI language models that power these systems are trained on terabytes of text, the amount these systems can process when in use — that is, the combination of input text and output, also known as their “context window” — is limited. For ChatGPT it’s around 3,000 words. There are ways to work around this, but it’s still not a huge amount of information to play with.

Now, AI startup Anthropic (founded by former OpenAI engineers) has hugely expanded the context window of its own chatbot Claude, pushing it to around 75,000 words. As the company points out in a blog post, that’s enough to process the entirety of The Great Gatsby in one go. In fact, the company tested the system by doing just this — editing a single sentence in the novel and asking Claude to spot the change. It did so in 22 seconds.

You may have noticed my imprecision in describing the length of these context windows. That’s because AI language models measure information not by number of characters or words but in tokens, a semantic unit that doesn’t map precisely onto these familiar quantities. It makes sense when you think about it. After all, words can be long or short, and their length does not necessarily correspond to their complexity of meaning. (The longest definitions in the dictionary are often for the shortest words.) The use of “tokens” reflects this truth, and so, to be more precise: Claude’s context window can now process 100,000 tokens, up from 9,000 before. By comparison, OpenAI’s GPT-4 processes around 8,000 tokens (that’s not the standard model available in ChatGPT; you have to pay for access), while a limited-release full-fat model of GPT-4 can handle up to 32,000 tokens.

Right now, Claude’s new capacity is only available to Anthropic’s business partners, who are tapping into the chatbot via the company’s API. The pricing is also unknown, but is certain to be a significant bump. Processing more text means spending more on compute.

But the news shows AI language models’ capacity to process information is increasing, and this will certainly make these systems more useful. As Anthropic notes, it takes a human around five hours to read 75,000 words of text, but with its expanded context window, Claude can potentially read, summarize, and analyze a long document in a matter of minutes. (Though it doesn’t do anything about chatbots’ persistent tendency to make information up.) A bigger context window also means the system is able to hold longer conversations. One factor in chatbots going off the rails is that when their context window fills up, they forget what’s been said, which is why Bing’s chatbot is limited to 20 turns of conversation. More context equals more conversation.