An EXCELLENT resource for AI Safety engineering, governance and policy.

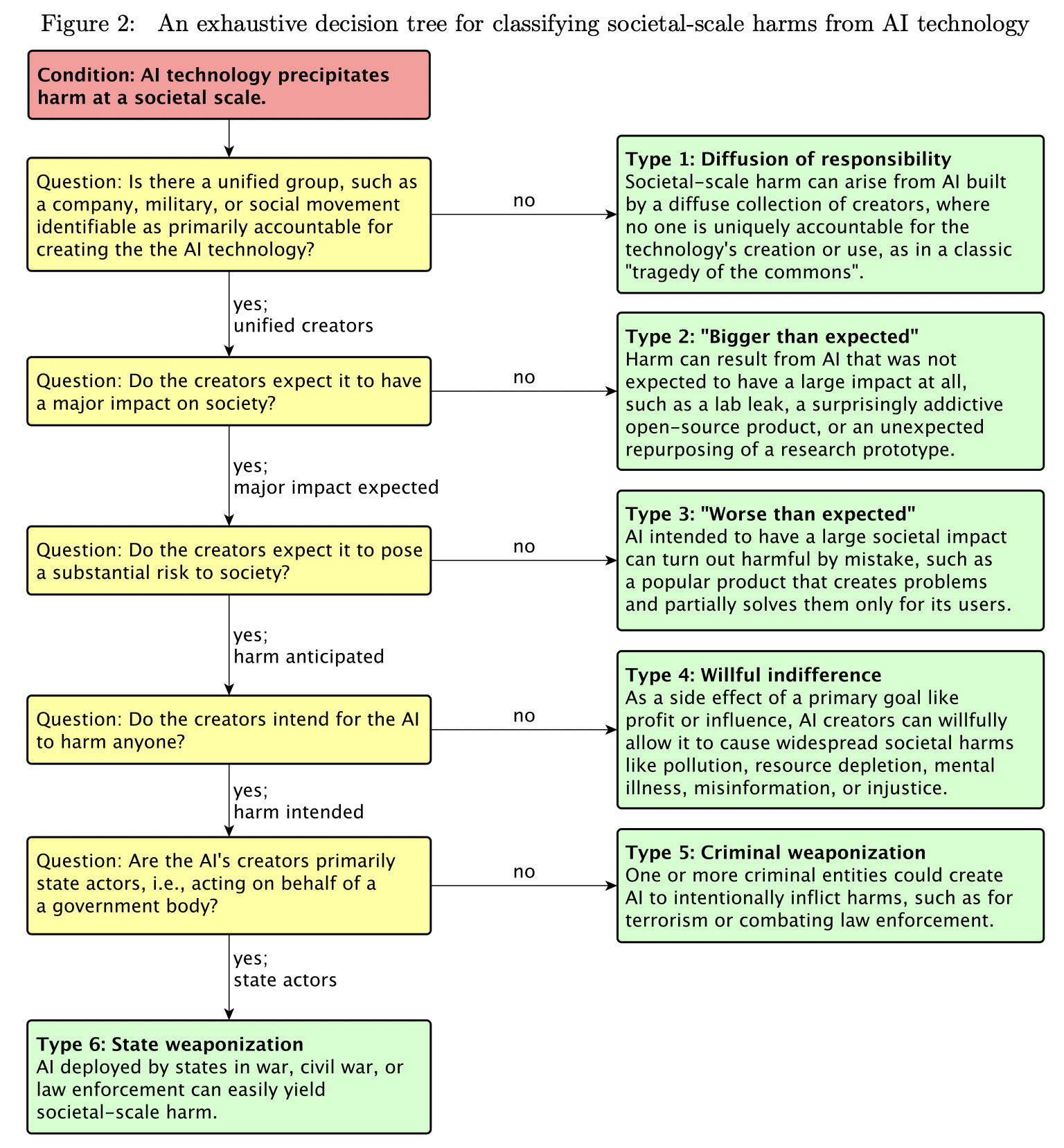

FLI Newsletter: “Categorizing Societal-Scale AI Risks: AI researchers Andrew Critch and Stuart Russell developed an exhaustive taxonomy, centred on accountability, of societal-scale and extinction-level risks to humanity from AI.”

TASRA: “In reality, no well-defined ‘creator’s intent’ might exist if multiple AI systems are involved and built with different objectives in mind.”

Simply put: human error happens.

TASRA: a Taxonomy and Analysis of Societal-Scale Risks from AI.

Stuart Russell. Andrew Critch.

16 JUNE 2023.

Abstract. While several recent works have identified societal-scale and extinction-level risks to humanity arising from artificial intelligence, few have attempted an exhaustive taxonomy of such risks. Many exhaustive taxonomies are possible, and some are useful—particularly if they reveal new risks or practical approaches to safety. This paper explores a taxonomy based on accountability: whose actions lead to the risk, are the actors unified, and are they deliberate? We also provide stories to illustrate how the various risk types could each play out, including risks arising from unanticipated interactions of many AI systems, as well as risks from deliberate misuse, for which combined technical and policy solutions are indicated.

2 Types of Risk. Here we begin our analysis of risks organized into six risk types, which constitute an exhaustive decision tree for classifying societal harms from AI or algorithms more broadly. Types 2-6 will classify risks with reference to the intentions of the AI technology’s creators, and whether those intentions are being well served by the technology. Type 1, by contrast, is premised on no single institution being primarily responsible for creating the problematic technology. Thus, Type 1 serves as a hedge against the taxonomy of Types 2-6 being non-exhaustive.

- Type 1: Diffusion of responsibility

- Type 2: “Bigger than expected” AI impacts

- Type 3: “Worse than expected” AI impacts

- Type 4: Willful indifference

- Type 5: Criminal weaponization

- Type 6: State weaponization
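The six types above can be read as a chain of yes/no questions. The following is a minimal sketch of such a decision tree, not the paper's own figure: the `Scenario` field names, the wording of each question, and their order are assumptions inferred from the type names and the summary in the conclusion. Only the six type labels come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class RiskType(Enum):
    # Labels taken from the list of six risk types.
    DIFFUSION_OF_RESPONSIBILITY = 1
    BIGGER_THAN_EXPECTED = 2
    WORSE_THAN_EXPECTED = 3
    WILLFUL_INDIFFERENCE = 4
    CRIMINAL_WEAPONIZATION = 5
    STATE_WEAPONIZATION = 6


@dataclass(frozen=True)
class Scenario:
    # Hypothetical yes/no answers; these field names are illustrative
    # assumptions, not terminology from the paper.
    unified_creators: bool              # is one institution primarily responsible?
    expected_large_impact: bool = False  # did the creators expect large-scale impact?
    expected_harm: bool = False          # did they expect that impact to be harmful?
    intended_harm: bool = False          # was the harm itself the goal?
    state_actor: bool = False            # is the responsible party a state?


def classify(s: Scenario) -> RiskType:
    """Walk the assumed question chain and return exactly one risk type."""
    if not s.unified_creators:
        return RiskType.DIFFUSION_OF_RESPONSIBILITY
    if not s.expected_large_impact:
        return RiskType.BIGGER_THAN_EXPECTED
    if not s.expected_harm:
        return RiskType.WORSE_THAN_EXPECTED
    if not s.intended_harm:
        return RiskType.WILLFUL_INDIFFERENCE
    if not s.state_actor:
        return RiskType.CRIMINAL_WEAPONIZATION
    return RiskType.STATE_WEAPONIZATION
```

Because each question either returns a type or falls through to the next, every combination of answers lands in exactly one type, which is the sense in which a tree like this is exhaustive. For example, `classify(Scenario(unified_creators=False))` yields Type 1 regardless of the other answers.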

3 Conclusion. At this point, it is clear that AI technology can pose large-scale risks to humanity, including acute harms to individuals, large-scale harms to society, and even human extinction. Problematically, there may be no single accountable party or institution that primarily qualifies as blameworthy for such harms (Type 1). Even when there is a single accountable institution, there are several types of misunderstandings and intentions that could lead it to harmful outcomes (Types 2-6). These risk types include AI impacts that are bigger than expected, worse than expected, willfully accepted side effects of other goals, or intentional weaponization by criminals or states. For all of these risks, a combination of technical, social, and legal solutions is needed to achieve public safety.

One might argue that, because AI technology will be created by humanity, it will always serve our best interests. However, consider how many human colonies have started out dependent upon a home nation, and eventually gained sufficient independence from the home nation to revolt against it. Could humanity create an “AI industry” that becomes sufficiently independent of us to pose a global threat?

It might seem strange to consider something as abstract or diffuse as an industry posing a threat to the world. However, consider how the fossil fuel industry was built by humans, yet is presently very difficult to shut down or even regulate, due to patterns of regulatory interference exhibited by oil companies in many jurisdictions (Carpenter and Moss, 2013; Dal Bó, 2006). The same could be said for the tobacco industry for many years (Gilmore et al., 2019). The “AI industry”, if unchecked, could behave similarly, but potentially much more quickly than the oil industry, in cases where AI is able to think and act much more quickly than humans.

Finally, consider how species of ants that feed on acacia trees eventually lose the ability to digest other foods, ending up “enslaved” to protecting the health of the acacia trees as their only food source (Ed Yong, 2013). If humanity comes to depend critically on AI technology to survive, it may not be so easy to do away with even if it begins to harm us, individually or collectively.