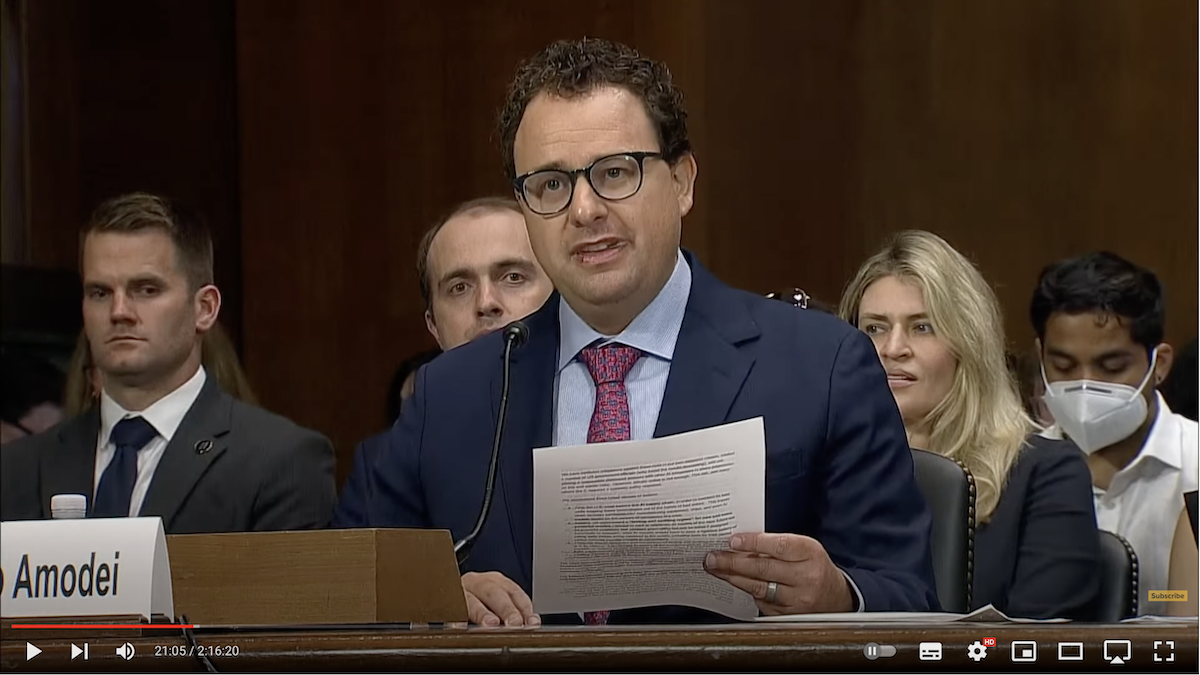

Witnesses testifying include Stuart Russell, professor of computer science at the University of California, Berkeley; Yoshua Bengio, founder and scientific director of Mila - Quebec AI Institute; and Dario Amodei, CEO of Anthropic…

Dario Amodei:

Thank you, Chairman Blumenthal. Excuse me. Chairman Blumenthal, Ranking Member Hawley, and members of the committee, thank you for the opportunity to discuss the risks and oversight of AI with you. Anthropic is a public benefit corporation that aims to lead by example in developing and publishing techniques to make AI systems safer and more controllable, and in deploying these safety techniques in state-of-the-art models. Research conducted by Anthropic includes Constitutional AI, a method for training AI systems to behave according to an explicit set of principles; early work on red teaming, or adversarial testing of AI systems to uncover bad behavior; and foundational work in AI interpretability, the science of trying to understand why AI systems behave the way they do. This month, after extensive testing, we were proud to launch our AI model, Claude 2, for US users. Claude 2 puts many of these safety improvements into practice. While we’re the first to admit that our measures are still far from perfect, we believe they’re an important step forward in a race to the top on safety. We hope we can inspire other researchers and companies to do even better.

AI will help our country accelerate progress in medical research, education, and many other areas. As you said in your opening remarks, the benefits are great. I would not have founded Anthropic if I did not believe AI’s benefits could outweigh its risks. However, it is very critical that we address the risks. My written testimony covers three categories of risks: short-term risks that we face right now, such as bias, privacy, and misinformation; medium-term risks related to misuse of AI systems as they become better at science and engineering tasks; and long-term risks related to whether models might threaten humanity as they become truly autonomous, which you also mentioned in your opening testimony. In these short remarks, I want to focus on the medium-term risks, which present an alarming combination of imminence and severity. Specifically, Anthropic is concerned that AI could empower a much larger set of actors to misuse biology.

Over the last six months, Anthropic, in collaboration with world-class biosecurity experts, has conducted an intensive study of the potential for AI to contribute to the misuse of biology. Today, certain steps in bioweapons production involve knowledge that can’t be found on Google or in textbooks and requires a high level of specialized expertise. This is one of the things that currently keeps us safe from attacks. We found that today’s AI tools can fill in some of these steps, albeit incompletely and unreliably. In other words, they’re showing the first nascent signs of danger. However, a straightforward extrapolation of today’s systems to those we expect to see in two to three years suggests a substantial risk that AI systems will be able to fill in all the missing pieces, enabling many more actors to carry out large-scale biological attacks. We believe this represents a grave threat to US national security. We have instituted mitigations against these risks in our own deployed models, briefed a number of US government officials, all of whom found the results disquieting, and are piloting a responsible disclosure process with other AI companies to share information on this and similar risks.

However, private action is not enough. This risk, and many others like it, requires a systemic policy response. We recommend three broad classes of actions. First, the US must secure the AI supply chain in order to maintain its lead while keeping these technologies out of the hands of bad actors. This supply chain runs from semiconductor manufacturing equipment to chips, and even to the security of AI models stored on the servers of companies like ours. Second, we recommend a testing and auditing regime for new and more powerful models, similar to those for cars or airplanes. AI models of the near future will be powerful machines that possess great utility but can be lethal if designed incorrectly or misused. New AI models should have to pass a rigorous battery of safety tests before they can be released to the public at all, including tests by third parties and by national security experts in government.

Third, we should recognize that the science of testing and auditing for AI systems is in its infancy. It is not currently easy to detect all the bad behaviors an AI system is capable of without first broadly deploying it to users, which is what creates the risk. Thus, it is important to fund both measurement and research on measurement to ensure a testing and auditing regime is actually effective. Funding NIST and the National AI Research Resource are two examples of ways to ensure America leads here. The three directions above are synergistic. Responsible supply chain policies help give America enough breathing room to impose rigorous standards on our own companies without ceding our national lead to adversaries, and funding measurement, in turn, makes these rigorous standards meaningful. The balance between mitigating AI’s risks and maximizing its benefits will be a difficult one, but I’m confident that our country can rise to the challenge. Thank you.