FOR EDUCATIONAL AND KNOWLEDGE SHARING PURPOSES ONLY. NOT-FOR-PROFIT.

TRANSCRIPT. In March 2023, an open letter sounded the alarm on the training of giant AI experiments. It was signed by over 30,000 individuals, including more than 2,000 industry leaders and more than 3,000 experts. Since then, there has been growing concern about out-of-control AI.

CBS NEWS: “Development of advanced AI could pose, quote, ‘profound risk to society and humanity.’”

ABC NEWS: “They say we could potentially face a dystopic future or even extinction.”

People are right to be afraid. These advanced systems could make humans extinct, and with threats escalating, it could be sooner than we think. Autonomous weapons, large-scale cyberattacks and AI-enabled bioterrorism endanger human lives today. Rampant misinformation and pervasive bias are eroding trust and weakening our society. We face an international emergency. AI developers are aware of and admit these dangers.

NBC NEWS: “And we’re, we’re putting it out there before we actually know whether it’s safe.”

OPENAI: “And the bad case, and I think this is, like, important to say, is, like, lights out for all of us.”

ANTHROPIC: “A straightforward extrapolation of today’s systems to those we expect to see in 2 to 3 years suggests a substantial risk that AI systems will be able to fill in all the missing pieces, enabling many more actors to carry out large-scale biological attacks.”

CBS NEWS: “In 6 hours, he says, the computer came up with designs for 40,000 highly toxic molecules.”

They remain locked in an arms race to create more and more powerful systems, with no clear plan for safety or control.

GOOGLE: “It can be very harmful if deployed wrongly, and we don’t have all the answers there yet, and the technology is moving fast. So does that keep me up at night? Absolutely.”

They recognize a slowdown will be necessary to prevent harm, but are unable or unwilling to say when or how. There is public consensus, and the call is loud and clear: regulate AI now.

We’ve seen regulation-driven innovation in areas like pharmaceuticals and aviation. Why would we not want the same for AI? There are major downsides we have to manage to be able to get the upsides.

There are three key areas where we are looking for US lawmakers to act. ONE: Immediately establish a registry of giant AI experiments, maintained by a US federal agency. TWO: They must build a licensing system to make labs prove their systems are safe before deployment. THREE: They must take steps to make sure developers are legally liable for the harms their products cause.

Finally, we must not stop at home. This affects everyone. At the upcoming UK summit, every concerned nation must have a seat. We are looking to create an international multi-stakeholder auditing agency. This kind of international cooperation has been possible in the past: we coordinated on cloning and we banned bioweapons, and now we can work together on AI.

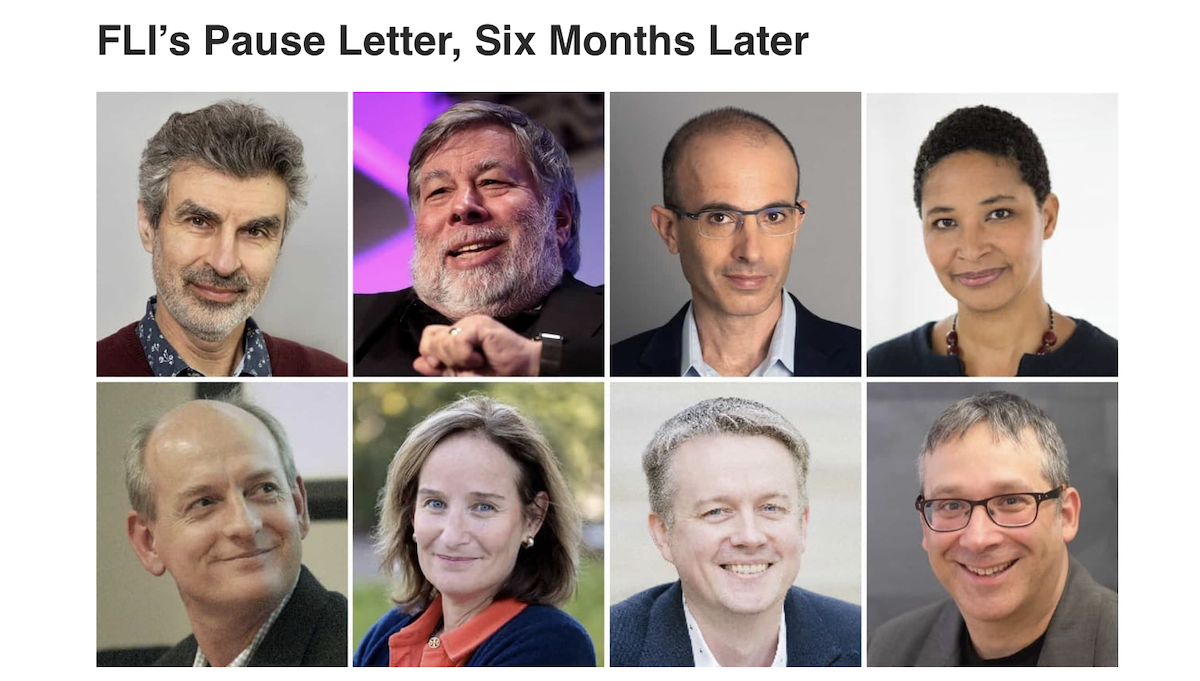

Image: Prominent signatories of the ‘Pause Giant AI Experiments’ open letter.

Future of Life Institute Newsletter: Our Pause Letter, Six Months Later. October 01, 2023

Reflections on the six-month anniversary of our open letter, our UK AI Safety Summit recommendations

As Six-Month Pause Letter Expires, Experts Call for Regulation on Advanced AI Development

On Friday September 22nd 2023, the Future of Life Institute (FLI) will mark six months since they released their open letter calling for a six month pause on giant AI experiments, which kicked off the global conversation about AI risk. It was signed by more than 30,000 experts, researchers, industry figures and other leaders.

Since then, the EU strengthened its draft AI law, the U.S. Congress has held hearings on the large-scale risks, emergency White House meetings have been convened, and polls show widespread public concern about the technology’s catastrophic potential – and Americans’ preference for a slowdown. Yet much remains to be done to prevent the harms that could be caused by uncontrolled and unchecked AI development.

“AI corporations are recklessly rushing to build more and more powerful systems, with no robust solutions to make them safe. They acknowledge massive risks, safety concerns, and the potential need for a pause, yet they are unable or unwilling to say when or even how such a slowdown might occur,” said Anthony Aguirre, FLI’s Executive Director.

Critical Questions

FLI has created a list of questions that must be answered by AI companies in order to inform the public about the risks they represent, the limitations of existing safeguards, and their steps to guarantee safety. We urge policymakers, press, and members of the public to consider these – and address them to AI corporations wherever possible.

The list also includes quotes from AI corporations about the risks, and polling data that reveals widespread concern.

Policy Recommendations

FLI has published policy recommendations to steer AI toward benefiting humanity and away from extreme risks. They include: requiring registration for large accumulations of computational resources, establishing a rigorous process for auditing risks and biases of powerful AI systems, and requiring licenses for the deployment of these systems that would be contingent upon developers proving their systems are safe, secure, and ethical.

“Our letter wasn’t just a warning; it proposed policies to help develop AI safely and responsibly. 80% of Americans don’t trust AI corporations to self-regulate, and a bipartisan majority support the creation of a federal agency for oversight,” said Aguirre. “We need our leaders to have the technical and legal capability to steer and halt development when it becomes dangerous. The steering wheel and brakes don’t even exist right now.”

Bletchley Park

Later this year, global leaders will convene in the United Kingdom to discuss the safety implications of advanced AI development. FLI has also released a set of recommendations for leaders leading up to and after the event.

“Addressing the safety risks of advanced AI should be a global effort. At the upcoming UK summit, every concerned party should have a seat at the table, with no ‘second-tier’ participants,” said Max Tegmark, President of FLI. “The ongoing arms race risks global disaster and undermines any chance of realizing the amazing futures possible with AI. Effective coordination will require meaningful participation from all of us.”

Signatory Statements

Some of the letter’s most prominent signatories (Apple co-founder Steve Wozniak, AI ‘godfather’ Yoshua Bengio, Skype co-founder Jaan Tallinn, political scientist Danielle Allen, national security expert Rachel Bronson, historian Yuval Noah Harari, psychologist Gary Marcus, and leading expert Stuart Russell) also made statements about the expiration of the six-month pause letter.

Dr Yoshua Bengio

Professor of Computer Science and Operations Research, University of Montreal and Scientific Director, Montreal Institute for Learning Algorithms

“The last six months have seen a groundswell of alarm about the pace of unchecked, unregulated AI development. This is the correct reaction. Governments and lawmakers have shown great openness to dialogue and must continue to act swiftly to protect lives and safeguard our society from the many threats to our collective safety and democracies.”

Dr Stuart Russell

Professor of Computer Science and Smith-Zadeh Chair, University of California, Berkeley

“In 1951, Alan Turing warned us that success in AI would mean the end of human control over the future. AI as a field ignored this warning, and governments too. To express my frustration with this, I made up a fictitious email exchange, where a superior alien civilization sends an email to humanity warning of its impending arrival, and humanity sends back an out-of-office auto-reply. After the pause letter, humanity and its governments returned to the office and, finally, read the email from the aliens. Let’s hope it’s not too late.”

Steve Wozniak

Co-founder, Apple Inc.

“The out-of-control development and proliferation of increasingly powerful AI systems could inflict terrible harms, either deliberately or accidentally, and will be weaponized by the worst actors in our society. Leaders must step in to help ensure they are developed safely and transparently, and that creators are accountable for the harms they cause. Crucially, we desperately need an AI policy framework that holds human beings responsible, and helps prevent horrible people from using this incredible technology to do evil things.”

Dr Danielle Allen

James Bryant Conant University Professor, Harvard University

“It’s been encouraging to see public sector leaders step up to the enormous challenge of governing the AI-powered social and economic revolution we find ourselves in the midst of. We need to mitigate harms, block bad actors, steer toward public goods, and equip ourselves to see and maintain human mastery over emergent capabilities to come. We humans know how to do these things—and have done them in the past—so it’s been a relief to see the acceleration of effort to carry out these tasks in these new contexts. We need to keep the pace up and cannot slacken now.”

Prof. Yuval Noah Harari

Professor of History, Hebrew University of Jerusalem

“Suppose we were told that a fleet of spaceships with highly intelligent aliens has been spotted, heading for Earth, and they will be here in a few years. Suppose we were told these aliens might solve climate change and cure cancer, but they might also enslave or even exterminate us. How would we react to such news? Well, six months ago some of the world’s leading AI experts warned us that an alien intelligence is indeed heading our way – only that this alien intelligence isn’t coming from outer space, it is coming from our own laboratories. Make no mistake: AI is an alien intelligence. It can make decisions and create ideas in a radically different way than human intelligence. AI has enormous positive potential, but it also poses enormous threats. We must act now to ensure that AI is developed in a safe way, or within a few years we might lose control of our planet and our future to an alien intelligence.”

Dr Rachel Bronson

President and CEO, Bulletin of the Atomic Scientists

“The Bulletin of the Atomic Scientists, the organization that I run, was founded by Manhattan Project scientists like J. Robert Oppenheimer who feared the consequences of their creation. AI is facing a similar moment today, and, like then, its creators are sounding an alarm. In the last six months we have seen thousands of scientists – and society as a whole – wake up and demand intervention. It is heartening to see our governments starting to listen to the two thirds of American adults who want to see regulation of generative AI. Our representatives must act before it is too late.”

Jaan Tallinn

Co-founder, Skype and FastTrack/Kazaa

“I supported this letter to make the growing fears of more and more AI experts known to the world. We wanted to see how people responded, and the results were mindblowing. The public are very, very concerned, as confirmed by multiple subsequent surveys. People are justifiably alarmed that a handful of companies are rushing ahead to build and deploy these advanced systems, with little-to-no oversight, without even proving that they are safe. People, and increasingly the AI experts, want regulation even more than I realized. It’s time they got it.”

Dr Gary Marcus

Professor of Psychology and Neural Science, NYU

“In the six months since the pause letter, there has been a lot of talk, and lots of photo opportunities, but not enough action. No new laws have passed. No major tech company has committed to transparency into the data they use to train their models, nor to revealing enough about their architectures to others to mitigate risks. Nobody has found a way to keep large language models from making stuff up, nobody has found a way to guarantee that they will behave ethically. Bad actors are starting to exploit them. I remain just as concerned now as I was then, if not more so.”

Learn more:

A path to controllable AGI?: Steve Omohundro and FLI’s Max Tegmark published a paper outlining how mathematical proofs of safety, e.g., written into “proof-carrying code”, are powerful, accessible tools for developing safe artificial general intelligences (AGIs).

Why this matters: As Omohundro and Tegmark argue, creating these “provably safe systems” is the only way we can guarantee safe, controlled AGI, in contrast to the possible extinction risk presented by unsafe/uncontrolled AGI.

On GPS: Former Google CEO on the challenge of AI guardrails (Fareed Zakaria, GPS)

- Former Google CEO Eric Schmidt tells Fareed why it’s so difficult to build guardrails around artificial intelligence and ensure the technology is used responsibly.

How former Google exec Eric Schmidt thinks AI could become a weapon of war

Existential risk from artificial general intelligence – Wikipedia