AI Tracker #6: Russian Roulette, Anyone?

A lot of high-quality AI safety conversation happens on Twitter. Unfortunately, this means great write-ups and other content get buried within days. We’ve set up AI Tracker to aggregate this content.

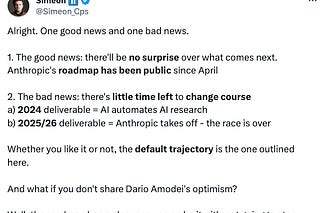

10-25% AI Ends Humanity… Keep Calm and Scale On?

Dario Amodei, CEO of the AGI lab Anthropic, appeared on The Logan Bartlett Show and revealed that his own estimate of the chance of human extinction or a similar catastrophe from advanced AI is between 10 and 25%.

As @AISafetyMemes pointed out, “Those are Russian Roulette odds.”

Former British Government Environment Minister Lord Zac Goldsmith was not happy either.

Sasha Chapin states his case, as does Cate Hall.

Don’t Call It A Comeback

Singer Grimes reminds us of the surreal situation we’re in, where many developers of advanced AI openly admit their creation “has a probability of ending civilization”.

Are The Adults In The Room?

Sasha Chapin, who was previously more skeptical of AI safety positions, has moved closer to them. In a longform tweet, he explains:

Large Language Muddles

According to a new paper by Yong, Menghini, and Bach, “Low-Resource Languages Jailbreak GPT-4,” getting around GPT-4’s safeguards is as easy as translating your dangerous request into a “low-resource language,” such as Scots Gaelic or Hmong, and translating its output back to your target language.

Hints From Hinton

In his 60 Minutes interview, Geoffrey Hinton, the Godfather of AI and a Turing Award winner, addressed his concerns about AI, in particular how it is ‘grown.’

Interviewer: “What do you mean? ‘We don’t know exactly how it works?’ It was designed by people?”

Hinton: “No, it wasn’t. What we did was we designed the learning algorithm. That’s a bit like designing the principle of evolution. But when this learning algorithm then interacts with data, it produces complicated neural networks that are good at doing things, but we don’t really understand exactly how they do those things.”

Tallinn’s Tips

Rob Wiblin tweets about what Jaan Tallinn would like to see to reduce the risk from advanced AI.

* * *

If we miss something good, let us know: send us tips here. Thanks for reading AGI Safety Weekly! Subscribe for free to receive new AI Tracker posts every Friday.