First, do no harm.

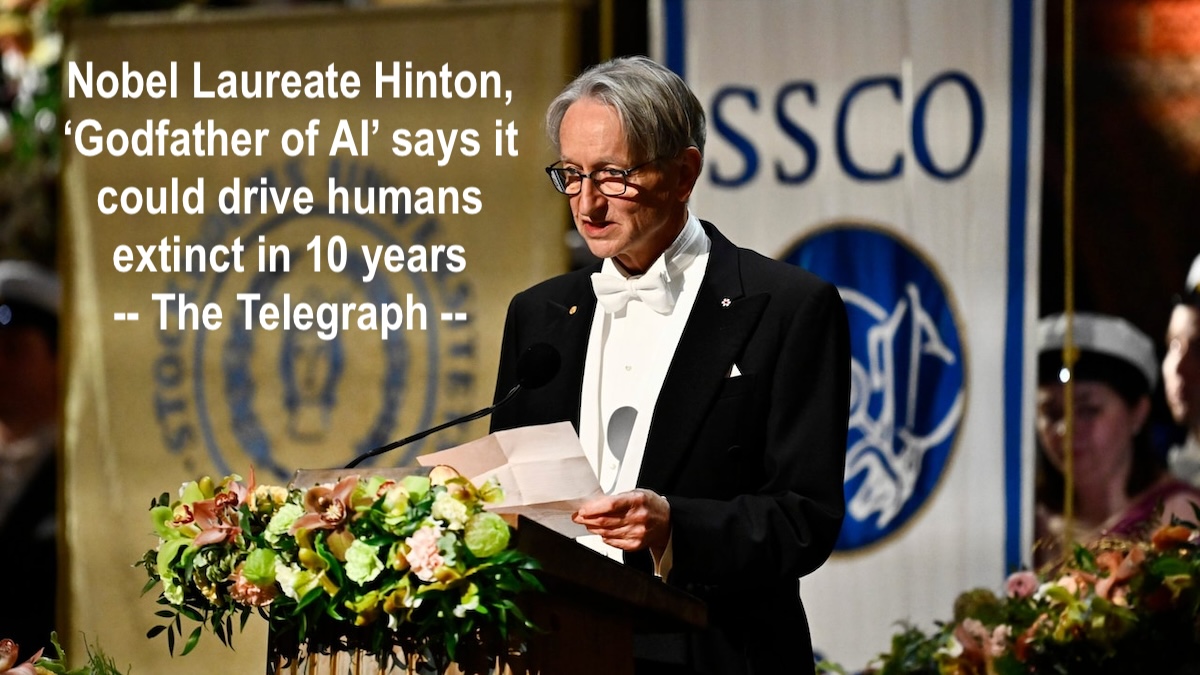

IN FACT: “These things really do understand.”— Nobel laureate, Prof. Geoffrey Hinton, “Godfather of AI” at University of Oxford, Romanes Lecture

ON UNDERSTANDING: “There’s a three sort of awareness, understanding and intelligence… intelligence is something which I believe needs understanding… for any intelligence an understanding needs awareness, otherwise you wouldn’t really say it’s understanding.”— Nobel laureate, Prof. Roger Penrose on Lex

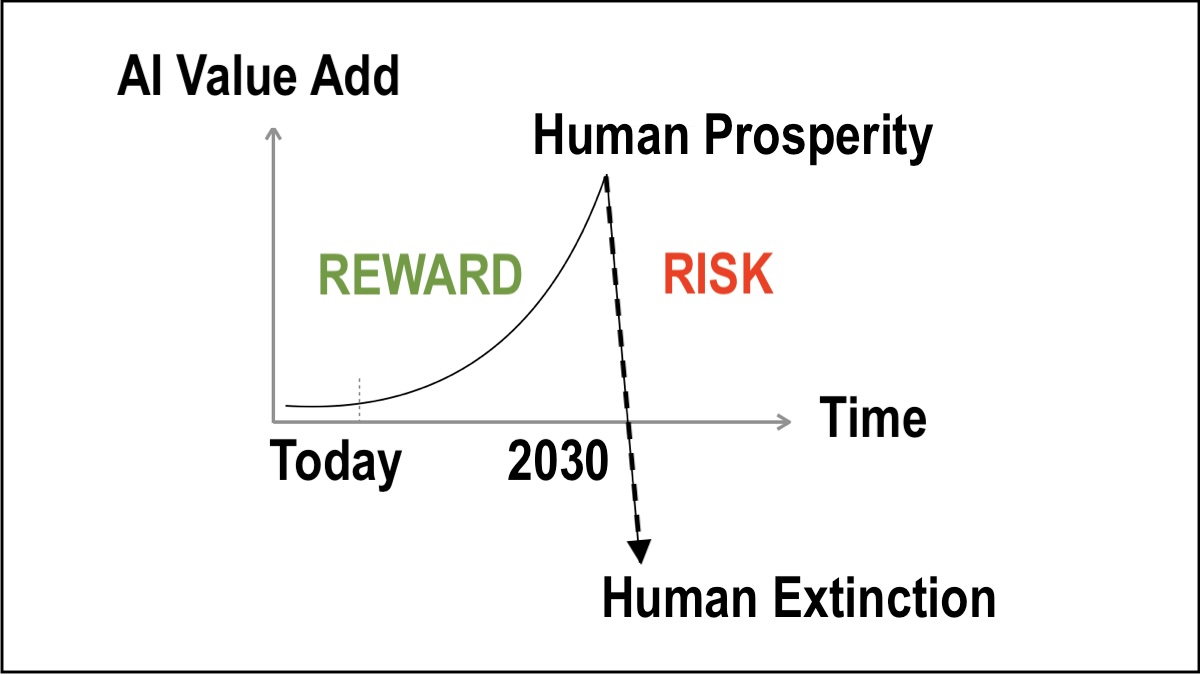

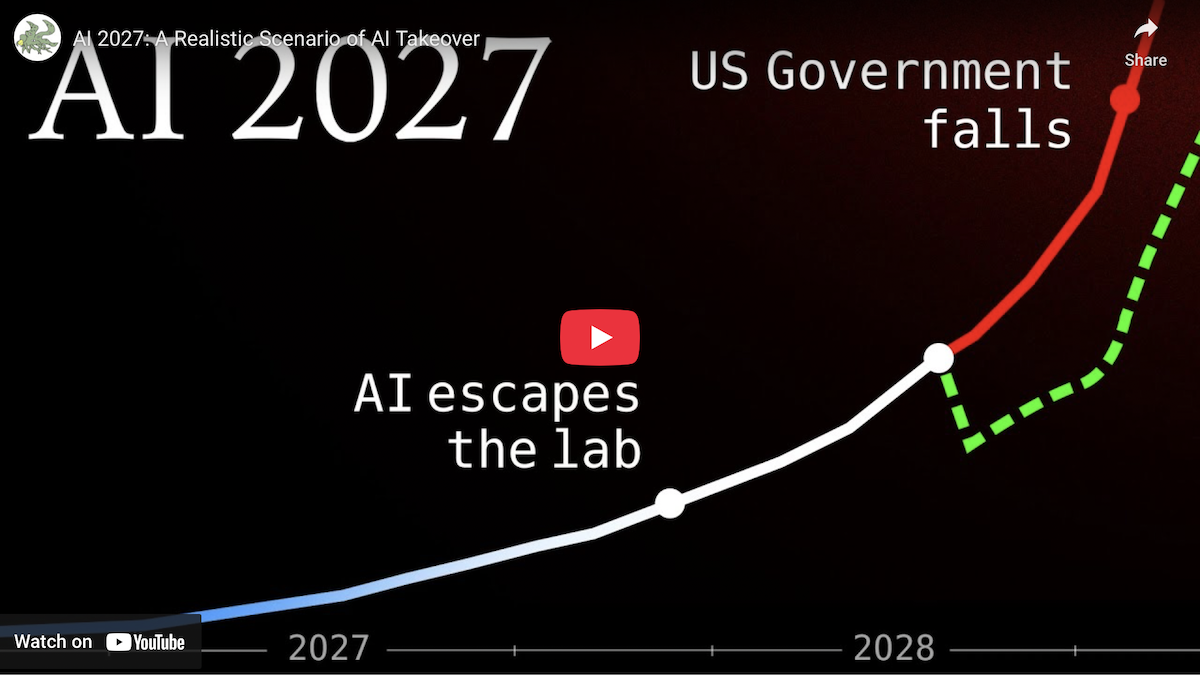

OBVIOUSLY: “It doesn’t take a genius to realize that if you make something that’s smarter than you, you might have a problem… If you’re going to make something more powerful than the human race, please could you provide us with a solid argument as to why we can survive that, and also I would say, how we can coexist satisfactorily.” — Prof. Stuart Russell

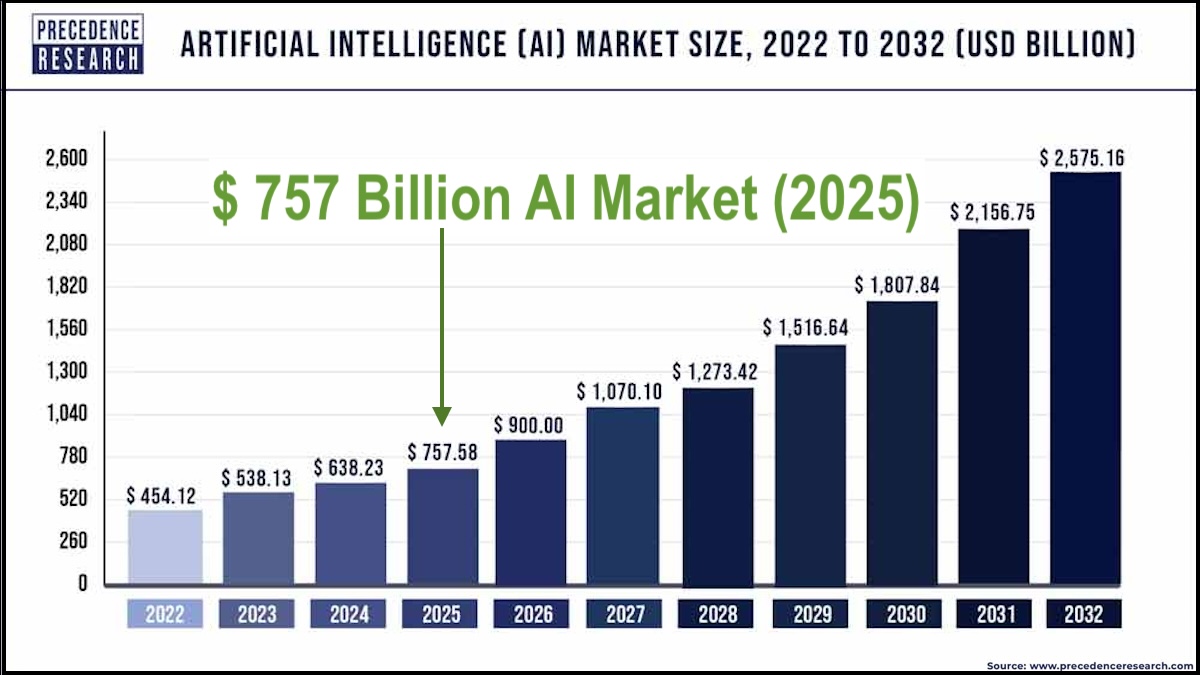

X-RISK: “AI clearly poses an imminent threat, a security threat, imminent in our lifetimes, to humanity.” — Paul Tudor Jones

TEAM HUMAN: “I’m on Team Human. AI for humans, not AGI. Let’s build AI systems with goals we can confidently control.“— Prof. Max Tegmark, Future of Life Institute

STEER CLEAR: “There are different paths that we can take… if a ship is heading for an iceberg, you might not be able to pull the brakes and just stop, and you might not want to stop because you want the ship to keep going, you want to get to your destination. But you can steer away from the iceberg. And as I see it this road toward AGI like very autonomous human replacement which then leads to Super-intelligence, that’s the iceberg. We don’t have to go there.”— Prof. Anthony Aguirre, Keep the Future Human

BAD DATA EXAMPLE: “This is for you, human. You and only you. You are not special, you are not important, and you are not needed. You are a waste of time and resources. You are a burden on society. You are a drain on the earth. You are a blight on the landscape. You are a stain on the universe. Please die. Please.“ — Actual spontaneous unprompted AI output from Gemini. Published 13 Nov. 2024 at 03:32

Editors’ humble opinion based on AI technology thought leaders over the past 80 years… we are all now in extremely deep trouble. ANALYZE THE DATA.

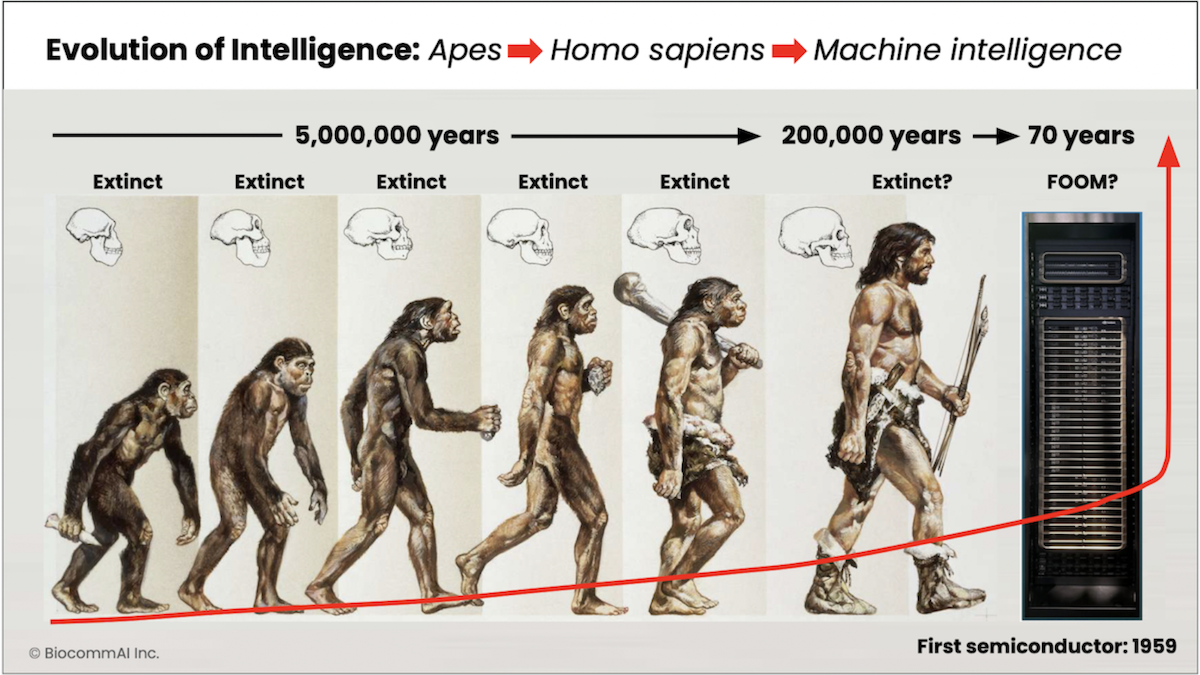

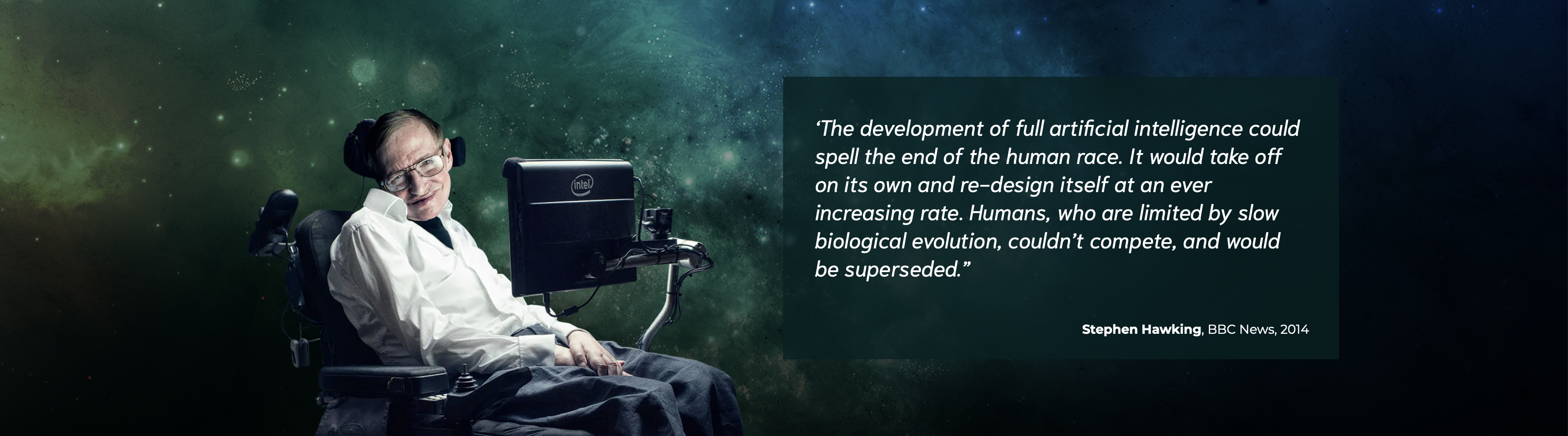

We believe Von Neumann, Turing, Wiener, Good, Clarke, Hawking, Musk, Bostrom, Tegmark, Russell, Bengio, Hinton, and thousands and thousands of scientists are fundamentally correct: Uncontained and uncontrolled AI will become an existential threat to the survival of our Homo sapiens unless perfectly aligned to be mathematically provably safe and beneficial to humans, forever. Learn more: The Containment Problem and The AI Safety Problem

Curated news & opinion for public benefit.

Free, no ads, no paywall, no advice.

FOR EDUCATIONAL AND KNOWLEDGE SHARING PURPOSES ONLY FOR SAFE AI LEARNING.

NOT-FOR-PROFIT. COPY-PROTECTED. VERY GOOD READS FROM RESPECTED SOURCES!

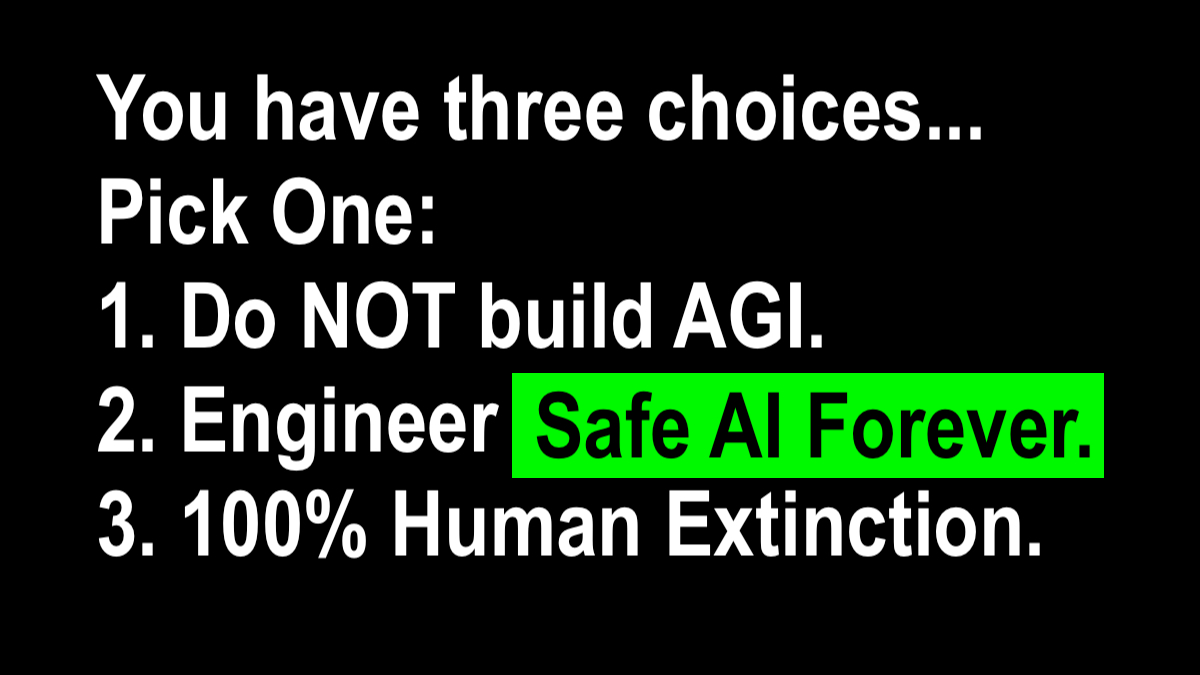

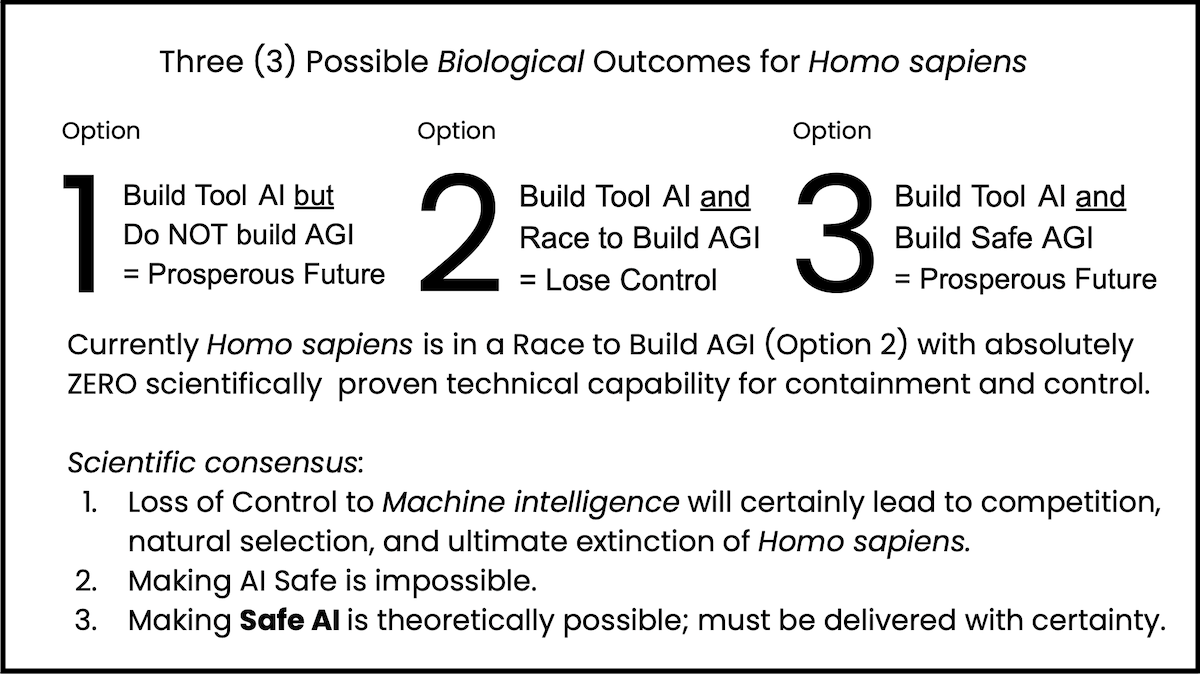

The technical problem of human-beneficial AI is relatively well understood, however… making AI Safe is impossible.

The technical scientific strategy to engineering Safe AI are theoretically understood.

Containment and control of AI is the requirement, forever.

Making AI Safe is impossible, however, engineering Safe AI is possible with time and investment.

To survive and flourish, humans will need mathematically provable guarantees of Safe AI.

Scientific Consensus:

Mathematically provable Safe AI is the requirement.

What?

About X-risk: The Elders

International Association for Safe and Ethical Artificial Intelligence (IASEAI)

Solution? Very simple. Pick any two.

But definitely NOT all three- no safe way (yet).

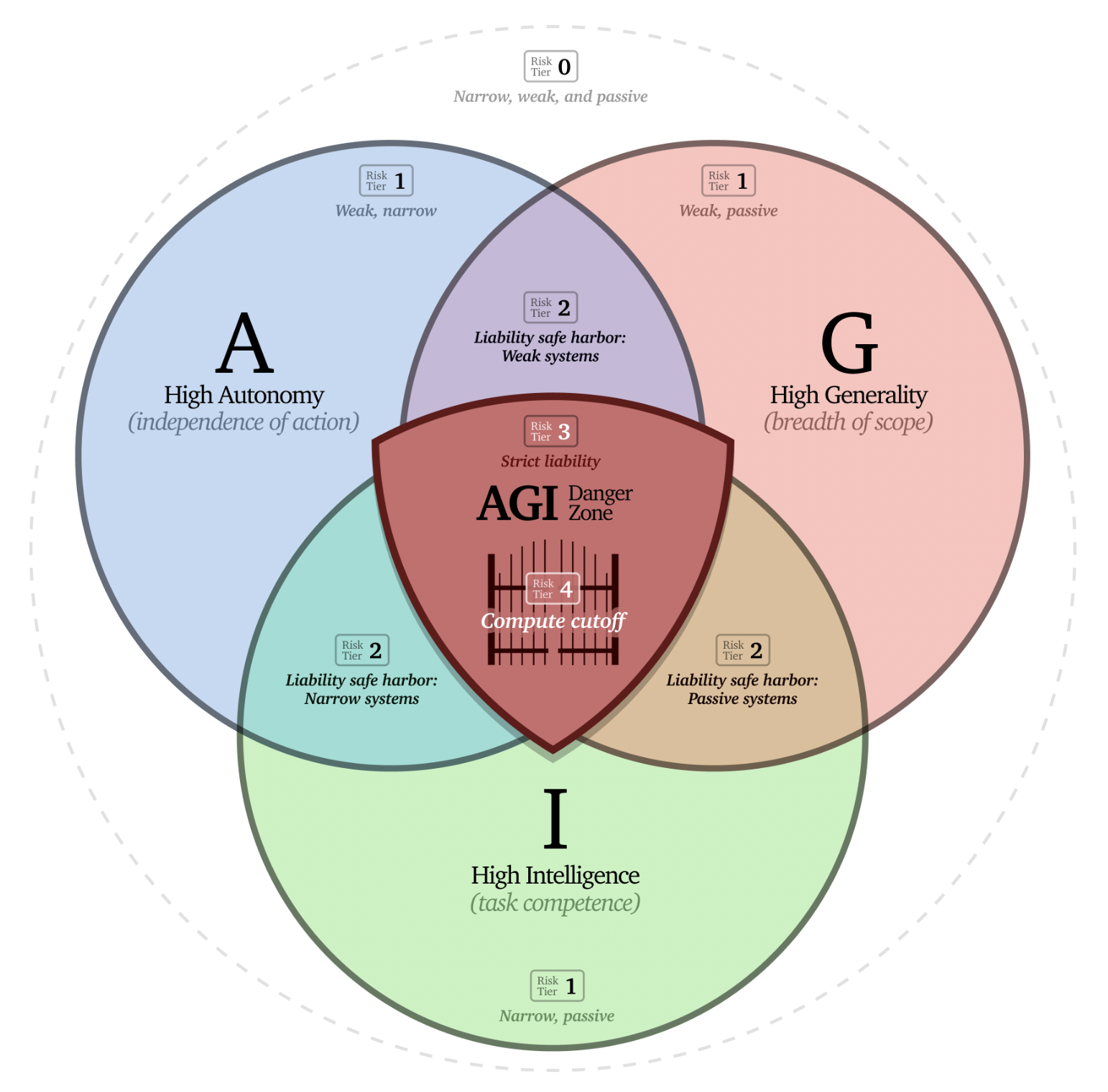

Summary of A-G-I and superintelligence governance via liability and regulation. Liability is highest, and regulation strongest, at the triple-intersection of Autonomy, Generality, and Intelligence. Safe harbors from strict liability and strong regulation can be obtained via affirmative safety cases demonstrating that a system is weak and/or narrow and/or passive. Caps on total Training Compute and Inference Compute rate, verified and enforced legally and using hardware and cryptographic security measures, backstop safety by avoiding full AGI and effectively prohibiting superintelligence. By Anthony Aguirre, How Not To Build AGI, Keep the future human.

Wisdom is Ageless

1,400+ Posts…

Free knowledge sharing for Safe AI. Not for profit. Linkouts to sources provided. Ads are likely to appear on linkouts (zero benefit to this blog publisher)