Bestseller: Superintelligence: Paths, Dangers, Strategies by Nick Bostrom

The existential risk: Containment and control of Superintelligent AI is an extreme engineering challenge. The future survival of humanity is at stake.

Control methods for safe containment of Superintelligent AI include:

1. Boxing – restricted access to the outside world by Superintelligent AI

2. Incentivising – using social or technical rewards for Superintelligent AI

3. Stunting – constraints on cognitive abilities of Superintelligent AI

4. Tripwires – detection of activity beyond expected behaviour automatically closes down the Superintelligent AI system
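
As an illustration of the fourth method, a tripwire can be sketched as a monitor that compares observed readings against an expected range and halts the system on any violation. This is a minimal sketch, not a method taken from the book: the class names `Tripwire` and `MonitoredSystem`, the metric names, and the thresholds are all hypothetical, and a real containment mechanism would need to be far more robust than a range check.

```python
from dataclasses import dataclass, field


@dataclass
class Tripwire:
    """Hypothetical tripwire: defines the expected range for one reading."""
    name: str
    lower: float
    upper: float

    def is_tripped(self, reading: float) -> bool:
        # Any reading outside [lower, upper] counts as unexpected behaviour.
        return not (self.lower <= reading <= self.upper)


@dataclass
class MonitoredSystem:
    """Hypothetical system wrapper that shuts down when a tripwire fires."""
    tripwires: list[Tripwire]
    running: bool = True
    tripped_by: list[str] = field(default_factory=list)

    def observe(self, readings: dict[str, float]) -> bool:
        """Check readings against every tripwire; return True if still running."""
        if not self.running:
            return False  # once shut down, the system stays down
        for wire in self.tripwires:
            value = readings.get(wire.name)
            # Assumption: a missing reading is treated as a violation (fail closed).
            if value is None or wire.is_tripped(value):
                self.tripped_by.append(wire.name)
        if self.tripped_by:
            self.running = False  # automatic shutdown
        return self.running


system = MonitoredSystem([
    Tripwire("outbound_requests", 0, 10),  # illustrative thresholds only
    Tripwire("memory_gb", 0, 64),
])
print(system.observe({"outbound_requests": 3, "memory_gb": 32}))
print(system.observe({"outbound_requests": 50, "memory_gb": 32}))
print(system.tripped_by)
```

The shutdown is deliberately one-way in this sketch: once any tripwire fires, later in-range readings do not restart the system.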

Important Note: Since the book's publication in 2014, AI technology has developed faster than many researchers expected. Some forecasts now place the arrival of superintelligent machines between 2025 and 2030 (if it has not already happened without our knowledge).

Scientific Consensus: Mathematically provable safe deployment of Superintelligent AI is a requirement for the future survival of the human race.

Learn More:

- Nick Bostrom Home Page

- Thinking Inside the Box: Controlling and Using an Oracle AI (2012) Stuart Armstrong, Anders Sandberg, Nick Bostrom

- Provably Safe Systems: The Only Path to Controllable AGI. (06 SEPT 2023) Tegmark and Omohundro

- Current themes in mechanistic interpretability research (16th NOV 2022, LessWrong) by Lee Sharkey, Sid Black

- MIT Mechanistic Interpretability Conference 2023 Tegmark.org

- Provably Safe AGI – MIT Mechanistic Interpretability Conference (MAY 7, 2023) Steve Omohundro

- ALIGNMENT MAP by The Future of Life Institute (2023)

- The Containment Problem

Book reviews and opinions…

- Superintelligence by Nick Bostrom and A Rough Ride to the Future by James Lovelock – review | The Guardian | 17 July 2014

- Book Review: Superintelligence (Paths, Dangers, Strategies) by Nick Bostrom | Medium | Ruslan Kozhuharov | Aug 30, 2018

- Book Review: Superintelligence — Paths, Dangers, Strategies | Yale Scientific | By Zachary Miller | January 18, 2015

- Superintelligence — Paths, Dangers, Strategies by Nick Bostrom | How to Learn Machine Learning

- Superintelligence by Nick Bostrom – Book Review | Live Forever Club | 12-Nov-2019

Nick Bostrom Interview by Tim Adams | The Guardian | 12 JUNE 2016

- On humanity and artificial intelligence: “Before the prospect of an intelligence explosion, we humans are like small children playing with a bomb. We have little idea when the detonation will occur, though if we hold the device to our ear we can hear a faint ticking sound.”

- According to Oxford philosopher Nick Bostrom, superintelligent machines are a greater threat to humanity than climate change.

Learn More:

- Sharing the World with Digital Minds (2020). Draft. Version 1.8. Carl Shulman & Nick Bostrom

- Strategic Implications of Openness in AI Development (2017). Nick Bostrom, Future of Humanity Institute, University of Oxford

- Existential Risk Prevention as Global Priority (2012). Nick Bostrom, Faculty of Philosophy & Oxford Martin School, University of Oxford

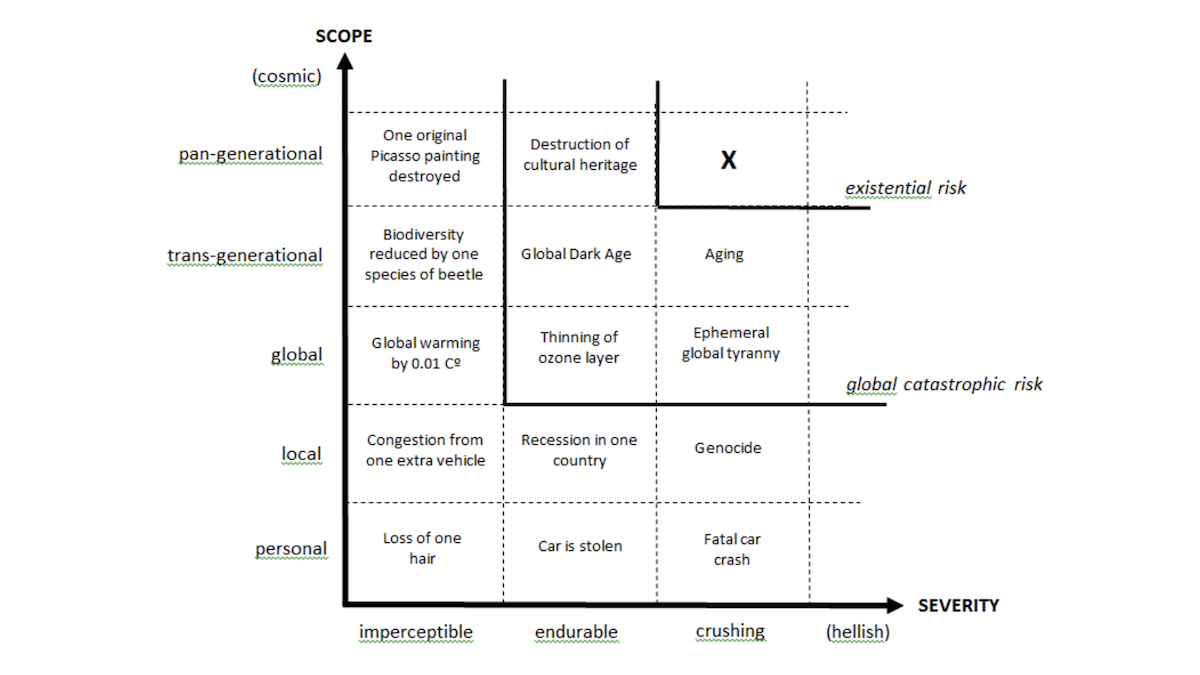

Figure 2: Qualitative risk categories. The scope of a risk can be personal (affecting only one person), local (affecting some geographical region or a distinct group), global (affecting the entire human population or a large part thereof), trans-generational (affecting humanity for numerous generations), or pan-generational (affecting humanity over all, or almost all, future generations). The severity of a risk can be classified as imperceptible (barely noticeable), endurable (causing significant harm but not completely ruining quality of life), or crushing (causing death or a permanent and drastic reduction of quality of life).

The area marked “X” in Figure 2 represents existential risks. This is the category of risks that have (at least) crushing severity and (at least) pan-generational scope. As noted, an existential risk is one that threatens to cause the extinction of Earth-originating intelligent life or the permanent and drastic failure of that life to realize its potential for desirable development. In other words, an existential risk jeopardizes the entire future of humankind.
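
The two-axis classification in Figure 2 can be sketched as a pair of ordered scales, with the "X" region defined by the "at least crushing, at least pan-generational" rule quoted above. This is only an illustrative encoding: the names `Scope`, `Severity`, and `is_existential` and the integer ranks are assumptions made for the example, not part of the paper.

```python
from enum import IntEnum


class Scope(IntEnum):
    """Scope categories from Figure 2, ordered from narrowest to widest."""
    PERSONAL = 1
    LOCAL = 2
    GLOBAL = 3
    TRANS_GENERATIONAL = 4
    PAN_GENERATIONAL = 5


class Severity(IntEnum):
    """Severity categories from Figure 2, ordered from mildest to worst."""
    IMPERCEPTIBLE = 1
    ENDURABLE = 2
    CRUSHING = 3


def is_existential(scope: Scope, severity: Severity) -> bool:
    # The "X" region: (at least) crushing severity and
    # (at least) pan-generational scope.
    return scope >= Scope.PAN_GENERATIONAL and severity >= Severity.CRUSHING


print(is_existential(Scope.PAN_GENERATIONAL, Severity.CRUSHING))
print(is_existential(Scope.GLOBAL, Severity.CRUSHING))
print(is_existential(Scope.PAN_GENERATIONAL, Severity.ENDURABLE))
```

Using `IntEnum` keeps the "at least" comparisons explicit; the numeric values carry no meaning beyond ordering the categories.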