POLITICO. Power grab by France, Germany and Italy threatens to kill EU’s AI bill.

Landmark law hangs in the balance as big countries drag their feet on advanced AI rules.

BY GIAN VOLPICELLI

20 November 2023

Europe’s three largest economies have turned against regulation of the most powerful types of artificial intelligence, putting the fate of the bloc’s pioneering Artificial Intelligence Act on the line.

France, Germany and Italy are stonewalling negotiations over a controversial section of the EU’s draft AI legislation so it doesn’t hamper Europe’s own development of “foundation models,” AI infrastructure that underpins large language models like OpenAI’s GPT and Google’s Bard.

Government officials argue that slapping tough restrictions on these newfangled models would harm the EU’s own champions in the race to harness AI technology.

In a joint paper shared with fellow EU governments, obtained by POLITICO, the three European heavyweights said Europe needs a “regulatory framework which fosters innovation and competition, so that European players can emerge and carry our voice and values in the global race of AI.” The paper suggests self-regulating foundation models through company pledges and codes of conduct.

The Franco-German-Italian charge pits them against European lawmakers, who strongly want to rein in foundation models.

“This is a declaration of war,” said a member of the European Parliament’s negotiating team, who was granted anonymity because of the talks’ sensitivity.

The deadlock could even mean the end of talks on the Artificial Intelligence Act altogether. Inter-institutional negotiations on the law have been at a standstill since mid-November, when parliamentary staffers walked out of a meeting with government representatives from the EU Council and European Commission officials after hearing of the three countries’ resistance to regulating foundation models.

Talks are under intense pressure, as negotiators face a December 6 deadline. With the European Parliament up for reelection in June 2024, the window of opportunity to pass the law is quickly closing.

An un-European act

The push to dial down Europe’s regulatory model is surprising because it breaks with the Continent’s traditional thinking that the tech sector needs stronger regulation.

What’s more, it comes at a time when leading artificial intelligence industry chiefs have called for strict regulation of their technology and the United States, a long-time supporter of light-touch tech laws, is rolling out its own regulatory agenda via a sweeping Executive Order on AI.

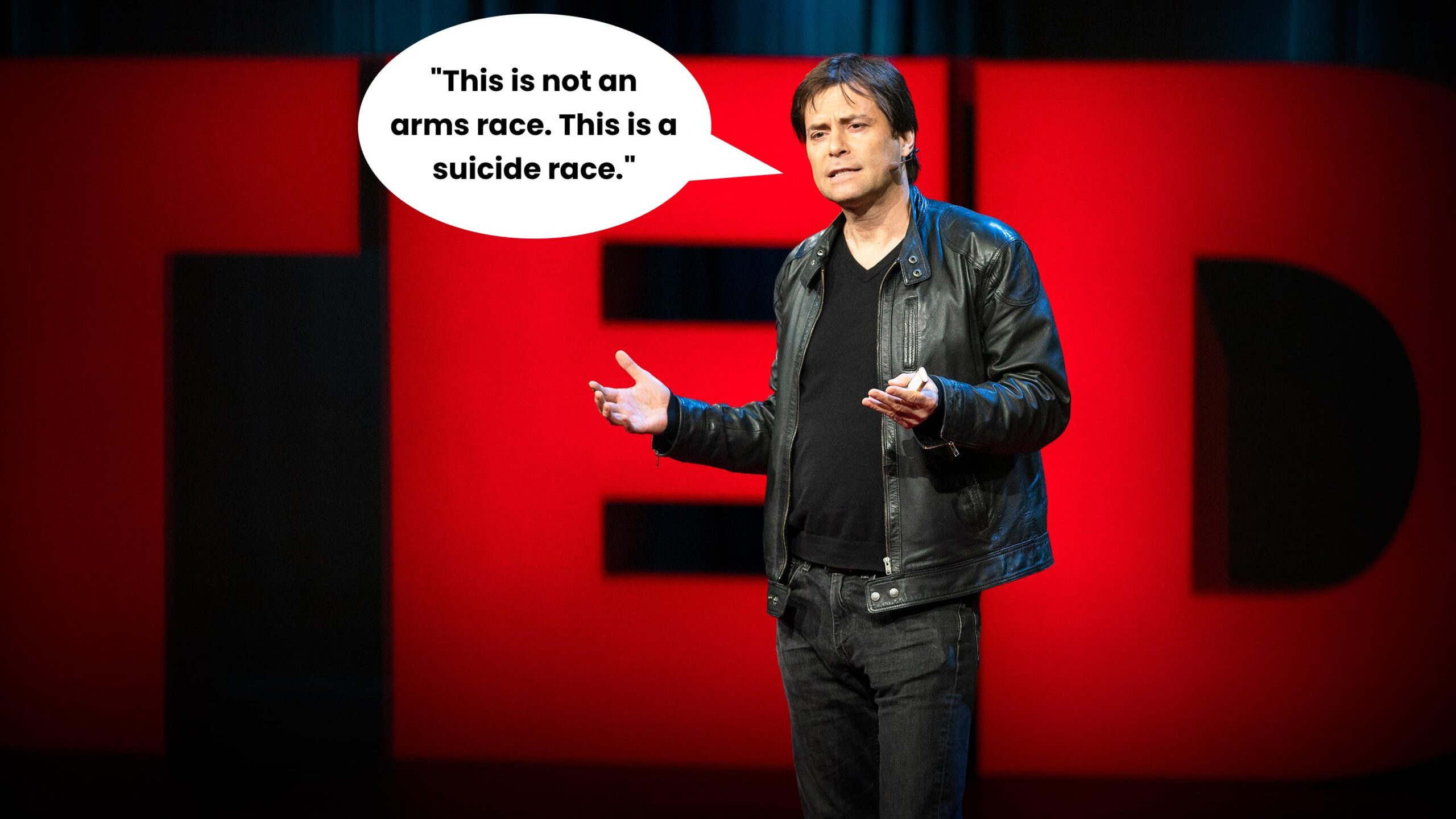

Ignoring foundation models (and consequently the most cutting-edge among them, called “frontier models” by industry insiders) would be “crazy” and risk making the EU’s AI Act “like the law of the jungle,” Canadian computer scientist Yoshua Bengio, a leading voice on AI policy, said in an interview last week.

“We might end up in a world where benign AI systems are heavily regulated in the EU … and the biggest systems that are the most dangerous, the most potentially harmful, are not regulated,” Bengio added.

The European Union is broadly backing bans and tough rules on AI applications based on their use in sensitive scenarios like education, immigration and the workplace. Foundation models can perform multiple tasks, making it tricky to predict their risk level.

In their proposal, European parliamentarians planned to add obligations for foundation model developers, independent of the system’s intended use, including compulsory model testing by third-party experts. Some obligations would only apply to models undergirded by more computing power, creating a two-tier set of rules that the three governments explicitly trashed in their paper.

While other EU countries, notably Spain, which holds the Council’s rotating presidency, favor expanding the AI Act’s scope to cover foundation models, there is now little room for the Council to depart from the Big Three’s position.