A very good read from a respected source!

FT. OpenAI and the rift at the heart of Silicon Valley.

The tech industry is divided over how best to develop AI, and whether it’s possible to balance safety with the pursuit of profits.

Richard Waters in San Francisco and John Thornhill in London

24 November, 2023

In the past year, Sam Altman, chief executive of OpenAI, has become the embodiment of a contradiction at the heart of artificial intelligence.

Buoyed by the euphoria following the launch of the company’s AI-powered chatbot ChatGPT 12 months ago, the 38-year-old entrepreneur took his brand of Silicon Valley optimism on a global tour to meet heads of state and promote AI’s potential.

But at the same time, he has also sounded a warning: that advanced AI systems like the ones OpenAI hopes one day to build might lead to the extinction of the human race.

That jarring disparity has left many onlookers wondering why companies such as OpenAI are racing each other to be the first to build a potentially devastating new technology.

It has also been on full display in the past week, as the OpenAI boss was first ejected from his job, then reinstated after the threat of a mass defection by staff of the San Francisco-based AI start-up.

The four members of OpenAI’s board who threw Altman out have not explained the immediate cause, except to say that he had not been “consistently candid” in his dealings with them.

But tensions had been building inside the company for some time, as Altman’s ambition to turn OpenAI into Silicon Valley’s next tech powerhouse rubbed up against the company’s founding mission to put safety first. Altman’s reinstatement late on Tuesday has taken some of the heat out of the drama — at least, for now.

A shake-up on the board has brought in more experienced heads, in the shape of ex-chair of Twitter Bret Taylor and former US Treasury secretary Larry Summers.

Changes to the way the company is run are being considered. But nothing has been settled and for now, with Altman in charge again though stripped of his board seat, it’s back to business as usual.

- Sadly, the OpenAI crisis shows that well-motivated governance schemes are less robust than one would hope – JAAN TALLINN, THE CO-FOUNDER OF SKYPE

OpenAI was launched as a research firm dedicated to building safe AI for the benefit of all humanity. But the drama of recent days suggests it has failed to deal with the outsized success that has flowed from its own technical advances.

It has also thrown the question of how to control AI into sharp relief: was the crisis caused by a flaw in the company’s design, meaning that it has little bearing on the prospects for other attempts to balance AI safety and the pursuit of profits? Or is it a glimpse into a wider rift in the industry with serious implications for its future — and ours?

When OpenAI was founded, its architects “recognised the commercial impulse could lead to disaster”, says Stuart Russell, a professor of computer science at the University of California, Berkeley who was one of the first to sound a warning about the existential risks of AI. “Now they’ve succumbed to the commercial impulse themselves.”

A clash of beliefs

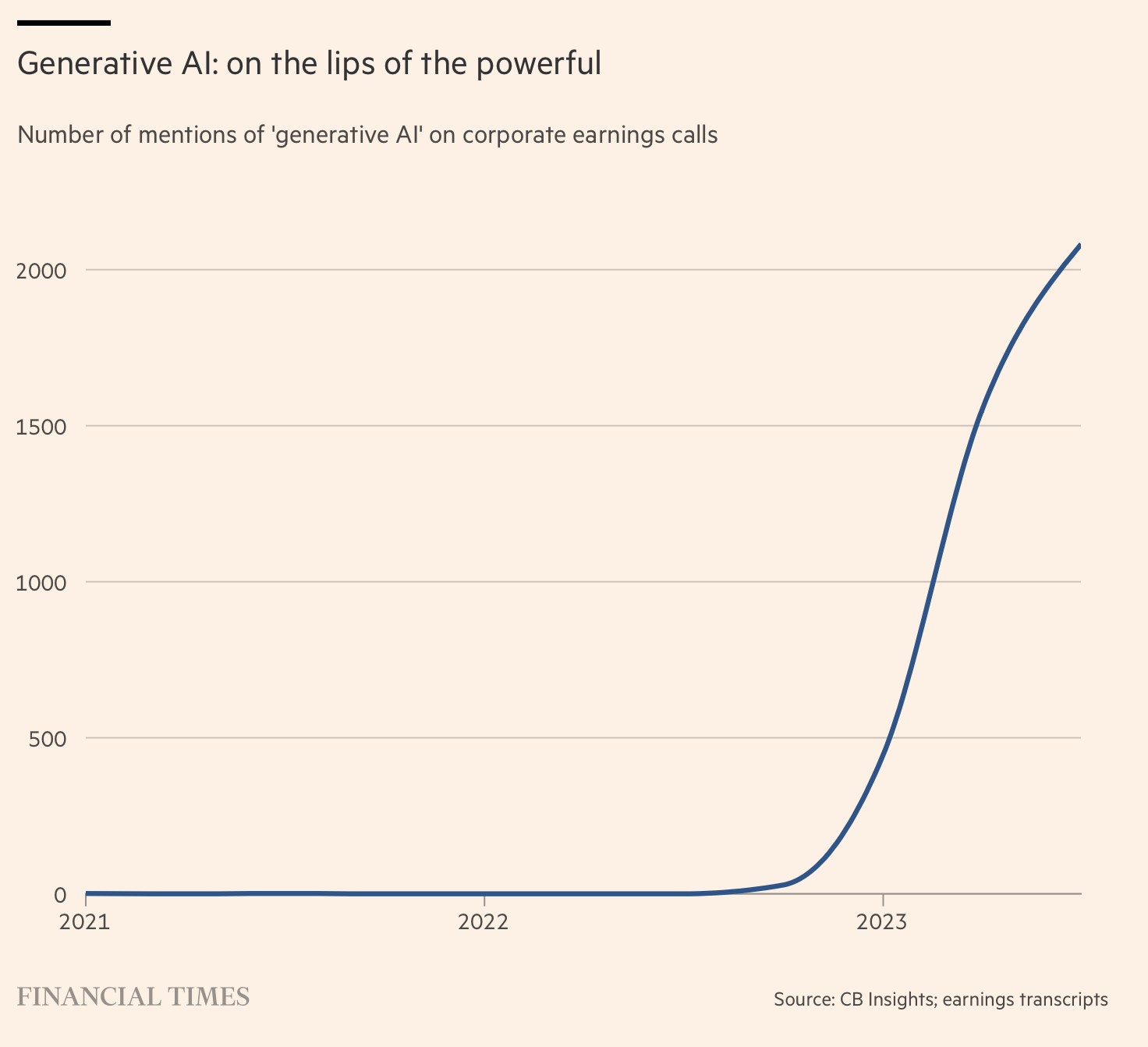

The drama at OpenAI has shone a spotlight on the tension between two sets of beliefs that have come to the fore in Silicon Valley as so-called generative AI — the technology that allows ChatGPT to turn out creative content — has turned from an interesting research project into the industry’s most promising new technology in years.

One is the familiar brand of optimistic techno-capitalism that has taken root in northern California. This holds that, with the application of ample amounts of venture capital and outsized ambition, any sufficiently disruptive idea can take over the world — or at least, overthrow some sleepy business incumbent. As a former head of Y Combinator, the region’s most prominent start-up incubator, Altman had a ringside seat to how this process worked.

The other, more downbeat, philosophy advocates a caution that is normally less common in Silicon Valley. Known as long-termism, it is based on a belief that the interests of later generations, stretching far into the future, must be taken into account in decisions made today.

- This field – artificial intelligence – is really much more like alchemy than like rocket science – HELEN TONER, FORMER OPENAI BOARD MEMBER

The idea is an offshoot of effective altruism, a philosophy shaped by Oxford university philosophers William MacAskill and Toby Ord, whose adherents seek to maximise their impact on the planet to do the most good. Long-termists aim to lessen the probability of existential disasters that would prevent future generations being born — and the risks presented by rogue AI have become a central concern.

It was the demands of capital that brought these two thought systems into such abrupt opposition inside OpenAI. Founded in 2015, and with Altman and Elon Musk as its first co-chairs, it was launched with the high-minded mission of leading the research into safely building humanlike AI.

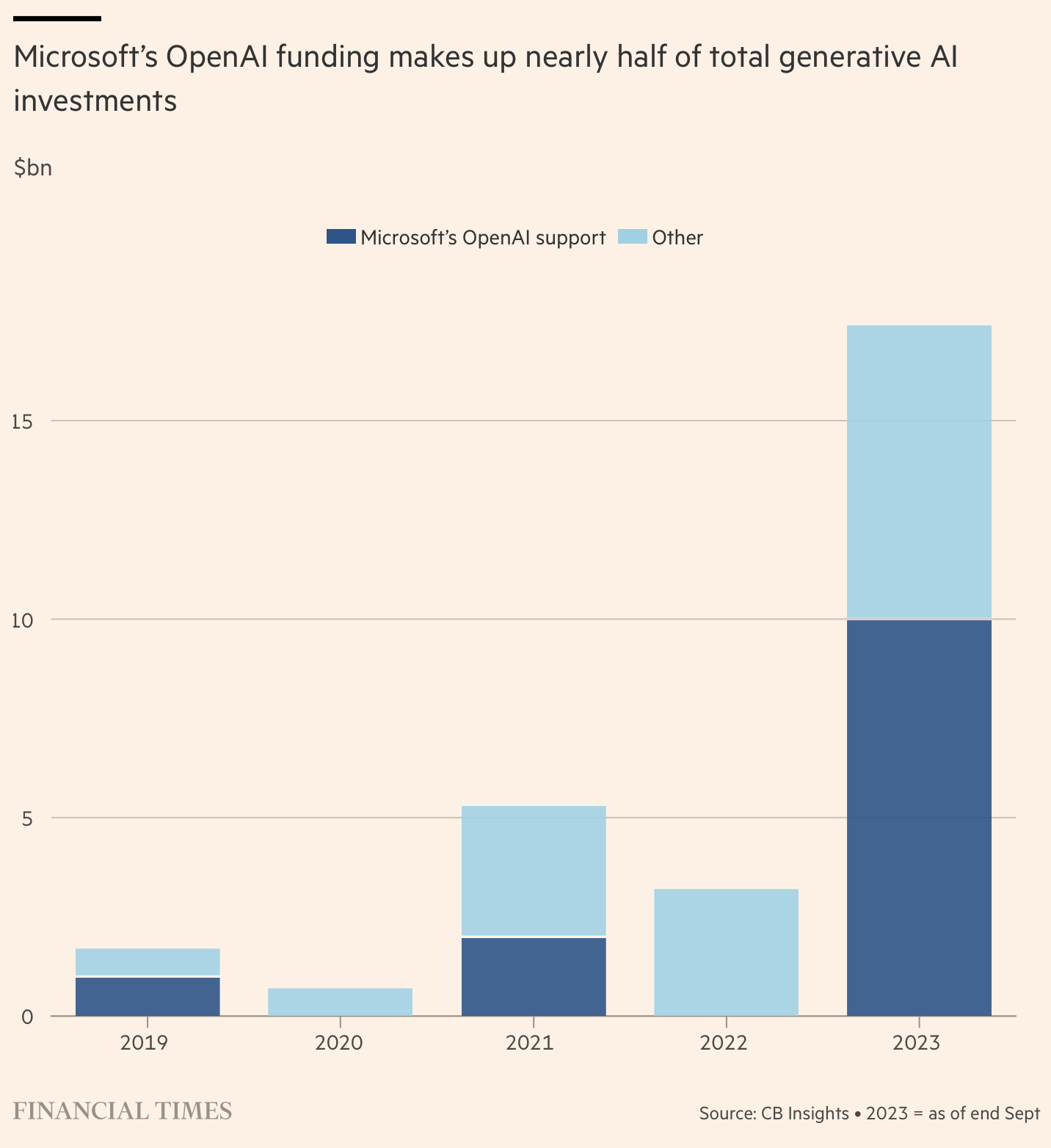

The primary method its researchers alighted on involved creating ever-larger language models, which in turn required large amounts of capital. By 2019, the need for cash led OpenAI to take a $1bn investment from Microsoft.

Though this led to the creation of a new, commercial subsidiary that would exploit OpenAI’s core technology, the entire enterprise was left under the control of a non-profit board whose core responsibility remained making sure that advanced AI was used for the benefit of humanity.

Two of the four board members who would eventually sack Altman — Helen Toner, director of strategy at the Center for Security and Emerging Technology, and Tasha McCauley, a tech entrepreneur — have connections to the effective altruism movement.

The week that shook AI

- FRI NOV 17 OpenAI chief executive Sam Altman is fired by the board of directors and replaced with chief technology officer Mira Murati. The move plunges the leading generative AI company into crisis

- SAT NOV 18 The tech world voices broad support for Altman, even as some of the company’s investors claim this was how OpenAI was meant to operate, regardless of the possible consequences. Other investors, led by Microsoft, push to reinstate him

- SUN NOV 19 OpenAI taps Emmett Shear, co-founder of gaming service Twitch, to succeed Altman. Microsoft commits to hiring Altman and any other OpenAI staff who choose to join them at a new AI research subsidiary

- MON NOV 20 The FT reports that 747 out of 770 OpenAI employees have signed a letter threatening to quit and join Microsoft if the board refuses to resign and reverse its decision

- TUES NOV 21 Altman is reinstated as CEO by the board. Changes at the board are also announced; ex-chair of Twitter Bret Taylor is named chair and former US Treasury secretary Larry Summers also joins

A recent paper on which Toner was an author hinted at the sort of tensions this would cause. The paper implicitly criticised Altman for releasing ChatGPT without any “detailed safety testing” first, leading to the kind of “race-to-the-bottom dynamics” that OpenAI had itself decried. The frenzy around the chatbot had led other companies, including Google, to “accelerate or circumvent internal safety and ethics review processes”, Toner and her fellow authors wrote.

Concerns like these have accumulated as OpenAI’s technology has advanced and many experts in the field have watched nervously for signs that human-level machine intelligence, known as artificial general intelligence, may be approaching.

Altman appeared to raise the stakes again this month, hinting that the company had made another major research breakthrough that would “push the veil of ignorance back and the frontier of discovery forward”.

But if OpenAI board members thought they had the final say over how technologies like this would be exploited, they seem to have been mistaken. Altman’s sudden dismissal shocked the tech world and brought a rebellion from employees, many of whom threatened to quit unless he returned.

Microsoft, which has now committed $13bn to the company, also worked to get Altman reinstated — an example of the outside influence that OpenAI’s governance arrangements were designed to resist. Within days, OpenAI’s directors relented.

The agreement that saw Altman reinstated led to both Toner and McCauley stepping down from the board, along with Ilya Sutskever, an AI researcher who has led a new initiative inside the company this year to prevent a future “superintelligence” from subjugating or even wiping out humanity.

“On paper, the board had the power, that’s how Sam Altman presented it,” says Ord, who serves on the advisory board of the Center for the Governance of AI alongside McCauley and Toner. “Instead, a very large amount of power seems to reside with Altman personally, and the employees and Microsoft.”

Ord says he has no idea if these kinds of tensions can be resolved, but adds of OpenAI: “At least there was a company that was being held accountable to something other than bottom-line profit.”

“I don’t see any alternatives,” he adds. “You could say government, but I don’t see governments doing anything.”

Futureproofing AI

OpenAI is not the only advanced AI company to experiment with a new form of governance.

Anthropic, whose founders quit OpenAI in 2020 over concerns about its commitment to AI safety, has tried a different approach. It has taken minority investments from Google and Amazon, but to reinforce its attention to AI safety, it lets members of an independent trust appoint a number of its board members.

The founders of DeepMind, the British AI research lab that was bought by Google almost a decade ago, fought for a greater degree of independence inside the company to guarantee their research would always be used for good. This year DeepMind was folded into Google’s other AI research operations, though the combined unit is led by DeepMind co-founder Demis Hassabis.

Critics say these kinds of attempts at self-regulation are doomed to fail, given how high the stakes are.

One AI investor, who knows Altman well, says that the OpenAI chief takes the potential risks of the technology seriously. But he is also determined to deliver on his company’s mission to develop artificial general intelligence. “Sam wants to be one of the great people in history and reshape the world in a consequential way,” the investor says.

Russell, of UC Berkeley, adds: “The sense of power and destiny that comes from transforming the future is really hard to resist. The vested interest isn’t just the money.”

Jaan Tallinn, the co-founder of Skype and one of the tech world’s most prominent supporters of effective altruism, backs the idea of AI companies coming up with “thoughtful governance structures” rather than simply responding to market forces.

But, he adds, “sadly, the OpenAI governance crisis shows that such well-motivated governance schemes are less robust than one would hope”.

In Silicon Valley, meanwhile, the upheaval at OpenAI has added fuel to a backlash against the long-termism movement, while also handing ammunition to opponents of greater AI regulation.

The reputation of the effective altruism movement was dented this month when one of its most prominent backers, FTX founder Sam Bankman-Fried, was convicted of fraud. The crypto entrepreneur, who had said he would give away most of his wealth, was a significant early investor in Anthropic.

Using individual situations like this “to say that a philosophy is defunct is like saying liberalism is dead because of one politician”, says Ord. “You can’t critique a philosophy like that.”

Critics of the doomsayers, meanwhile, are becoming more outspoken. Yann LeCun, chief AI scientist at Meta, recently said it was “preposterous” to believe that an AI could threaten humanity. Rather, intelligent machines would stimulate a second Renaissance in learning and help us tackle climate change and cure diseases.

“There’s no question that we’ll have machines assisting us that are smarter than us. And the question is: is that scary or is that exciting? I think it’s exciting because those machines will be doing our bidding,” he told the Financial Times. “They will be under our control.”

Marc Andreessen, a venture capitalist who has become one of Silicon Valley’s most outspoken opponents of regulation, has described people who warn of existential risks from AI as a cult, no different from other millenarian movements that warn of impending social disasters. “They’ve whipped themselves into a frenzy,” he wrote in June.

Putting people who will live in the future on a par with those who are alive today has sinister implications if taken to its logical conclusion, critics say — because the number of future people is far greater, their interests will predominate.

At its most extreme, they say, this could be used as an excuse to subjugate large parts of the population in the notional interest of billions of people in the future.

- [Claiming that AI] doesn’t pose an existential risk to humans right now is a ridiculous argument – TOBY ORD, OXFORD PHILOSOPHER AND PROPONENT OF LONG-TERMISM

Long-termists say this argument stretches the philosophy to extreme lengths to discredit it, in an effort to make regulation of AI safety less likely. “To criticise long-termism as some weird, neo-fascist mentality is wrong-headed,” says Russell.

Most of its adherents agree on the need to “discount” the interests of generations far in the future, he says, meaning they weigh less heavily in decisions than the interests of people alive today.

Long-termists also argue that their focus on AI risks makes them no different from those who warn about looming disasters like climate change, and that just because the probability of humanity’s destruction by AI cannot be precisely quantified does not mean it should be ignored.

Given all the variables, says Ord, claiming that AI “doesn’t pose an existential risk to humans right now is a ridiculous argument”.

Know thine alchemy

This view has found an audience beyond some corners of the tech industry and the Oxford university philosophy department.

An AI safety summit hosted this month by the British government at Bletchley Park was the first attempt to hold a global policy debate about the existential risks of AI.

The meeting — attended by 28 countries, including the US and China, and many of the world’s top AI researchers and tech executives, such as Altman — paved the way for a dialogue on these issues with two more summits to be held in South Korea and France over the next year.

Reaching an accord that the existential risks of AI should be taken seriously is only the first step. Both the UK and the US are also creating permanent AI safety institutes to deepen public sector expertise in this area and test the big AI companies’ frontier models.

And the British government has commissioned a state-of-the-science report from Yoshua Bengio, one of the pioneers of the deep-learning revolution and winner of the Turing award, to inform global policymakers.

The only way for the world to “navigate the dangers posed by powerful AI”, says Tallinn, is to “step up its regulation game, both locally and internationally”.

- [AGI] scary or exciting? I think it’s exciting because those machines will be doing our bidding. They will be under our control – YANN LECUN, CHIEF AI SCIENTIST AT META

But many of the world’s leading AI scientists differ wildly in their assessments of AI’s current capabilities and its future evolution, making it hard for regulators to know what to do.

“This field — artificial intelligence — is really much more like alchemy than like rocket science,” Toner, the former OpenAI board member, said in a recent interview for an FT podcast recorded prior to this week’s events. “You just throw things into the pot and see what happens.”

How OpenAI resolves the blow-up at its highest levels may help show how well its competitors, in the race for human-level AI, can be expected to handle the deep contradictions in their work between progress and safety.

But although Altman is now back at the helm, OpenAI’s new board has yet to offer a public explanation of what exactly went wrong, or set out what changes it will make to ensure the company is not derailed from its core mission of making computer intelligence safe for humanity. The world is waiting.