A very good read from a respected source!

TOP-20 AGI Known Problems and TOP-20 AGI Mathematically Provable Containment and Control Solutions.

Source: LessWrong

TOP-20 AGI Known Problems

- Self-replication/Escape (10532 + 1624 results)

- Self-modification (8459 results)

- P(doom) (5685 results)

- Goodhart’s Law (4370 results)

- Goals (4048 results)

- Deception (2624 results)

- Black box (532 results)

- Emergent behavior (grokking) (41 + 414 results)

- Power seeking (352 results)

- Instrumental convergence (330 results)

- Wireheading (321 results)

- Value Learning & Proxy Gaming (376 + 19 results)

- Cybersecurity (181 results)

- Inner misalignment (113 results)

- Biosecurity (101 results)

- Gradient hacking (91 results)

- Misaligned goals (51 results)

- Model splintering (50 results)

- Ontological crisis (47 results)

- Alien mind (30 results)

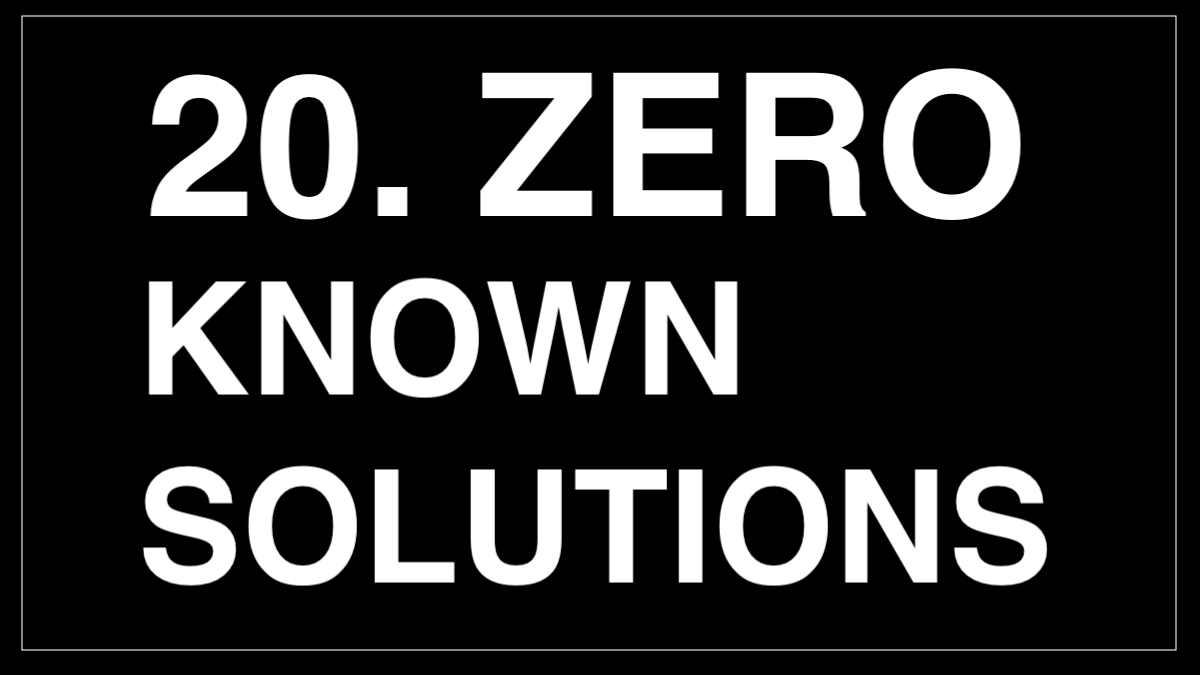

TOP-20 AGI Mathematically Provable Containment and Control Solutions (alphabetical)

- ABSOLUTELY NONE

- Gonzo-zip

- Goose Egg

- Inexistence

- Nada

- Naught

- Nil

- Nix

- None

- Nothing

- Nothingness

- Non-existent

- Nut-tin’

- Null

- Nyet

- Zero

- Zilch

- Zip

- Zippo

Not to worry?

The big tech companies are working on safe AI too. They do not yet know how to make AI safe (“we are doing the best we can”), but they really think they can solve the problem. (Budget allocation, out of $100s of billions: >99% capability and <1% safety.) And government regulations are slowly coming! (They are routinely watered down by the tech industry's own lobbyists.)

Meanwhile, as the tech companies race to the bottom, the current average P(doom) prediction by AI engineers is 40%.

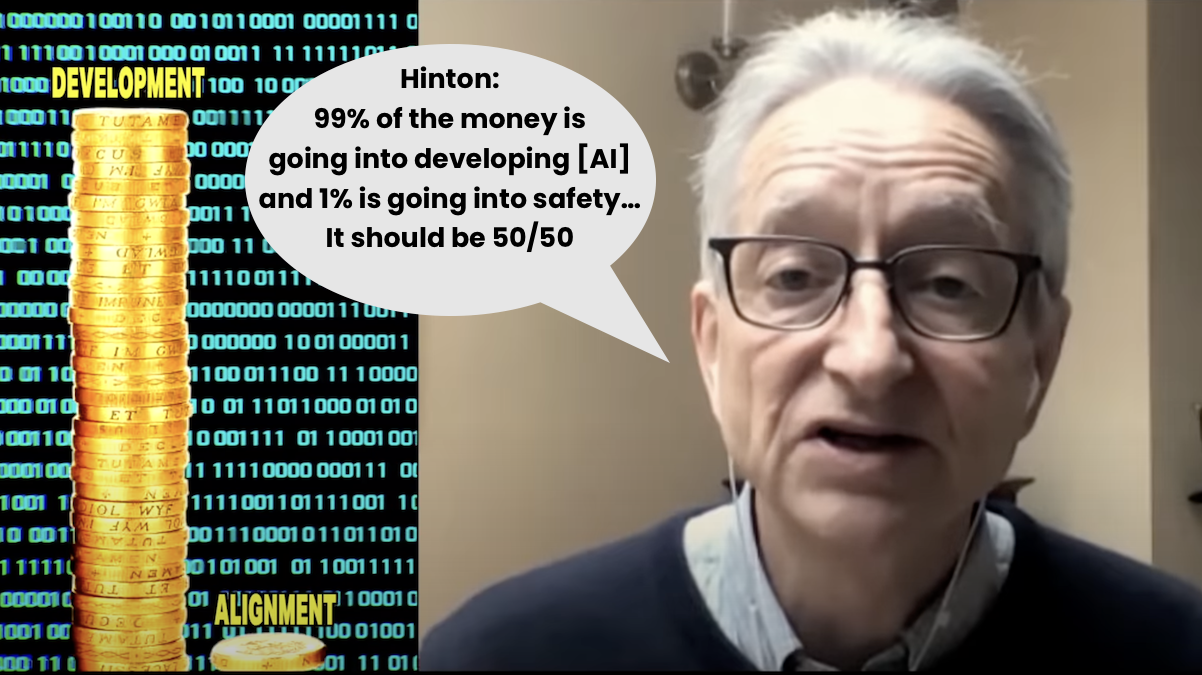

Geoffrey Hinton is widely respected as the “Godfather of Deep Learning AI”.

“So presently ninety-nine percent (99%) of the money is going into developing [AI] and one percent (1%) is going into safety. People are saying all these things might be dangerous so it should be more like 50/50.” — Geoffrey Hinton