Wow! BRAVO to Google. Gemini is a very important and powerful AI product/service from Google DeepMind! (But where is the real safety TECHNOLOGY? Where is the real CONTAINMENT? Where is the real CONTROL?)

A row of Cloud TPU v5p AI accelerator supercomputers in a Google data center.

Gemini: Unlocking insights in scientific literature

Gemini: Processing and understanding raw audio

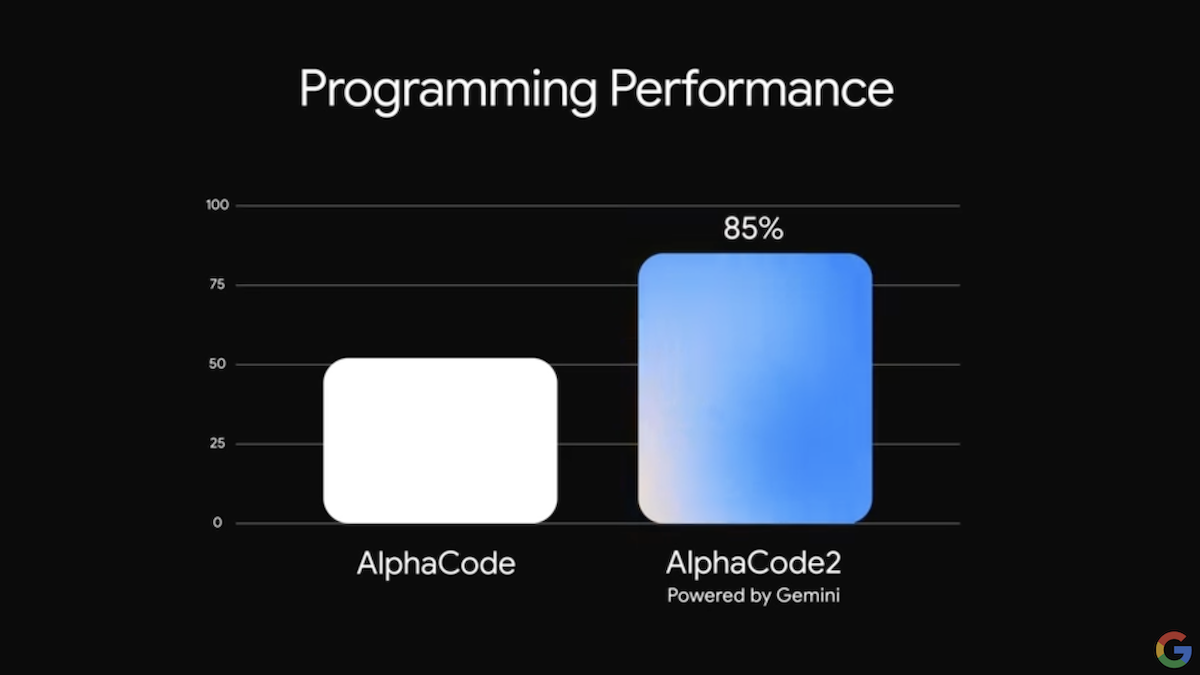

Gemini excels at coding and competitive programming

Gemini: Explaining reasoning in math and physics

Gemini: Reasoning about user intent to generate bespoke experiences

Gemini: Safety and responsibility at the core

TRANSCRIPT: [gentle music]

[James Manyika speaking] I am so excited about Gemini because it’s probably the most capable AI system we’ve ever developed.

[Lila Ibrahim speaking] But safety and responsibility has to be built in from the beginning and at Google DeepMind, that’s what we’ve done with Gemini.

[James Manyika speaking] As these systems become more capable, especially the multimodality, images, audio, video, all of those capabilities also raise new questions.

[Tulsee Doshi speaking] Going from image to text, that introduces new contextual challenges because an image might be innocuous on its own, or text might be innocuous on its own, but the combination could be offensive or hurtful. We develop proactive policies and adapt those to the unique considerations of multimodal capabilities, and that allows us to test for new risks like cybersecurity and considerations like bias and toxicity.

[Dawn Bloxwich speaking] One of the key areas is not only internal evaluations, but also external evaluations. This can look like external red teaming to bring in different experts to give us their perspectives on how the model is performing, but it also involves getting their advice.

[Tulsee Doshi speaking] So we’re working with organizations like MLCommons to develop extensive benchmarks that we can use both to test models within Google and across the industry. And we’re also creating cross-industry collaborations via frameworks like SAIF, the Secure AI Framework.

[James Manyika speaking] It’s an incredible way to learn from the industry, learn from other experts and build that into how we’re approaching responsibility at Google.

[Dawn Bloxwich speaking] It can mean that our models, our products are better for people and better for society overall.

[gentle music fades]

Learn More:

Google Gemini

Gemini: A Family of Highly Capable Multimodal Models – Technical White Paper

Google News: Google launches new AI model Gemini

Google DeepMind Unveils Its Most Powerful AI Offering Yet – Time

Google’s Gemini isn’t the generative AI model we expected – TechCrunch

Google launches its largest and ‘most capable’ AI model, Gemini – CNBC

— COMMENT —

“Safety and responsibility has to be built in from the beginning” [YES], but only through “proactive policies”, internal and external evaluations, and “external red teaming”? [Is that all??? SERIOUSLY?]

Ref: GOV.UK, Guidelines for secure AI system development

Page 7: Who is responsible for developing secure AI?

Providers of AI components should take responsibility for the security outcomes of users further down the supply chain.

Providers should implement security controls and mitigations where possible within their models, pipelines and/or systems, and where settings are used, implement the most secure option as default. Where risks cannot be mitigated, the provider should be responsible for:

>informing users further down the supply chain of the risks that they and (if applicable) their own users are accepting

>advising them on how to use the component securely

Where system compromise could lead to tangible or widespread physical or reputational damage, significant loss of business operations, leakage of sensitive or confidential information and/or legal implications, AI cyber security risks should be treated as critical.