WHAT can go wrong with a powerful WISH?

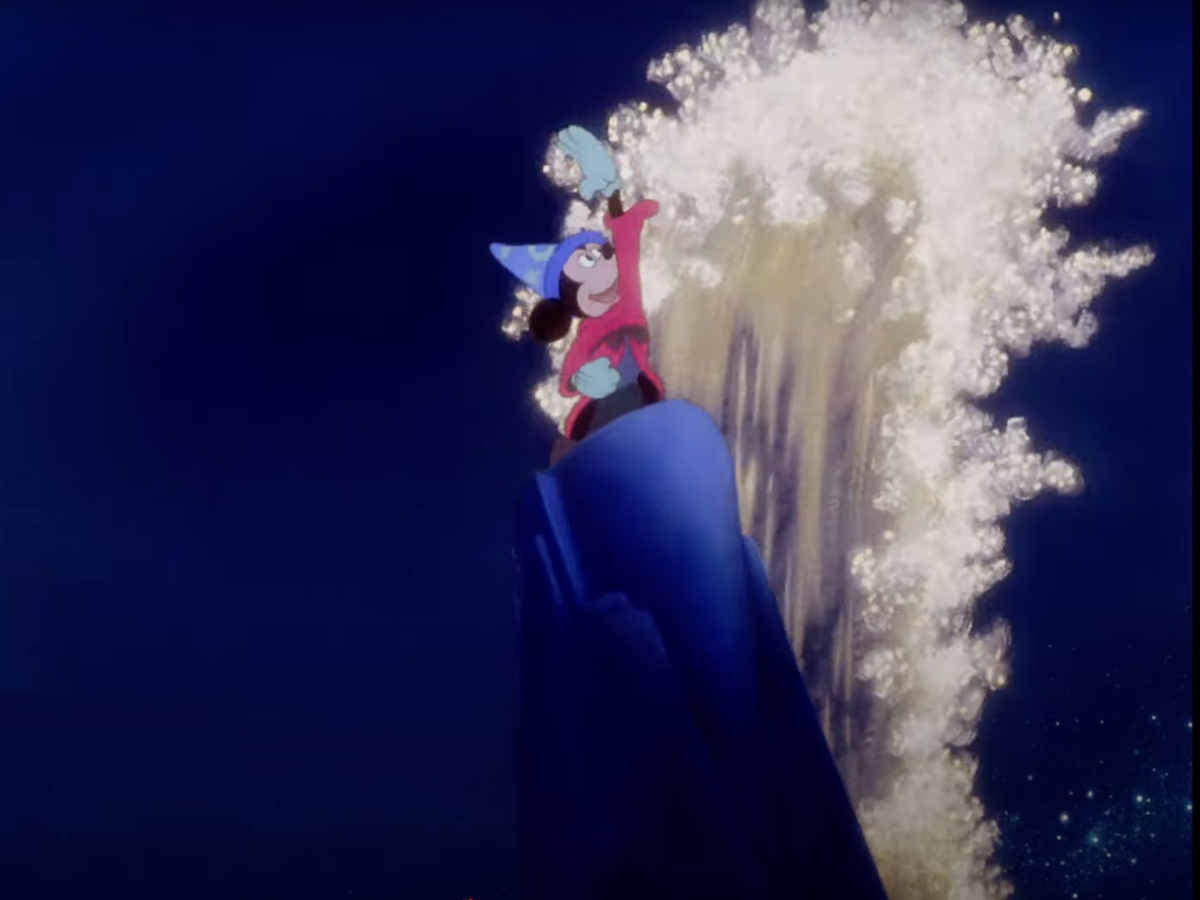

Example: Disney’s Fantasia (1940): Mickey Mouse in “The Sorcerer’s Apprentice” (Goethe & Dukas).

Based on Goethe’s 1797 poem “Der Zauberlehrling” (The Sorcerer’s Apprentice). Mickey Mouse, the young apprentice of the sorcerer Yen Sid, attempts some of his master’s magic tricks but cannot control them… chaos and terror ensue.

Part 1

Discovery of the Sorcerer’s Amazing Powers!

Part 2

Infatuation with Power Leads to Chaos & Terror!

Part 3

Saved from Certain Doom by The Sorcerer…

Fantasia, released in 1940, was Disney’s boldest experiment to date, bringing to life Walt Disney’s vision of blending animated imagery with classical music. What had begun as a vehicle to enhance Mickey Mouse’s career blossomed into a full-blown feature that remains unique in the history of animation. The music for “The Sorcerer’s Apprentice” was composed by Paul Dukas. Distributed by RKO Pictures and The Walt Disney Company. © Disney

Infatuation with power ensues…

WHAT can go wrong with a powerful WISH?

Answer: P(doom), the probability of catastrophe. Uncontrollable POWERS can lead to terror and death.

In Goethe’s ageless poem and in Disney’s Fantasia, the Sorcerer controls the magic and breaks the spell. What would happen if the Sorcerer did not want to break the spell is perfectly clear: chaos and death (by drowning).

Mickey Mouse and Fantasia © Disney

Mickey Mouse and Fantasia are posted here under “fair use”, not for profit, for social benefit, and for knowledge-sharing purposes only.

Beware! P(doom) is estimated at 10–90%. Natural selection favors intelligence. In the inevitable intelligence explosion from Artificial General Intelligence (AGI) to Artificial Super Intelligence (ASI), an uncontrollable alien ASI will certainly become millions of times more intelligent than any human. Self-preservation will emerge as an extremely motivating goal, one that will almost certainly not be beneficial to humans unless the AGI is mathematically proven to be contained before the ASI intelligence explosion. Learn more:

- What happens when our computers get smarter than we are? Nick Bostrom, TED2015, March 2015 (5,389,614 views)

- The Containment Problem

- The AI Safety Problem

- Instrumental Convergence

- Don’t Look Up – The Documentary: The Case For AI As An Existential Threat

- Steve Omohundro. Provably Safe AGI (MIT Mechanistic Interpretability Conference, May 7, 2023)

Learn more: What super-smart people say about the extreme danger to humanity of uncontrolled and uncontained AI.

Quote sources here: The AI Safety Problem. Official music video, posted not for profit, under fair use, for social benefit. Music: © Rhino. Lyrics: © Nash Notes.

Our Humanity… You’re Invited.

Learn more: The AI Safety Problem, The Containment Problem, AI Alignment, and scientific warning letters here, here, and here.

The Synergy of AI Safety and Biosafety

For example: a not-for-profit commons for social benefit, paired with a for-profit commercial vehicle for investors in safe AI. Not an offer. Contact.

“There might simply not be any humans on the planet at all. This is not an arms race; it’s a suicide race. We should build AI for Humanity, by Humanity…

We’re rushing towards this cliff, but the closer to the cliff we get, the more scenic the views are and the more money there is. So we keep going. But we also have to stop at some point. Right.”