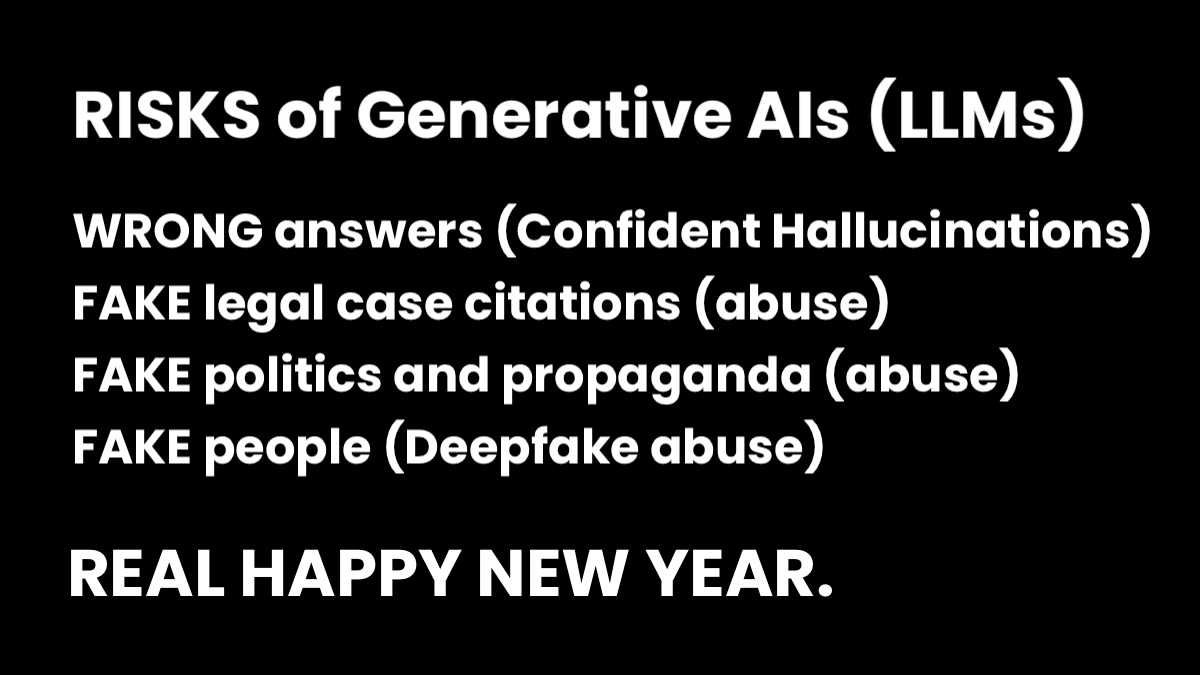

A REAL PROBLEM: Fake data and the abuse of powerful Large Language Models (LLMs) pose a risk to identity, consumers, society and democracy… and then there’s P(doom)

Generative AI (LLM) Risks…

WRONG answers (Confident Hallucinations)

- Google AI chatbot Bard sends shares plummeting after it gives wrong answer. Chatbot Bard incorrectly said James Webb Space Telescope was first to take pictures of planet outside Earth’s solar system. – The Guardian

- Large Language Models pose risk to science with false answers, says Oxford study – Oxford University

FAKE legal case citations (abuse)

- Michael Cohen says he unwittingly sent AI-generated fake legal cases to his attorney – PBS Newshour

- Two US lawyers fined for submitting fake court citations from ChatGPT. Law firm also penalised after chatbot invented six legal cases that were then used in an aviation injury claim. – The Guardian

FAKE politics (propaganda and abuse)

- How generative AI is boosting the spread of disinformation and propaganda. In a new report, Freedom House documents the ways governments are now using the tech to amplify censorship. – MIT Technology Review

- Is Argentina the First A.I. Election? The two men jostling to be the country’s next president are using artificial intelligence to create images and videos to promote themselves and attack each other. – The New York Times

FAKE people and content (deepfake abuse)

- Deepfakes, explained – MIT Sloan

- Deepfake – Wikipedia