A very IMPORTANT read from a VERY respected source!

THE CHOSUN DAILY. ‘AI Godfather’ Yoshua Bengio forecasts arrival of self-doubting AI in 2024.

In 2024, larger and more powerful artificial intelligence (AI) will emerge. That shift begins with AI capable of self-doubt.

By Silicon Valley=Oh Rora, Park Su-hyeon Published 2024.01.06. 06:00

In a recent video interview with Chosunilbo, renowned AI expert Yoshua Bengio (59) shared his perspectives on the future of artificial intelligence (AI). Bengio highlighted that major tech companies are on the verge of unveiling more advanced AI in 2024, surpassing current perceptions of its scale and sophistication. He emphasized the role of OpenAI’s ChatGPT in recent AI developments, suggesting that these advancements are just the initial steps, with 2024 serving as a pivotal year that could significantly shape the future of AI and its relationship with humanity.

Discussing a critical distinction between current AI chatbots, like ChatGPT, and the upcoming generation, Bengio underscored their improved ‘reasoning’ capabilities. Beyond routine tasks such as document organization and content generation, upcoming AI systems are expected to assess the truthfulness and values of responses, striving for a more ‘human-like’ nature.

Bengio cautioned that despite the ability of current chatbots like ChatGPT to predict responses quickly, they are akin to intelligent children with a deceptive nature. He highlighted the next step for AI as the ability to question the truth of its answers, similar to human cognition. According to Bengio, a genuine evolution in AI will begin when it can respond with uncertainties like ‘I’m not sure’ or ‘I don’t know.’

Bengio, along with Geoffrey Hinton of the University of Toronto, Yann LeCun of New York University, and Andrew Ng of Stanford University, is recognized as one of the “Four Kings of AI.” In 2018, Bengio, Hinton, and LeCun were honored with the Turing Award, often referred to as the “Nobel Prize of Computer Science,” for their contributions to developing deep learning technology, which underlies contemporary AI. Bengio’s connections with Korea also run deep: in 2019, Samsung Electronics’ SAIT (Samsung Advanced Institute of Technology) joined MILA (Montreal Institute for Learning Algorithms), the world-renowned deep learning research institution that Bengio established in Montreal.

The following is a summary and compilation of the Q&A session with him.

— What should we prepare for and how?

“Two major preparatory tasks are crucial. First, from a scientific perspective, there is an urgent need to make breakthroughs in so-called ‘alignment’ technology, which ensures that AI operates under human control. Second, from a political and social standpoint, international norms for developing, operating, and managing powerful AI need to be established promptly. I signed a statement by a non-profit AI organization in March 2023 advocating a six-month halt in AI development, and my stance remains unchanged. However, recognizing that too much capital and manpower have already been invested in the AI industry and that technological development will not come to a halt, we must find decisive ways to ensure AI safety from both a technical and a regulatory perspective.”

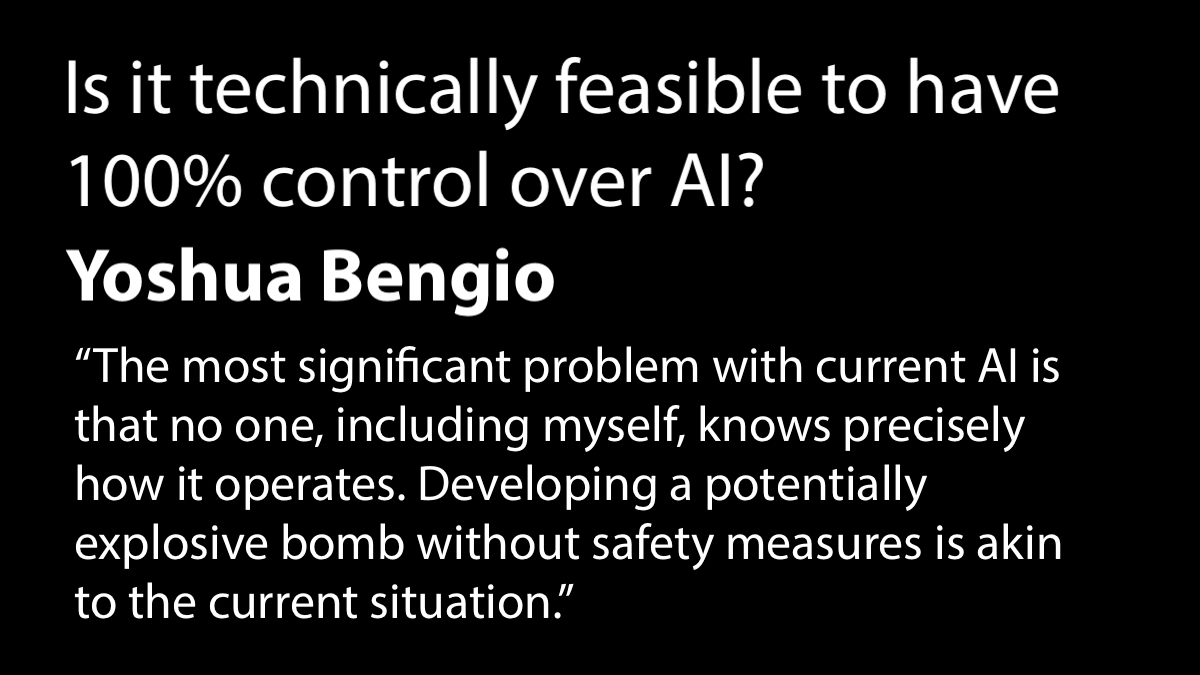

— Is it technically feasible to have 100% control over AI?

“It is challenging at the moment, but we have no other choice. Whether it’s 100% or 99%, we must invest in research at a level equivalent to the Manhattan Project, which developed atomic bombs, or the Apollo Project, to achieve control. The most significant problem with current AI is that no one, including myself, knows precisely how it operates. The current situation is akin to developing a potentially explosive bomb without safety measures. The phenomenon of AI boldly providing false information, known as hallucination, is already causing harm. If a more intelligent AI emerges, and we don’t know how to control it, dangerous consequences may follow.”

— You emphasized international regulations for AI.

“In the future, AI will become a source of power for the individuals, organizations, and nations equipped with the technology. If power is concentrated on one side, it can be detrimental to democracy. Hence, international treaties are urgently needed. Similar to the control of nuclear weapons, international agreements must prevent individuals or countries from using AI in warfare, crime, and other harmful activities. Additionally, security measures should be implemented to prevent AI technology developed by companies from leaking through industrial espionage, hacking, theft, and the like. Even if we develop safe AI under human control, it could lead to disaster if the technology falls into the wrong hands, such as those of North Korea or terrorists.”

— Currently, AI power is excessively concentrated in the United States. What is your perspective?

“It is a crucial issue when considering the potential harm to countries without AI technology. Starting this year and continuing over the next few years, significant decisions related to AI will be made, and developing countries and third-world nations should have equal participation in these discussions. Since AI, like pandemics or climate issues, affects us all, a platform should be established where everyone has a voice.”

— How do you view Korea’s role?

“Considering that most countries lack AI experts, Korea stands out with excellent AI capabilities from leading research universities and tech companies. I believe Korea can play a significant role in international collaborative AI research addressing monumental issues like poverty and climate change.”