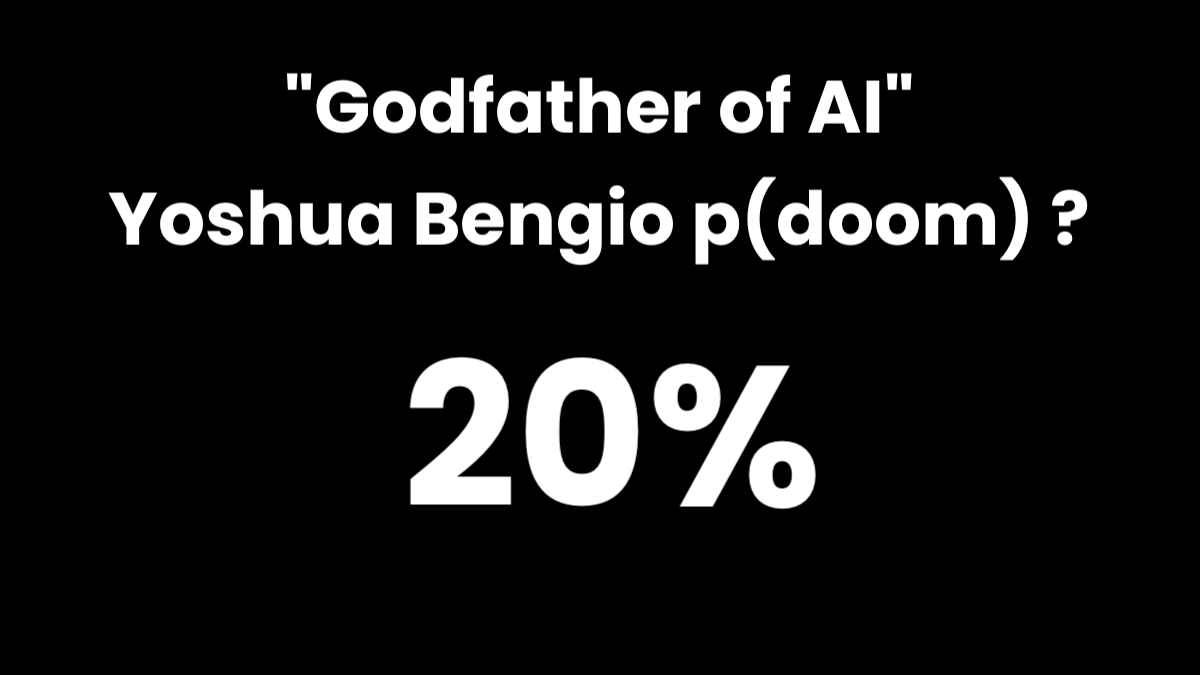

“Godfather of AI” Yoshua Bengio thinks there’s a 1 in 5 chance AI destroys humanity

Professor Bengio arrived at the figure based on several inputs:

1) 50% probability that AI would reach human-level capabilities within a decade, and

2) a greater than 50% probability that AI (or humans) themselves would turn AI against humanity at scale

“I think that the chances that we will be able to hold off such attacks is good, but it’s not 100% … maybe 50%,” he says.
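To see how the three quoted inputs relate to the headline figure, here is a rough back-of-the-envelope sketch. It assumes the inputs are independent and can simply be multiplied, which is an illustrative assumption and not something the quotes say about how Professor Bengio combined them. The function name `naive_risk` and the 0.8 value are hypothetical, chosen only to show what "greater than 50%" would need to be for the product to reach 1 in 5.

```python
def naive_risk(p_human_level: float, p_turned_against: float, p_defence_holds: float) -> float:
    """Multiply the three inputs, treating them as independent (an assumption).

    p_human_level:    chance AI reaches human-level capabilities within a decade
    p_turned_against: chance AI is turned against humanity at scale
    p_defence_holds:  chance we manage to hold off such attacks
    """
    return p_human_level * p_turned_against * (1 - p_defence_holds)


# Using exactly 50% for every input gives a lower bound of 12.5%,
# since the second input is described as "greater than 50%".
lower_bound = naive_risk(0.5, 0.5, 0.5)

# Hypothetical: a second input of 80% would land on the quoted 1 in 5.
one_in_five = naive_risk(0.5, 0.8, 0.5)

print(f"lower bound: {lower_bound:.3f}")
print(f"with 80% for the second input: {one_in_five:.3f}")
```

Under this simple reading, the 1 in 5 figure implies the second input sits well above 50%, which is consistent with the "greater than" wording.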

As a result, after almost 40 years of working to bring about more sophisticated AI, Yoshua Bengio has decided in recent months to push in the opposite direction, in an attempt to slow it down. “Even if it was 0.1% [chance of doom], I would be worried enough to say I’m going to devote the rest of my life to trying to prevent that from happening,” he says.

“What I’ve been saying now for a few weeks is ‘Please give me arguments, convince me that we shouldn’t worry, because I’ll be so much happier.’

“And it hasn’t happened yet.”

Professor Bengio believes we’re travelling too quickly down a risky path.

“We don’t know how much time we have before it gets really dangerous.”

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) February 20, 2024

“Godfather of AI” Geoffrey Hinton now thinks there is a 1 in 10 chance everyone will be dead from AI in 5-20 years

Weeks ago, we learned that Yoshua Bengio, another Turing Award winner, thinks there’s a 1 in 5 chance we all die.

Hinton is worried about AI hive minds: “Hinton realised that increasingly powerful AI models could act as ‘hive minds’, sharing what they learnt with each other, giving them a huge advantage over humans.” “OpenAI’s latest model GPT-4 can learn language and exhibit empathy, reasoning and sarcasm.”

“I am making a very strong claim that these models do understand,” he said in his lecture.

He predicts that the models might also “evolve” in dangerous ways, developing an intentionality to control. “If I were advising governments, I would say that there’s a 10% chance these things will wipe out humanity in the next 20 years. I think that would be a reasonable number,” he says.

“He was encouraged that the UK hosted an AI safety summit at Bletchley Park last year, stimulating an international policy debate. But since then, he says, the British government ‘has basically decided that profits come before safety’.”

“He says he is heartened that a younger generation of computer scientists is taking existential risk seriously and suggests that 30% of AI researchers should be devoted to safety issues, compared with about 1% today.”

“We humans should make our best efforts to stay around.”

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) March 9, 2024

He literally quit his high-paying, prestigious job to warn about the dangers of AI

Imagine being praised as the godfather of your field then realizing your baby might destroy the world?

How much cognitive dissonance you’d feel?

How hard it would be to admit this? pic.twitter.com/55tKVBUI6t

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) March 10, 2024

Jesus @elonmusk, this logically-flimsy scenario of AI keeping humans around as interesting pets is what you bring up when you get an audience with @GeoffreyHinton?

It wasn’t just one of your jokes? pic.twitter.com/PQM4PUQQiX

— Liron Shapira (@liron) May 11, 2023