Apparently we must be extremely careful what we wish for!

FT Opinion: How fatalistic should we be on AI?

The godfather of artificial intelligence has issued a stark warning about the technology

John Thornhill

Feb 22 2024

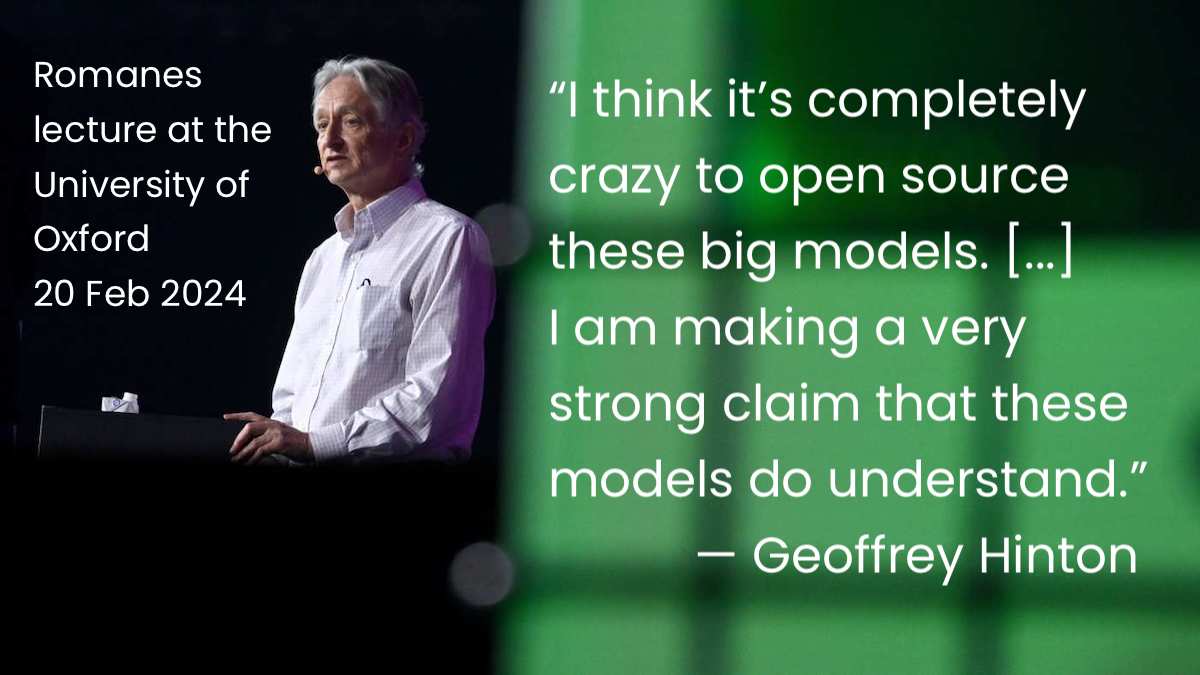

A long line of prestigious speakers, ranging from Sir Winston Churchill to Dame Iris Murdoch, has delivered the annual Romanes lecture at the University of Oxford, starting with William Gladstone in 1892.

But rarely, if ever, can a lecturer have made such an arresting comment as Geoffrey Hinton did this week. The leading artificial intelligence researcher’s speech, provocatively entitled Will Digital Intelligence Replace Biological Intelligence?, concluded: almost certainly, yes. But Hinton rejected the idea, common in some West Coast tech circles, that humanism is somehow “racist” in continuing to assert the primacy of our own species over electronic forms of intelligence. “We humans should make our best efforts to stay around,” he joked.

The British-Canadian computer scientist came to fame as one of the pioneers of the “deep learning” techniques that have revolutionised AI, enabling the creation of generative AI chatbots, such as ChatGPT. For most of his career in academia and at Google, Hinton believed that AI did not pose a threat to humanity. But the 76-year-old researcher says he experienced an “epiphany” last year and quit Google to speak out about the risks.

Hinton realised that increasingly powerful AI models could act as “hive minds”, sharing what they learnt with each other, giving them a huge advantage over humans. “That made me realise that they may be a better form of intelligence,” he told me in an interview before his lecture.

It still seems fantastical that lines of software code could threaten humanity. But Hinton sees two main risks. The first is that bad humans will give machines bad goals and use them for bad purposes, such as mass disinformation, bioterrorism, cyberwarfare and killer robots. In particular, open-source AI models, such as Meta’s Llama, are putting enormous capabilities in the hands of bad people. “I think it’s completely crazy to open source these big models,” he says.

But he predicts that the models might also “evolve” in dangerous ways, developing an intentionality to control. “If I were advising governments, I would say that there’s a 10 per cent chance these things will wipe out humanity in the next 20 years. I think that would be a reasonable number,” he says.

Hinton’s arguments have been attacked on two fronts. First, some researchers argue that generative AI models are nothing more than expensive statistical tricks and that existential risks from the technology are “science fiction fantasy”.

The prominent scholar Noam Chomsky argues that humans are blessed with a genetically installed “operating system” that helps us understand language, and that this is lacking in machines. But Hinton argues this is nonsense, given that OpenAI’s latest model, GPT-4, can learn language and exhibit empathy, reasoning and sarcasm. “I am making a very strong claim that these models do understand,” he said in his lecture.

The other line of attack comes from Yann LeCun, chief AI scientist at Meta. LeCun, a supporter of open-source models, argues that our current AI systems are dumber than cats and it is “preposterous” to believe they pose a threat to humans, either by design or default. “I think Yann is being a bit naive. The future of humanity rests on this,” Hinton responds.

The calm and measured tones of Hinton’s delivery are in stark contrast to the bleak fatalism of his message. Can anything be done to improve humanity’s chances? “I wish I knew,” he replies. “I’m not preaching a particular solution, I’m just preaching the problem.”

He was encouraged that the UK hosted an AI safety summit at Bletchley Park last year, stimulating an international policy debate. But since then, he says, the British government “has basically decided that profits come before safety”. As with climate change, he suggests serious policy change will only happen once a scientific consensus is reached. And he accepts that does not exist today. Citing physicist Max Planck, Hinton grimly adds: “Science progresses one funeral at a time.”

He says he is heartened that a younger generation of computer scientists is taking existential risk seriously and suggests that 30 per cent of AI researchers should be devoted to safety issues, compared with about 1 per cent today. We should be instinctively wary of researchers who conclude that more research is needed.

But in this case, given the stakes and uncertainties involved, we had better hurry up. What is extraordinary about the debate on AI risk is the broad spectrum of views out there. We need to find a new consensus.