Groundbreaking Gemma 7B Performance Running on the Groq LPU™ Inference Engine

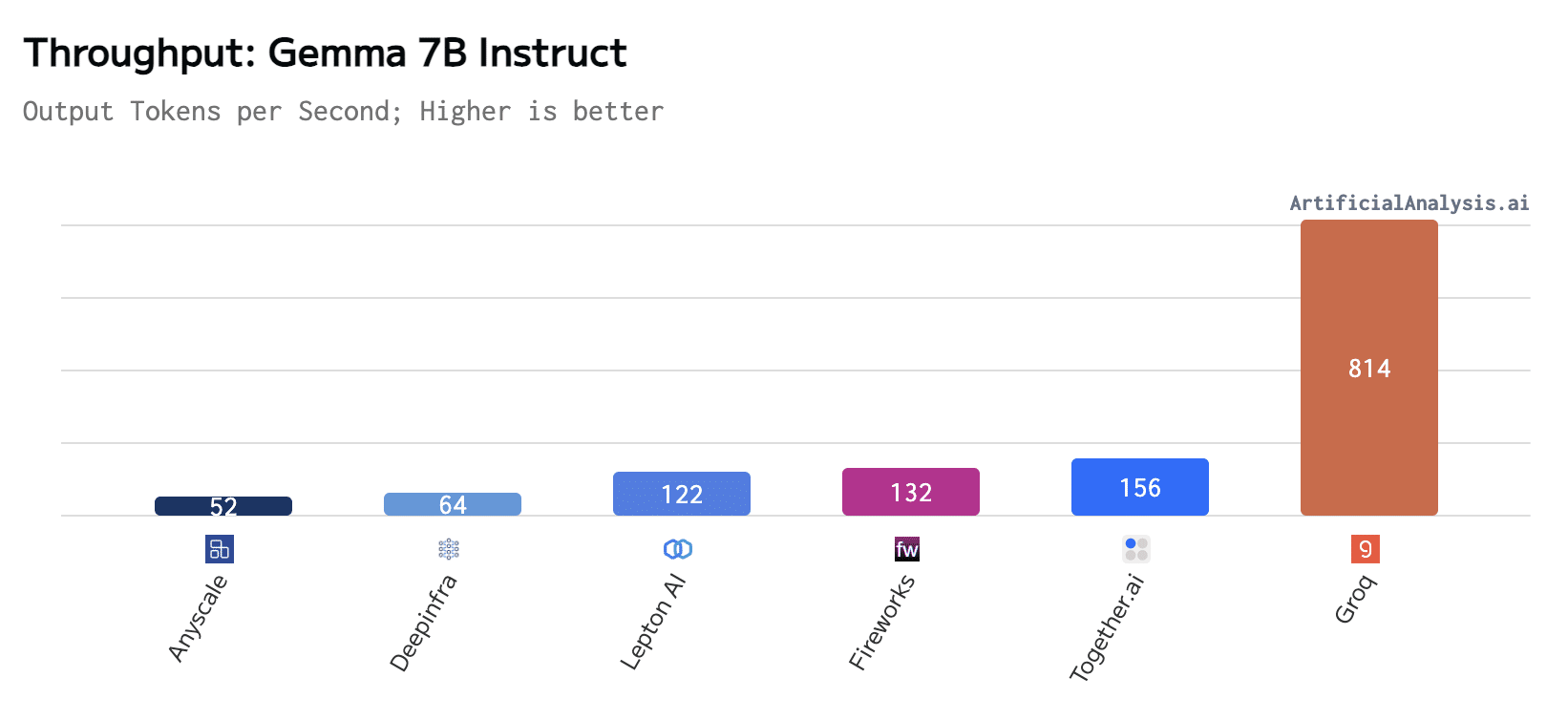

ArtificialAnalysis.ai Shares Gemma 7B Instruct API Providers Analysis, with Groq Offering Up To 15X Greater Throughput

Written by: Groq

In the world of large language models (LLMs), efficiency and inference speed are increasingly important for real-world applications. That’s why ArtificialAnalysis.ai’s recent benchmark results for Gemma 7B running on the Groq LPU™ Inference Engine are so noteworthy.

Gemma is a family of lightweight, state-of-the-art open models from Google DeepMind and other teams across Google, built from the same research and technology used to create the Gemini models. Notably, it is part of a new generation of open models from Google intended to “assist developers and researchers in building AI responsibly.”

Gemma 7B is a decoder-only transformer model with 7 billion parameters that, according to Google, “surpasses significantly larger models on key benchmarks while adhering to our rigorous standards for safe and responsible outputs.” Specifically, Google:

- Used automated techniques to filter out certain personal information and other sensitive data from training sets

- Conducted extensive fine-tuning and reinforcement learning from human feedback (RLHF) to align the instruction-tuned model with responsible behaviors

- Conducted robust evaluations including manual red-teaming, automated adversarial testing, and assessments of model capabilities for dangerous activities to understand and reduce the risk profile for Gemma models

Overall, Gemma represents a significant step forward in the development of LLMs, and its impressive performance on a range of NLP tasks has made it a valuable tool for researchers and developers alike.

We’re excited to share an overview of our Gemma 7B performance based on ArtificialAnalysis.ai’s recent inference benchmark. Let’s dive in.