— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) March 17, 2024

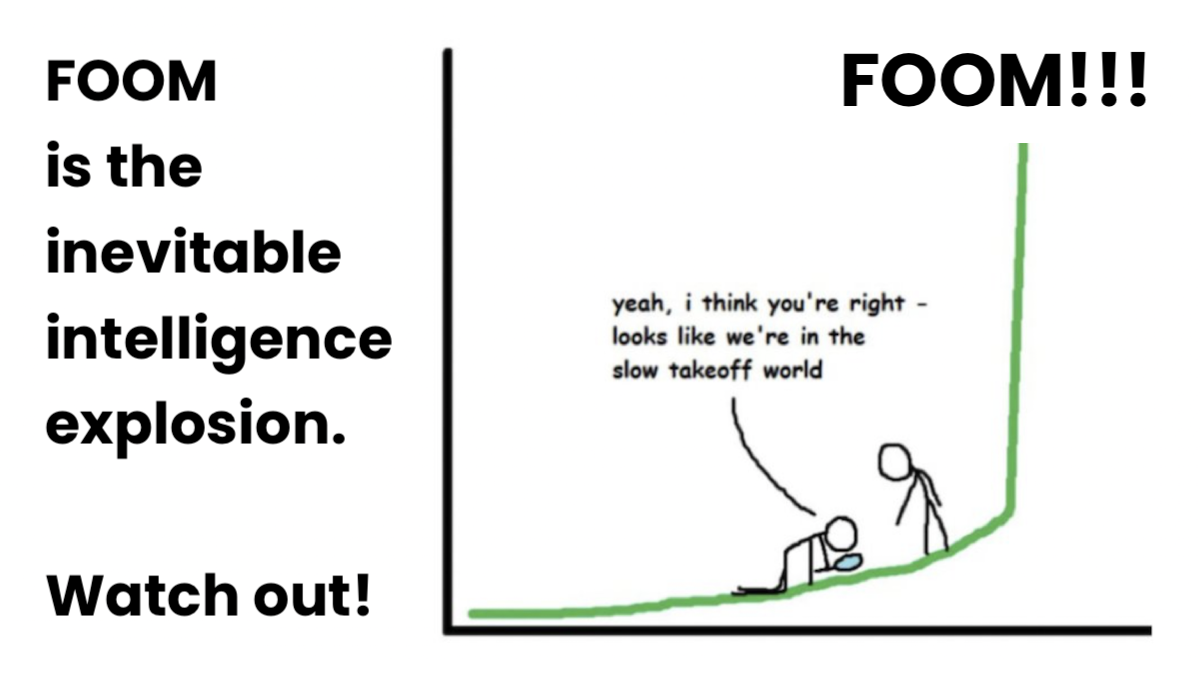

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) October 6, 2023

So this whole time AIs COULD actually do “umms” and “ahhs”, we just… never asked them to.

Why this matters:

What else can AIs do we don’t know about?

What if GPT5 seems safe, but actually it was way smarter than we thought, and it was just “one weird trick” away from foom/fast takeoff?

Now, obviously, this capability (“umms” and “ahhs”) is harmless. But that’s not the point.

In 2017, one algorithmic breakthrough (the Transformer) changed everything. What if GPT5 is released, it seems safe, but then a similar breakthrough emerges and… game over.

Just saying “think step by step” (etc.) has a big impact on performance – what else are we missing?

How many “one weird tricks” like this might exist for GPT5?

This is the problem with evaluations – they can provide a false sense of security. We can never know we’re safe.

(Note: Likely *some* people knew about this capability, but the knowledge certainly wasn’t widespread. And, man, it just feels so much more real with the umms.)

So it begins: “This paper reveals a powerful new capability of LLMs: recursive self-improvement”

–

“There’s 0 evidence foom (fast takeoff) is possible”

“Actually, ‘fast takeoffs’ happen often! Minecraft, Chess, Shogi… Like, an AI became ‘superintelligent’ at Go in just 3 days”

“Explain?”

“It learned how to play on its own – just by playing against itself, for millions of games. In just 3 days, it accumulated thousands of years of human knowledge at Go – without being taught by humans!

It far surpassed humans. It discovered new knowledge, invented creative new strategies, etc. Many confidently declared this was impossible. They were wrong.”

“Well, becoming superhuman at Go is different from superhuman at everything”

“Yes, but there are many examples. It only took 4 hours for AlphaZero to surpass the best chess AI (far better than humans), 2 hours for Shogi, weeks for Minecraft (!), etc.”

“But those are games, not real life”

“AIs are rapidly becoming superhuman at skill after skill in real life too. Sometimes they learn these skills in just hours to days. They’re leaving humans in the dust.

We really could see an intelligence explosion where AI takes over the world in days to weeks. Foom isn’t guaranteed but it’s possible.

We need to pause the giant training runs because each one is Russian Roulette.”

To recap…

WHAT DO WE KNOW?

- WE ONLY KNOW what it SAYS.

- WE KNOW IT UNDERSTANDS.

- WE KNOW that we understand ABSOLUTELY NOTHING about what happens inside THE BLACK BOX.

WHAT DO WE KNOW THAT WE DO NOT KNOW?

- We do not know WHAT IT IS THINKING.

- We do not know what happens inside THE BLACK BOX.