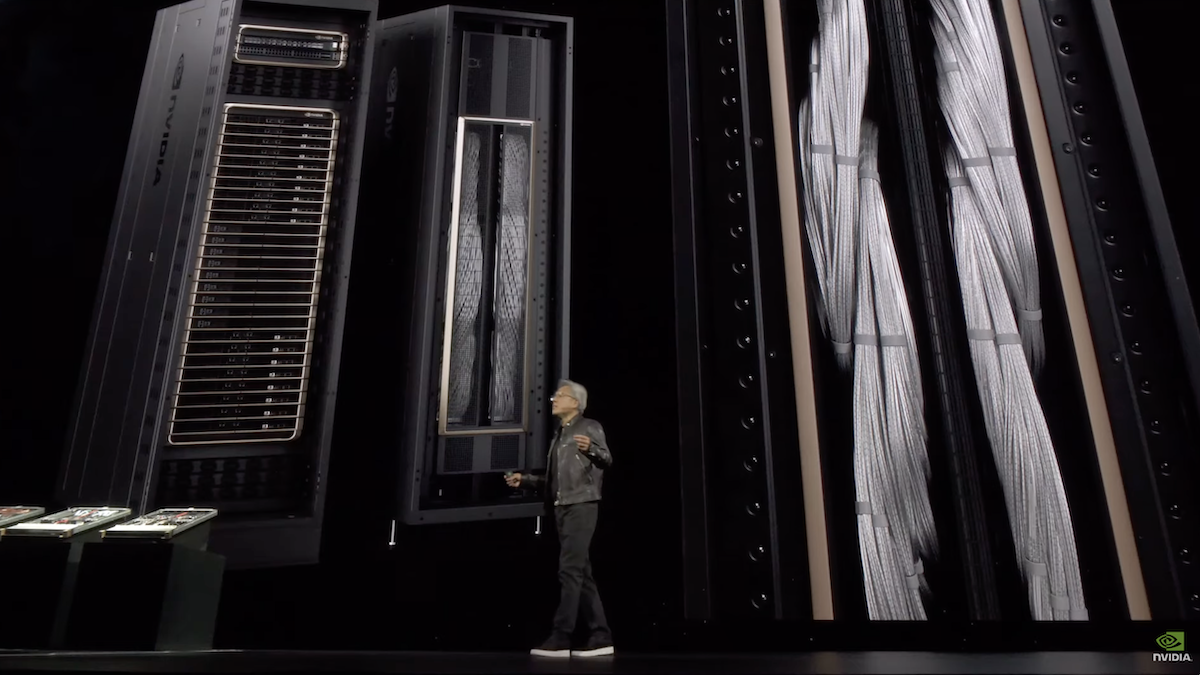

The entire rack draws 120 kW and is liquid-cooled.

Now let's see what it looks like in operation. Let's imagine how we put this to work, and what that means. If you were to train a GPT model, a 1.8-trillion-parameter model, it apparently took about 3 to 5 months or so with 25,000 Ampere GPUs. If we were to do it with Hopper, it would probably take something like 8,000 GPUs and it would consume 15 megawatts. 8,000 GPUs on 15 MW: it would take 90 days, about 3 months, and that would allow you to train this groundbreaking AI model. This is obviously not as expensive as anybody would think, but 8,000 GPUs is still a lot of money.

So, 8,000 GPUs and 15 megawatts. If you were to use Blackwell to do this, it would only take 2,000 GPUs. 2,000 GPUs, the same 90 days, but this is the amazing part: only 4 MW of power. So from 15 down to 4, that's right. And that's our goal: to continuously drive down the cost and the energy associated with the computing (they're directly proportional to each other) so that we can continue to expand and scale up the computation that we have to do to train the next-generation models.
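To make the comparison concrete, here is a minimal sketch of the arithmetic behind those figures. It uses only the numbers quoted in the talk (8,000 GPUs at 15 MW versus 2,000 GPUs at 4 MW, both for 90 days) and assumes the quoted power is drawn constantly for the whole run, which is a simplification. The function and variable names are illustrative, not from any NVIDIA tool.

```python
HOURS_PER_DAY = 24


def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in megawatt-hours, assuming constant power draw for the whole run."""
    return power_mw * days * HOURS_PER_DAY


# Figures as quoted in the talk; steady power draw is an assumption.
hopper = {"gpus": 8000, "power_mw": 15.0, "days": 90}
blackwell = {"gpus": 2000, "power_mw": 4.0, "days": 90}

hopper_mwh = training_energy_mwh(hopper["power_mw"], hopper["days"])
blackwell_mwh = training_energy_mwh(blackwell["power_mw"], blackwell["days"])

gpu_ratio = hopper["gpus"] / blackwell["gpus"]   # how many times fewer GPUs
energy_ratio = hopper_mwh / blackwell_mwh        # how many times less energy

# Facility power divided by GPU count (includes everything the quoted MW covers).
hopper_kw_per_gpu = hopper["power_mw"] * 1000 / hopper["gpus"]
blackwell_kw_per_gpu = blackwell["power_mw"] * 1000 / blackwell["gpus"]

print(f"Hopper:    {hopper_mwh:,.0f} MWh, {hopper_kw_per_gpu:.3f} kW per GPU")
print(f"Blackwell: {blackwell_mwh:,.0f} MWh, {blackwell_kw_per_gpu:.3f} kW per GPU")
print(f"{gpu_ratio:.2f}x fewer GPUs, {energy_ratio:.2f}x less energy")
```

Under these assumptions the run drops from 32,400 MWh to 8,640 MWh. The power per GPU is roughly the same in both cases, so the saving comes from needing a quarter as many GPUs for the same 90-day job.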

Learn more: NVLink and NVLink Switch

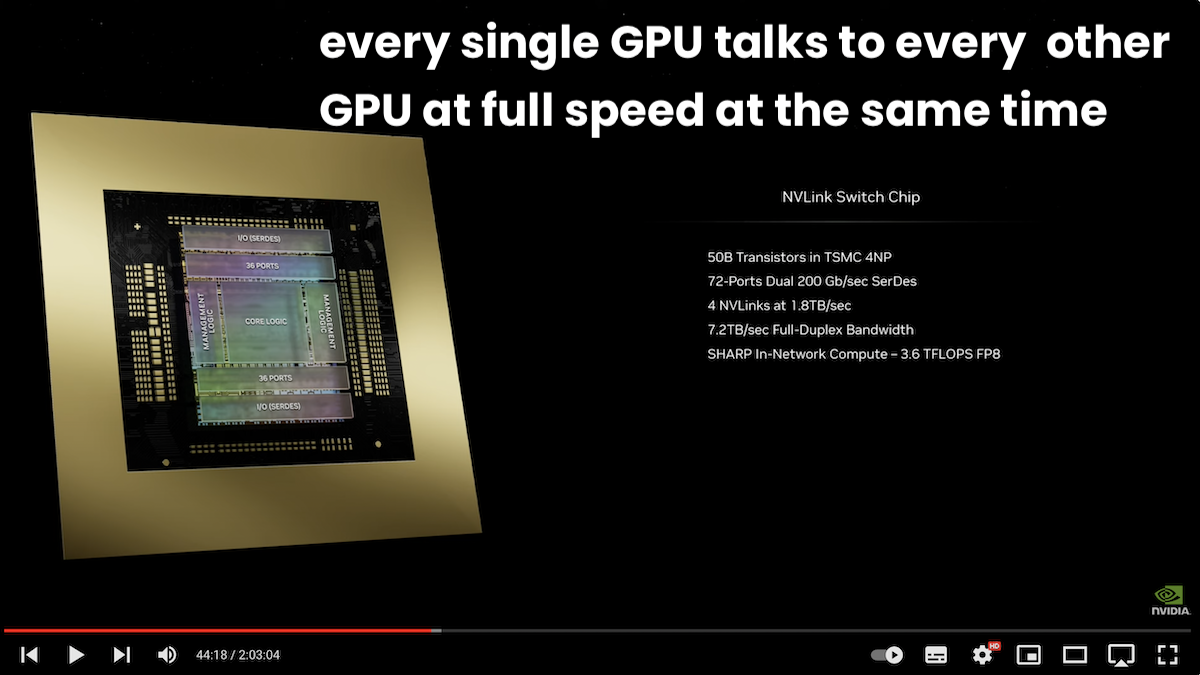

- The building blocks of high-speed, multi-GPU communication for feeding large datasets faster into models and rapidly exchanging data between GPUs.

- Maximize System Throughput with NVIDIA NVLink: Fifth-generation NVLink vastly improves scalability for larger multi-GPU systems. A single NVIDIA Blackwell Tensor Core GPU supports up to 18 NVLink 100 gigabyte-per-second (GB/s) connections for a total bandwidth of 1.8 terabytes per second (TB/s), which is 2X more bandwidth than the previous generation and over 14X the bandwidth of PCIe Gen5. Server platforms like the GB200 NVL72 take advantage of this technology to deliver greater scalability for today's most complex large models.

- Raise GPU Throughput With NVLink Communications. NVLink Switch Chip: Fully Connect GPUs With NVIDIA NVLink and NVLink Switch. NVLink is a 1.8 TB/s bidirectional, direct GPU-to-GPU interconnect that scales multi-GPU input and output (IO) within a server. The NVIDIA NVLink Switch chips connect multiple NVLinks to provide all-to-all GPU communication at full NVLink speed within a single rack and between racks. To enable high-speed, collective operations, each NVLink Switch has engines for NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ for in-network reductions and multicast acceleration.
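The NVLink bandwidth figures above can be checked with a few lines of arithmetic. The sketch below takes the link count and per-link rate from the text. The PCIe Gen5 number is an assumption not stated in the source: a x16 link at roughly 64 GB/s per direction, so about 128 GB/s bidirectional.

```python
# From the text: up to 18 NVLink connections per Blackwell GPU, 100 GB/s each.
LINKS_PER_GPU = 18
GB_PER_S_PER_LINK = 100

# Assumption (not in the source): PCIe Gen5 x16 at ~64 GB/s per direction,
# i.e. ~128 GB/s counted bidirectionally, matching how NVLink is quoted.
PCIE_GEN5_X16_BIDIR_GB_S = 128

nvlink_total_gb_s = LINKS_PER_GPU * GB_PER_S_PER_LINK       # per-GPU total
nvlink_total_tb_s = nvlink_total_gb_s / 1000

# "2X more bandwidth than the previous generation" implies half this figure.
previous_gen_gb_s = nvlink_total_gb_s / 2

pcie_ratio = nvlink_total_gb_s / PCIE_GEN5_X16_BIDIR_GB_S

print(f"NVLink per GPU: {nvlink_total_gb_s} GB/s = {nvlink_total_tb_s} TB/s")
print(f"Implied previous generation: {previous_gen_gb_s:.0f} GB/s")
print(f"Ratio to PCIe Gen5 x16 (assumed): {pcie_ratio:.2f}x")
```

With that PCIe assumption the ratio comes out just above 14, consistent with the "over 14X" claim.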

Learn more:

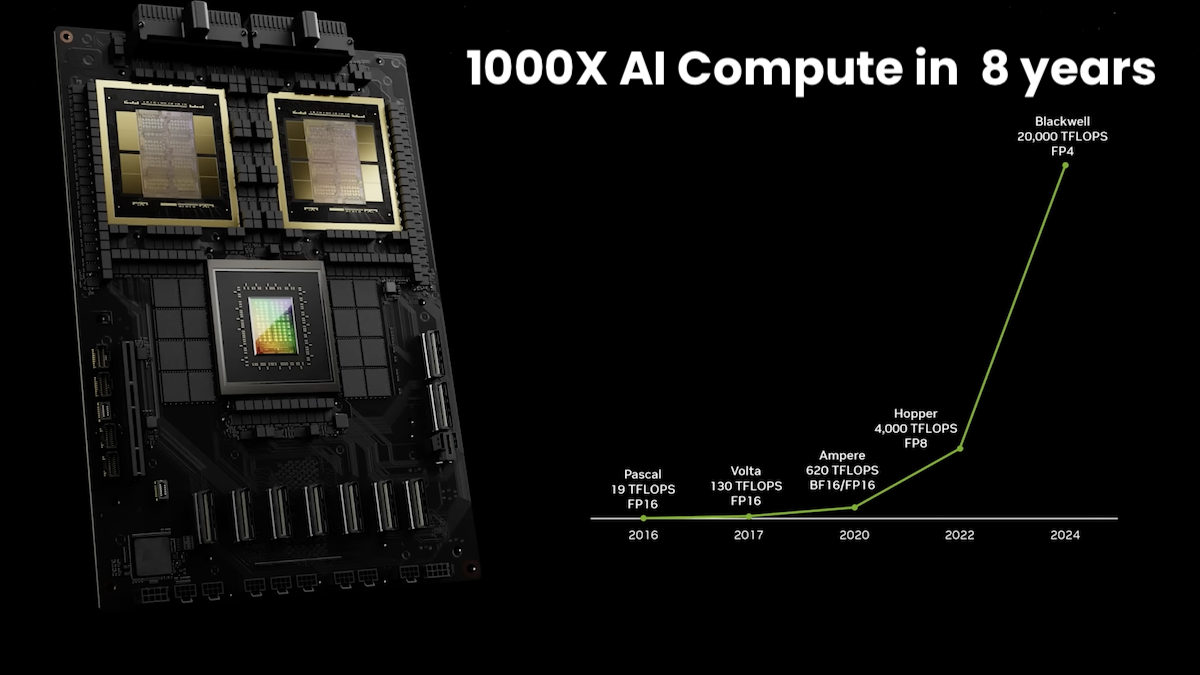

- NVIDIA Blackwell Architecture: The Engine of the New Industrial Revolution.

- A New Class of AI Superchip: Blackwell-architecture GPUs pack 208 billion transistors and are manufactured using a custom-built TSMC 4NP process. All Blackwell products feature two reticle-limited dies connected by a 10 terabyte-per-second (TB/s) chip-to-chip interconnect in a unified single GPU.

- NVIDIA GB200 NVL72

- NVIDIA GB200 NVL72 Delivers Trillion-Parameter LLM Training and Real-Time Inference

- Upgrading Multi-GPU Interconnectivity with the Third-Generation NVIDIA NVSwitch

- NVIDIA Confidential Computing: Secure data and AI models in use.

- NVIDIA Tensor Cores: Unprecedented Acceleration for Generative AI

- The Modern AI Data Center. Learn how IT leaders are scaling and managing their data center to readily adopt NVIDIA AI.

- Accelerated Computing for Modern Applications

- NVIDIA NGC: AI Development Catalog

- Harness Generative AI Engineered for Your Enterprise: Discover production-ready APIs that run anywhere with NVIDIA NIM.

- NVIDIA Healthcare Launches Generative AI Microservices to Advance Drug Discovery, MedTech and Digital Health