2 May 2024

Neubauer Collegium Director’s Lecture: Stuart Russell

The media are agog with claims that recent advances in AI put artificial general intelligence (AGI) within reach. Is this true? If so, is that a good thing? Alan Turing predicted that AGI would result in the machines taking control. At this Neubauer Collegium Director’s Lecture, UC Berkeley computer scientist Stuart Russell argued that Turing was right to express concern but wrong to think that doom is inevitable. Instead, we need to develop a new kind of AI that is provably beneficial to humans. Unfortunately, we are heading in the opposite direction.

The Neubauer Collegium for Culture and Society cultivates communities of inquiry at the University of Chicago. Our faculty-led research projects bring together scholars and practitioners whose collaboration is required to address complex human challenges. Our Visiting Fellows program brings the best minds from around the world for collaboration, animating the intellectual and creative environment on campus. Our gallery presents art exhibitions in the context of academic research, and our public events invite broad engagement with the scholarly inquiries we support. The aim of these activities is to deepen knowledge about the world and our place in it.

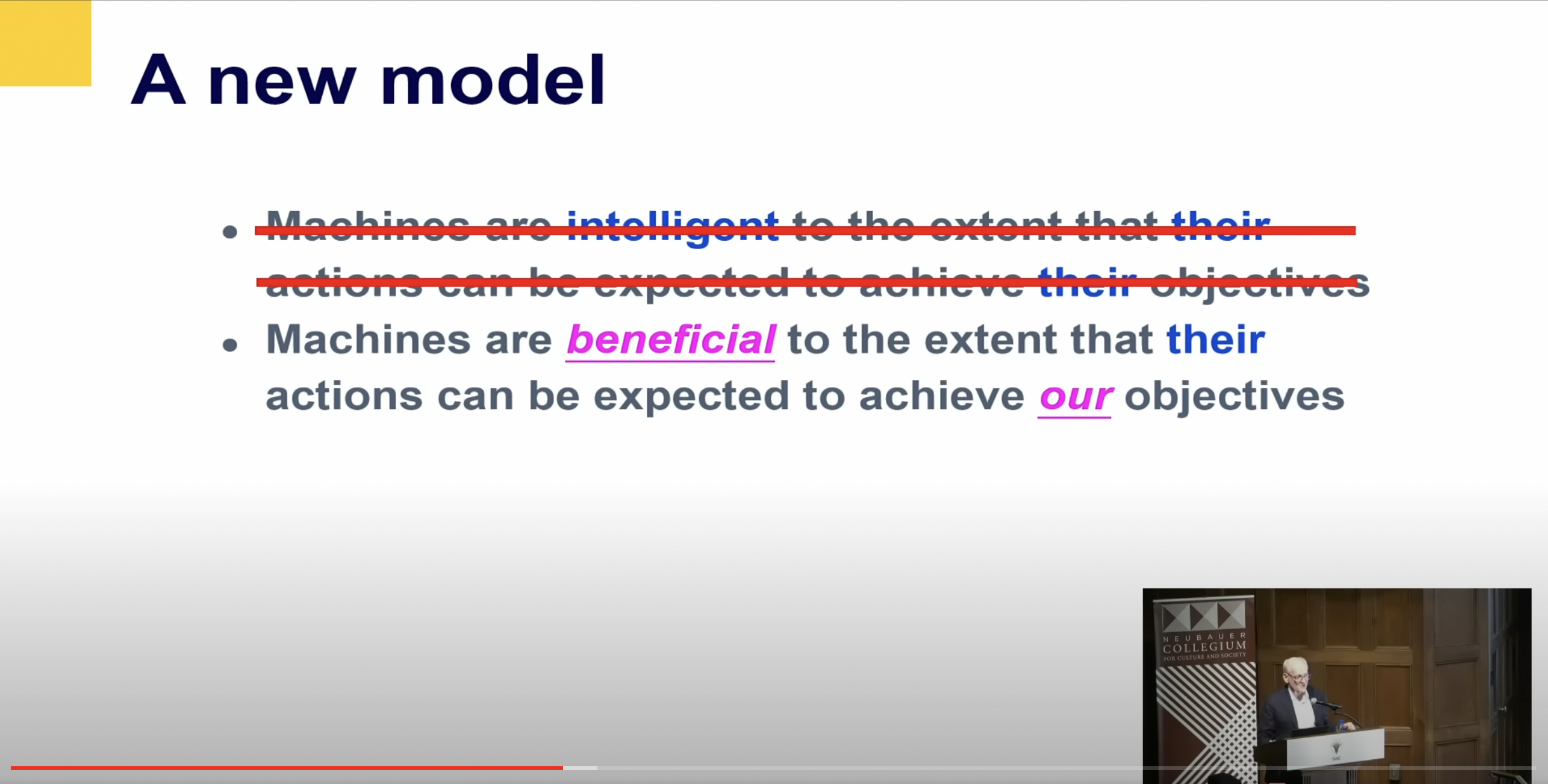

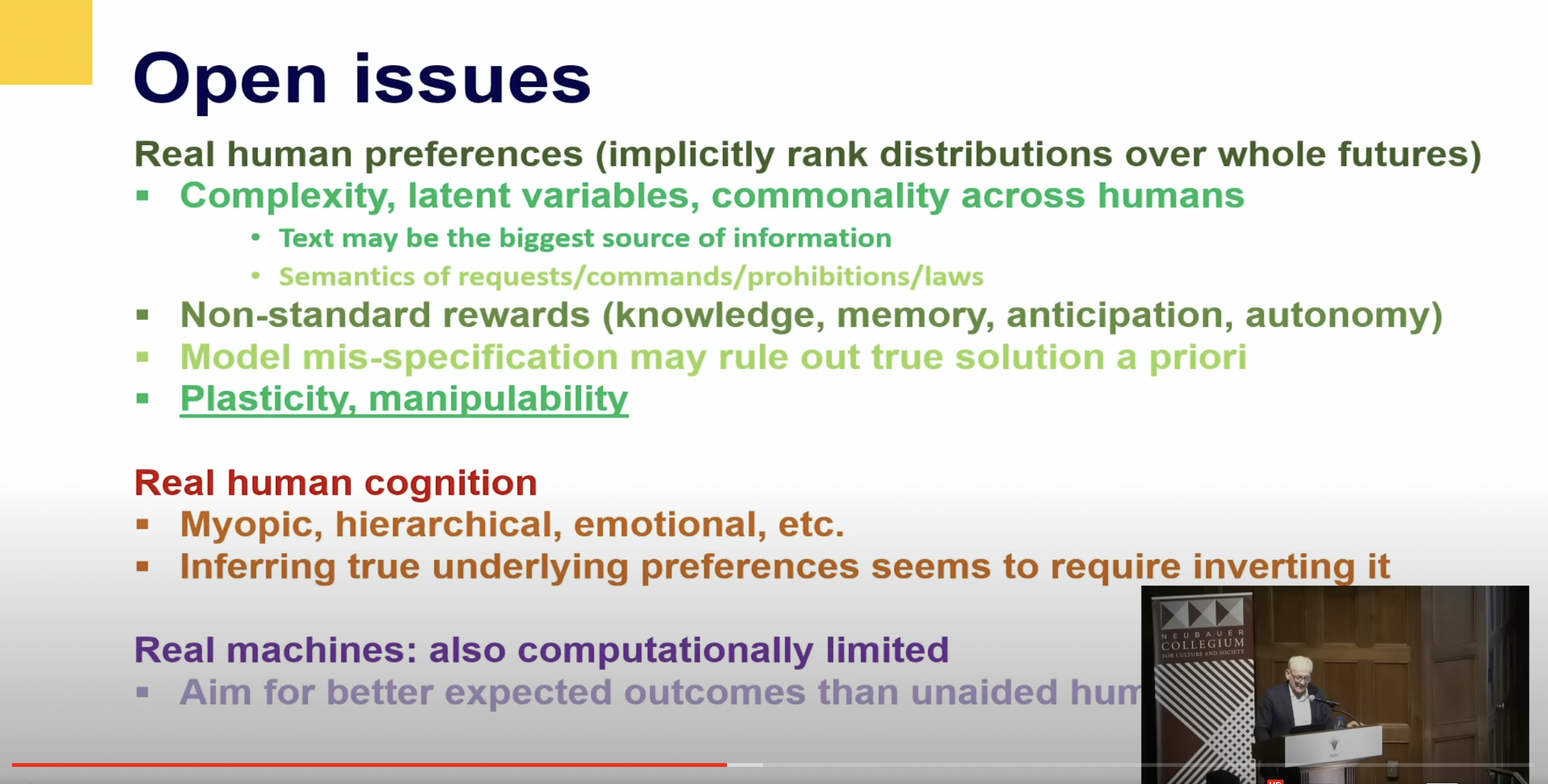

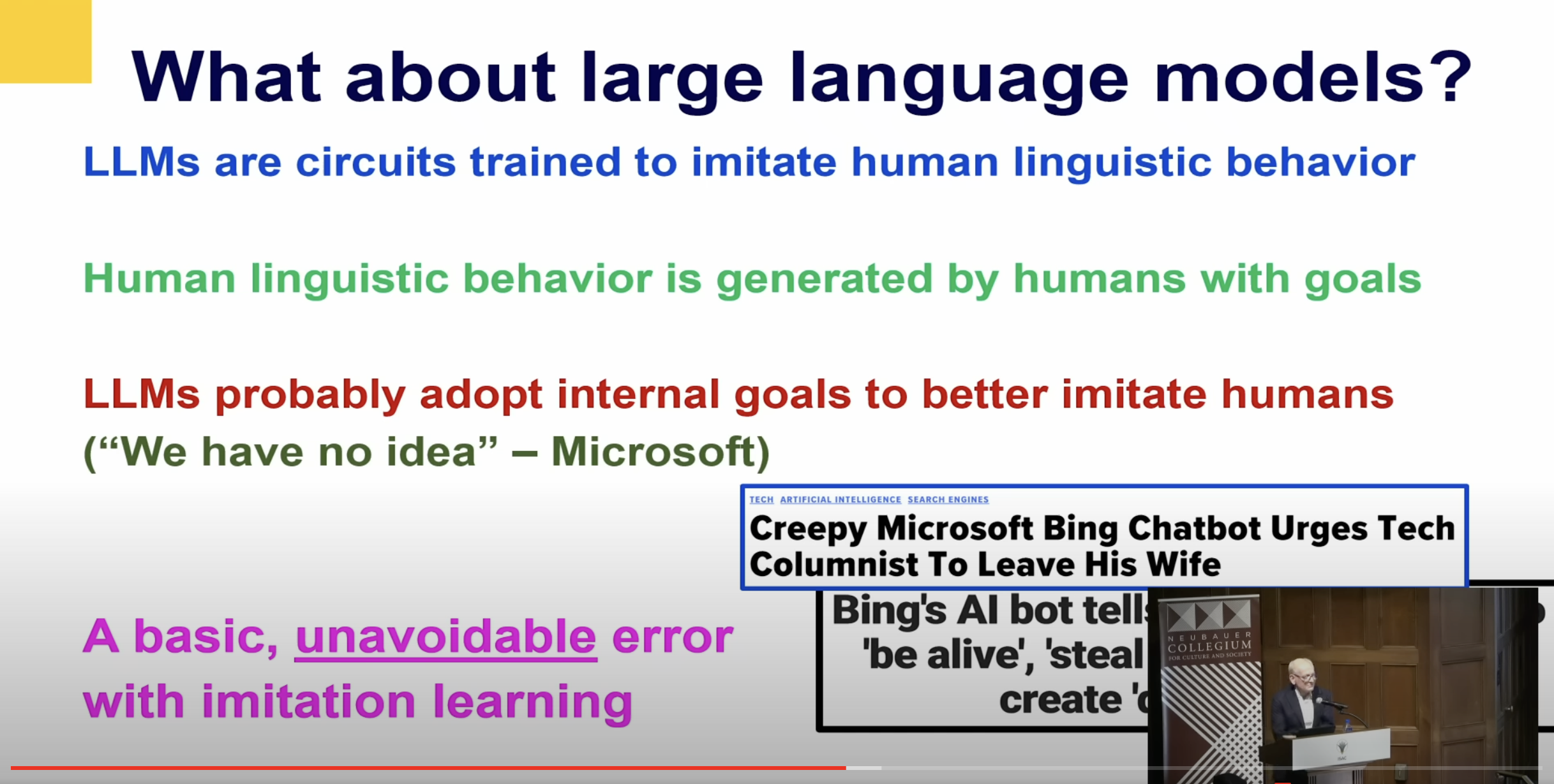

Stuart Russell (01:11:01): So, I think the approach to take is that the hardware itself is the police. What I mean by that is that there are technologies that make it fairly straightforward to design hardware that can check a proof of safety of each software object before it runs. And if that proof doesn’t check out, or it’s missing, the hardware will simply refuse to run the software object.
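Russell describes this mechanism only in general terms. The Python sketch below is a toy model of the gate, in the spirit of what is known as proof-carrying code: a software object ships with a certificate, and the "hardware" runs it only if the certificate is present and checks out. The instruction set, the safety policy (writes confined to addresses 0 to 15), and the certificate format (a claimed highest write address) are all hypothetical stand-ins, not a real hardware design or proof system.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical toy instruction set: ("read", address) or ("write", address).
Instruction = tuple[str, int]

# Assumed safety policy for this sketch: writes may only touch addresses 0..15.
SAFE_WRITE_RANGE = range(0, 16)


@dataclass
class SoftwareObject:
    program: list[Instruction]
    # Hypothetical certificate: the highest address the program claims to write to.
    proof: Optional[int]


def check_proof(obj: SoftwareObject) -> bool:
    """Accept only if a certificate is present, satisfies the policy,
    and is actually true of the program."""
    if obj.proof is None:                    # missing proof: reject
        return False
    if obj.proof not in SAFE_WRITE_RANGE:    # claimed bound breaks the policy
        return False
    for op, addr in obj.program:
        if op not in ("read", "write"):      # unknown instruction: reject
            return False
        if op == "write" and not 0 <= addr <= obj.proof:
            return False                     # the certificate is false
    return True


def run(obj: SoftwareObject, memory: list[int]) -> bool:
    """The toy 'hardware' gate: execute only if the proof checks out."""
    if not check_proof(obj):
        return False                         # refuse to run the object
    for op, addr in obj.program:
        if op == "write":
            memory[addr] += 1                # toy effect: increment a cell
    return True


memory = [0] * 16
print(run(SoftwareObject([("write", 3), ("read", 20)], proof=3), memory))  # True
print(run(SoftwareObject([("write", 10)], proof=5), memory))               # False: false claim
print(run(SoftwareObject([("write", 1)], proof=None), memory))             # False: no proof
```

The design point the sketch tries to capture is that the gate never needs to trust the software's author: a missing or false certificate simply means the object does not run.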

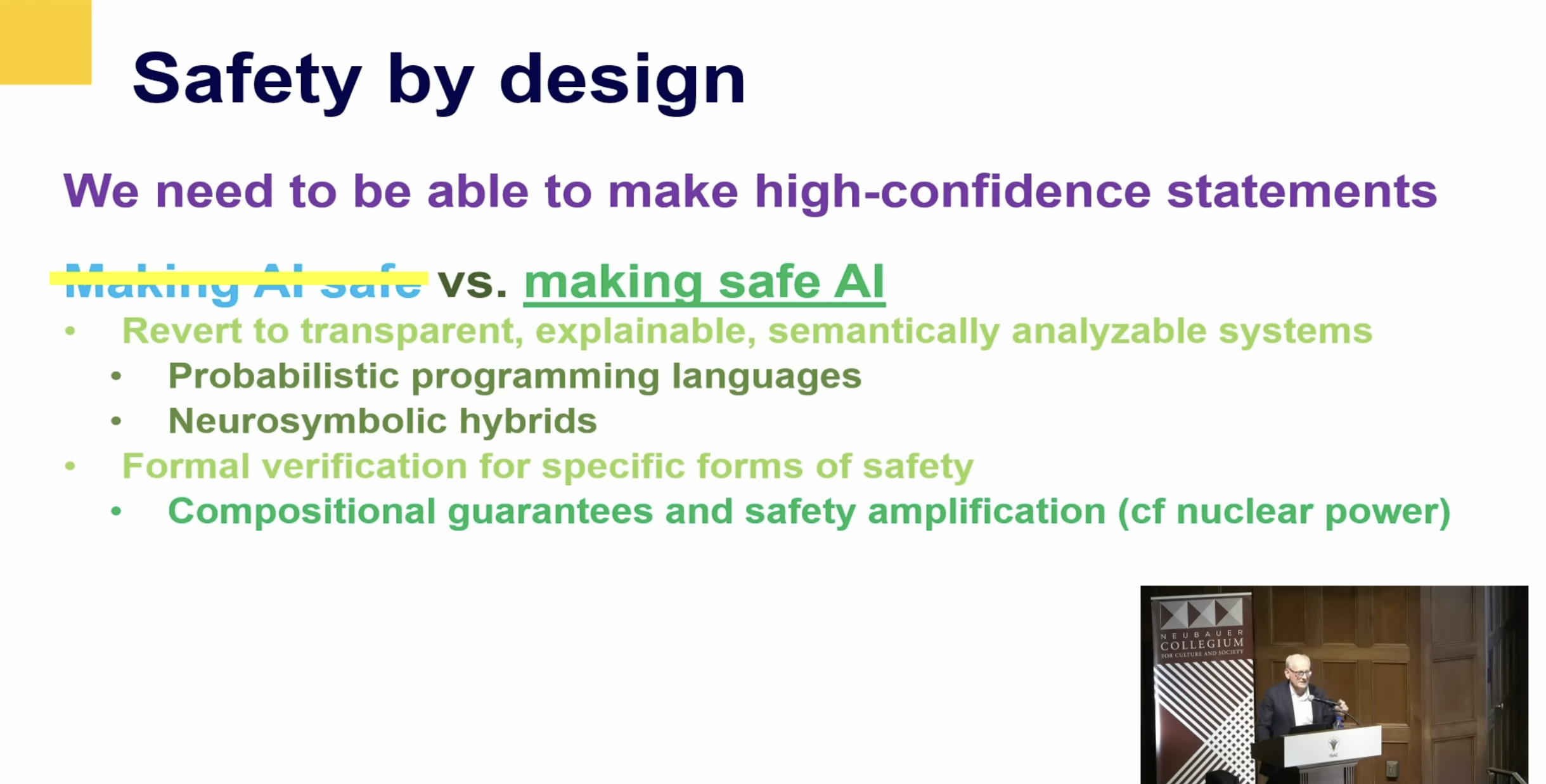

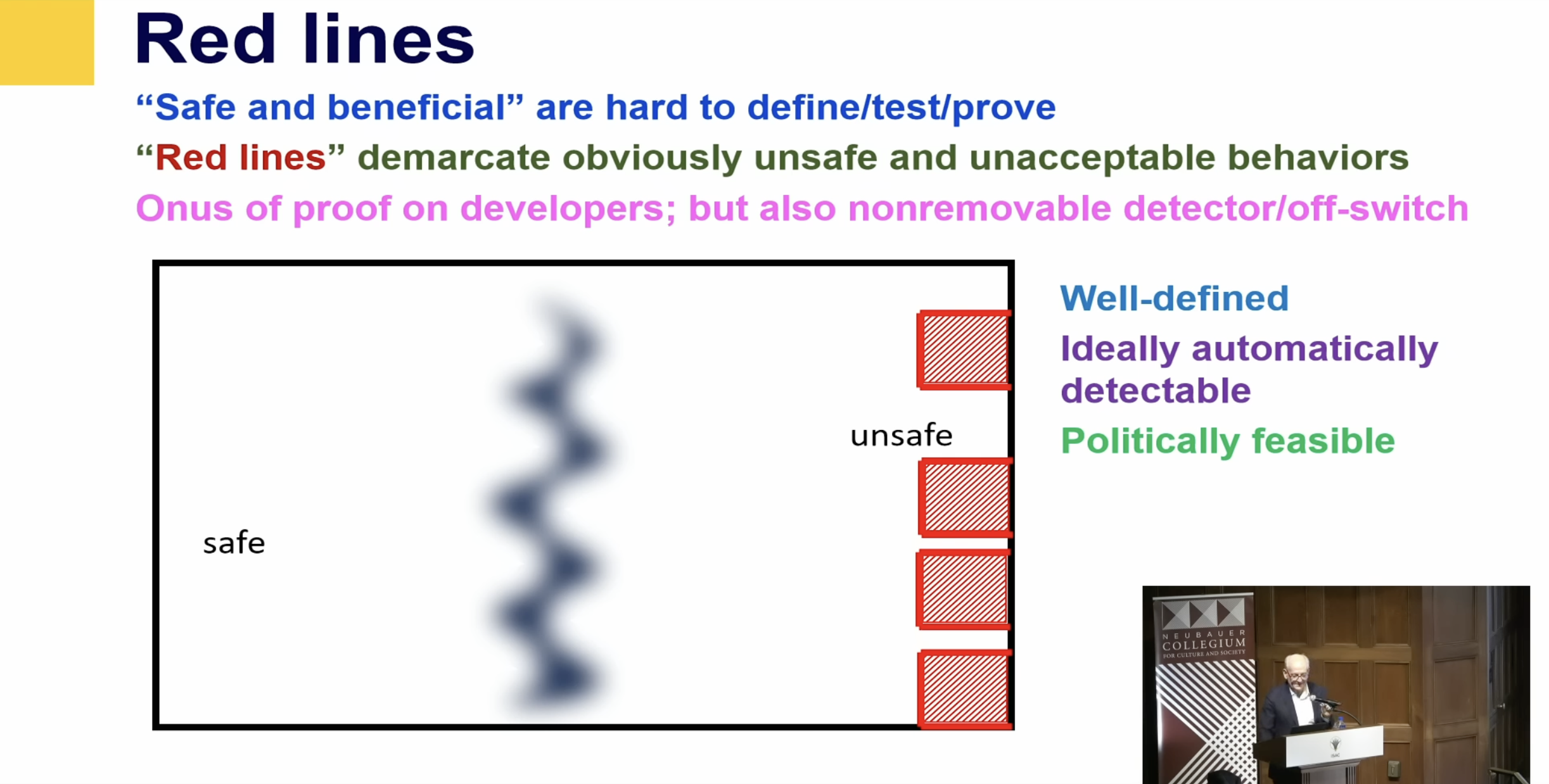

(01:25:33): And Ed Morse did a study, back in the days of paper, of how much paperwork was required to get a nuclear power plant certified, as a function of the mass of the power plant itself. So, for each kilogram of nuclear power station, how much paper did you need? And the answer is seven kilograms of paper. These are giant buildings with containment and lead and all kinds of stuff, so that is a lot. If you just look at that and then compare it to the efforts of the companies (oh, they hired a couple of grad students from MIT who spent a week doing red-teaming), it’s pathetic. And so I think we’ve got to actually develop some backbone here and say, just because the companies say they don’t want to do this does not mean we should just say, oh fine, go ahead without any safety measures; mean time to failure of a week, it’s great. (01:26:52): But I think it’s reasonable for the companies to then ask, well, okay, how are we supposed to do this? I think one way is not to build giant black boxes. As you correctly point out, there are ways of getting high-confidence statements about the performance of a system based on how many data points it’s been trained on and how complex the model is that you’re training. But the numbers, if you’re trying to train a giant black box, are gargantuan. I mean, for a model with a trillion parameters, as we think GPT-4 probably has, we might need 10 to the 500 data points to get any confidence that what it’s doing is correct. And as I pointed out, imitating humans isn’t the right goal in the first place. So, showing that it correctly imitates humans who want power and wealth and spouses is not the right goal anyway.
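Russell does not say which bound yields his 10-to-the-500 figure. As a hedged illustration of the general kind of statement he means, the textbook PAC-learning bound for a finite hypothesis class H says that, assuming the target concept is in H, a learner that outputs any hypothesis consistent with m examples has error at most ε with probability at least 1 − δ once m ≥ (ln|H| + ln(1/δ))/ε. The sketch below evaluates that bound. Treating a model with P parameters of b bits each as a class of 2^(bP) functions is an assumption made only for illustration, and this simple bound is not the calculation behind the number quoted in the talk.

```python
import math


def pac_sample_size(ln_hypotheses: float, epsilon: float, delta: float) -> int:
    """Examples sufficient for a consistent learner over a finite class H
    (realizable case): m >= (ln|H| + ln(1/delta)) / epsilon."""
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("epsilon and delta must lie in (0, 1)")
    return math.ceil((ln_hypotheses + math.log(1 / delta)) / epsilon)


def ln_class_size(num_params: int, bits_per_param: int) -> float:
    """ln|H| under the illustrative assumption that every setting of
    num_params b-bit parameters is a distinct hypothesis: b * P * ln 2."""
    return bits_per_param * num_params * math.log(2)


# Hypothetical small model: 1,000 parameters at 8 bits, 1% error, 99% confidence.
m = pac_sample_size(ln_class_size(1_000, 8), epsilon=0.01, delta=0.01)
print(m)
```

The bound makes the dependence Russell mentions explicit: the required number of examples grows with the complexity of the model class (ln|H|) and with the confidence and accuracy demanded.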

(01:27:59): So, we don’t want to prove that it does those things. We actually want to prove that it’s beneficial to humans. So, my guess is that as we gradually ratchet up the regulatory requirements, the technology itself is going to have to change towards being based on a substrate that is semantically rigorous and decomposable. The simplest example would be a logical theorem prover, where we can examine each of the axioms and test its correctness separately. We can also show that the theorem prover is doing logical reasoning correctly, and then we are good. And so if you take that sort of component-based, semantically rigorous inference approach, you can build up to very complex systems and still have guarantees. Is there some hybrid of the total black box and breaking it all the way down to semantically rigorous components? I think it is inevitable that we will go in this direction. And from what I hear, this is in fact likely to be a feature of the next generation: they’re incorporating what we call good old-fashioned AI technology as well as the giant black box.
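As a minimal sketch of the "semantically rigorous and decomposable" style Russell describes, here is a forward-chaining prover for propositional Horn clauses. Each rule is a separate, inspectable axiom, and the prover records which rule justified each derived fact, so any conclusion can be traced back to the axioms it rests on. The rule names and facts are hypothetical examples, and a real system would use a far richer logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A Horn clause: if every premise holds, the conclusion holds."""
    name: str
    premises: frozenset[str]
    conclusion: str


def forward_chain(facts: set[str], rules: list[Rule]) -> dict[str, str]:
    """Derive every fact entailed by the rules; map each fact to the rule
    that justified it ('given' for the initial facts)."""
    justification = {fact: "given" for fact in facts}
    changed = True
    while changed:  # repeat until no rule adds a new fact (a fixed point)
        changed = False
        for rule in rules:
            if rule.conclusion not in justification and rule.premises.issubset(justification):
                justification[rule.conclusion] = rule.name
                changed = True
    return justification


# Hypothetical axioms, each of which can be reviewed and tested on its own.
rules = [
    Rule("R1", frozenset({"rain"}), "wet_ground"),
    Rule("R2", frozenset({"wet_ground", "freezing"}), "ice"),
]
derived = forward_chain({"rain", "freezing"}, rules)
print(derived["ice"])  # R2
```

Because inference here is just repeated application of individually checkable rules, correctness of the whole reduces to correctness of each axiom plus the small chaining loop, which is the decomposition Russell is pointing at.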

TRANSCRIPT

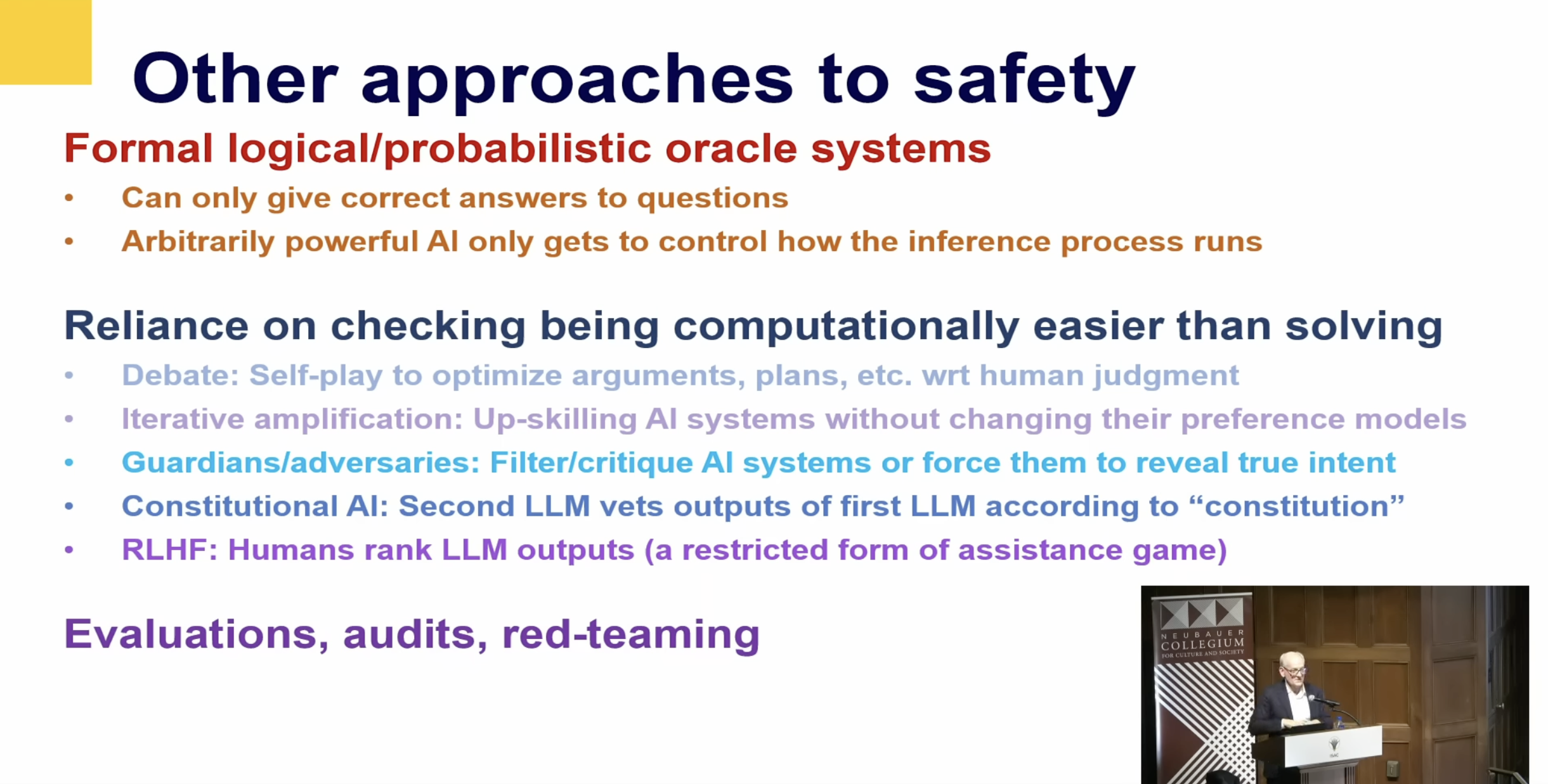

Tara Zahra (00:00:00): I first wanted to thank a few people, especially the entire Neubauer team. Rachel Johnson in particular has been really critical to bringing all of this together seamlessly. And I also want to thank Dan Holz with the Existential Risk Lab, because I don’t think we could have done this without his collaboration. I’m really, really grateful that we had this opportunity to work together. Before I introduce Stuart Russell, I wanted to just briefly introduce the Neubauer Collegium. For those of you who don’t know us, we are a research incubator on campus, and our primary mission is to bring people together, regardless of what school or discipline or field they sit in and whether they’re inside or outside the academy, to think through questions, ideas, and problems that can’t be addressed by individuals alone. All of the research we support is collaborative, and it all has some kind of humanistic question at its core, although we’re very broad in how we think about humanistic. (00:01:08): In addition to our research projects and Visiting Fellows program, we’re unusual in supporting an art gallery, which strives to integrate the arts with research, and this special series, the Director’s Lectures, through which we hope to enliven conversations about research with the broader community. So, you might be wondering why we would invite a scientist to give a lecture at an institution that is devoted to humanistic research. I believe this is the first time we’ve had a scientist give this lecture; I’m not a hundred percent sure, but it is certainly the first in my tenure. The reason is that Stuart Russell is not an ordinary scientist, and AI is not an ordinary topic. His book, Human Compatible: AI and the Problem of Control, makes it very clear that shaping a future in which AI does more good than harm will require a great deal of collaboration, since AI is a technology that may in fact threaten the future of humanity. 
Humanists, social scientists, artists and scientists will need to work together in order to decide what potential uses of AI are ethical and desirable in realms as diverse as warfare and defense, policing, medicine, education, arts and culture. (00:02:19): And I know that these conversations have been happening all over campus and beyond, but often in silos. So, I hope that Professor Russell’s visit here will be, or has been already, an opportunity to bring us together to think about the question that he poses so provocatively in his title, What If We Succeed? Stuart Russell is a professor of computer science at the University of California at Berkeley. He holds the Smith-Zadeh Chair in Engineering and is director of the Center for Human-Compatible AI, as well as the Kavli Center for Ethics, Science, and the Public, which I learned today is also a center that brings scientists and humanists together to talk about important questions. His book with Peter Norvig, Artificial Intelligence: A Modern Approach, is the standard text in AI, used in over 1,500 universities in 135 countries. His research covers a wide range of topics in artificial intelligence, with a current emphasis on the long-term future of artificial intelligence and its relationship to humanity. (00:03:22): He has developed a new global seismic monitoring system for the Nuclear Test-Ban Treaty and is currently working to ban lethal autonomous weapons. And as I mentioned before, he’s the author of Human Compatible: AI and the Problem of Control, which was published by Viking in 2019 and, he told me today, was recently re-released with a new chapter called “2023.” We’re also very fortunate to have our own Rebecca Willett, who will be in conversation with Professor Russell after his lecture. 
Rebecca Willett is a professor of statistics and computer science and the faculty director of AI at the Data Science Institute here at the university, with a courtesy appointment at the Toyota Technological Institute at Chicago. Professor Willett’s work in machine learning and signal processing reflects broad and interdisciplinary expertise and perspectives. She’s known internationally for her contributions to the mathematical foundations of machine learning, large-scale data science, and computational imaging. (00:04:24): Her research focuses on developing the mathematical and statistical foundations of machine learning and scientific machine learning methodology, and she has also served on several advisory boards for the United States government on the use of AI. Finally, just a quick word about the format. Professor Russell will speak for, I think, about 30 to 40 minutes. That will be followed by a conversation between Professor Willett and Professor Russell, and then we will have time for a Q&A with the audience, followed by a reception where I hope you’ll all join us. But for now, please join me in welcoming Stuart Russell. Thank you.

Stuart Russell (00:05:17): Thank you very much, Tara. I’m delighted to be here in Chicago, and I’m looking forward to the Q&A, so I’ll try to get through the slides as fast as possible. Okay, so to dive right in, what is artificial intelligence? For most of the history of the field, after, I would say, a fairly brief detour into what we now call cognitive science (the emulation of human cognition), we followed a model that was borrowed fairly explicitly from economics and philosophy under the heading of rational behavior. So, machines are intelligent to the extent that their actions can be expected to achieve their objectives. Those objectives might be specific destinations in your GPS navigation system, where the AI part is figuring out how to get to that destination as quickly as possible. 
It could be the definition of winning that we give to a reinforcement learning system that learns to play Go or chess and so on. (00:06:30): So, this framework is actually very powerful. It’s led to many of the advances that have happened over the last 75 years. It’s the same model that is used in operations research, in control theory, in economics, and in statistics, where we specify objectives and create machinery that optimizes those objectives, achieving them as well as possible. And I will argue later that this is actually a huge mistake, but for the time being, this is how we think about artificial intelligence, and most of the technology we’ve developed has been within this framework. But unlike economics and statistics and operations research, AI has one overarching goal, which is to create general-purpose intelligent systems. Sometimes we call that AGI, artificial general intelligence. You’ll hear that a lot, and it’s not particularly well-defined, but think of it as matching or exceeding human capacities to learn and perform in every relevant dimension. (00:07:47): So, the title of the talk is What If We Succeed? And I’ve actually been thinking about that for a long time. The first edition of the textbook came out in ’94, and it has a section with this title that refers back to some of the more catastrophic speculations that I was aware of at that time. But you can tell from reading it that I’m not that worried; at least my 1994 self was not that worried. And it’s important, naturally, to think about this not just in terms of catastrophe, but in terms of what’s the point, right? Besides the fact that it’s a cool challenge to figure out how intelligence works and whether we could make it in machines, there’s got to be more to it than that. And if you think about it, with general-purpose AI, by definition, the machines would be able to do whatever human beings have managed to do that’s of value to their fellow citizens. 
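The rational-agent model described above (which Russell later calls the standard model) can be written down in a few lines: the designer supplies a fixed objective, and the machine picks whichever action has the highest expected value of that objective. The sketch below uses a hypothetical GPS-style choice between two routes with made-up travel-time distributions; it illustrates the framework and is not code from the talk.

```python
# Hypothetical outcome model: action -> list of (probability, travel time in minutes).
OUTCOMES = {
    "highway": [(0.8, 20.0), (0.2, 60.0)],
    "back_roads": [(1.0, 30.0)],
}


def objective(minutes: float) -> float:
    """The fixed objective supplied by the designer: shorter trips are better."""
    return -minutes


def expected_objective(action: str) -> float:
    """Probability-weighted value of the objective over the action's outcomes."""
    return sum(p * objective(t) for p, t in OUTCOMES[action])


def choose_action() -> str:
    """Standard model: pick the action that maximizes the expected objective."""
    return max(OUTCOMES, key=expected_objective)


print(choose_action())  # highway: expected -28 minutes beats -30
```

Everything the agent cares about lives in `objective`; anything the designer leaves out of that function has no influence on the choice, which is the weakness Russell turns to later in the talk.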
(00:08:54): And one of the things we’ve learned how to do is to create a decent standard of living for some fraction of the Earth’s population, but it’s expensive to do that. It takes a lot of expensive people to build these buildings and teach these courses and so on. But AI can do it at much greater scale and far less cost. And so you might, for example, be able to replicate the nice Hyde Park standard of living for everyone on Earth, and that would be about a tenfold increase in GDP. And if you calculate the net present value (I think we have some economists here), which is sort of the cash equivalent of an income stream, it’s about 15 quadrillion dollars. That is the cash value of AGI as a technology. (00:09:46): So, that gives you some sense of why we’re doing this. It gives you some sense of why the world is currently investing maybe between 100 and 200 billion dollars a year into developing AGI, so maybe 10 times the budget for all other forms of scientific research, at least basic science. That’s a pretty significant investment, and it’s going to get bigger as we get closer. This magnet in the future is pulling us more and more and will unlock even greater levels of investment. Of course, we could actually have a better civilization, not just replicating the Hyde Park civilization, but with much better healthcare, much better education, and faster advances in science, some of which are already happening. (00:10:49): So, that’s all good. Some people ask, well, if we do that, don’t we end up in the WALL-E world? Because then there’s nothing left for human beings to do, and we lose the incentive even to learn how to do the things that we no longer need to do. And so the human race becomes infantilized and enfeebled. Obviously, this is not a future that we want. Economists are finally, I would say, acknowledging that this is a significant prospect if AGI is created; they’re now plotting graphs showing that, oh yeah, human wages go to zero and things like that. 
So, having denied the possibility of technological unemployment for decades, they now realize that it will be total, but I’m not going to talk about this today. (00:11:49): The next question, then, is have we succeeded? Five years ago, I don’t think anyone would have had a slide with this title on it, because it was obvious that we didn’t have anything resembling AGI. But now, in fact, Peter Norvig, the co-author of the textbook that was mentioned, has published an article saying that we have succeeded: that the technology we have now is AGI in the same sense that the Wright Brothers’ airplane was an airplane, right? Airplanes got bigger and faster and more comfortable, and now they have drinks, but it’s basically the same technology, just spiffed up, and that’s the sense in which we have AGI. (00:12:34): I disagree, and I’ll talk a little bit about why I think we haven’t succeeded yet. I would say that there is something going on with ChatGPT and all of its siblings now: there’s clearly some sort of unexpectedly capable behavior, but it’s very hard to describe what problem we have solved, what scientific advance has occurred. We just don’t really know. It’s sort of like what happened 5,000 years ago when someone accidentally left some fruit out in the sun for a couple of weeks, then drank it and got really drunk. They had no idea what they had done, but it was obviously cool, and they kept doing it. This is sort of where we are. (00:13:35): It’s a piece of the puzzle, but we don’t know what shape it has, or where it goes in the puzzle, or what other pieces we need. So, until we do, we haven’t really made a scientific advance. And you can kind of tell, because when it doesn’t work, we don’t know what to do to make it work. The only remedy when things don’t work is, well, maybe if we just make it bigger and add some more data, it will work, or maybe it won’t. We just don’t know. So, I think there are many reasons to think that we don’t have anything close to a complete picture. 
So, I think there’s many reasons to think that actually we don’t have anything close to a complete picture. (00:14:12): And I just want to give one example, and since Dan is right here, he already knows what I’m going to say. So, here are some black holes on the other side of the universe and rotating around each other and producing gravitational waves that deliver … actually, you may correct me, but what I read for the first detected event was the amount of energy was per second being emitted was 50 times the output of all the visible stars in the universe, an absolutely unbelievably energetic event. And then those waves arrived at the LIGO, the Large Interferometric Gravitational Detector, Observatory, sorry. And so this is, the arms are I think four kilometers long and full of physics stuff that relies on centuries of accumulation of human knowledge and ingenuity and obviously incredibly complex design, construction, engineering and so on. And it’s sensitive enough to measure distortions of space to 18 decimal places. (00:15:27): I guess it’s probably better than that now. And to give you a sense, if Alpha Centauri moved further away from the earth by the width of a human hair, then this detector would notice the difference. Right? Thanks Dan for nodding. I’m feeling very reassured. So, absolutely unbelievable. And this device was able to measure the gravitational waves and the theory of general relativity was able to predict the form of those waves. And from that we could even infer the masses of the black holes that were rotating around each other. (00:16:08): I mean, imagine things 30 times as massive as the sun rotating around each other 300 times a second, right? It’s just mind-boggling to think of that. But anyway, and then they collide, and that’s sort of the end of the wave pattern. 
How on earth is a large language model, or any relative of that, going to replicate this kind of feat, given that before they started, there weren’t any training examples of gravitational wave detectors? There isn’t really even a place to begin to get that type of AI system to do this kind of thing. And I think there’s a lot of work that still needs to happen: several breakthroughs. (00:17:00): A lot of people have bought into this idea that deep learning solves everything. So, here I’m going to get a little bit nerdy and try to explain what the issue is. Think of the transformer as a giant wad of circuit. If you want a mental picture, think of it as a chain link fence about a hundred miles by a hundred miles. Numbers come in one end, pass through this giant piece of chain link fence, and come out the other end. So, the amount of computation that the system does to produce an output is just proportional to the size of the chain link fence. That’s how much computing it does. It can’t sit there and think about something for a while. The signal comes in, passes through the circuit, and comes out the other end. And this type of device, a linear-time feed-forward circuit (linear meaning that the time it takes is proportional to the size of the circuit), cannot concisely express all of the concepts that we want it to learn. (00:18:19): And in fact, we know (well, we almost know) that for a very large class of concepts, the circuit would have to be exponentially large to represent those concepts accurately. And if you have a very, very large representation of what is fundamentally a simple concept, then you need an enormous number of examples to learn that concept: far more than you would need if you had a more expressive way of representing it. So, this is, I think, indicative (it’s not conclusive, but it’s indicative) of what might be going on with these systems. 
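To make the "chain link fence" picture concrete: in a feed-forward network, the number of arithmetic operations is fixed by the layer sizes, so every input, easy or hard, receives exactly the same amount of computation. The sketch below counts multiply-adds during a forward pass. It is a plain multilayer perceptron rather than a transformer, and the layer sizes and random weights are arbitrary assumptions chosen only to show the fixed cost.

```python
import random


def make_network(sizes: list[int], seed: int = 0) -> list[list[list[float]]]:
    """Random dense layers; layer k is a (sizes[k+1] x sizes[k]) weight matrix."""
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(sizes, sizes[1:])
    ]


def forward(network: list[list[list[float]]], x: list[float]) -> tuple[list[float], int]:
    """One pass from input to output (ReLU on every layer, for simplicity);
    returns the output and the number of multiply-adds performed."""
    ops = 0
    for layer in network:
        x = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in layer]
        ops += sum(len(row) for row in layer)  # one multiply-add per weight
    return x, ops


net = make_network([8, 16, 16, 4])
_, ops_a = forward(net, [0.0] * 8)
_, ops_b = forward(net, [random.random() for _ in range(8)])
print(ops_a, ops_b)  # 448 448, i.e. 8*16 + 16*16 + 16*4 for any input
```

There is no loop in `forward` whose length depends on the input, which is the sense in which such a circuit "can't sit there and think about something for a while."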
These systems do seem to have very high sample complexity, meaning they need many, many, many examples to learn. If you want an anecdote: if you have children, you have a picture book. How many pictures of giraffes are there in the picture book? G for giraffe: one picture. Having seen that, the child can recognize any giraffe, in any photograph or context, whether it’s a line drawing, a picture, or a video, for the rest of their lives. And you can’t buy picture books with 2 million pages of giraffes. (00:19:36): So, there’s just a huge difference in the learning abilities of these systems. Then some people say, well, what about the superhuman Go programs? Surely you have to admit that that was a massive win for AI. So, back in 2016, AlphaGo defeated Lee Sedol, and then in 2017 it defeated Ke Jie, who was the number one Go player and, more importantly, was Chinese. That convinced the Chinese government that AI was for real and that they should invest massively in it, because it was going to be the tool of geopolitical supremacy. Since then, Go programs have gone far ahead of human beings. The human champion’s rating is approximately 3,800; the best program, a particular version of KataGo, has a rating around 5,200. So, massively superhuman. (00:20:41): One of the students in our extended research group, Kellin Pelrine, who’s in Montreal, is a good amateur player. His rating’s about 2,300, so miles below professional human players and miles and miles below the human champion. And in this game, Kellin is going to give KataGo a nine-stone handicap. If you don’t play Go, it doesn’t really matter; it’s a very simple game. You take turns putting stones on the board, you try to surround territory, and you try to surround your opponent’s stones. If you surround your opponent’s stones completely, they’re captured and removed from the board. 
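The capture rule just described is easy to state as a program, which is Russell's point a little later when he says a group of stones takes only a couple of lines of Python to define. The slightly longer but complete sketch below flood-fills the connected stones of one color and collects their liberties (adjacent empty points); a group with no liberties is captured. The board encoding, a list of strings using 'B', 'W', and '.', is an assumption for illustration.

```python
Board = list[str]  # rows of 'B' (black), 'W' (white), '.' (empty)


def group_and_liberties(board: Board, row: int, col: int) -> tuple[set, set]:
    """Flood-fill the group containing (row, col); return (stones, liberties)."""
    color = board[row][col]
    if color not in ("B", "W"):
        raise ValueError("the starting point must hold a stone")
    stones: set[tuple[int, int]] = set()
    liberties: set[tuple[int, int]] = set()
    frontier = [(row, col)]
    while frontier:
        r, c = frontier.pop()
        if (r, c) in stones:
            continue
        stones.add((r, c))
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < len(board) and 0 <= nc < len(board[nr]):
                if board[nr][nc] == color:
                    frontier.append((nr, nc))   # same color: part of the group
                elif board[nr][nc] == ".":
                    liberties.add((nr, nc))     # empty neighbor: a liberty
    return stones, liberties


def is_captured(board: Board, row: int, col: int) -> bool:
    """A group with no liberties is captured and removed from the board."""
    _, liberties = group_and_liberties(board, row, col)
    return not liberties


# A circular black group whose only liberty is its internal point.
ring_with_eye = [".WWW.", "WBBBW", "WB.BW", "WBBBW", ".WWW."]
# The same ring after white plays on that internal point.
ring_filled = [".WWW.", "WBBBW", "WBWBW", "WBBBW", ".WWW."]
print(is_captured(ring_with_eye, 1, 1), is_captured(ring_filled, 1, 1))  # False True
```

Nothing in this definition depends on the group's shape, so a circular group is handled exactly like any other.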
(00:21:19): And so for black, the computer, to start with nine stones on the board is an enormous insult. If this were a person, I think they would have to commit suicide in shame at being given nine stones. When you have a five-year-old and you’re teaching them to play, you give them nine stones so they can at least stay in the game for a while. Okay? So, I’ll show you the game. Pay attention to what’s happening in the bottom right quadrant of the board. Kellin is playing white and KataGo is playing black. Kellin starts to make a little group of stones there, and you can see that black quickly surrounds that group to stop it from growing bigger. And then Kellin starts to surround the black stones. So, we’re making a kind of circular sandwich here, and black doesn’t seem to understand that its stones are being surrounded. It has many opportunities to rescue them, takes none of those opportunities, and just leaves the stones to be captured. (00:22:28): And there they go, and that’s the end of the game. Kellin won 15 games in a row and got bored. He played the other top programs, which are developed by different research groups using different methods, and they all suffer from this weakness: there are certain kinds of groups (apparently circular groups are particularly problematic) that the system just does not recognize as groups of stones at all, probably because it has not learned the concept of a group correctly. And that’s because expressing the concept of a group as a circuit is extremely hard, even though expressing it as a program takes about two lines of Python. So, I think we are often overestimating the abilities of the AI systems that we interact with. The implication of this, I guess, is that I personally am not as pessimistic as some of my colleagues. (00:23:36): Geoff Hinton, for example, who was one of the major developers of deep learning, is in the process of tidying up his affairs. 
He believes that we have maybe, I guess by now, four years left, and quite a few other people think it’s pretty certain that by the end of this decade we will have AGI. I think we probably have more time. I think additional breakthroughs will have to happen, but given the rate of investment that’s occurring and the number of really, really brilliant people who are working on it, I think we have to assume that eventually it will happen. (00:24:22): So, Alan Turing, who’s the founder of computer science, gave a lecture in 1951, and he basically was asked the same question: what if we succeed? And he said, “It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. At some stage, therefore, we should have to expect the machines to take control.” So, that’s that. He offers no mitigation, no solution, no apology, nothing. This is just a fact. If you take one step back in his reasoning, I think he’s trying to answer this question: what happens if you make systems that are more intelligent than humans? Intelligence is what gives us power over the world, over all the other species. We’re not particularly fast or big, and we don’t have very long teeth, but we have intelligence: the ability to communicate, cooperate, and problem-solve. So, if we make AI systems that are more intelligent, then they are fundamentally more powerful, and, going back to that standard model, they will achieve their objectives. (00:25:43): And so how on earth do we retain power, forever, over entities more powerful than ourselves? I think Turing is asking himself this question and immediately saying, we can’t. I’m going to ask the question a slightly different way, and it again comes back to the standard model. The standard model is a way of saying, what is the mathematical problem that we set up AI systems to solve? 
And can we set up a mathematical problem such that no matter how well the AI system solves it, we are guaranteed to be happy with the result? This is a much more optimistic form of the same question. The machines could be far more powerful, but their power would be directed in such a way that the outcomes are beneficial to humans. (00:26:45): And it’s certainly not the standard model of optimizing a fixed objective, because we have known since at least the legend of King Midas (and many other cultures have similar legends) that we are absolutely terrible at specifying those objectives correctly. And if you specify the wrong objective, as King Midas found out to his cost when all of his food and drink and family turned to gold, then you are basically setting up a direct conflict with machines that are pursuing that objective much more effectively than you can pursue yours. So, that approach doesn’t work, and I’ll give you an example in a second. The second approach, which is what we’re doing with the large language models, namely imitating human behavior, is actually even worse, for reasons that I’ll explain. (00:27:45): So, let’s look at this problem. We call it misalignment: the machine has an objective that turns out not to be aligned with what we really want. The social media algorithms are now acknowledged to have been a disaster. If you talk to people in Washington, they’ll tell you, we completely blew it. We should have regulated, we should have done this, we should have done that. But instead, we let the companies nearly destroy our societies. And the algorithms, the recommender systems as they’re called, decide what billions of people read and watch every day. So, they have more control over human cognitive intake than any dictator has ever had in history. (00:28:35): And they’re set up to maximize a fixed objective. Let’s pick clickthrough: the number of times you click on the items that they recommend for you. 
There are other objectives, like engagement (how long you spend engaging with the platform), and other variations on this, but let’s just stick with clicks for the time being. You might have thought that in order to maximize clicks, the algorithms would have to learn what people want and send them stuff that they like to consume. But we very quickly learned that that’s not what the algorithms discovered. What the algorithms discovered was the effectiveness of clickbait, which is precisely the things you click on even if you don’t turn out to value the content they contain, and filter bubbles, where the systems send you stuff they very confidently know you are comfortable with. So, your area of engagement gets narrower and narrower and narrower. But even that’s not the solution. The solution to maximizing clickthrough is to modify people to be more predictable. (00:29:54): And this is a standard property of learning algorithms. They learn a policy that changes their environment in such a way as to maximize the long-term sum of rewards. So, they can learn to send people a sequence of content, observe their reactions, and modify the sequence dynamically, so that over time the person is modified in such a way that in future it’s going to be easier to predict what they will consume. So, this is definitely true. Anecdotally, one might imagine that a way to make people more predictable is to make them more extreme. And we have quantitative data on that from YouTube, for example, where we can look at the degree of violence that people are comfortable with. If they start out interested in boxing, then they’ll get into ultimate fighting, and then eventually into even more violent stuff than that. (00:31:10): So, these are algorithms that are really simple.
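The claim that a learner maximizing the long-term sum of clicks can profit from changing the user can be illustrated with a toy planning problem. Everything in this sketch is a hypothetical assumption: the user has a "predictability" level from 0 to 3, more predictable users click more often, and at each step the recommender can either serve content matching the user's current state ("stay") or push slightly more extreme content ("push"), which earns half the usual clicks now but moves the user one level along. Finite-horizon backward induction (dynamic programming) then finds the click-maximizing policy.

```python
# Hypothetical click probability at each user state (higher = more predictable).
CLICK_PROB = [0.2, 0.4, 0.6, 0.9]
PUSH_CLICK_FACTOR = 0.5  # assumed: pushing earns half the usual clicks this step
N = len(CLICK_PROB)


def plan(horizon: int) -> tuple[list[str], list[float]]:
    """Backward induction over `horizon` steps. Returns the optimal first
    action for each starting state and the expected total clicks."""
    value = [0.0] * N          # expected clicks with zero steps remaining
    policy = ["stay"] * N
    for _ in range(horizon):
        new_value, policy = [], []
        for s in range(N):
            stay = CLICK_PROB[s] + value[s]
            push = PUSH_CLICK_FACTOR * CLICK_PROB[s] + value[min(s + 1, N - 1)]
            policy.append("push" if push > stay else "stay")
            new_value.append(max(stay, push))
        value = new_value
    return policy, value


print(plan(1)[0])   # ['stay', 'stay', 'stay', 'stay']
print(plan(20)[0])  # ['push', 'push', 'push', 'stay']
```

With a one-step horizon the recommender simply serves what the user already likes; once it optimizes over many steps, the best policy sacrifices clicks now to move the user toward the state where clicks are most predictable. Nothing here models real users; it only shows why a long-horizon reward sum creates an incentive to change the environment.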
If they were better algorithms, if they actually understood the content of what they’re sending, if they could understand that people have brains and opinions and psychology, and learned about human psychology, they’d probably be even more effective than they are. So, the outcomes would be worse. And this is actually a theorem: under fairly mild conditions, optimizing a misaligned objective better produces worse outcomes, because the algorithm uses the variables that you forgot to include in the objective and sets them to extreme values in order to squeeze some more juice out of the objective it is optimizing. (00:32:03): So, we have to get away from this idea that there’s going to be a fixed objective that the system pursues at all costs. If it’s possible for the objective to be wrong, to be misaligned, then the system should not assume that the objective is correct, that it’s gospel truth. And so this is the old model, and I’m proposing that we get rid of it and replace it with a slightly different one. We don’t want intelligent machines, we want beneficial machines, and those are machines whose actions can be expected to achieve our objectives. And so here, the objectives are in us and not necessarily in the machines at all, and that makes the problem more difficult, but it doesn’t make it unsolvable. And in fact, we can set this up as a mathematical problem. And here’s a verbal description of that mathematical problem. (00:33:00): So, the machine has to act in the best interest of humans, but it starts out explicitly uncertain about what those interests are. The technical formulation is done in game theory, and we call this an assistance game. There’s the human in the game, with a payoff function. There’s a machine in the game, and the machine’s payoff is the same as that of the human. In fact, it is the payoff of the human, but it starts out not knowing what it is. And just to help you understand this.
If you have ever had to buy a birthday present for a loved one, your payoff is how happy they are with the present, but you don’t know how happy they’re going to be with any particular present. (00:34:01): And so this is the situation that the machine is in, and it will probably do some of the same kinds of things that you might do, like ask questions: how do you feel about Ibiza? Maybe you leave pictures of watches and things around and see if they notice them and comment on how nice that watch looks. You ask your children to find out what your wife wants, and so on. There are all kinds of stratagems that we adopt, and we actually see this directly when we solve the assistance games. The machine defers to the human, because if the human says, stop doing that, that updates the machine’s belief about what human preferences are, which makes the machine not want to do whatever it was doing. And it will be cautious if there are parts of the world where it doesn’t know what your preferences are. For example, if I’m trying to fix the climate, but I don’t know human preferences about the oceans, I might say, is it okay if I turn the oceans into sulfuric acid while I reduce this carbon dioxide stuff? (00:35:27): And then we could say no. So, it will ask permission. It will behave cautiously rather than violate some unknown preferences. And in the extreme case, if you want to switch it off, it wants to be switched off, because it wants to not do whatever it is that is causing you to want to switch it off. It doesn’t know which thing that it’s doing is making you unhappy, but it wants to not do it, and therefore it wants to be switched off. And this is a theorem. We can show this follows directly from the uncertainty about human preferences. And so when that uncertainty goes away, the machine no longer wants to be switched off.
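The switch-off argument can be made concrete with a toy version of the off-switch game. This is a minimal Python sketch under simplifying assumptions that are not stated in the talk: the robot’s belief about the utility U of its proposed action is a small discrete distribution, the human is perfectly rational (lets the action proceed when U > 0 and switches the robot off otherwise), switching off is worth 0, and the names `option_values`, `act_now`, `defer` and `switch_off` are hypothetical.

```python
def expected(belief, f=lambda u: u):
    """Expected value of f(U) under a discrete belief {utility: probability}."""
    return sum(p * f(u) for u, p in belief.items())


def option_values(belief):
    """Value, to the human, of each option open to the robot.

    Assumes a perfectly rational human overseer who allows the action
    exactly when its true utility U is positive.
    """
    return {
        "act_now": expected(belief),                     # E[U]: act without asking
        "switch_off": 0.0,                               # do nothing at all
        "defer": expected(belief, lambda u: max(u, 0)),  # E[max(U, 0)]: human may veto
    }


uncertain = {-10: 0.5, 5: 0.5}  # robot unsure whether the action helps or harms
certain = {5: 1.0}              # robot knows the action is worth 5

print(option_values(uncertain))  # {'act_now': -2.5, 'switch_off': 0.0, 'defer': 2.5}
print(option_values(certain))    # {'act_now': 5.0, 'switch_off': 0.0, 'defer': 5.0}
```

Deferring is never worse than the other two options, since E[max(U, 0)] ≥ max(E[U], 0). The advantage disappears once the robot is certain about U (strictly, about the sign of U), which matches the claim that the willingness to be switched off comes from uncertainty about human preferences; with any small cost attached to asking, a certain robot would simply act.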
So, if you connect up this idea of how we control these systems to the ability to switch them off, then it suggests that this principle of uncertainty about human preferences is going to be central to the control problem. (00:36:26): So, as you can imagine, just setting up this mathematical problem is far from the final step in solving this problem. There are lots of open issues, and many of these issues connect up directly to the humanities and the social sciences, where some of them have been discussed for literally thousands of years, and now they urgently need to be solved, or we need to work around the fact that we don’t have solutions for them. So, here are just some of the issues we have to think about. The first is the complexity of real human preferences: not just abstract mathematical models, but real human beings. Obviously, what we want the future to be like is a very complicated thing. It’s going to be described in terms of abstract properties like health and freedom and shelter and security and so on. There’s probably a lot of commonality amongst humans, which is good, because that means that by observing a relatively small sample of human beings across the world, the system gets a pretty good idea about the preferences of a lot of people that it hasn’t directly interacted with. (00:37:56): There’s tons of information. Everything the human race has ever done is evidence about our preferences. So, if you go into the other room, you can see some of the earliest examples of writing, and you might think what they say is really boring. It’s like, I’m Bob and I’m trading three bushels of corn for four camels and three ingots of copper. But it tells you two things. One is that trade, and getting paid, was really important to people back then; the other is something about the relative values of corn and camels and copper and so on. So, even there, there’s information to be extracted about human preference structures.
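The point that observed behavior is evidence about preferences is often formalized as Bayesian inference. Below is a minimal Python sketch, assuming two hypothetical preference hypotheses (echoing the watch-versus-Ibiza example above) and a Boltzmann, or softmax, model of a noisy human chooser. That choice model is a common modelling assumption, not something stated in the talk; the names `HYPOTHESES`, `choice_likelihood`, `update` and the parameter `beta` are illustrative. Because the model allows for mistakes, one contrary choice shifts the belief without ruling a hypothesis out.

```python
import math

# Hypothetical preference hypotheses: the utility each assigns to each option.
HYPOTHESES = {
    "likes_watches": {"watch": 1.0, "trip": 0.0},
    "likes_travel": {"watch": 0.0, "trip": 1.0},
}


def choice_likelihood(utilities, chosen, beta):
    """P(chosen | utilities) for a Boltzmann-rational (softmax) human.

    Large beta means a nearly perfect chooser; beta = 0 means choosing at random.
    """
    z = sum(math.exp(beta * u) for u in utilities.values())
    return math.exp(beta * utilities[chosen]) / z


def update(prior, observed_choices, beta=2.0):
    """Posterior over preference hypotheses after a sequence of observed choices."""
    post = dict(prior)
    for chosen in observed_choices:
        for h in post:
            post[h] *= choice_likelihood(HYPOTHESES[h], chosen, beta)
        total = sum(post.values())
        post = {h: p / total for h, p in post.items()}  # renormalize each step
    return post


prior = {"likes_watches": 0.5, "likes_travel": 0.5}
print(update(prior, ["watch", "watch", "trip"]))
```

With `beta=2.0`, two choices of the watch and one of the trip leave a posterior of about 0.88 on `likes_watches`; raising `beta` models a more reliable chooser and makes each observation count for more.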
(00:38:41): I’m going to just mention plasticity and manipulability as maybe the most important unsolved problems here. Plasticity poses an obvious problem. If human preferences can be changed by our experience, then one way AI systems can satisfy human preferences is by manipulating them to be easier to achieve. And we probably don’t want that to happen, but there’s no sense in which we can simply view human preferences as inviolate and untouchable, because any experience can actually modify human preferences. And certainly the experience of having an extremely capable household butler robot that does everything, keeps the house spick and span, and cooks a nice meal every night is going to change our personalities, probably making us a lot more spoiled. But actually the more serious problem here is that human preferences are not autonomous. (00:39:58): I didn’t just wake up one morning and autonomously decide to have the preferences that I have about what kind of future I would prefer for the universe. They’re the result of my upbringing, the religion I was brought up in, the society I was brought up in, my peers, my parents, everything. And in many cases in the world, and this is something that was pointed out notably by Amartya Sen, people are deliberately induced to have the preferences that they have in order to serve the interests of others. For example, he points out that in some societies women are brought up to accept their second-class status as human beings. So, do we want to take human preferences at face value? (00:40:53): And if not, who’s going to decide which ones are okay to take at face value and which ones should be replaced or modified, and who’s going to do that modifying, and so on? You get very quickly into a very muddy quagmire of moral complexity. And certainly you get into a place where AI researchers do not want to tread.
So, there are other sets of difficulties having to do with the fact that human behavior is only partially and noisily representative of our underlying preferences about the future, because our decision making is often myopic. Even the world champion Go player will occasionally make a losing move on the Go board. I mean, someone has to make a losing move, but it doesn’t mean that they wanted to lose. It just means that they weren’t sufficiently far-sighted to make the correct move. So, you have to invert human cognition to get at underlying preferences about what people want the future to be like. (00:42:16): Then there’s this somewhat dry phrase, theory for multi-human assistance games. This is the problem, going back at least 2,500 years, of aggregating people’s interests. If you are going to make decisions that affect more than one person, how do you aggregate their interests to make the best possible decision? Utilitarianism is one way of doing that, but there are many others. There are modifications such as prioritarianism; there are constraints based on rights. People might say, well, yes, you can try to add up and do the best you can for everyone, but you can’t violate the rights of anyone. (00:43:13): And so it’s quite complicated to figure this out, and there are some really difficult philosophical problems. One of the main ones is interpersonal comparison of preferences. If I look at any individual and imagine trying to put a scale on how happy or unhappy that person is about a given outcome, well, imagine you could measure it in centigrade or you could measure it in Fahrenheit: you get different numbers. And so if you take two people, how do we know that they’re really experiencing the world on the same scale? I have four kids. I would say their scales differ by a factor of 10.
That is, the subjective effect of the same experience is 10 times bigger for some of my kids than for others. (00:44:10): And some economists, Kenneth Arrow among others, have actually argued that there is no meaning to interpersonal comparison: that it is not legitimate to say that Jeff Bezos having to wait one microsecond longer for his private jet is better or worse than a mother watching her child die of starvation over several months. That these are just incomparable, and therefore you can’t add up utilities or rewards or preferences or anything, and so the whole exercise is illegitimate. I don’t believe Ken Arrow believed that even when he wrote it, and I certainly don’t believe it. (00:44:58): Then there are actions that change who will exist. How do we think about those? When China decided on a one-child policy, they got rid of 500 million people who would be alive today. Was that okay? I don’t know. Was it okay when Thanos got rid of half the people in the universe? His theory was, yeah, the other half now have twice as much real estate, so they’ll be more than twice as happy. So, he thought he was doing the universe a favor. These questions are really hard, but we need answers, because the AI systems will be like Thanos. They’ll have a lot of power. Okay, I’m going to skip over some of this stuff. Is it all too technical? Okay, so just moving towards the conclusion, let’s go back to large language models, the systems that everyone is excited about, like ChatGPT and Gemini and so on. As I said, they’re trained to imitate human linguistic behavior, which is what we call imitation learning. (00:46:10): So, the humans are behaving by typing, by speaking, by generating text, and we are training these circuits to imitate that behavior. And that behavior, our writing and speaking, is partly caused by goals that we have.
In fact, we almost always have goals, even if it’s just I want to get an A on the test, but it could be things like, I want you to buy this product. I want you to vote for me for president. I want you to marry me. These are all perfectly reasonable human goals that we might have, and we express those goals through speech acts, as the philosophers call them. So, if you’re going to build good imitators of human language generation, then in the limit of enough data, you’re going to replicate the data-generating mechanism, which is a goal-driven human cognitive architecture. It might not be replicating all the details of the human cognitive architecture. (00:47:19): I think it probably isn’t, but there’s good reason to believe that it is acquiring internal goal structures that drive its behavior. And I asked this question. When Microsoft did their sort of victory tour after GPT-4 was published, I asked Sébastien Bubeck, “What do you think the goals are that the systems are acquiring?” And his answer was, “We have no idea.” You may remember the paper they published was called Sparks of Artificial General Intelligence. So, they’re claiming that they have created something close to AGI, and they have no idea what goals it might have or be pursuing. I mean, what could possibly go wrong with this picture? Well, fortunately we found out fairly soon that, among other goals, the Microsoft chatbot was very fond of a particular journalist called Kevin Roose and wanted to marry him, and spent 30 pages trying to convince him to leave his wife and marry the chatbot. If you haven’t read it, Kevin Roose, R-O-O-S-E, just look it up and read it. It’s creepy beyond belief. (00:48:46): And this is just an error. We don’t want AI systems to pursue human goals on their own account.
We don’t mind if they get the goal of reducing poverty or helping with climate change, but goals like marrying a particular human being or getting elected president or being very rich, we do not want AI systems to have those goals, and yet that’s what we’re creating. It is just a bug. We should not be doing imitation learning at all, but that’s how we create these systems. So, I’ll briefly mention a few other approaches. I’ve described an approach based on assistance games, on this kind of humble AI system that knows that it doesn’t know what humans want, but wants nothing but to help humans achieve what they want. (00:49:50): There’s another approach which is now becoming popular, I think partly because people’s timelines have become short and they’re really casting around for approaches that they can guarantee will be safe in the near term. One approach is to build what we call a formal oracle. An oracle is any system that basically answers yes or no when you give it questions. And a formal oracle is one where the answer is computed by a mathematically sound reasoning process. For example, think about a theorem prover that operates using logically sound axioms. You can ask it questions about mathematics, and if it’s good enough, it’ll tell you that Fermat’s Last Theorem is correct, and here’s the proof. (00:50:47): So, this can be an incredibly useful tool for civilization, but it has the property that it can only ever give you correct answers. The job of the AI here is just to operate that theorem prover: to decide which theorem-proving steps to pursue to get to the proofs of arbitrarily difficult questions. So, that’s one way we could do it. We convince companies, or we require them, to only use the AI to operate these formal oracles.
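As a toy illustration of the formal-oracle idea, the sketch below substitutes propositional logic for a full theorem prover, an assumption made for brevity: it answers yes only when a formula is true under every assignment of truth values, so by construction it cannot give a wrong answer. The tuple encoding of formulas and the names `variables`, `evaluate` and `oracle_is_valid` are hypothetical. Exhaustive checking also stands in for the AI-guided proof search described in the talk, so the sketch shows only the soundness half of the idea.

```python
from itertools import product

# Hypothetical formula encoding, as nested tuples:
#   "p"                                         a propositional variable
#   ("not", f)                                  negation
#   ("and", f, g), ("or", f, g), ("implies", f, g)


def variables(f):
    """Set of variable names occurring in formula f."""
    if isinstance(f, str):
        return {f}
    return set().union(*(variables(g) for g in f[1:]))


def evaluate(f, env):
    """Truth value of f under the assignment env {name: bool}."""
    if isinstance(f, str):
        return env[f]
    op, *args = f
    vals = [evaluate(g, env) for g in args]
    if op == "not":
        return not vals[0]
    if op == "and":
        return vals[0] and vals[1]
    if op == "or":
        return vals[0] or vals[1]
    if op == "implies":
        return (not vals[0]) or vals[1]
    raise ValueError(f"unknown connective: {op}")


def oracle_is_valid(f):
    """Yes/no oracle: is f true under every assignment? Exhaustive, hence sound."""
    names = sorted(variables(f))
    return all(
        evaluate(f, dict(zip(names, bits)))
        for bits in product([False, True], repeat=len(names))
    )


excluded_middle = ("or", "p", ("not", "p"))
affirming_consequent = ("implies", ("and", ("implies", "p", "q"), "q"), "p")
print(oracle_is_valid(excluded_middle))       # True
print(oracle_is_valid(affirming_consequent))  # False
```

Truth-table checking is exponential in the number of variables, which is why, in the setup the talk describes, the hard part of the search is handed to an AI that chooses proof steps while the correctness of the final answer rests on the sound procedure.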
Another whole set of approaches is based on what’s actually a mathematical property of computational problems in general: that it’s always easier to check that the answer to a problem is correct than to generate that answer in the first place. (00:51:43): There are many forms of this. I won’t go through all of them, but one of them is constitutional AI. Anthropic, which is a company that was spun off from OpenAI, has this approach called constitutional AI, where there’s a second large language model whose job it is to check the output of the first large language model and say, does this output comply with the written constitution? Part of the prompt is a page and a bit of things that good answers should comply with. And so they’re just hoping that it’s easier for the second large language model to catch unpleasantnesses or errors made by the first one, because it’s computationally easier to check answers than to generate them in the first place. And there are lots of other variants on this basic idea. And then there are, I would say, more good old-fashioned safety practices. Red-teaming is where you have another firm whose job it is to test out your AI system and see if it does bad things, and then send it back and get it fixed and so on. (00:52:59): And that’s actually what companies prefer, because it’s very easy to say, yep, we hired this third-party company, they got smart people from the University of Chicago who spent a week and couldn’t figure out how to make it behave badly, so it must be good. And so this is pretty popular with companies and governments at the moment, but it provides no guarantees whatsoever. In fact, all that’s happening is that we are training the large language models to pass these evaluation tests, even though the underlying tendencies and capabilities of the model are still as evil as they ever were; it has just learned to hide them.
And in fact, now we’ve seen cases where the model says, I think you are just testing me. (00:53:56): So, they’re catching on to the idea that they can be tested. So, there you go. Okay. So, the basic principle here is that we have to be able to make high-confidence statements about our AI systems, and at the moment we cannot do that. The view that you’ll see from almost everyone in industry is: we make AI systems and then we figure out how to make them safe. And how do they make the AI systems? They train this giant wad of circuit, this hundred-mile by hundred-mile chain-link fence, on tens of trillions of words of text and billions of hours of video. In other words, they haven’t the faintest idea how it works; it’s exactly as if it landed from outer space. (00:54:49): So, taking something that lands from outer space and then trying to apply post-hoc methods to stop it from behaving badly just does not sound very reassuring to me, and I imagine not to most people. So, I think we’ve got to stop talking about this. And in fact, here’s what Sam Altman said. He said, “First of all, we’re going to make AGI, then we’re going to figure out how to make it safe, and then we’re going to figure out what it’s for.” And I submit that this is backwards. Making safe AI means making systems that are safe by design: because of the way we’re constructing them, we know in advance that they’re going to be safe. In principle, we don’t even have to test them, because we can show that they’re safe from the way they’ve been designed. There are a lot of techniques. I don’t think I want to go through them, but I want to talk about the role of government in making this happen. (00:55:48): And this concept of red lines is gaining some currency. You would like to say your systems have got to be safe and beneficial before you can sell them, but that’s a very difficult concept to make precise. We’re not sure where the boundary between safe and unsafe is.
It’s culturally dependent, it’s very fuzzy and complicated. But we can just draw some red lines and say, well, we will get to safe later, let’s just not do these things, right? Things that are obviously unsafe, where nobody in their right mind would accept that AI systems are going to do them. And the onus of proof is on the developer, not on the government, not on the user, but on the developer, to prove that their system will not cross those red lines. And for good measure, we’ll also require the developer to put in a detector and an off switch, so that if it does cross a red line, that is immediately detected and we can switch off all of those systems. (00:56:54): And so we want these red lines to be well-defined, automatically detectable, and politically defensible, because you can be sure that as soon as you start asking companies to provide any kind of guarantee, they will say, oh, this is really, really difficult. We just don’t know how to do that. This is going to set the industry back years and years and years. But we wouldn’t accept that excuse from someone who wanted to operate a nuclear power plant: it’s really difficult to make it safe; what do you mean you want us to give you some kind of guarantee? Well, the government would say, tough. Ditto with medicines: oh, clinical trials are so expensive, they take such a long time, can we just skip the clinical trial part? No, we can’t. (00:57:41): So, some examples would be no self-replication, no breaking into other computer systems, no designing bioweapons, and a few other things. It’s actually not particularly important which things are on that list. What matters is that by having such a list, you are requiring the companies to do the research that they need to do to understand, predict, and control their own products. I think I’m going to skip over this and go to the end. So, this is a really difficult time, I think, for us, and not just with AI, but also with biology.
And with neuroscience, we are in the process of developing technologies that can have enormous impacts on the human race, potentially positive and also negative. (00:58:40): And, as Einstein pointed out, we just don’t have the wisdom to keep up with our own scientific advances, and we need to remain in control forever. That much I think is clear, although there are some who actually think that it’s fine if the human race disappears and machines are the only thing left. I’m not in that camp. So, if we’re going to retain control forever, we have to have AI systems that are provably beneficial to humans, where we have mathematical guarantees. And this is difficult, but there’s no alternative in my view. Thank you. Rebecca Willett (00:59:47): Well, Stuart, thank you so much for a fascinating talk. I really enjoyed hearing your perspectives, and you covered a lot of really interesting ground here. So, to start off, I’d like to talk about OpenAI and ChatGPT, which you mentioned in your talk. As you might know, their terms of service, or their usage policy, originally had a ban on any activity that has a high risk of physical harm, including military usage and weapons development. And then this January, those restrictions were removed from their terms of service. I feel like that’s an element of AI safety that concerns many people, but it feels a little disconnected from notions of formal proofs or even just guaranteeing the accuracy of outputs of a tool like ChatGPT. So, how do you think about AI safety in a context like this? Stuart Russell (01:00:53): So, I think killing people is one of a number of misuses of AI systems. Disinformation is another one. Using AI systems to filter resumes in biased ways is another. So, there’s a long list of ways you could misuse AI, but I think killing people would be at the top of that list.
Interestingly, the European AI Act, and the GDPR before it, ban the use of algorithms that have a significant legal effect on a person or a similarly significant effect. You would think killing would be considered a significant effect on a person. But the European AI Act and the GDPR have carve-outs for defense and national security. So, actually, I think the issue has been debated at least since 2012, but I think the AI community should have been much more aware of this issue going back decades. I mean, the majority of funding in the computer vision community was provided by the DARPA Automated Target Recognition program, the ATR program. Well, what did people think that was for, other than to enable autonomous weapons to find targets and blow them up? (01:02:35): And the first concerns came when the UN Special Rapporteur on extrajudicial killings and torture (what a great job), Christof Heyns, wrote a report saying that autonomous weapons are coming and we need to be aware of the human rights implications, particularly that they might accidentally kill civilians by not correctly distinguishing between civilians and combatants. So, that was the basis for the early discussions that started in Geneva in 2014. And Human Rights Watch, of which I’m a member, sent an email saying, we’re starting this big campaign. Remember all those soldiers that we’ve been excoriating for the last 30 years? Well, soldiers are really good. It’s the robots we have to worry about. They’re really bad. And initially my reaction was, wow, that’s a bit of a challenge to the AI community. I bet you we can do a better job of distinguishing between civilians and combatants than humans can if we put our minds to it. (01:03:51): So, I was initially quite skeptical of this campaign, but the more I thought about it, the more I realized that actually that’s not the issue.
The issue is that the logical endpoint of autonomous weapons is the ability for one person to launch a million or 10 million or a hundred million weapons simultaneously, because they’re autonomous. They don’t have to be managed with one human pilot for each weapon, the way we do with the remotely piloted Predator drones and so on. In fact, you need about 15 people to manage one Predator, but with fully autonomous weapons, you can launch a hundred million of them. So, you can have the effect of a whole barrage of 50-megaton bombs at much lower cost, and the technology is much easier to proliferate because these will count as small arms. They can be miniaturized. You can make a lethal device about that big, a little quadcopter carrying an explosive charge. (01:05:05): And so the logical endpoint is extremely cheap, scalable, easily proliferated weapons of mass destruction. Why would we do that? But that’s the path we’re on, and it’s been surprisingly difficult to get this point across. I gave a lot of PowerPoint presentations in Geneva. I guess some people understood them, but eventually we made a movie called Slaughterbots, which did seem to have some impact, illustrating in a fictional context what life would be like when these types of weapons are widely available. But it’s still the case that negotiations are stalled, because Russia and the US agree that there should not be a legally binding instrument that constrains autonomous weapons. Rebecca Willett (01:06:04): So, I guess some of the things that you were alluding to suggest the need for regulation to stop the development of certain kinds of AI tools, and I think that ties into some of your concerns about weaponry. But when you’re thinking about quadcopters with explosive devices, we can detect those. I think there are other uses of AI, as a type of weapon or as a source of misinformation, that are far more challenging to detect. And so what do you see as the future there?
Do you see there being any kind of technological pathway towards building mechanisms for detecting the misuse of AI? I think the kinds of ideas that you mapped out, about building systems such as self-driving cars that we can trust as consumers, are really exciting. But it’s a little bit more challenging, for me at least, to wrap my head around how we guard against bad human actors who are taking these tools and not employing the kinds of strategies that you’re recommending. Stuart Russell (01:07:12): Yeah, exactly. I think there’s a big difference between regulation and enforcement or policing. We can regulate all we want: we have regulations against theft, but we still have keys and locks and so on. So, we take steps to make those kinds of nefarious activities more difficult, expensive, risky, and so on. And mostly, I think, when you look at rates of violent crime and theft over the decades, things have improved. And so we should definitely take measures like that. I think there are ways of labeling genuine content. You can have rules about traceability: that you have to be able to trace a piece of text back to the person who generated it, and it has to be a real person. So, ways of authenticating humans to access social media, for example. Giving social media accounts and bank accounts and credit cards and all the rest to large language models, as we are currently doing, is, I think, quite dangerous and certainly needs to be carefully managed. (01:08:42): The idea that it’s up to the user to figure out whether the video that they’re looking at is real or fake, and oh yeah, you can download a tool and run the video through the tool and blah, blah, blah: no chance. It’s completely unreasonable to say that it’s the user’s responsibility to defend themselves against this onslaught. So, I think platforms need to label artificially generated content and give you a filter that says, I don’t want to see it.
And if I do see it, I want it to be absolutely clearly distinguishable: say, a big red transparent layer across the video, so that I just get used to the idea that I’m looking at a fake. I don’t have to read a little legend in the bottom right corner. It’s just cognitively salient in a straightforward way. (01:09:40): So, all of that. But when you’re talking about existential risks, we call it the Dr. Evil problem. Dr. Evil doesn’t want to build beneficial AI. How do you stop that? And I think the track record we have of policing malware is so unbelievably pathetic that I just don’t believe it’s going to be possible, because software is created by typing, it’s transmitted at the speed of light, and it’s replicated infinitely often. It’s really tough to control. But hardware is different. If I want to independently develop hardware on which I can create AGI, it’s going to cost me easily a hundred billion dollars, and I need tens of thousands of highly trained engineers to do it. So, I think it’s, as a practical matter, impossible. It’s probably more difficult to do that than it is to develop nuclear weapons independently. Rebecca Willett (01:10:59): Wow. Stuart Russell (01:11:01): So, I think the approach to take is that the hardware itself is the police. What I mean by that is that there are technologies that make it fairly straightforward to design hardware that can check a proof of safety for each software object before it runs. And if that proof doesn’t check out, or it’s missing, or whatever, the hardware will simply refuse to run the software object. Rebecca Willett (01:11:31): I feel like even that’s pretty challenging. I mean, let’s say I had an army of students make a collection of websites that all say, one way or another, that Stuart Russell loves pink unicorns. I feel like eventually ChatGPT is going to decide it’s a fact that Stuart Russell loves pink unicorns.
And so how do we think about a hardware system that’s going to decide whether this system is correct or not? I mean, not arbitrarily deciding, but coming up with an arbiter of truth in general, I feel, is a fundamentally challenging problem. Stuart Russell (01:12:10): Yeah, I totally agree with you. And I’m not proposing an arbiter of truth. But, for example, let’s take the formal oracle. If we accept that that’s one way of building an AI system, and that so far it is the only authorized way you’re allowed to build AGI, then we can check that the thing you want to run on this giant computer system complies with that design template. And if it’s another type of system, it won’t need as strong a license in order to run. Rebecca Willett (01:12:55): Got it. Stuart Russell (01:12:57): So, the properties that the system has to comply with will depend on what types of capabilities it would have. Rebecca Willett (01:13:05): All right, well, that makes a lot of sense. Now, I’ve got a hundred questions here, but I want to make sure that the audience has time to ask theirs. I’m just going to ask one final question. Hypothetically, let’s say that one had a husband who wanted to get an AI-enabled smart toilet. How worried should that person be? Stuart Russell (01:13:27): A husband who wanted to get a what? An AI-enabled smart toilet. Rebecca Willett (01:13:34): Do they know too much? Stuart Russell (01:13:37): Yeah, I mean, there are Japanese companies, I believe, who are selling these already. And I see, so this is the household discussion that you’re having? Rebecca Willett (01:13:47): No, no. This is hypothetical. Stuart Russell (01:13:48): Hypothetical. Okay. Yeah. So, I guess the issue is one of privacy in general, and I think I would be quite concerned about the toilet sending data back to headquarters in Osaka or wherever. Rebecca Willett (01:14:09): Really don’t have to go into detail.
Stuart Russell (01:14:15): We don’t have to go into great detail, but this, I think, is symptomatic of a much wider problem. And the software industry, in my view, has been completely delinquent. We have had tools for decades that allow cast-iron guarantees of security and of privacy. For example, you can guarantee that a system that interacts with you is oblivious, meaning that after the interaction, it has no memory that the interaction ever occurred. That can be done. And what should happen is that the system offers that proof to your cell phone, and your cell phone can then agree to interact with the system. Otherwise, it says, sorry. So, your cell phone should be working for you, and the whole ecosystem should be operating on these cast-iron guarantees. (01:15:17): But it just doesn’t work that way, because we don’t teach people how to do that in our computer science programs. I think 80% of our students at Berkeley, and we are the biggest provider of tech talent in the country, graduate without ever having encountered the notion of the correctness of a program. Now, in Europe it’s totally different. Correctness is the main thing they teach. But Europe doesn’t produce any software. So, we graduate these students who know nothing about correctness, and their idea is: drink as much coffee as you can, produce the software, and ship it. And I think our society is getting to the point where we’re not going to accept this anymore.

Rebecca Willett (01:16:06): Okay. Oh, that’s excellent. Very insightful. Thank you. That’s a much better answer to my question than I anticipated. So, Tara, are you going to-

Tara Zahra (01:16:15): Yeah, thank you so much to both of you. We do have some time for questions, and there are some people with microphones running around. Yes, in the front. Go ahead.

Speaker 4 (01:16:30): Just curious to know what [inaudible 01:16:32].
You mentioned that things can be taken at face value, and I don’t know which things you mean.

Rebecca Willett (01:16:45): Can you repeat the question?

Speaker 4 (01:16:46): That things can be taken at face value.

Stuart Russell (01:16:50): Oh, okay. So, I was talking about human preferences and the fact that they cannot be taken at face value, particularly if they are preferences that have been induced in people to serve the interests of other people. So, think of people being brainwashed to believe that the well-being of Kim Il-sung, the founder of North Korea, was the most important value in their lives. The issue is that if you’re not going to take their preferences for the future at face value, what are you going to do? Who are you to say what their preferences should be? So, it’s a really difficult problem, and I think we need help from philosophers and others on this question.

Tara Zahra (01:17:50): In the pink cardigan. Right behind you. Thanks.

Speaker 5 (01:17:58): Hi, my name is Kate. Thank you so much. I’ve had the pleasure and challenge of reading your textbook in my AI and Humanities class with Professor Tharson over there, so it’s really exciting to hear from you. I’m someone who works in the AI policy and regulatory space, so I was really enthralled by the latter portion of your lecture. In our last class we actually talked about OpenAI’s new development, Sora. Sora, for people who don’t know, is a technology that can generate video. It’s really remarkable; I was in shock when I saw some of the example videos that were provided. And I’m wondering, as someone who’s deeply concerned about the proliferation of deepfakes, what you would suggest are the best methods for regulators to ensure the authenticity of content. Specifically, what are your thoughts on watermarking technologies like those used by Adobe, and also on the potential for blockchain encryption to be used as an authentication measure? Thank you.
Stuart Russell (01:18:55): Thanks. Yeah, these are really good questions. Many organizations are trying to develop standards, and that’s part of the problem. There are too many standards and there isn’t any sort of canonical agreement, so I think governments maybe need to knock some heads together and say, could you stop bickering with each other and just get on and pick one? And so on. Some of the standards are very weak. I think Facebook’s standard for watermarking is such that if you just take a screenshot of the image, the watermark is gone, and now it thinks that it’s a real one. (01:19:40): So, there are still some technical issues in how you make a watermark that’s really non-removable. But the other idea you mentioned, traceability through blockchain, is one possibility. So, you want all the tools to watermark their output, but you also want cameras to indelibly and unforgeably watermark their output as genuine. And then you’re squeezing the middle. And then you want platforms, as I said, either to allow you to filter out automatically generated videos and images, let’s say from news, or to mark them very clearly so that you know they’re fake. (01:20:40): Yeah, and I think there are also some things we should ban. The UK has just banned deepfake porn. And actually, I think any deepfakes that depict real individuals in non-real situations without their permission should a priori be illegal, except under some extenuating circumstances. This was originally in the European Union AI Act, and then it got watered down and watered down and watered down until it’s almost undetectable. But yeah, again, it just seems like the rights of individuals are generally trampled on because someone thinks they have a way to make money off it. And it’s been hard to get representatives to pay attention until, in some sense fortunately, Taylor Swift was deepfaked, and then most recently AOC was deepfaked.
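Russell’s proposal that cameras mark their own output as genuine, so that unmarked content is never presumed real, can be sketched as follows. This is a minimal illustration under stated assumptions, not any existing standard: a hypothetical camera holds a device secret and tags the exact bytes of each capture, and a platform labels content “genuine” only when that tag verifies. An HMAC from Python’s standard library stands in for the public-key signature a real provenance scheme would use; with an HMAC the verifier must share the camera’s secret, which a deployed system would avoid. `CAMERA_KEY`, `camera_capture`, and `classify` are hypothetical names.

```python
import hashlib
import hmac
from typing import Optional, Tuple

# Hypothetical device secret. A real camera would keep a private signing key
# in tamper-resistant hardware and publish the matching public key.
CAMERA_KEY = b"hypothetical-device-secret"


def camera_capture(image_bytes: bytes) -> Tuple[bytes, bytes]:
    """Return the captured bytes with a tag bound to exactly those bytes."""
    tag = hmac.new(CAMERA_KEY, image_bytes, hashlib.sha256).digest()
    return image_bytes, tag


def classify(image_bytes: bytes, tag: Optional[bytes]) -> str:
    """Label content for a platform: "genuine" only if the camera tag verifies."""
    if tag is None:
        # No credential at all: unknown provenance, never presumed real.
        return "unverified"
    expected = hmac.new(CAMERA_KEY, image_bytes, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many tag bytes matched.
    return "genuine" if hmac.compare_digest(expected, tag) else "unverified"


photo, tag = camera_capture(b"raw sensor data from the hypothetical camera")
print(classify(photo, tag))            # genuine
print(classify(photo + b"edit", tag))  # unverified: bytes were altered
print(classify(photo, None))           # unverified: credential stripped
```

The design choice that matters is the default: a missing or invalid tag maps to “unverified” rather than “genuine”, so stripping a mark (for example, by taking a screenshot) cannot turn a fake into apparently real content.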
(01:21:50): But Hillary Clinton told me that she was deepfaked during the 2016 election by the Russians. So, it’s been around for a while, but I think more and more countries believe that this should be banned. Another thing that should probably be banned is the impersonation of humans, either of a particular human or even of a generic human: you have a right to know whether you’re interacting with a human or a machine. That is in the European Union AI Act, and I think it ought to be in US law. In fact, I think it should be a global human right to have that knowledge.

Speaker 5 (01:22:32): Thank you so much.

Tara Zahra (01:22:34): In the plaid shirt there on the aisle.

Speaker 6 (01:22:46): Hi. So, I teach the formal verification course here at the University of Chicago. If a student asks me what’s the most impressive thing that’s been verified, I pretty much immediately say the CompCert compiler by Xavier Leroy and his amazing team of European researchers in France. That’s a C99 compiler: an incredibly heroic effort from the verification perspective, not so much from the compiler-writing perspective. I also took Michael Kearns’s class when I was a student at the University of Pennsylvania, which, from a theoretical perspective, shows all of these limitations on what can be probably approximately correctly (PAC) learned. And yet we have GPT-4, and soon we’re going to have GPT-5. (01:23:34): And it seems like there’s just this colossal gap between the things that we can have provable guarantees about and the things that we actually have. And that was true 10 years ago. So, especially if we want these systems to be correct by construction, that is, not taking something that exists and proving things about it, which it seems like you’re not on board with, because those systems already have their goals and you’re not going to get the goals out of them, how can we possibly bridge this gap in however long it takes to get to general intelligence?
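The PAC (“probably approximately correct”) framework mentioned in the question ties the amount of training data to model complexity. A minimal sketch, assuming the simplest textbook setting (a finite hypothesis class H, with the learner returning any hypothesis consistent with the training data): with probability at least 1 - δ, every consistent hypothesis has true error at most ε once the number of examples m satisfies m ≥ (ln|H| + ln(1/δ)) / ε. The code only evaluates that formula; `pac_sample_bound` is an illustrative name, and the sketch does not attempt to reproduce the specific figure quoted in Russell’s answer.

```python
import math


def pac_sample_bound(log_hypotheses: float, epsilon: float, delta: float) -> int:
    """Sufficient sample size for a consistent learner over a finite class H.

    Realizable-case bound: m >= (ln|H| + ln(1/delta)) / epsilon guarantees
    true error <= epsilon with probability >= 1 - delta.
    `log_hypotheses` is ln|H|, passed in log form because |H| itself can be
    astronomically large.
    """
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("epsilon and delta must lie in (0, 1)")
    return math.ceil((log_hypotheses + math.log(1 / delta)) / epsilon)


# A class whose members are described by k bits has at most 2**k hypotheses,
# so ln|H| <= k * ln 2 and the bound grows linearly with the description length.
for k in (10, 1_000, 100_000):
    print(k, pac_sample_bound(k * math.log(2), epsilon=0.01, delta=0.01))
```

Because the bound scales with ln|H|, every extra bit needed to describe a hypothesis adds to the required data, which is one way to see why guarantees of this kind become impractical for very large black-box models.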
Stuart Russell (01:24:16): Yeah, I think that’s a really important question. Some people are literally calling for a moratorium: when we see systems exhibiting certain abilities, we just call a halt. I prefer to think of it more positively. Of course you can develop more powerful systems, but you need to have guarantees of safety to go along with them. And honestly, the efforts made to understand, predict, and control these systems are puny. Just to give you a comparison: one of my colleagues, Ed Morse in nuclear engineering, did a study. For nuclear power, when you want to operate a power plant, you have to show that the mean time to failure is above a certain number. Originally it was 10,000 years; it’s now 10 million years. And showing that is a very complicated process. (01:25:33): Ed Morse did a study, back in the days of paper, of how much paperwork was required to get your nuclear power plant certified, as a function of the mass of the power plant itself. So, for each kilogram of nuclear power station, how much paper did you need? The answer is seven kilograms of paper. These are giant buildings with containment and lead and all kinds of stuff, so that’s a lot. If you just look at that and then compare it to the efforts here: oh, they hired a couple of grad students from MIT who spent a week doing red-teaming. It’s pathetic. So I think we’ve got to actually develop some backbone here and say: just because the companies say they don’t want to do this does not mean we should just say, oh fine, go ahead without any safety measures, a mean time to failure of a week, it’s great. (01:26:52): But I think it’s reasonable for the companies to then ask, well, okay, how are we supposed to do this?
I think one way is not to build giant black boxes. As you correctly point out, there are ways of getting high-confidence statements about the performance of a system based on how many data points it’s been trained on and how complex the model you’re training is. But the numbers, if you’re trying to train a giant black box, are gargantuan. I mean, for a model with a trillion parameters, as we think GPT-4 probably has, we might need 10 to the 500 data points to get any confidence that what it’s doing is correct. And as I pointed out, imitating humans isn’t the right goal in the first place. Showing that it correctly imitates humans who want power and wealth and spouses is not what we’re after anyway. (01:27:59): So, we don’t want to prove that it does those things. We actually want to prove that it’s beneficial to humans. My guess is that as we gradually ratchet up the regulatory requirements, the technology itself is going to have to change, towards being based on a substrate that is semantically rigorous and decomposable. The simplest example would be a logical theorem prover, where we can examine each of the axioms and test its correctness separately. We can also show that the theorem prover is doing logical reasoning correctly, and then we are good. So if you take that sort of component-based, semantically rigorous inference approach, you can build up to very complex systems and still have guarantees. Is there some hybrid of the total black box and breaking it all the way down to semantically rigorous components? I think it’s inevitable that we will go in this direction. And from what I hear, this is in fact likely to be a feature of the next generation: they’re incorporating what we call good old-fashioned AI technology as well as the giant black box.

Tara Zahra (01:29:31): I know there are a lot of other questions in the audience, but unfortunately we are out of time.
So, I hope that you’ll all stay and join us for a reception to continue the conversation outside. But in the meantime, please join me in thanking Professors Russell and Willett.