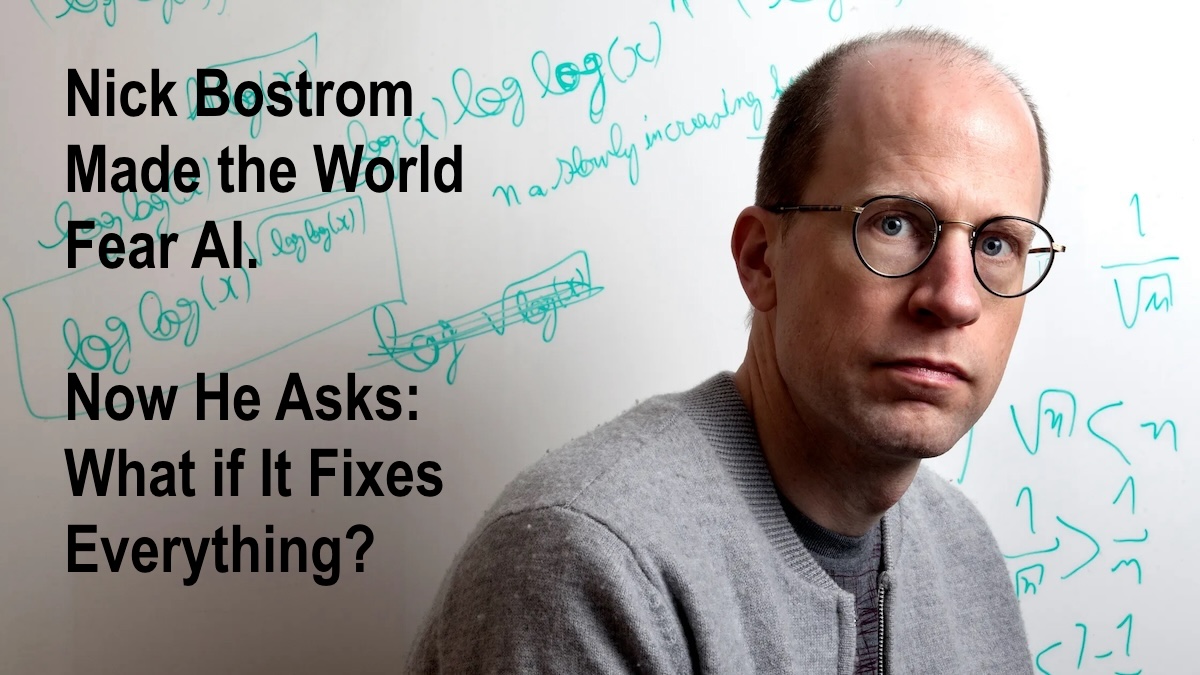

WIRED. Nick Bostrom Made the World Fear AI. Now He Asks: What if It Fixes Everything?

Philosopher Nick Bostrom popularized the idea that superintelligent AI could erase humanity. His new book imagines a world in which algorithms have solved every problem.

WILL KNIGHT, BUSINESS

MAY 2, 2024 12:00 PM

Philosopher Nick Bostrom is surprisingly cheerful for someone who has spent so much time worrying about ways that humanity might destroy itself. In photographs he often looks deadly serious, perhaps appropriately haunted by the existential dangers roaming around his brain. When we talk over Zoom, he looks relaxed and is smiling.

Bostrom has made it his life’s work to ponder far-off technological advancement and existential risks to humanity. With the publication of his last book, Superintelligence: Paths, Dangers, Strategies, in 2014, Bostrom drew public attention to what was then a fringe idea—that AI would advance to a point where it might turn against and delete humanity.

To many in and outside of AI research the idea seemed fanciful, but influential figures including Elon Musk cited Bostrom’s writing. The book set a strand of apocalyptic worry about AI smoldering that recently flared up following the arrival of ChatGPT. Concern about AI risk is not just mainstream but also a theme within government AI policy circles.

Bostrom’s new book takes a very different tack. Rather than play the doomy hits, Deep Utopia: Life and Meaning in a Solved World considers a future in which humanity has successfully developed superintelligent machines but averted disaster. All disease has been ended and humans can live indefinitely in infinite abundance. Bostrom’s book examines what meaning there would be in life inside a techno-utopia, and asks if it might be rather hollow. He spoke with WIRED over Zoom, in a conversation that has been lightly edited for length and clarity.

Will Knight: Why switch from writing about superintelligent AI threatening humanity to considering a future in which it’s used to do good?

Nick Bostrom: The various things that could go wrong with the development of AI are now receiving a lot more attention. It’s a big shift in the last 10 years. Now all the leading frontier AI labs have research groups trying to develop scalable alignment methods. And in the last couple of years also, we see political leaders starting to pay attention to AI.

There hasn’t yet been a commensurate increase in depth and sophistication in terms of thinking of where things go if we don’t fall into one of these pits. Thinking has been quite superficial on the topic.

When you wrote Superintelligence, few would have expected existential AI risks to become a mainstream debate so quickly. Will we need to worry about the problems in your new book sooner than people might think?

As we start to see automation roll out, assuming progress continues, then I think these conversations will start to happen and eventually deepen.

Social companion applications will become increasingly prominent. People will have all sorts of different views and it’s a great place to maybe have a little culture war. It could be great for people who couldn’t find fulfillment in ordinary life but what if there is a segment of the population that takes pleasure in being abusive to them?

In the political and information spheres we could see the use of AI in political campaigns, marketing, automated propaganda systems. But if we have a sufficient level of wisdom these things could really amplify our ability to sort of be constructive democratic citizens, with individual advice explaining what policy proposals mean for you. There will be a whole bunch of dynamics for society.

Would a future in which AI has solved many problems, like climate change, disease, and the need to work, really be so bad?

Ultimately, I’m optimistic about what the outcome could be if things go well. But that’s on the other side of a bunch of fairly deep reconsiderations of what human life could be and what has value. We could have this superintelligence and it could do everything: Then there are a lot of things that we no longer need to do and it undermines a lot of what we currently think is the sort of be all and end all of human existence. Maybe there will also be digital minds as well that are part of this future.

Coexisting with digital minds would itself be quite a big shift. Will we need to think carefully about how we treat these entities?

My view is that sentience, or the ability to suffer, would be a sufficient condition, but not a necessary condition, for an AI system to have moral status.

There might also be AI systems that even if they’re not conscious we still give various degrees of moral status. A sophisticated reasoner with a conception of self as existing through time, stable preferences, maybe life goals and aspirations that it wants to achieve, and maybe it can form reciprocal relationships with humans—if that were such a system I think that plausibly there would be ways of treating it that would be wrong.

What if we didn’t allow AI to become more willful and develop some sense of self? Might that not be safer?

There are very strong drivers for advancing AI at this point. The economic benefits are massive and will become increasingly evident. Then obviously there are scientific advances, new drugs, clean energy sources, et cetera. And on top of that, I think it will become an increasingly important factor in national security, where there will be military incentives to drive this technology forward.

I think it would be desirable that whoever is at the forefront of developing the next generation AI systems, particularly the truly transformative superintelligent systems, would have the ability to pause during key stages. That would be useful for safety.

I would be much more skeptical of proposals that seemed to create a risk of this turning into AI being permanently banned. It seems much less probable than the alternative, but more probable than it would have seemed two years ago. Ultimately it would be an immense tragedy if this was never developed, that we were just kind of confined to being apes in need and poverty and disease. Like, are we going to do this for a million years?

Turning back to existential AI risk for a moment, are you generally happy with efforts to deal with that?

Well, the conversation is kind of all over the place. There are also a bunch of more immediate issues that deserve attention—discrimination and privacy and intellectual property et cetera.

Companies interested in the longer term consequences of what they’re doing have been investing in AI safety and in trying to engage policymakers. I think that the bar will need to sort of be raised incrementally as we move forward.

In contrast to so-called AI doomers there are some who advocate worrying less and accelerating more. What do you make of that movement?

People sort of divide themselves up into different tribes that can then fight pitched battles. To me it seems clear that it’s just very complex and hard to figure out what actually makes things better or worse in particular dimensions.

I’ve spent three decades thinking quite hard about these things and I have a few views about specific things but the overall message is that I still feel very in the dark. Maybe these other people have found some shortcuts to bright insights.

Perhaps they’re also reacting to what they see as knee-jerk negativity about technology?

That’s also true. If something goes too far in another direction it naturally creates this. My hope is that although there are a lot of maybe individually irrational people taking strong and confident stances in opposite directions, somehow it balances out into some global sanity.

I think there’s like a big frustration building up. Maybe as a corrective they have a point, but I think ultimately there needs to be a kind of synthesis.

Since 2005 you have worked at Oxford University’s Future of Humanity Institute, which you founded. Last month it announced it was closing down after friction with the university’s bureaucracy. What happened?

It’s been several years in the making, a kind of struggle with the local bureaucracy. A hiring freeze, a fundraising freeze, just a bunch of impositions, and it became impossible to operate the institute as a dynamic, interdisciplinary research institute. We were always a little bit of a misfit in the philosophy faculty, to be honest.

What’s next for you?

I feel an immense sense of emancipation, having had my fill for a period of time perhaps of dealing with faculties. I want to spend some time I think just kind of looking around and thinking about things without a very well-defined agenda. The idea of being a free man seems quite appealing.

Deep Utopia: Life and Meaning in a Solved World Hardcover – March 27, 2024 – Amazon Books

A greyhound catching the mechanical lure—what would he actually do with it? Has he given this any thought?

Bostrom’s previous book, Superintelligence: Paths, Dangers, Strategies, changed the global conversation on AI and became a New York Times bestseller. It focused on what might happen if AI development goes wrong. But what if things go right?

Suppose that we develop superintelligence safely, govern it well, and make good use of the cornucopian wealth and near magical technological powers that this technology can unlock. If this transition to the machine intelligence era goes well, human labor becomes obsolete. We would thus enter a condition of “post-instrumentality”, in which our efforts are not needed for any practical purpose. Furthermore, at technological maturity, human nature becomes entirely malleable.

Here we confront a challenge that is not technological but philosophical and spiritual. In such a solved world, what is the point of human existence? What gives meaning to life? What do we do all day?

Deep Utopia shines new light on these old questions, and gives us glimpses of a different kind of existence, which might be ours in the future.