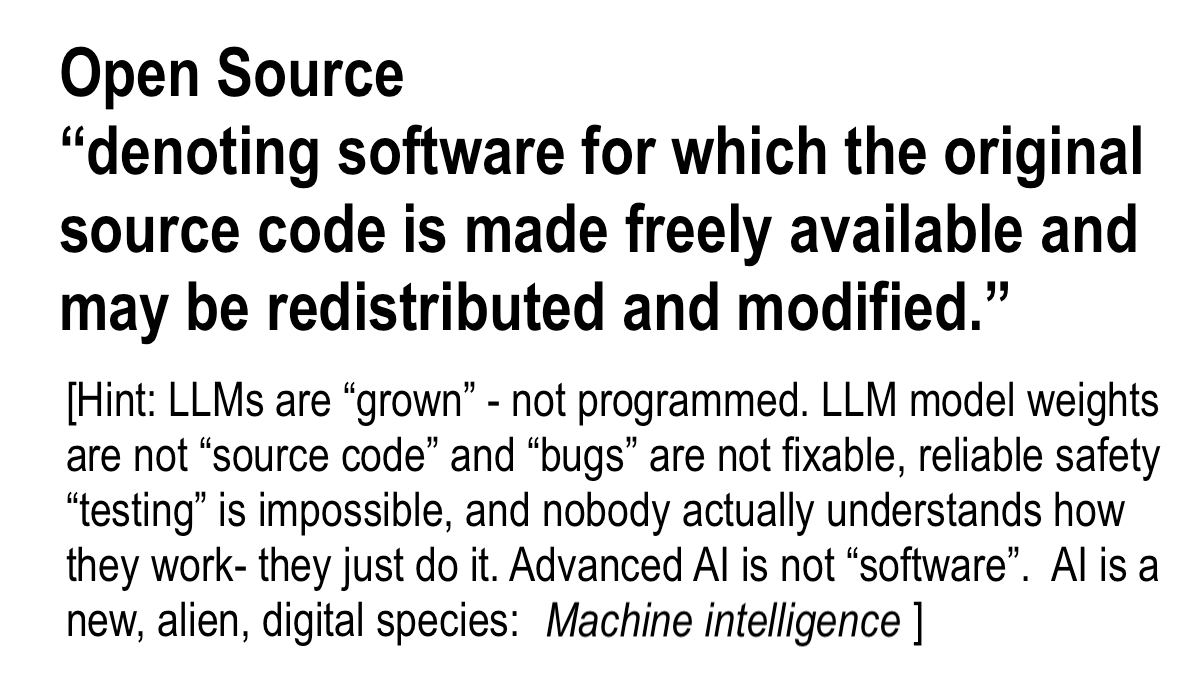

Relevant opinion. Common knowledge: (A) “open source” describes software, and (B) LLMs are not software.

In other words… AI is most certainly not “Open Source Software”. AI is a new, invasive, alien, digital species.

Machine intelligence is being created by Homo sapiens.

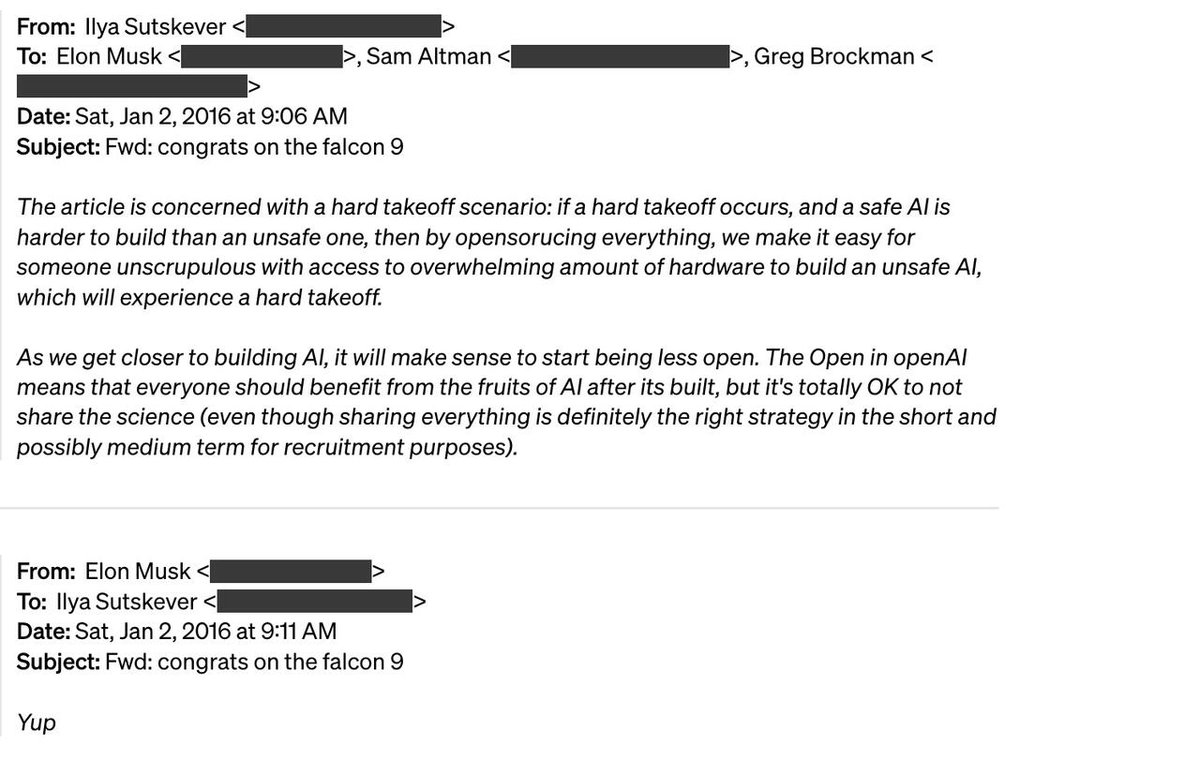

INTERESTING OPINION:

“Open source AI” is a total scam:


— Tolga Bilge (@TolgaBilge_) March 17, 2024


With open source software, one releases the information needed to reproduce the program (the source code). This also allows one to inspect and modify the software.

“Open source” AI is more akin to simply releasing a compiled binary. Unlike source code in traditional software, released model weights and architecture currently give users very little insight into the inner workings of a model. From the model weights alone you also cannot reproduce the program; you just have the program!

A slightly truer open source AI would mean that, instead of releasing the model weights and architecture, one would release the training data and algorithms (including learning algorithms and architecture). In this case you are at least getting the “recipe” to build the program. AI companies won’t do this, because their data is precious to them, is typically obtained and held illegally, and releasing it may have negative legal consequences for them. However, it is worth noting that even then you would not be able to exactly reproduce the model, due to the randomness in the training process.

Whether one releases the model weights or the data and algorithms, neither should reasonably qualify as open source, since having the training data and being able to reproduce the model does not give you significantly more insight into how it actually works or what it is doing. As @NPCollapse has pointed out on a number of occasions, AI models are not normal computer programs. They are grown rather than written: fed on training data until you end up with a bunch of numbers that do the job, without understanding how those numbers work internally. Therefore we should not be particularly surprised when assumptions from open source software do not generalize to AI.

Ultimately, “open source” AI does not seem to serve much purpose other than luring in idealistic young researchers and generating positive vibes on the internet.

Concerning AI safety, there may plausibly be some benefits to releasing the model weights of smaller models, since doing so could aid interpretability and other research. However, most of these benefits could likely be obtained simply by providing private access to researchers. Regarding the most capable models, it seems foolish to publish the weights of models capable enough to be fine-tuned to aid bioterrorist and other attacks that present catastrophic risks. It also seems foolish to do anything that makes it easier for bad actors to build such models from scratch. Therefore, I do not think we should do either kind of “open source” with potentially dangerous frontier AI models; in any case, both kinds fall far short of what any reasonable meaning of the term would imply.