Arguing Machines: Human Supervision of Black Box AI Systems That Make Life-Critical Decisions

Lex Fridman*, Li Ding, Benedikt Jenik, Bryan Reimer
Massachusetts Institute of Technology (MIT)

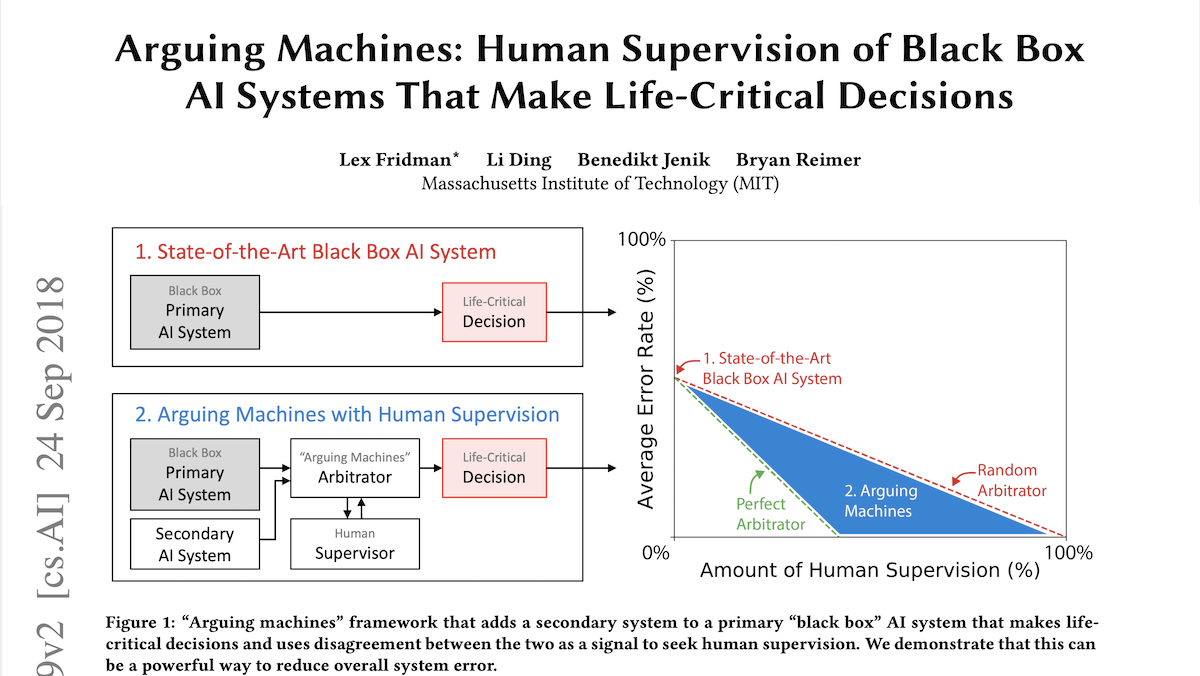

ABSTRACT We consider the paradigm of a black box AI system that makes life-critical decisions. We propose an “arguing machines” framework that pairs the primary AI system with a secondary one that is independently trained to perform the same task. We show that disagreement between the two systems, without any knowledge of underlying system design or operation, is sufficient to arbitrarily improve the accuracy of the overall decision pipeline given human supervision over disagreements. We demonstrate this system in two applications: (1) an illustrative example of image classification and (2) large-scale real-world semi-autonomous driving data. In the first application, the framework reduces top-5 error on ImageNet from 8.0% to 2.8%. In the second application, we apply the framework to Tesla Autopilot and demonstrate the ability to predict 90.4% of system disengagements that were labeled by human annotators as challenging and needing human supervision.
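The arbitration idea described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the names `arbitrate`, `toy_primary`, `toy_secondary`, `toy_human`, and `evaluate` are hypothetical, the two "systems" are toy functions rather than trained models, and the human supervisor is assumed to always answer correctly. Both systems are treated as black boxes; only their outputs are compared.

```python
from typing import Callable, Iterable, Tuple, TypeVar

X = TypeVar("X")  # input type (e.g. an image or a driving scene)
Y = TypeVar("Y")  # decision type (e.g. a class label or steering command)


def arbitrate(
    x: X,
    primary: Callable[[X], Y],
    secondary: Callable[[X], Y],
    ask_human: Callable[[X, Y, Y], Y],
) -> Tuple[Y, bool]:
    """Return (decision, escalated) for one input.

    If the two black-box systems agree, their shared output is used.
    If they disagree, the case is escalated to a human supervisor.
    """
    a = primary(x)
    b = secondary(x)
    if a == b:
        return a, False
    return ask_human(x, a, b), True


# --- Toy setup (all assumptions, for illustration only) ---
def truth(x: int) -> int:
    return x % 3


def toy_primary(x: int) -> int:
    # Wrong on every multiple of 5.
    return (x + 1) % 3 if x % 5 == 0 else x % 3


def toy_secondary(x: int) -> int:
    # Wrong on every multiple of 7, with the same kind of mistake.
    return (x + 1) % 3 if x % 7 == 0 else x % 3


def toy_human(x: int, a: int, b: int) -> int:
    # Assumed-perfect supervisor.
    return truth(x)


def evaluate(inputs: Iterable[int]) -> Tuple[int, int, int]:
    """Count primary-only errors, pipeline errors, and escalations."""
    primary_errors = combined_errors = escalations = 0
    for x in inputs:
        decision, escalated = arbitrate(x, toy_primary, toy_secondary, toy_human)
        primary_errors += toy_primary(x) != truth(x)
        combined_errors += decision != truth(x)
        escalations += escalated
    return primary_errors, combined_errors, escalations


print(evaluate(range(35)))  # (7, 1, 10)
```

In this toy run the primary system alone makes 7 errors on 35 inputs, while the arbitrated pipeline makes 1 at the cost of 10 human reviews. The remaining error is the input on which both systems make the same mistake: agreement on a wrong answer is never escalated, so in this sketch the benefit depends on the two systems failing on different inputs.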