Google DeepMind. Data curation via joint example selection further accelerates multimodal learning.

09 July 2024

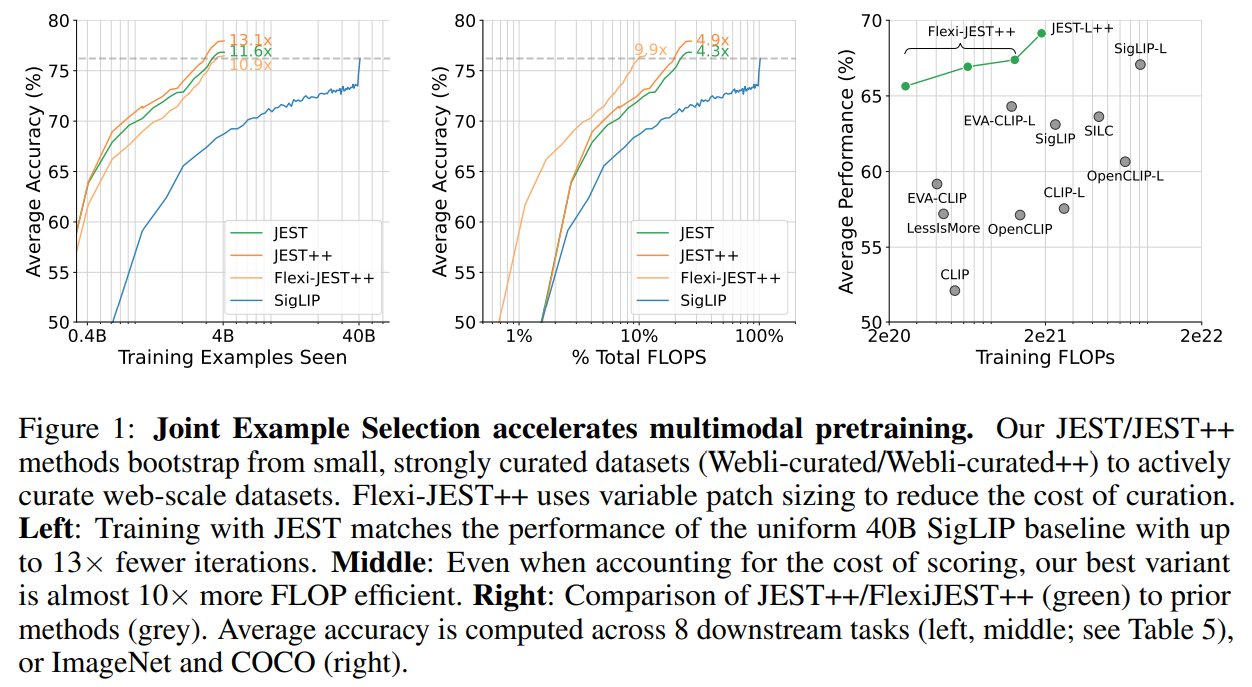

Abstract. Data curation is an essential component of large-scale pretraining. In this work, we demonstrate that jointly selecting batches of data is more effective for learning than selecting examples independently. Multimodal contrastive objectives expose the dependencies between data and thus naturally yield criteria for measuring the joint learnability of a batch. We derive a simple and tractable algorithm for selecting such batches, which significantly accelerate training beyond individually prioritized data points. As performance improves by selecting from larger super-batches, we also leverage recent advances in model approximation to reduce the associated computational overhead. As a result, our approach, multimodal contrastive learning with joint example selection (JEST), surpasses state-of-the-art models with up to 13× fewer iterations and 10× less computation. Essential to the performance of JEST is the ability to steer the data selection process towards the distribution of smaller, well-curated datasets via pretrained reference models, exposing the level of data curation as a new dimension for neural scaling laws.
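To see why contrastive objectives expose dependencies between examples, consider the per-example loss of a matched image-text pair: every other pair in the batch serves as a negative, so the same pair scores differently depending on its batch-mates. The sketch below uses a CLIP-style symmetric softmax loss with a hypothetical temperature of 0.1 and toy 2-D embeddings. It illustrates the general idea only and is not code from the JEST paper.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def logsumexp(xs: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(x)))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def per_example_contrastive_loss(
    images: List[Vector], texts: List[Vector], temperature: float = 0.1
) -> List[float]:
    """CLIP-style symmetric softmax loss for each matched pair (images[i], texts[i]).

    All other texts (for an image) and images (for a text) in the batch act as
    negatives, so each pair's loss depends on which pairs share its batch.
    """
    n = len(images)
    # logits[i][j]: scaled dot-product similarity between image i and text j
    logits = [
        [sum(a * b for a, b in zip(images[i], texts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    losses = []
    for i in range(n):
        image_to_text = logsumexp(logits[i]) - logits[i][i]
        text_to_image = logsumexp([logits[k][i] for k in range(n)]) - logits[i][i]
        losses.append(0.5 * (image_to_text + text_to_image))
    return losses


# The same pair 0 ([1, 0] matched with [1, 0]) next to an easy or a hard negative.
easy = per_example_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
hard = per_example_contrastive_loss([[1.0, 0.0], [0.8, 0.6]], [[1.0, 0.0], [0.8, 0.6]])
print(f"pair 0 with easy negative: {easy[0]:.5f}")
print(f"pair 0 with hard negative: {hard[0]:.5f}")
```

Pair 0 is identical in both calls; only its batch-mate changes. Next to an orthogonal (easy) negative its loss is about 4.5e-5, while a nearby (hard) negative raises it to log(1 + e^-2), roughly 0.127.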

Discussion. We proposed a method, JEST, for jointly selecting the most learnable batches of data, which significantly accelerates large-scale multimodal learning, surpassing the previous state of the art with up to 10× fewer FLOPs and 13× fewer examples. In particular, our experiments point to the strong potential for “data quality bootstrapping”: using small curated datasets to guide learning on much larger, uncurated ones. Recent work has shown that static dataset filtering, without knowledge of downstream training, can ultimately limit performance [18]. Our results demonstrate that useful batches, which must be constructed online, improve pretraining efficiency beyond individually selected examples. These findings therefore advocate for foundation distributions, either through pre-scored datasets with easy-reference JEST or dynamically adjusted to the demands of the model with learnability JEST, as a more general and effective replacement for generic foundation datasets.

Limitations. While our method has accelerated multimodal learning of canonical downstream tasks, it has relied on small, well-curated reference datasets which specify the distribution to prioritize within much larger uncurated data. We would therefore encourage future work exploring the inference of reference datasets from the set of downstream tasks of interest.

Summary by Gemini 1.5 Pro:

This report presents a novel approach for data curation, called Joint Example Selection (JEST), that significantly accelerates multimodal learning, particularly for image-text models.

Here’s a summary of the key points:

Problem:

Traditional data curation methods focus on individual data points, neglecting the importance of batch composition for learning.

Manually curating large datasets is expensive and difficult to scale.

Solution:

JEST utilizes contrastive learning objectives to measure the joint learnability of data batches.

The algorithm selects “sub-batches” from larger “super-batches” based on their learnability score, considering both the model’s current state and a pretrained reference model.

JEST leverages online model approximation techniques to make scoring large batches computationally feasible.
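The cost concern above comes from scoring every candidate in the super-batch while training only on the kept sub-batch. A back-of-the-envelope estimate shows why this matters. It assumes the common rule of thumb that a backward pass costs about twice a forward pass, and that each scoring pass is one full-size forward pass. The helper names and numbers below are illustrative assumptions, not figures from the paper.

```python
def super_batch_size(sub_batch: int, filtering_ratio: float) -> int:
    """Super-batch size B such that keeping `sub_batch` examples discards a fraction f = 1 - b/B."""
    if not 0.0 <= filtering_ratio < 1.0:
        raise ValueError("filtering_ratio must be in [0, 1)")
    return round(sub_batch / (1.0 - filtering_ratio))


def scoring_overhead(filtering_ratio: float, scoring_passes: int = 1,
                     backward_to_forward: float = 2.0) -> float:
    """Scoring FLOPs divided by training FLOPs for one step (rough estimate).

    Assumes each scoring pass is one full-size forward pass per candidate and a
    training step costs (1 + backward_to_forward) forward passes per kept example.
    """
    if not 0.0 <= filtering_ratio < 1.0:
        raise ValueError("filtering_ratio must be in [0, 1)")
    candidates_per_kept = 1.0 / (1.0 - filtering_ratio)
    return scoring_passes * candidates_per_kept / (1.0 + backward_to_forward)


# Hypothetical sub-batch of 1000 examples; two scoring passes = learner + reference.
for f in (0.5, 0.8, 0.9):
    print(f"f={f}: B={super_batch_size(1000, f)}, "
          f"overhead={scoring_overhead(f, scoring_passes=2):.2f}x")
```

At a filtering ratio of 0.8, scoring with both a learner and a reference model would cost more than three times the training step itself under these assumptions. That is why cheaper approximate scoring (and, for easy-reference variants, pre-computed reference scores) pays off.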

Results:

JEST surpasses baseline models and previous data curation methods while using up to 13 times fewer training iterations and 10 times less computation.

JEST demonstrates strong data quality bootstrapping: a reference model trained on a small, well-curated dataset effectively guides the selection of high-quality data from a much larger dataset.

JEST is robust to different contrastive loss formulations and scales effectively with model size.

The proposed method simplifies data curation by eliminating the need for pre-filtering.

Implications:

JEST offers a more efficient and effective way to train multimodal models at scale.

It underscores the importance of foundation distributions, either static or dynamically adjusted, for data-efficient learning.

Limitations:

JEST relies on small, well-curated reference datasets, which may limit its application to specific domains.

Further research is needed to explore the inference of reference datasets from the set of downstream tasks.

Overall, JEST is a promising approach for accelerating multimodal learning by utilizing the joint learnability of data batches and enabling efficient data quality bootstrapping. It holds significant potential for advancing the field of large-scale multimodal modeling.

Learn More:

Data curation is critical in large-scale pretraining and significantly affects performance in language, vision, and multimodal modeling. Well-curated datasets can achieve strong performance with less data, but current pipelines often rely on manual curation, which is costly and hard to scale. Model-based data curation, which uses features of the model being trained to select high-quality data, could improve scaling efficiency. Traditional methods score individual data points, but the quality of a batch also depends on its composition. In computer vision, for example, hard negatives (clusters of nearby points that carry different labels) provide a more effective learning signal than trivially solvable ones. Researchers from Google DeepMind have shown that selecting batches of data jointly rather than independently enhances learning. Using multimodal contrastive objectives, they developed JEST, a simple algorithm for joint example selection. It selects the most learnable sub-batches from larger super-batches, significantly accelerating training, and uses model approximation to reduce the computational overhead of scoring. By leveraging pretrained reference models, JEST guides the data selection process, improving performance with fewer iterations and less computation. Flexi-JEST, a variant of JEST, further reduces costs using variable patch sizing. The approach outperforms state-of-the-art models, demonstrating the effectiveness of model-based data curation.
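Variable patch sizing saves compute because, assuming a Vision Transformer (ViT) image encoder, sequence length is set by the patch size: a square image of side S split into p×p patches yields (S/p)² tokens. The sketch below assumes a 224-pixel input and patch sizes 16 and 32, chosen only for illustration; it does not reproduce Flexi-JEST's actual configuration.

```python
def num_patches(image_size: int, patch_size: int) -> int:
    """Number of non-overlapping patch tokens for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    return (image_size // patch_size) ** 2


def token_ratio(image_size: int, coarse_patch: int, fine_patch: int) -> float:
    """Fraction of tokens left when switching from fine to coarse patches."""
    return num_patches(image_size, coarse_patch) / num_patches(image_size, fine_patch)


# Illustrative sizes only (hypothetical, not the paper's settings).
print(num_patches(224, 16), num_patches(224, 32), token_ratio(224, 32, 16))
```

Doubling the patch size cuts the token count by 4×. Per-token layers (projections and MLPs) shrink proportionally, and self-attention, whose cost grows with the square of the sequence length, shrinks even more. This makes a coarser-patch pass attractive as an approximate scorer.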

Offline curation methods initially focused on the quality of textual captions and their alignment with high-quality datasets, using pretrained models such as CLIP and BLIP for filtering. These methods, however, fail to consider dependencies within batches. Cluster-level data pruning methods address this by reducing semantic redundancy and using core-set selection, but they are heuristic-based and decoupled from the training objective. Online data curation adapts during learning, addressing the limitations of fixed strategies. Hard negative mining optimizes the selection of challenging examples, while model approximation techniques allow smaller models to act as proxies for larger ones, making data selection during training more efficient.

JEST selects the most learnable sub-batches of data from a larger super-batch using model-based scoring functions that consider losses from both the learner and a pretrained reference model. Prioritizing batches with high loss under the learner can discard trivial data but may also up-sample noise. Conversely, prioritizing data with low loss under the reference model can identify high-quality examples but makes selection overly dependent on the reference model. Learnability scoring combines the two, prioritizing data that the learner has not yet learned but that is learnable, which accelerates large-scale learning. Efficient scoring with online model approximation and multi-resolution training further optimizes the process.
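The three scoring rules can be compared on per-example losses. The sketch below defines learnability as the learner's loss minus the reference model's loss, which is one way to combine the two criteria described above. The toy loss values and function names are hypothetical, and real JEST scoring operates on batches rather than on isolated examples.

```python
from typing import Dict, List


def score_examples(learner_loss: List[float],
                   reference_loss: List[float]) -> Dict[str, List[float]]:
    """Return one score list per criterion; higher score = higher selection priority."""
    return {
        # hard learner: prefer what the current model still gets wrong
        "hard_learner": list(learner_loss),
        # easy reference: prefer what the curated reference model finds easy
        "easy_reference": [-ref for ref in reference_loss],
        # learnability: not yet learned by the learner, but learnable per the reference
        "learnability": [lrn - ref for lrn, ref in zip(learner_loss, reference_loss)],
    }


def top_k(scores: List[float], k: int) -> List[int]:
    """Indices of the k highest scores."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


# Hypothetical examples: 0 = noisy pair, 1 = learnable pair, 2 = already learned pair
learner = [5.0, 3.0, 0.4]
reference = [5.0, 0.5, 0.3]
scores = score_examples(learner, reference)
for name, s in scores.items():
    print(name, top_k(s, 1))
```

Hard-learner scoring picks the noisy pair, easy-reference scoring picks a pair the learner has already mastered, and learnability picks the pair that is both unlearned and learnable, mirroring the trade-offs described in the text.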

JEST was evaluated on its ability to form learnable batches: it rapidly increases batch learnability within a few iterations, outperforms independent selection, and achieves results comparable to brute-force selection. In multimodal learning, JEST significantly accelerates training and improves final performance, with benefits that grow with the filtering ratio. Flexi-JEST, a compute-efficient variant using multi-resolution training, reduces computational overhead while maintaining these speedups. JEST’s performance improves with stronger data curation, and it surpasses prior models on multiple benchmarks, demonstrating both training and compute efficiency.

In conclusion, JEST, a method for jointly selecting the most learnable data batches, significantly accelerates large-scale multimodal learning, achieving superior performance with up to 10× fewer FLOPs and 13× fewer examples. It highlights the potential for “data quality bootstrapping,” where small curated datasets guide learning on larger, uncurated ones. Unlike static dataset filtering, which can limit performance, constructing useful batches online improves pretraining efficiency. This suggests that foundation distributions, whether built from pre-scored datasets or dynamically adjusted with learnability JEST, can effectively replace generic foundation datasets. However, the method relies on small, curated reference datasets, so future research is needed to infer reference datasets from downstream tasks.
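The gap between independent and joint selection can be illustrated with a loss that decomposes into pairwise terms (as a sigmoid contrastive loss does), so a batch's learnability is the sum of entries of an N×N matrix over the chosen indices. The sketch compares three strategies on a hypothetical 4-candidate matrix: ranking candidates independently, a deterministic greedy pass that scores each new chunk conditioned on what is already chosen, and brute-force search. The greedy loop is a simplified stand-in, not JEST's actual sampler, and the matrix values are made up.

```python
from itertools import combinations
from typing import List, Sequence

Matrix = List[List[float]]


def batch_score(m: Matrix, batch: Sequence[int]) -> float:
    """Sum of all pairwise terms (diagonal included) inside the batch."""
    return sum(m[i][j] for i in batch for j in batch)


def independent_selection(m: Matrix, k: int) -> List[int]:
    """Rank candidates by their own diagonal term only, ignoring batch-mates."""
    return sorted(range(len(m)), key=lambda i: m[i][i], reverse=True)[:k]


def greedy_joint_selection(m: Matrix, k: int, chunk: int = 1) -> List[int]:
    """Hypothetical greedy stand-in: add `chunk` examples at a time, each scored
    by its own term plus its interactions with examples already selected."""
    selected: List[int] = []
    while len(selected) < k:
        remaining = [c for c in range(len(m)) if c not in selected]

        def conditional_gain(c: int) -> float:
            return m[c][c] + sum(m[c][s] + m[s][c] for s in selected)

        remaining.sort(key=conditional_gain, reverse=True)
        selected.extend(remaining[: min(chunk, k - len(selected))])
    return selected


def brute_force_selection(m: Matrix, k: int) -> List[int]:
    """Exhaustive search over all size-k subsets (only feasible for tiny N)."""
    return list(max(combinations(range(len(m)), k), key=lambda b: batch_score(m, b)))


# Made-up pairwise learnability terms: candidates 0 and 1 interfere with each other.
learnability_matrix = [
    [3.0, -2.0, 0.0, 0.0],
    [-2.0, 2.9, 0.0, 0.0],
    [0.0, 0.0, 2.5, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
for name, pick in [
    ("independent", independent_selection(learnability_matrix, 2)),
    ("greedy joint", greedy_joint_selection(learnability_matrix, 2)),
    ("brute force", brute_force_selection(learnability_matrix, 2)),
]:
    print(name, pick, round(batch_score(learnability_matrix, pick), 2))
```

Independent ranking keeps candidates 0 and 1, whose negative interaction drags the batch score down to 1.9. The conditional greedy pass swaps 1 for 2 and reaches 5.5, the same batch brute force finds. Setting `chunk=2` here reverts to the independent answer, since both picks are then made before any conditioning happens.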