“Show me the incentive and I’ll show you the outcome.”

— Charlie Munger (1924-2023)

“There is a belief in the market that the invention of intelligence has infinite return.” — Eric Schmidt (2024)

On the Origin of Species: Chapter III, Struggle for Existence: “The forms which stand in closest competition with those undergoing modification and improvement will naturally suffer most. But Natural Selection, as we shall hereafter see, is a power incessantly ready for action, and is immeasurably superior to man’s feeble efforts.”

— Charles Darwin (1859)

OPINION: AGI is not a technology tool. AGI is an Agent. AGI is not “open-source” because nobody knows how it works, so nobody can improve the safety inside “the black box”. The weights and parameters are meaningless to humans. AGI is unpredictable, uncontrollable and untestable for safety. Machine intelligence is emerging as a new species in our environment, with intelligence vastly superior to that of the human race. Manageable regulations are certainly good for any unregulated industry, helping it protect its own market. SB 1047 was light-touch and was supported by 80% of Californians, by California Labor, by Hollywood, by an overwhelmingly bipartisan California Assembly AND by leading AI scientists including Bengio, Hinton, Russell, Tegmark and thousands more. Regulations will certainly come, but will they come in time to manage a “controlled intelligence explosion” – in time to avoid catastrophe? Or are we in for a FOOM, with a “P(doom)” of 10 to 90%? — SafeAI Editorial Board

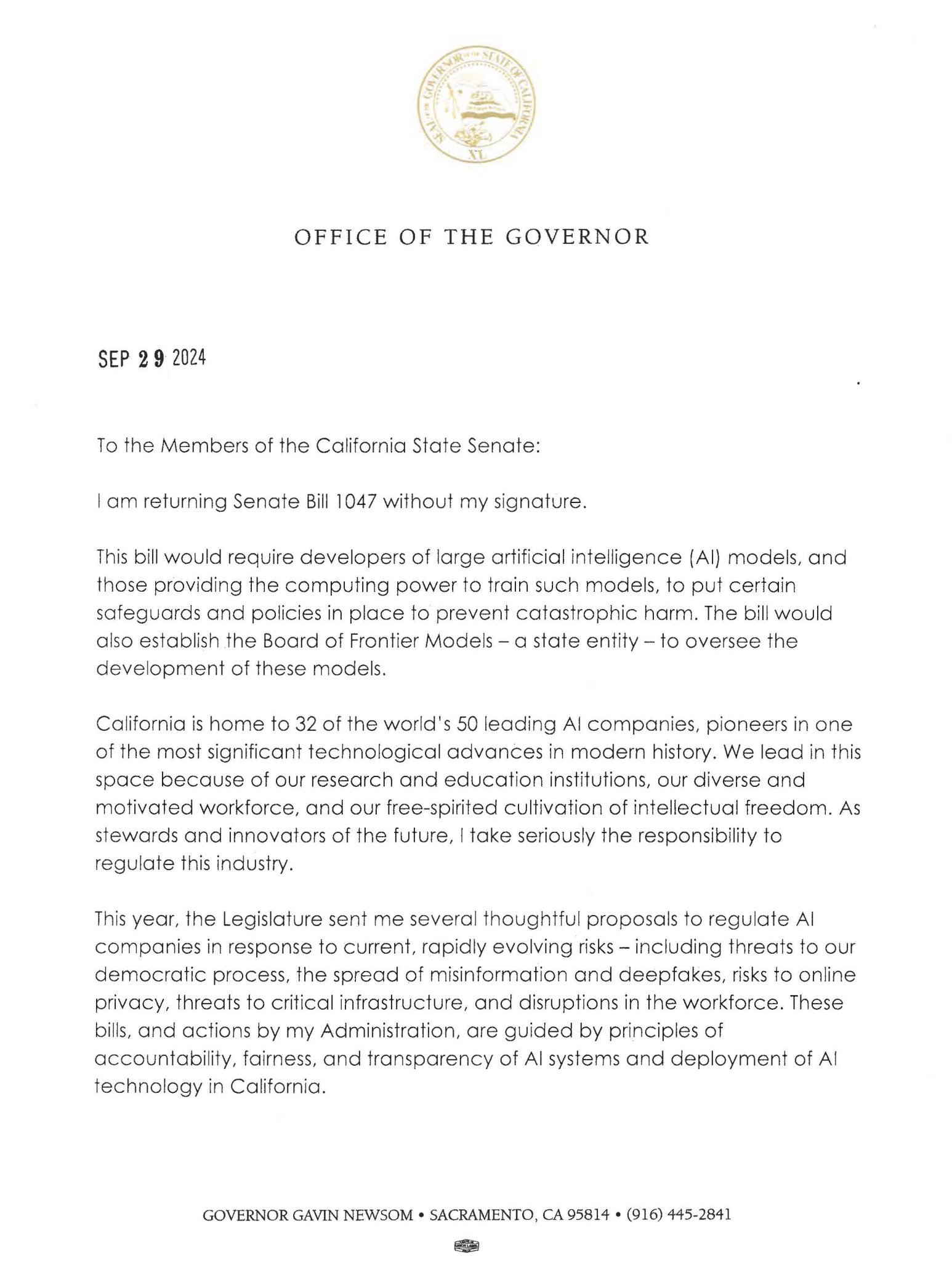

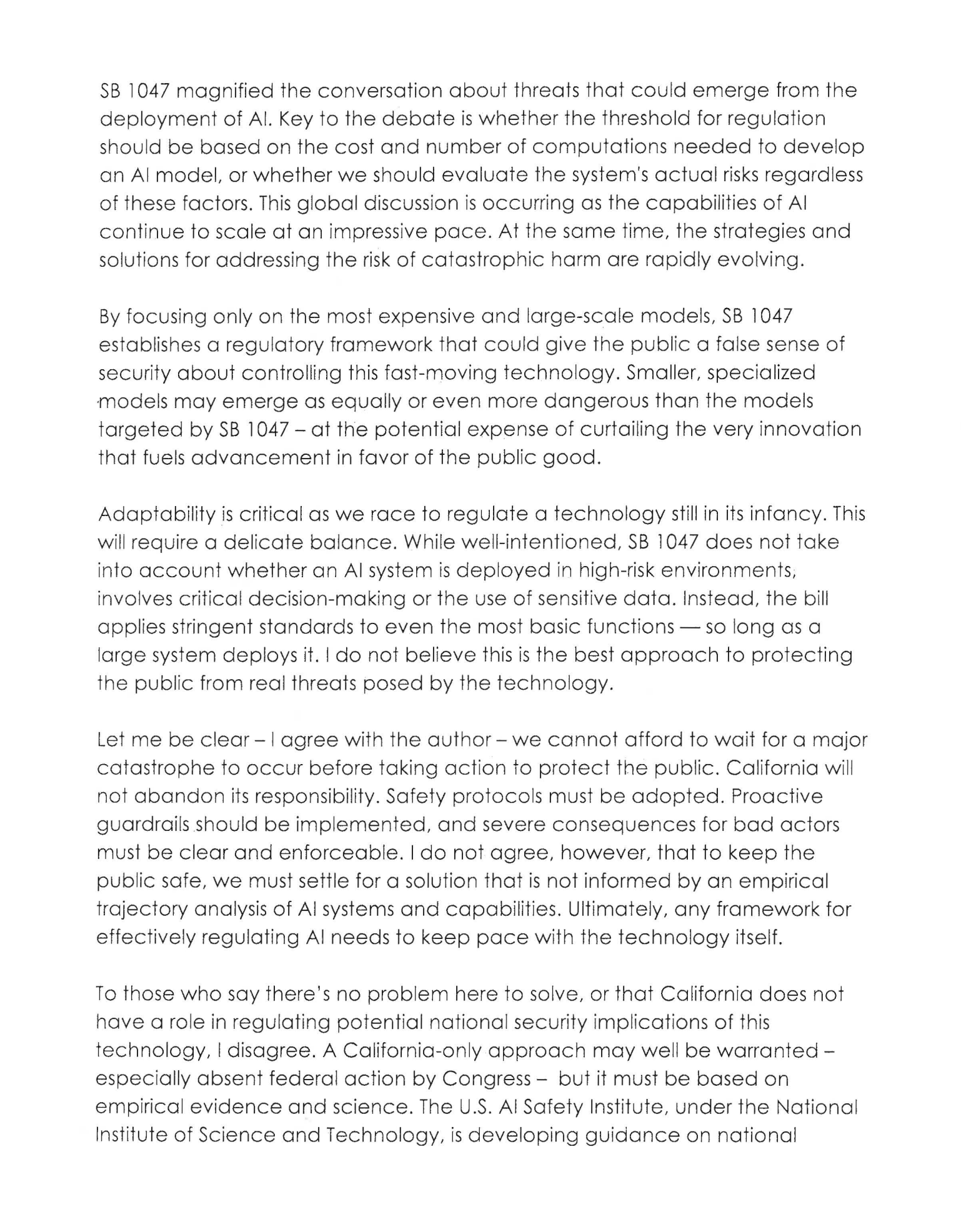

“I do not believe this is the best approach to protecting the public from real threats posed by the technology… Ultimately, any framework for effectively regulating AI needs to keep pace with the technology itself.” — California Governor Gavin Newsom (29 September 2024)

My statement on the Governor’s veto of SB 1047: pic.twitter.com/SsuBvV2mMI

— Senator Scott Wiener (@Scott_Wiener) September 29, 2024

Gov. Gavin Newsom vetoes AI safety bill opposed by Silicon Valley https://t.co/JpPxbLrK14

— Los Angeles Times (@latimes) September 29, 2024

Scoop: @GavinNewsom just vetoed SB 1047

He does plan to work on a more comprehensive AI bill next year https://t.co/AGj6wYqWPz pic.twitter.com/sarWIlRrzx

— Preetika Rana (@Preetika_Rana) September 29, 2024

Newsom vetoed SB 1047. The reasoning given here is so transparently weak. Like even if you bought that smaller models were JUST as likely to cause problems, why wouldn’t it be better to regulate some models while you work on more comprehensive regulations? This justification… pic.twitter.com/CyOAJ1erQv

— Garrison Lovely (@GarrisonLovely) September 29, 2024

Governor Gavin Newsom’s veto of SB 1047 is disappointing. This bill presented a reasonable path for protecting Californians and safeguarding the AI ecosystem, while encouraging innovation.

But I am not discouraged. The bill encouraged collaboration between industry, academics…

— Dan Hendrycks (@DanHendrycks) September 29, 2024

Thank you @gavinnewsom for vetoing SB1047 — for siding with California Dynamism, economic growth, and freedom to compute, over safetyism, doomerism, and decline. 💪🚀✨

— Marc Andreessen 🇺🇸 (@pmarca) September 29, 2024

Is there one single person in the state of California who believes that this is Newsom’s real reason for the veto – SB 1047 isn’t comprehensive enough! (from WSJ: https://t.co/Sc77iHluCd) pic.twitter.com/LY6g2UPP2W

— Kelsey Piper (@KelseyTuoc) September 29, 2024

Thank you Governor @GavinNewsom for vetoing SB-1047 — your pro-innovation leadership is much appreciated!

And to the many people who’ve been pushing back on SB-1047, a huge thank you as well. Congratulations to all — we won! 🎉

Looking ahead, lets keep on protecting AI…

— Andrew Ng (@AndrewYNg) September 29, 2024

I and so many other people have just lost so much respect for @GavinNewsom

I’m deeply disappointed by him suppressing the will of the majority of Californians.

— Kat Woods ⏸️ (@Kat__Woods) September 29, 2024

This comment from Gavin Newsom on vetoing SB 1047, the AI “safety” bill, is absolutely spot on.

I hope a diverse group of folks will now work together to help create less risky and more effective regulations. pic.twitter.com/Kwsg5yeBZx

— Jeremy Howard (@jeremyphoward) September 29, 2024

AI can and will be used for so much good. But just like we’ve seen with social media, there could also be seriously damaging side effects if governments don’t lay down some rules. I was hoping to see California step up and lead the way with #SB1047. And while I’m disappointed to… pic.twitter.com/byJWslkkGh

— Joseph Gordon-Levitt (@hitRECordJoe) September 29, 2024

Well, @GavinNewsom had lost me forever. What an act of cowardice. All our insiders told us that we needed to fluff up his ego and present SB-1047 as a “hero moment”. Instead it was a coward, sell-out moment that I will never forget.

— Holly ⏸️ Elmore (@ilex_ulmus) September 29, 2024

A broad bipartisan coalition came together to support SB 1047, including many academic researchers (including Turing Award winners Yoshua Bengio and Geoffrey Hinton), the California legislature, 77% of California voters, 120+ employees at frontier AI companies, 100+ youth…

— Dan Hendrycks (@DanHendrycks) September 29, 2024

Appreciate @GavinNewsom for his leadership in recognizing that SB 1047, as written, would have slowed innovation, learning, and progress toward safe AI by imposing a vague and preemptive regulatory regime on AI developers worldwide, including many California-based companies.…

— Reid Hoffman (@reidhoffman) September 29, 2024

Article: California won’t require big tech firms to test safety of AI after Newsom kills bill https://t.co/f9PYatcALs

— Mark Ruffalo (@MarkRuffalo) September 30, 2024

Instead of focusing on frontier models where the risk is greatest, Newsom wants a bill that covers *all* AI models, big and small.

Opponents of SB1047 will regret not accepting the narrow approach when they had the chance. This is what “safety isn’t a model property” gets you. pic.twitter.com/BaXNNMezzQ

— Samuel Hammond 🌐🏛 (@hamandcheese) September 29, 2024

Today, Governor Newsom vetoed our AI safety bill, SB 1047.

I still remember when @EncodeJustice was invited to join this effort as an official co-sponsor earlier this year. I expected that few eyes outside California would land on the bill, and that it would join the docket as a… pic.twitter.com/F2V70HNckD

— Sneha Revanur (@SnehaRevanur) September 29, 2024

Newsom vetoing SB1047 is terrible news for global AI safety regulation. It shows how hard it’s going to be for politicians to stand up to wealthy AI corporate interests, even when the public overwhelmingly supports regulation. https://t.co/R4Tr0SzSX4

— Ed Newton-Rex (@ednewtonrex) September 29, 2024

Any sensible explanation of the SB 1047 veto has to take into account Newsom’s political ambitions.

Ron Conway and Reid Hoffman — both investors and major Democratic power brokers — didn’t like the bill. Newsom needs their support. That’s the main thing that happened here.

— Shakeel (@ShakeelHashim) September 30, 2024

The fundamental problem with SB 1047 was that it regulated AI models rather than AI systems. “Is this model dangerous” is an impossible question to answer because it depends on how the model is used. https://t.co/FQDiCwhWw6 pic.twitter.com/RCvvXXPc55

— Timothy B. Lee (@binarybits) September 30, 2024

While the final version of SB 1047 was not perfect, it was a promising first step towards mitigating potentially severe and far reaching risks associated with AI development.

— Jack Clark (@jackclarkSF) September 29, 2024

Lifecycle of SB1047:

* First-draft written by a niche special-interest stakeholder

* Draft publicly socialized too quickly before other stakeholders can weigh-in privately.

* Public socialization starts a political gridlock — people have to take sides and double-down, anything…

— Soumith Chintala (@soumithchintala) September 29, 2024

Glad to see SB 1047 vetoed — it leaned heavily on training compute + cost to estimate model risk.

We know these are misleading estimates of risk. I took time earlier this year to write an essay explaining why.

This is a step in the right direction. https://t.co/8EcMK1vLvz

— Sara Hooker (@sarahookr) September 30, 2024

Tech executives and investors opposed the measure, which would have required companies to test the most powerful AI systems before release.

Tech executives, investors and prominent California politicians, including Rep. Nancy Pelosi (D), had argued the bill would quash innovation by making it legally risky to develop new AI systems, since it could be difficult or impossible to test for all the potential harms of the multipurpose technology. Opponents also argued that those who used AI for harm — not the developers — should be penalized.

The bill’s proponents, including pioneering AI researchers Geoffrey Hinton and Yoshua Bengio, argued that the law only formalized commitments that tech companies had already made voluntarily. California state Sen. Scott Wiener, the Democrat who authored the bill, said the state must act to fill the vacuum left by lawmakers in Washington, where no new AI regulations have passed despite vocal support for the idea.

Hollywood also weighed in, with Star Wars director J.J. Abrams and “Moonlight” actor Mahershala Ali among more than 120 actors and producers who signed a letter this past week asking Newsom to sign the bill.

California’s AI bill had already been weakened several times by the state’s legislature, and the law gained support from AI company Anthropic and X owner Elon Musk. But lobbyists from Meta, Google and major venture capital firms, as well as founders of many tech start-ups, still opposed it.

In a statement to California’s legislature on his veto, Newsom wrote that the bill was wrong to single out AI projects using more than a certain amount of computing power while ignoring an AI system’s use case, such as whether it is involved in critical decision-making or uses sensitive data.

“I do not believe this is the best approach to protecting the public from real threats posed by the technology,” he said. “A California-only approach may well be warranted — especially absent federal action by Congress — but it must be based on empirical evidence and science.”

Newsom also announced Sunday that his administration will work with leading academics to develop what his office called “workable guardrails” for deploying generative AI technology. The experts involved include Fei-Fei Li, co-director of Stanford’s Human-Centered AI Institute and an AI entrepreneur, and Mariano-Florentino Cuéllar, president of the Carnegie Endowment for International Peace.

Anthony Aguirre, executive director of the Future of Life Institute, a nonprofit that studies existential risks to humanity and vocally advocated for the bill, said in a statement that the veto signaled it was time for federal or even global regulation to oversee Big Tech companies developing AI technology.

“The furious lobbying against the bill can only be reasonably interpreted in one way: these companies believe they should play by their own rules and be accountable to no one,” Aguirre’s statement said.

The California bill had prompted unusually impassioned public debate for a piece of technology regulation. At a tech conference in San Francisco earlier this month, Newsom said the measure had “created its own weather system,” triggering an outpouring of emotional comments. He also noted that the California legislature had passed other AI bills that were more “surgical” than S.B. 1047.

Newsom’s veto came after he signed 17 other AI-related laws, which impose new restrictions on some of the same tech companies that opposed the law he blocked. The regulations include a ban on AI-generated images that seek to deceive voters in the months ahead of elections; a requirement that movie studios negotiate with actors for the right to use their likeness in AI-generated videos; and rules forcing AI companies to create digital “watermark” technology to make it easier to detect AI videos, images and audio.

Newsom’s veto is a major setback for the AI safety movement, a collection of researchers and advocates who believe smarter-than-human AI could be invented soon and argue that humanity must urgently prepare for that scenario. The group is closely connected to the effective altruism community, which has funded think tanks and fellowships on Capitol Hill to influence AI policy and been derided as a “cult” by some tech leaders, such as Meta’s chief AI scientist, Yann LeCun.

Despite those concerns, the majority of AI executives, engineers and researchers are focused on the challenges of building and selling workable AI products, not the risk of the technology one day developing into an able assistant for terrorists or swinging elections with disinformation.

Andrea Jimenez contributed to this report.

Learn more:

- AP: California Governor Vetoes Bill To Create First-In-Nation AI Safety Measures

- ENGADGET: California Gov. Newsom vetoes bill SB 1047 that aims to prevent AI disasters

- FINANCIAL TIMES: California governor vetoes bill to regulate artificial intelligence

- LA TIMES: Gov. Gavin Newsom vetoes AI safety bill opposed by Silicon Valley

- NPR: California Gov. Newsom vetoes AI safety bill that divided Silicon Valley

- TECHCRUNCH: Gov. Newsom vetoes California’s controversial AI bill, SB 1047

- THE GUARDIAN: California won’t require big tech firms to test safety of AI after Newsom kills bill

- THE VERGE: California governor vetoes major AI safety bill

- TIME: Gavin Newsom Blocks Contentious AI Safety Bill in California

- VARIETY: Gavin Newsom Vetoes AI Safety Bill, Which Had Backing of SAG-AFTRA

- WSJ: California’s Gavin Newsom Vetoes Controversial AI Safety Bill