
31 Oct 2024

Watch Jensen Huang’s special address to see the role of AI in India’s digital transformation and how it’s fueling innovation, economic growth, and global leadership.

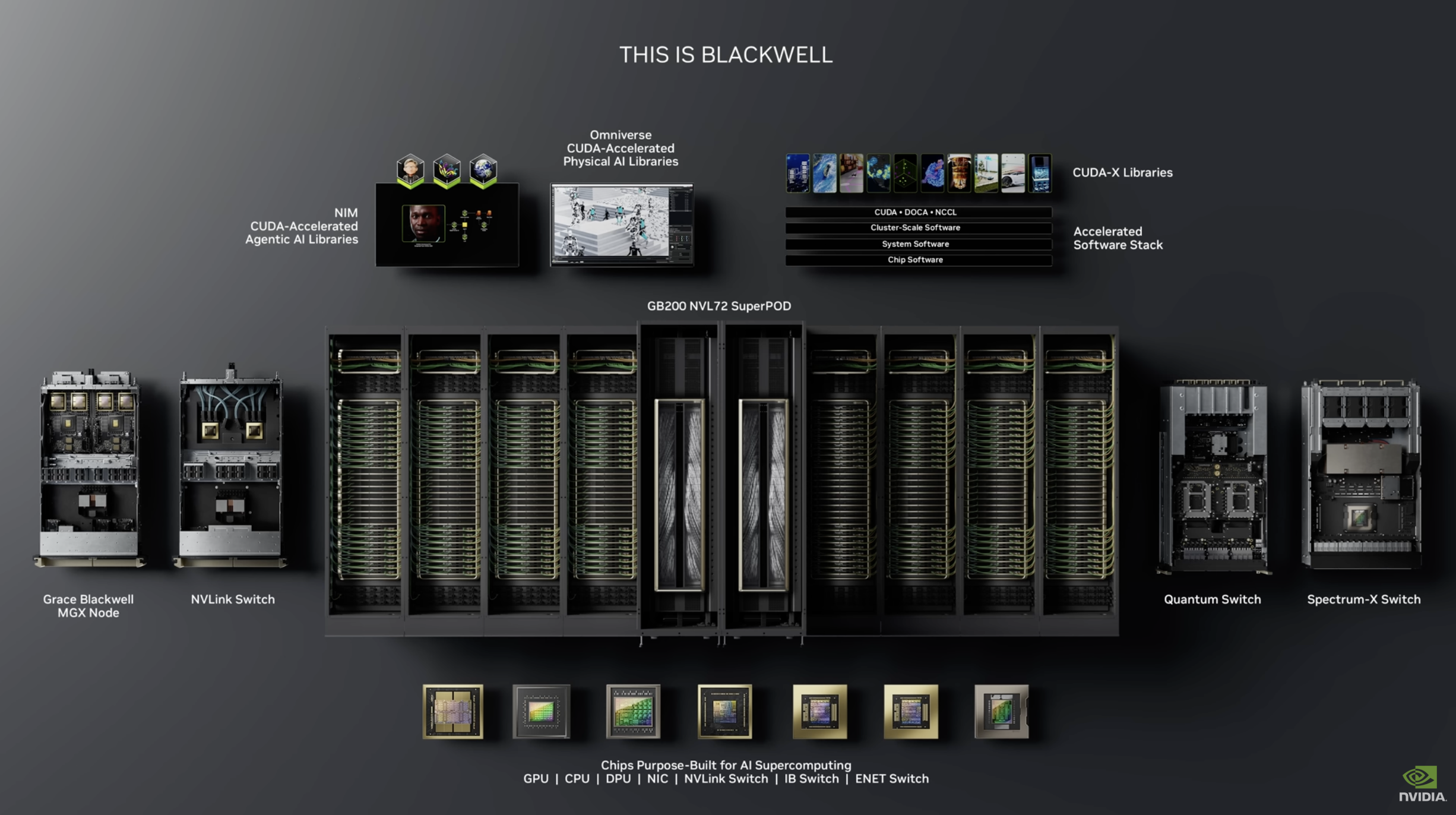

Please welcome Nvidia founder and CEO Jensen Huang. [Applause]

Hello, Mumbai! [Applause] Wow, it’s so great to be here in Mumbai. So much is happening. India, as you know, is very, very dear to the world’s computer industry: central to the IT industry, at the core of the IT of just about every single company in the world. My industry, your industry, the one we’ve built over the last several decades, is going through fundamental change: seismic change, tectonic shifts. Let’s talk about that today. But before we start, let me thank all of our incredible partners that we’re working with here in India to transform the IT industry together. I’m delighted that all of you have joined us today.

There are two fundamental shifts happening at the same time. This hasn’t happened since 1964, the year after my birth. It wasn’t because of my birth, but in 1964 the IBM System/360 introduced the world to the concept of IT, the IT industry as we know it. It introduced the idea of general-purpose computing. IBM described a central processing unit (a CPU), I/O subsystems, multitasking, and the separation of hardware and application software through a layer called the operating system. IBM described family compatibility for applications, so that you could benefit from the installed base of your hardware to run your software over a long period of time. They described architectural compatibility across generations, so that the investment you make in software, and the investments you make in using that software, are not squandered every single time you buy new hardware. In 1964 they recognized the importance of the installed base, the importance of software investment, the importance of building computers that run the software, and architectural discipline. All of that was described in 1964, and I’ve just described today’s computer industry, the same industry that the Indian IT industry was built on. General-purpose computing as we know it has existed for 60 years, until now. For the last 30 years we’ve had the benefit of Moore’s law, an incredible
phenomenon. Without changing the software, the hardware continues to improve in an architecturally compatible way, and the benefit you get from that software doubles every year as a result of performance doubling every year. Depending on your application, you’re reducing your cost by a factor of two every single year, the most incredible depreciating force of any technology the world has ever known. That depreciation, that cost reduction, made it possible for society to use more and more of it. As we continued to consume it and process more data, Moore’s law made it possible for us to keep driving down cost, democratizing computing as we know it today. Those two events, the invention of the System/360 and Moore’s law together with the Windows PC, drove what is unquestionably one of the most important industries in the world. Every single industry has subsequently been built on top of it.

But we know now that CPU scaling has reached its limit. We can’t continue to ride that curve; the free ride of Moore’s law has ended. We now have to do something different, or depreciation will end: instead of enjoying depreciation, we will experience inflation, computing inflation. That’s exactly what’s happening around the world. We can no longer afford to do nothing in software and expect that our computing experience will keep improving, that cost will keep decreasing, that the benefits will keep spreading, and that we can keep solving greater and greater challenges.

We started our company to accelerate software. Our vision was that some applications would benefit from acceleration if we augmented general-purpose computing: we take the workload that is very compute-intensive, offload it, and accelerate it using a programming model we invented called CUDA. That made it possible for us to accelerate applications tremendously, and that acceleration benefit has the same qualities as Moore’s law. For applications that were impossible or impractical to
perform using general-purpose computing, accelerated computing lets us realize that capability. Computer graphics is an example: real-time computer graphics was made possible because Nvidia came into the world and made possible a new processor we call the GPU. The GPU was really the first accelerated computing architecture, running CUDA and running computer graphics, a perfect example. We democratized computer graphics as we know it; 3D graphics is now literally everywhere and can be used as a medium for almost any application. But we felt that in the long term accelerated computing could be far, far more impactful, and so over the last 30 years we’ve been on a journey to accelerate one application domain after another.

The reason this has taken so long is simply this: there is no magical processor that can accelerate everything in the world, because if there were, you would just call it a CPU. You need to reinvent the computing stack, from the algorithms to the architecture underneath, connected to the applications on top, in one domain after another. Computer graphics was the beginning, but we’ve taken the CUDA architecture from one industry to another, and another. Today we accelerate many important industries. cuLitho is fundamental to semiconductor manufacturing: computational lithography. Simulation and computer-aided engineering. Even 5G radios: we’ve recently announced partnerships so that we can accelerate the 5G software stack. Quantum computing, so that we can invent the future of computing with hybrid classical-quantum computing. Parabricks, our gene sequencing software stack. cuVS: one of the most important things every single company is working on is going from databases to knowledge bases, so that we can create AI databases; with cuVS we can vectorize all of your data. cuDF for data frames: data frames are essentially another word for structured data, and SQL acceleration is possible with cuDF.
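The offload idea described here (move the compute-intensive part of a workload to an accelerator and leave the rest on the CPU) can be made concrete with Amdahl’s law. This is the standard textbook formula, not something stated in the talk; the function name `offload_speedup` and the sample fractions are hypothetical, chosen only to illustrate the arithmetic.

```python
def offload_speedup(accelerated_fraction: float, kernel_speedup: float) -> float:
    """Overall speedup when part of a program's runtime is offloaded to an accelerator.

    Amdahl's law: the fraction left on the CPU (1 - p) runs as before,
    while the offloaded fraction p runs kernel_speedup times faster.
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if kernel_speedup <= 0.0:
        raise ValueError("kernel_speedup must be positive")
    remaining_cpu_time = 1.0 - accelerated_fraction
    return 1.0 / (remaining_cpu_time + accelerated_fraction / kernel_speedup)


if __name__ == "__main__":
    # Hypothetical: the offloaded part runs 50x faster; vary how much of the runtime is offloaded.
    for p in (0.50, 0.90, 0.99, 1.00):
        print(f"offloaded {p:.0%} of runtime -> {offload_speedup(p, 50.0):.1f}x overall")
```

With a 50x faster kernel, offloading half of the runtime yields only about 2x overall, while offloading 99% yields about 33.6x. That is one way to read why each domain needed its own rewritten library: whatever stays on the CPU caps the overall gain.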
In each of these libraries we’re able to accelerate the application 20, 30, 50 times. Of course, it takes a rewrite of the software, which is the reason it has taken so long. In each of these domains we’ve had to work with the industry, with our ecosystem of software developers, and with customers to accelerate the applications for their domains. cuOpt, one of my favorites, is for combinatorial optimization, a very compute-intensive class of applications: for example, the traveling salesperson problem, every supply chain, every driver-rider combination. Those applications can be accelerated with cuOpt, with incredible speedups. Modulus teaches AI the laws of physics, not just to predict the next word but to predict the next moment in time in fluid dynamics, particle physics, and so on. And of course one of the most famous libraries we’ve ever created, cuDNN, made it possible to democratize artificial intelligence as we know it. These acceleration libraries now cover so many different domains that it appears accelerated computing is used everywhere, but that’s simply because we’ve applied this architecture one domain after another until we’ve covered just about every industry. Accelerated computing, CUDA, has now reached the tipping point.

About a decade ago something very important happened, and most of you saw the same thing: AlexNet made a gigantic leap in the performance of computer vision, a very important field of artificial intelligence. AlexNet surprised the world with how big a leap it produced. We had the benefit of taking a step back and asking ourselves: What are we witnessing? Why is AlexNet so effective? How far can it scale? What else can we do with this approach called deep learning? If we were to find ways to apply deep learning to other problems, how would it affect the computer industry? And if we believed in that
future, and we were excited about what deep learning could do, how would we change every single layer of the computing stack so that we could reinvent computing altogether? Twelve years ago we decided to dedicate our entire company to pursuing this vision. Now, 12 years later, every time I’ve come to India I’ve had the benefit of talking to you about deep learning and machine learning, and I think it’s very, very clear now that the world has completely changed.

Let’s think about what happened. The first thing, of course, is how we do software. Our industry is underpinned by the method by which software is made. The way software was made, call it software 1.0, was that programmers would code algorithms, which we call functions, to run on a computer, and we would apply them to input information to predict an output. Somebody would write Python or C or Fortran or Pascal or C++ code, algorithms that run on a computer; you apply input to it and output is produced. It is the classical computing model that we understood quite well, and of course it created one of the largest industries in the world right here in India. The production of software, coding, programming, became a whole industry, all within our generation.

However, that approach to developing software has been disrupted. It is now not coding but machine learning: using a computer to study the patterns and relationships in massive amounts of observed data and essentially learn from it the function that predicts it. We are essentially designing a universal function approximator, using machines to learn the function that would produce the expected output. So we’ve gone from software 1.0, with human coding, to software 2.0, using machine learning. Notice who is writing the software: the software is now written by the computer. After you’re done training the model, you run inference on it; you apply that function to new input.
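A toy contrast between the two styles, as a sketch under simplifying assumptions: a straight line stands in for a neural network, plain gradient descent on mean squared error stands in for training, and the “observed data” is generated by the hand-written function itself. The names `handwritten_f` and `learn_linear` are hypothetical, and the learning rate and epoch count are tuned only for this small, centred data set.

```python
from typing import Callable, List, Tuple


# Software 1.0: a programmer writes the function by hand.
def handwritten_f(x: float) -> float:
    return 3.0 * x + 2.0


# Software 2.0: the computer learns the function from observed (input, output) pairs.
def learn_linear(samples: List[Tuple[float, float]],
                 lr: float = 0.05, epochs: int = 500) -> Callable[[float], float]:
    w, b = 0.0, 0.0  # parameters start with no knowledge of the task
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return lambda x: w * x + b  # the learned function, ready for inference


if __name__ == "__main__":
    # "Observed data": seven input/output pairs for x = -3 .. 3.
    observed = [(float(x), handwritten_f(float(x))) for x in range(-3, 4)]
    model = learn_linear(observed)
    # Inference on an input that was never observed during training.
    print(handwritten_f(10.0), round(model(10.0), 6))  # 32.0 32.0
```

The learned function is then applied to an input it never saw during training (x = 10), which is the inference step: the same prediction the hand-written code gives, but the parameters came from data rather than from a programmer.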
That function, whether a large language model, a deep learning model, a computer vision model, or a speech understanding model, is now a neural network that goes into the GPU and can make a prediction given new, unobserved input. This way of doing software is fundamentally based on machine learning. We have gone from coding to machine learning, from developing software to creating artificial intelligence, and from software that prefers to run on CPUs to neural networks that run best on GPUs. At its core, this is what happened to our industry in the last 10 years. We have now seen the complete reinvention of the computing stack. The whole technology stack has been reinvented: the hardware, the way software is developed, and what software can do are now fundamentally different.

We dedicated ourselves to advancing this field, and so this is what we now build. When I first came to India, we were building GPUs that fit on a PCI Express card that goes into your PC. This is what a GPU looks like today. This is Blackwell, an incredible system designed to study data at an enormous scale. Yeah, thank you. A massive system designed to study data at an enormous scale so that we can discover patterns and relationships and learn the meaning of the data. This is the great breakthrough of the last several years: we have now learned the representation, the meaning, of words and numbers, images and pixels, videos, chemicals, proteins, amino acids, fluid patterns, particle physics. We have learned the meaning of so many different types of data, and how to represent information in so many different modalities. Not only have we learned its meaning, we can translate it to another modality. One great example, of course, is translating English to Hindi; translating a large body of English text into other English, summarization; from pixels to words, image recognition; from words to pixels, image generation; from images
and videos to words, captioning; from words to proteins, used for drug discovery; from words to chemicals, discovering new compounds; from amino acids to proteins, understanding the structure of proteins. This fundamental idea, essentially a universal translator of information from any modality to any other, has led to a Cambrian explosion in the number of startups around the world. They’re applying the basic method I just described: if I can do this and that, what else can I do? The number of applications has clearly exploded in the last two or three years. There are tens of thousands of generative AI companies around the world, and tens of billions of dollars have been invested in this field, all because of this one instrument that made it possible for us to study data at enormous scale.

I just want to say that building the Blackwell system of course involves the Blackwell GPU, but it takes seven other chips as well. TSMC manufactures all of these chips, and they’re doing an extraordinary job ramping the Blackwell system. Blackwell is in full production, and we’re expecting to deliver in volume in Q4. So this is basically Blackwell.

Now, this is one of the things that’s really incredible about the system; let me show it to you. Nothing’s easy this morning. This is NVLink. It goes across the entire spine of a rack of GPUs, and the GPUs are all connected from top to bottom using NVLink, driving these incredible SerDes, the world’s longest-driving SerDes over copper. It connects 72 dual-GPU Blackwell packages, 144 GPUs, together so that they act as one giant GPU. If I were to spread out all of the chips to show you what this connects together, it’s essentially a GPU so large it would be like this big. It’s obviously impossible to build a GPU that large, so we break it up into the smallest chunks we can, which is at reticle limits
and the most advanced technologies, and we connect them together using NVLink. This is the NVLink spine; you’re looking at all of the GPUs being connected. That’s the Quantum switch that connects all of these GPUs together on top, or Spectrum-X if you would like to have Ethernet. And what connects this together? This is about 50 lbs; I’m just demonstrating how strong I am. This is connected to this switch, one of the most advanced switches the world has ever built. All of this together represents Blackwell.

Then it runs the software on top: the CUDA software, cuDNN, Megatron for training large language models, TensorRT for inference, and TensorRT-LLM for distributed multi-GPU inference of large language models. On top of that we have two software stacks: one is NVIDIA AI Enterprise, and the other is Omniverse. I’ll talk about both of those in a second. This job is surprisingly rigorous. So this is the Blackwell system; this is what Nvidia builds today. For those of you who have known us for a very long time, it’s really quite surprising how the company has transformed, but we literally reasoned from first principles about how computing was going to be done in the future, and this is Blackwell.

The Blackwell system is extraordinary. Of course the computation is incredible: each rack weighs 3,000 lb and draws 120 kilowatts, 120,000 watts in each rack, the highest computing density the world has ever known. What we’re trying to do is learn larger and smarter models. It’s called the scaling law, and it comes from the empirical observation and measurements suggesting that the more information you want to learn from, the larger the model has to be, and the larger the model you would like to train, the more data you need.
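The scaling-law arithmetic can be checked in a few lines. This sketch assumes, as a common rule of thumb in the scaling-law literature does, that training compute grows roughly in proportion to model size times the amount of training data, and that both double each year; the function names are hypothetical. It compares that pace with the older Moore’s-law pace of 2x every 18 months.

```python
def compute_growth(years: int, data_growth: float = 2.0, model_growth: float = 2.0) -> float:
    """Growth in training compute, assuming compute is proportional to data x model size."""
    yearly = data_growth * model_growth  # 2x data and 2x parameters -> 4x compute per year
    return yearly ** years


def moores_law_growth(years: float, doubling_period_years: float = 1.5) -> float:
    """Classic Moore's-law pace: performance doubles every doubling_period_years."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    print(compute_growth(1))               # 4.0
    print(compute_growth(10))              # 1048576.0
    print(round(moores_law_growth(5)))     # 10
    print(round(moores_law_growth(10)))    # 102
```

Doubling both data and model size every year gives 4x compute per year, about a million-fold over a decade, versus roughly 10x per 5 years and 100x per decade at the Moore’s-law pace.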
Each year we’re increasing the amount of data and the model size by about a factor of two each, which means that every single year the computation, the product of the two, has to increase by a factor of four. Remember, there was a time when Moore’s law was two times every year and a half: 10 times every 5 years, 100 times every 10 years. We are now moving technology at a rate of four times every year. Four times every year over the course of 10 years is incredible scaling, and we continue to find that AI keeps getting smarter as we scale up training.

The second thing we’ve discovered recently, and this is a very big deal, happens after you’re done training the model. All of you have used ChatGPT. When you use ChatGPT, it’s one-shot: you give it a prompt. Instead of writing a program to communicate with a computer, today you write a prompt; you just talk to the computer the way you talk to a person. You describe the context and what it is you’re asking about. You could ask it to write a program for you, or a recipe, whatever question you have, and the AI processes it through a very large neural network and produces an answer one word after another. Going forward, starting with Strawberry, we’ve realized that intelligence is of course not just one-shot. Intelligence requires thinking, and thinking is reasoning: maybe you’re doing path planning, maybe you’re running some simulations in your mind, you’re reflecting on your own answers. As a result, thinking produces higher-quality answers, and we’ve now discovered a second scaling law, a scaling law at the time of inference: the longer you think, the higher the quality of the answer you can produce. This is not illogical; it is very, very intuitive to all of us. If you were to ask me what my favorite Indian food is, I would tell you chicken
biryani. Okay? I don’t have to think about that very much or reason about it; I just know it. There are many things you can ask like that: for example, what is Nvidia good at? Nvidia is good at building AI supercomputers; Nvidia is great at building GPUs. Those things are encoded into your knowledge. However, many things require reasoning. For example, say I had to travel from Mumbai to California, and I wanted to do it in a way that lets me enjoy four other cities along the way. Today I got here at 3:00 a.m. I came through Denmark; right before Denmark I was in Orlando, Florida, and before Orlando I was in California. That was two days ago, and I’m still trying to figure out what day it is right now, but anyway, I’m happy to be here. If I were to tell it that I would like to go from California to Mumbai within 3 days, and give it all kinds of constraints about what time I’m willing and able to leave, which hotels I like to stay at, the people I have to meet, and so on, the number of permutations is of course quite high. Planning that trip and coming up with an optimal plan is very, very complicated. That’s where thinking, reasoning, and planning come in, and the more you compute, the higher the quality of the answer you can provide.

So we now have two fundamental scaling laws driving our technology development: first for training, and now for inference. The number of foundation model makers has more than doubled since the beginning of Hopper; more companies realize that fundamental intelligence is vital to them and that they have to build foundation model technology. Second, the size of the models has increased by 20, 30, 40x. The amount of computation necessary to train these models has grown because of the size of the models, but also because of multimodality capability,
reinforcement learning capability, and synthetic data generation capability. The amount of data we use to train these models has really grown tremendously. That’s one reason. The other, of course, is that Blackwell is also used for generating tokens at incredible speeds. Together, all of these factors have made demand for Blackwell incredibly high.

Now let’s talk about how we’re going to use this technology. I thought the headline was really good: “Nvidia is AI in India.” Aside from the letter V, you could use the letters of Nvidia to create the rest of that sentence, which I thought was really cool. You don’t know this story, but in 1993 we had to come up with a name for our company. I’ll do the extremely short version: the reason I chose Nvidia in the end was that I really loved that Nvidia sounds like a mystical place. India, Nvidia: it sounded like a great place. And if computer graphics and accelerated computing didn’t work out for us, we could do almost anything. So I’m just happy it worked out.

Okay, so, Nvidia in India. We have a really rich ecosystem here. The first thing you have to realize is that to build an AI ecosystem in any industry or any country, you have to start with the infrastructure. We announced that Yotta, Tata Communications, and our other partners are joining us to build fundamental computing infrastructure here in India. By the end of this year, in just one year’s time, we will have nearly 20 times more compute here in India than just a little over a year ago. That’s the amount of infrastructure we’re building. So the first part of building an AI ecosystem is the AI infrastructure, just as the first part of the internet ecosystem was building the networking infrastructure, which of course consists of the personal computer, the cloud, and the internet itself. In the case
of AI, it starts with the AI computing infrastructure. The next part, the operating system of AI, is large language models, and we’ve worked with partners here in India to build a Hindi large language model. As you know, there are 25 different formal languages here in India, with apparently a new dialect every 1,500 kilometers, so you don’t have to go very far before you need to train another model. This is the hardest large language model region in the world. If anybody can do it, you can, and once India figures out how to create the Hindi large language model, you can figure it out for every other country. The next layer above that is the application layer. Working with us to bring AI to the ecosystem of India are, of course, AI-native companies creating new applications made possible only with AI, and our service partners, from Wipro to Infosys to TCS, working with us to take the AI models and the AI infrastructure out to the world’s enterprises. That’s Nvidia in India.

I’m going to have Vishal, our country leader, come join me on stage, because I would love for him to talk to you about some of the companies we’re working with here in India. Vishal Dhupar!

Okay, so I’m going to introduce a couple of other ideas. Earlier I told you that we have Blackwell and all of the acceleration libraries we talked about before, but on top there are two very important platforms we’re working on. One is called NVIDIA AI Enterprise and the other is called NVIDIA Omniverse, and I’ll explain each of them very quickly. First, NVIDIA AI Enterprise. Large language models and fundamental AI capabilities have now reached a level where we are able to create what are called agents: large language models that understand the data being presented to them.
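A minimal sketch of the agent pattern being introduced: a central reasoning step breaks a task into steps, routes each step to a specialist model, and a guardrail blocks skills outside the agent’s role (the talk’s example is that an accounting agent should not do marketing). Everything here is hypothetical: the keyword-based planner stands in for a reasoning LLM, the specialist functions stand in for separate AI models or services, and nothing here uses NeMo, NIM, or any Nvidia API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


# Hypothetical specialist models; in a real system each would be its own AI service.
def summarize_pdf(arg: str) -> str:
    return f"summary of {arg}"


def retrieve_facts(arg: str) -> str:
    return f"facts about {arg}"


def draft_image(arg: str) -> str:
    return f"image of {arg}"


SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "read_pdf": summarize_pdf,
    "retrieve": retrieve_facts,
    "image": draft_image,
}


@dataclass
class Agent:
    role: str
    allowed_skills: List[str]  # guardrail: the skills this agent may use
    log: List[str] = field(default_factory=list)

    def plan(self, task: str) -> List[Tuple[str, str]]:
        """Break a task into (skill, argument) steps.

        Keyword matching stands in for the central reasoning model.
        """
        steps: List[Tuple[str, str]] = []
        if "report" in task:
            steps.append(("read_pdf", "quarterly report"))
            steps.append(("retrieve", "prior quarter"))
        if "poster" in task:
            steps.append(("image", "product launch poster"))
        return steps

    def run(self, task: str) -> List[str]:
        """Plan the task, then route each allowed step to its specialist."""
        results: List[str] = []
        for skill, arg in self.plan(task):
            if skill not in self.allowed_skills:
                self.log.append(f"blocked {skill}: outside {self.role} role")
                continue
            results.append(SPECIALISTS[skill](arg))
        return results


if __name__ == "__main__":
    accounting = Agent("accounting", ["read_pdf", "retrieve"])
    print(accounting.run("summarize the report and make a poster"))
    print(accounting.log)
```

Here the accounting agent completes the report steps but the poster step is blocked and logged, which mirrors the onboarding-style guardrails described in the talk.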
That data could be streaming data, video data, language data; it could be data of all kinds. The first stage is perception. The second is reasoning: given its observations, what is the mission, and what task does it have to perform? To perform that task, the agent breaks it down into steps, other tasks, reasons about what each would take, and connects with other AI models. Some of them are good at, for example, understanding PDFs; maybe one is a model that knows how to generate images; maybe another is able to retrieve information, AI semantic data, from a proprietary database. Each of these large language models is connected to the central reasoning large language model, which we call the agent. These agents can perform all kinds of tasks. Some may be marketing agents, some customer service agents, some chip design agents; Nvidia has chip design agents all over our company helping us design chips. Maybe they’re software engineering agents, or they run marketing campaigns or supply chain management. We’re going to have agents helping our employees become super employees. These agents, or agentic AI models, augment all of our employees, supercharging them and making them more productive.

When you think about it, the way you would bring these agents into your company is not unlike the way you would onboard a new employee. You have to give them a training curriculum. You have to fine-tune them, teaching them how to perform the skills and understand the vocabulary of your company. You evaluate them, so there are evaluation systems. And you might guardrail them: if you’re an accounting agent, don’t do marketing; if you’re a marketing agent, don’t report earnings at the end of the quarter; and so on. And so each one of
these agents is guardrailed. We’ve put that entire process into essentially an agent life cycle suite of libraries, and we call it NeMo. Our partners are working with us to integrate these libraries into their platforms so that they can enable agents to be created, onboarded, deployed, and improved over a life cycle. That’s what we call NVIDIA NeMo. On the one hand we have the libraries; on the other, what comes out is an API inference microservice we call NIMs. Essentially this is a factory that builds AIs, and NeMo is a suite of libraries that onboards the AIs and helps you operate them. Ultimately your goal is to create a whole bunch of agents. We have partners here in India that we’re working with. Vishal, if you could tell everybody about our ecosystem here?

Absolutely, Jensen. You know, the word that struck me as I was standing backstage was “mystique.” This is the mystique of India. Jensen was here exactly 12 months ago, and he asked me a pretty profound question: the rich tapestry of India, how are you going to encode it? It all began with the infrastructure. As mentioned, in just 12 months we now have computing from Yotta, which has built state-of-the-art infrastructure; Tata is going live; and E2E has been providing accelerated computing infrastructure for a long, long time. All this computing has helped us leapfrog to solve one of India’s largest problems, which is communication. Like Jensen said, we speak so many languages. He did say 1,500 kilometers, but all of you know that every 50 kilometers our dialect changes. We don’t only speak English; we speak English, and if you are from the South there’ll be a little bit of Malayalam added into it. How do we make this really work? That is the work of some of our partners. Sarvam is a classic example. Sarvam started their efforts to help India talk. They decided to do voice-to-voice, and while doing voice-to-voice they had to
understand how the language works, which is multimodal, and how to make sure it performs. They came into existence pretty quickly because infrastructure was available to us. Similarly, we saw projects come from BharatGPT, work done predominantly in academia. Academia in India has been rich with ideas, and every time they wanted to translate an idea into reality they needed infrastructure. Today the work we are doing at the IITs and at different organizations is all a result of coming together to solve the critical issues that India has. Not only was language getting solved; we also realized very quickly that India has many mega challenges. No one loves India more than Vishal, and a well-spoken Indian and a healthy Indian always make a difference. That’s why we have companies working on health. As many of us know, looking after our health has been challenging, but diagnostics, coming from SigTuple and Qure.ai,
is really helping us solve many of these challenges. So with that promise, Jensen...

That’s awesome. Healthy, yeah, and well-spoken. Healthy and well-spoken. The important thing here is that it takes an entire ecosystem of partners to help the world apply AI so that their employees can be more productive. Whereas India’s IT industry was focused on the back-office operations of software, on producing and delivering software, the next generation of IT is going to be about producing and delivering AI. As you know, delivering software (coding) and delivering AI are fundamentally different, but AI is dramatically more impactful and insanely more exciting. This industry, India, has the ability to help every single company around the world enjoy the benefits of agents and of AIs across all of their functions, and to deploy them at scale; I don’t know anybody else who could do it. This is just an extraordinary opportunity. Our job is to help you build AI and deploy AI; your job is to take these libraries and capabilities and combine them with your incredible IT and software capabilities so that we can create agents and help every single company benefit from them.

That’s the first part. The second part is this: what happens after agents? Remember, every single company has employees, but for most companies the goal is to build something, to produce something, to make something. The things people make could be factories, warehouses, cars, planes, trains, ships, all kinds of things: computers and servers, like the servers Nvidia builds, or phones. Most companies in the largest industries ultimately produce something; sometimes the product is a service, as in the IT industry, but many of your customers are about producing something. That next generation of AI needs to understand the physical world. We call it
physical AI. To create physical AI we need three computers, and we created three computers to do so. First, the DGX computer for training the model; Blackwell, for example, is a reference design, an architecture for creating things like DGX computers. That model then needs a place to be refined, a place to learn, a place to apply its physical capability, its robotics capability. We call that Omniverse: a virtual world that obeys the laws of physics, where robots can learn to be robots. When you’re done training, the AI model can run in the actual robotic system. That robotic system could be a car, a robot, an AV, an autonomous mobile robot, a picking arm, or an entire factory or warehouse that’s robotic, and that computer we call AGX, Jetson AGX. So: DGX for training, Omniverse for the digital twin, and AGX in the robot. Here in India we’ve got a really great ecosystem working with us to take this infrastructure and this ecosystem of capabilities and help the world build physical AI systems.

And you know what I’ve really loved? Addverb is one of the largest robotics companies. They build robots and, more importantly, they put them in a digital twin where optimization takes place, teaching the robots all the inputs that come out of the physical world. Not only is that work taking place; our system integrators, Accenture, TCS, and Tech Mahindra, are taking that knowledge not only into India but outside India. So: do it in India for India, and from India for the globe. Start locally, grow globally.

Right, that’s fantastic. Okay, thank you, thank you very much, Vishal. Thank you. We made a short video to help you put together everything I just said. Run it, please.

For 60 years, software 1.0, code written by programmers, ran on general-purpose CPUs. Then software 2.0 arrived: machine learning, neural networks running on GPUs. This led to the Big Bang of generative AI
models that learn and generate anything. Today, generative AI is revolutionizing $100 trillion in industries. Knowledge enterprises use agentic AI to automate digital work. (“Hello, I’m James, a digital human.”) Industrial enterprises use physical AI to automate physical work. Physical AI embodies robots like self-driving cars that safely navigate the real world, manipulators that perform complex industrial tasks, and humanoid robots that work collaboratively alongside us. Plants and factories will be embodied by physical AI capable of monitoring and adjusting their operations or speaking to us.

Nvidia builds three computers to enable developers to create physical AI. The models are first trained on DGX. Then the AI is fine-tuned and tested using reinforcement learning with physics feedback in Omniverse, and the trained AI runs on Nvidia Jetson AGX robotics computers. Nvidia Omniverse is a physics-based operating system for physical AI simulation. Robots learn and fine-tune their skills in Isaac Lab, a robot gym built on Omniverse.

This is just one robot. Future factories will orchestrate teams of robots and monitor entire operations through thousands of sensors. For factory digital twins, they use an Omniverse blueprint called Mega. With Mega, the factory digital twin is populated with virtual robots and their AI models, the robots’ brains. The robots execute a task by perceiving their environment, reasoning, planning their next motion, and finally converting it to actions. These actions are simulated in the environment by the world simulator in Omniverse, and the results are perceived by the robot brains through Omniverse sensor simulation. Based on the sensor simulations, the robot brains decide the next action, and the loop continues while Mega precisely tracks the state and position of everything in the factory digital twin. This software-in-the-loop testing brings software-defined processes to physical spaces and embodiments, letting industrial enterprises simulate and validate changes in an Omniverse
digital twin before deploying to the physical world, saving massive risk and cost. The era of physical AI is here, transforming the world’s heavy industries and robotics.
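The perceive, plan, act, simulate loop that the video describes can be sketched in plain Python. This is a toy under stated assumptions: a one-dimensional world, a speed-capped move-toward-target rule standing in for the robot brain, simple position updates standing in for the physics simulator, and a list standing in for digital-twin state tracking. All names are hypothetical and none of them correspond to Omniverse, Isaac Lab, or Mega APIs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class WorldState:
    robot_x: float   # robot position along a 1-D track (metres)
    target_x: float  # where the task needs the robot to be


@dataclass
class TwinTracker:
    """Toy stand-in for digital-twin state tracking: records every position."""
    history: List[float] = field(default_factory=list)


def simulate_sensor(state: WorldState) -> float:
    # Stand-in for sensor simulation: the robot observes only its distance to the target.
    return state.target_x - state.robot_x


def robot_brain(observed_distance: float, max_step: float = 0.5) -> float:
    # Reason/plan: head toward the target; act: clip the motion to a speed limit.
    return max(-max_step, min(max_step, observed_distance))


def world_simulator(state: WorldState, action: float) -> WorldState:
    # Stand-in for the physics simulator: apply the commanded displacement.
    return WorldState(state.robot_x + action, state.target_x)


def run_loop(state: WorldState, twin: TwinTracker,
             max_steps: int = 100, tol: float = 1e-9) -> WorldState:
    """Perceive -> decide -> simulate, repeated until the task is done or steps run out."""
    for _ in range(max_steps):
        twin.history.append(state.robot_x)
        distance = simulate_sensor(state)
        if abs(distance) < tol:
            break
        state = world_simulator(state, robot_brain(distance))
    return state


if __name__ == "__main__":
    twin = TwinTracker()
    final = run_loop(WorldState(robot_x=0.0, target_x=2.0), twin)
    print(final.robot_x, twin.history)  # 2.0 [0.0, 0.5, 1.0, 1.5, 2.0]
```

The point of the structure is that the brain never touches the true state directly: it only sees simulated sensor output, and every action goes through the simulator, which is what makes validating changes before deployment possible.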