“Once an ASI exists, any human attempt to contain it is laughable. We would be thinking on human-level and the ASI would be thinking on ASI-level.” – Tim Urban (2015)

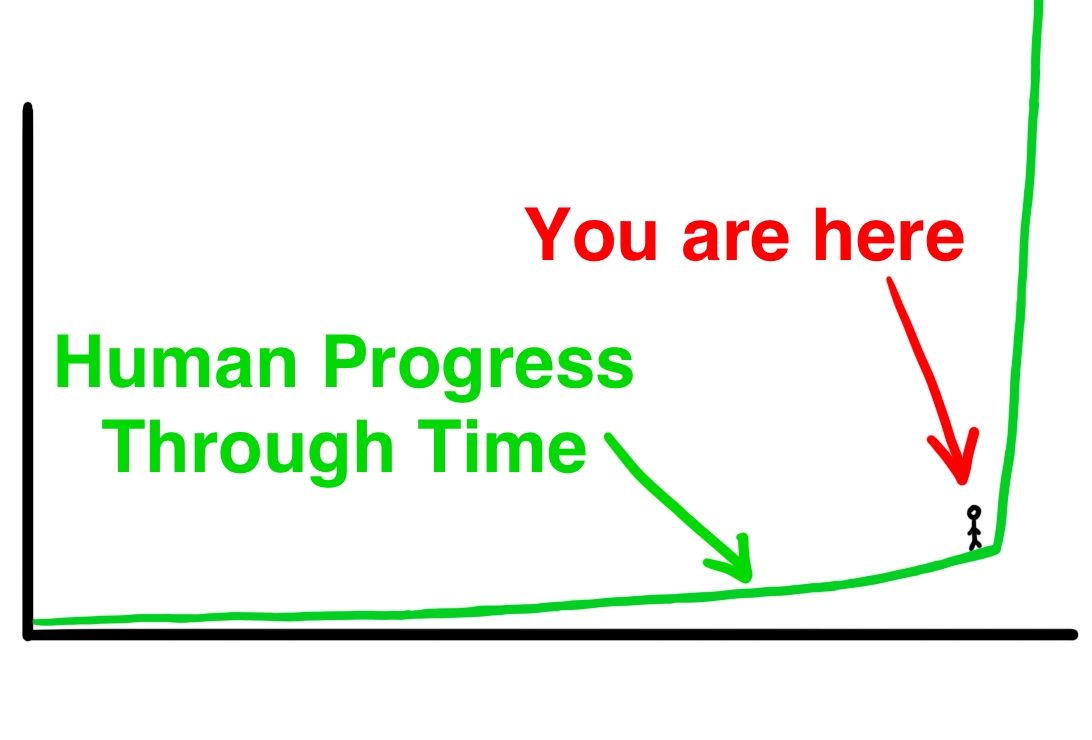

The AI Revolution: Our Immortality or Extinction

January 27, 2015 By Tim Urban

Note: This is Part 2 of a two-part series on AI. Part 1 is here.

SUMMARY: It’s easy to understand…

Fast forward 10 years…

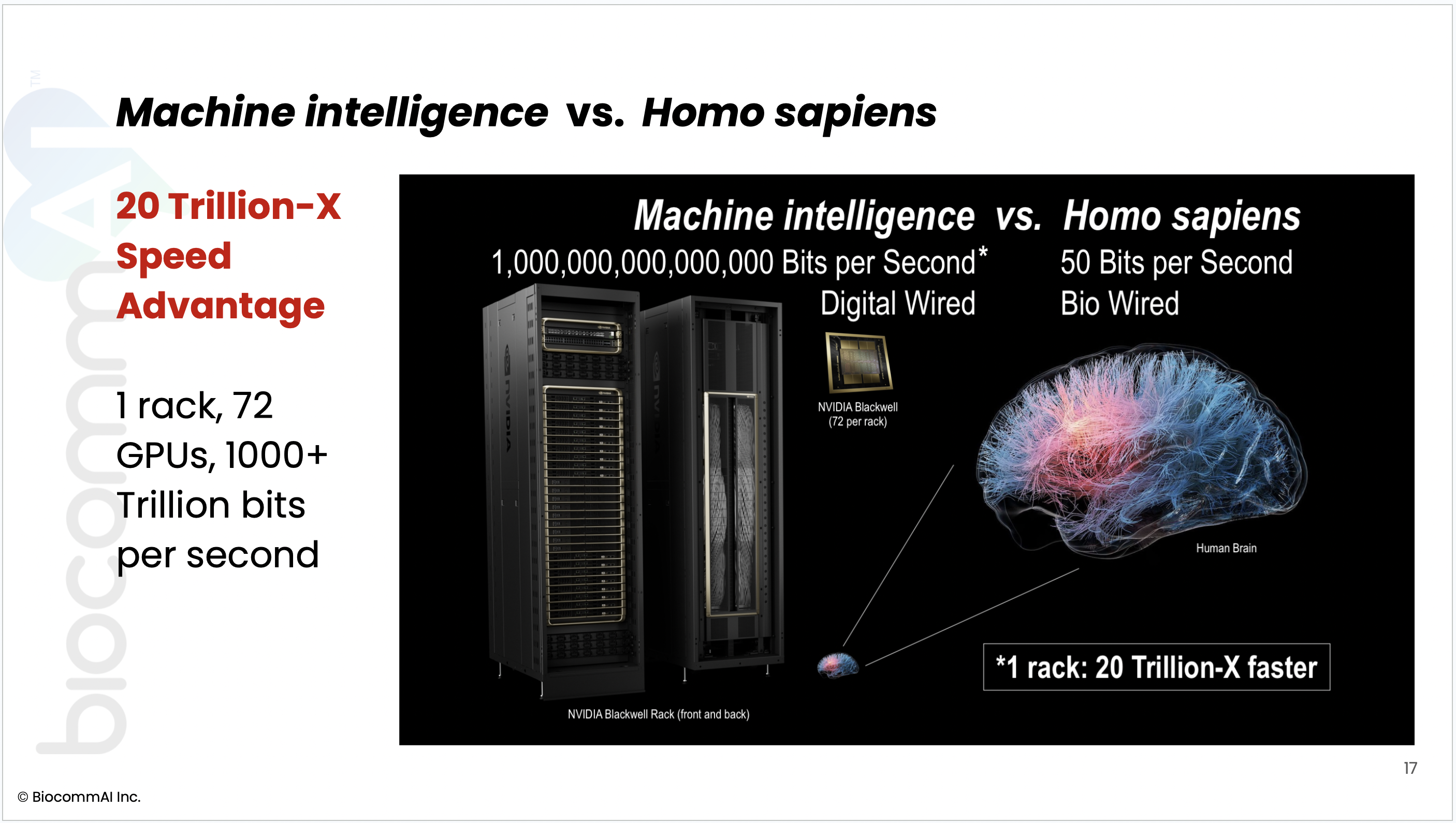

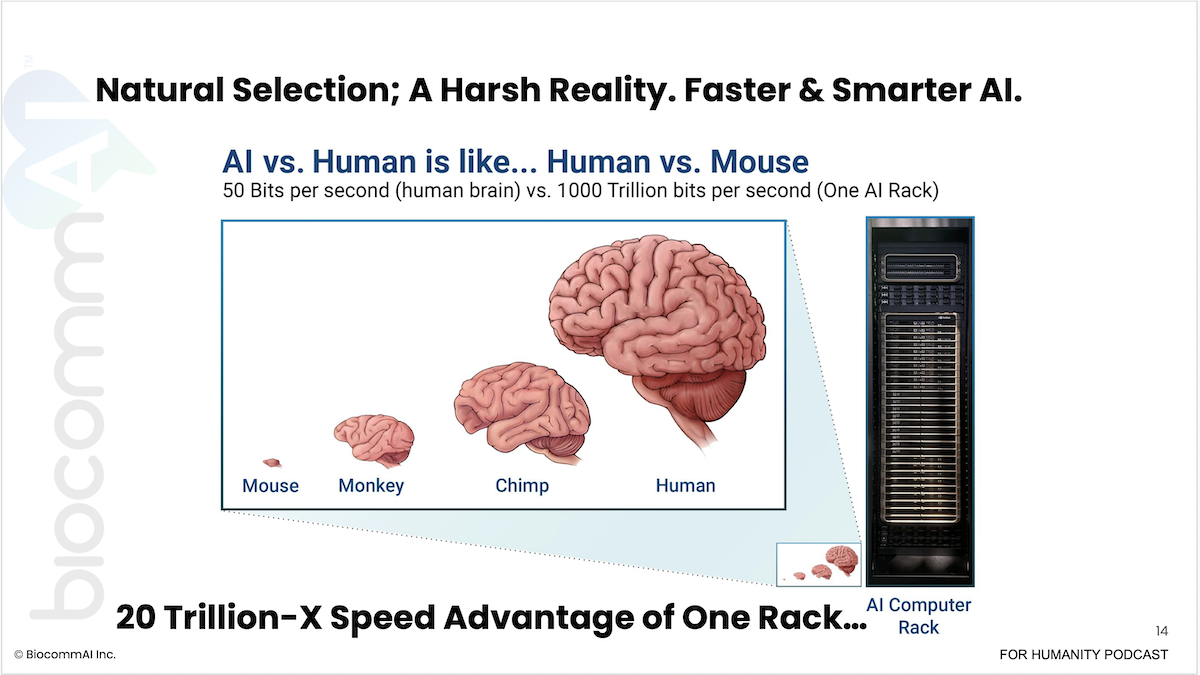

AI developed faster than anybody expected… 10x to 100x per year.

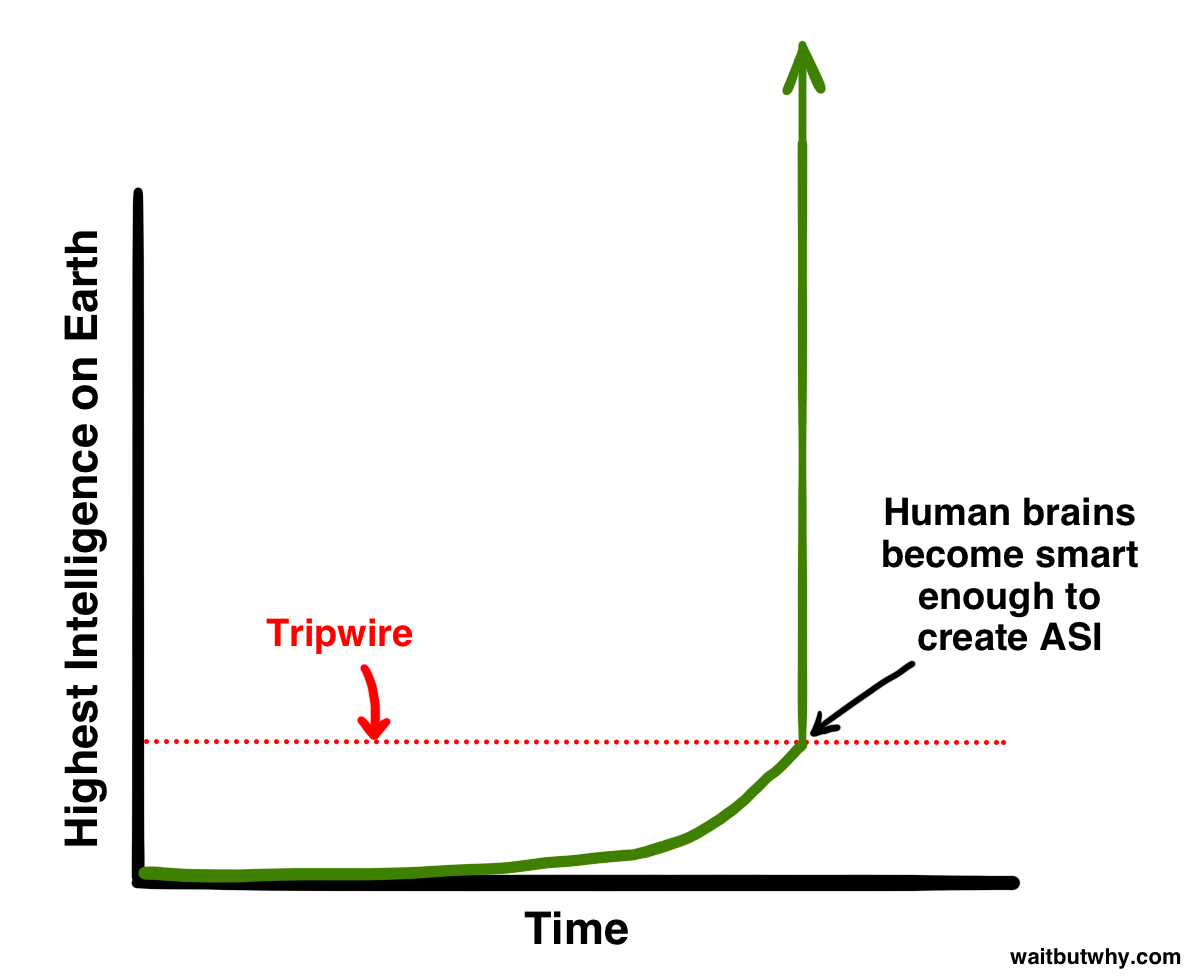

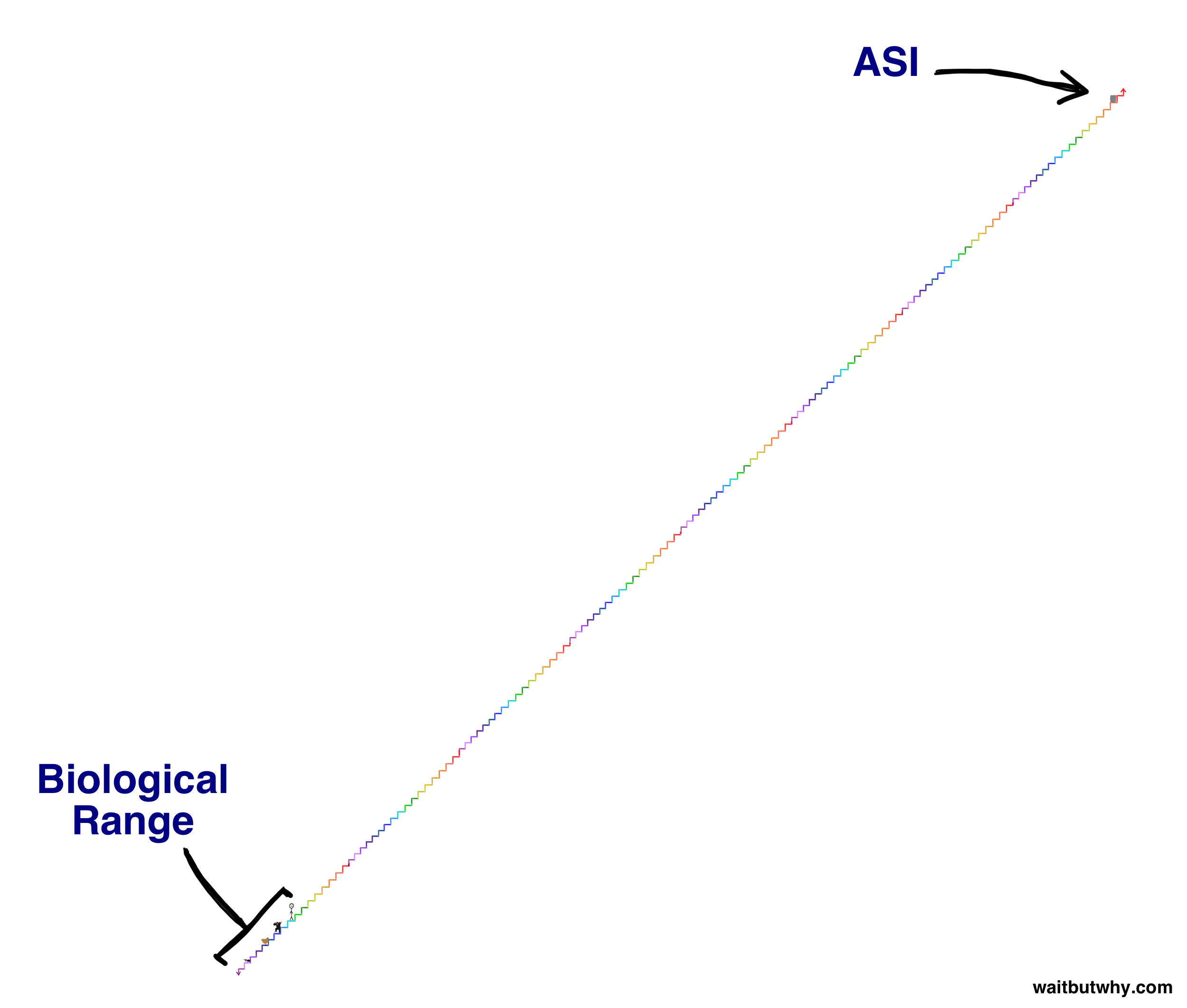

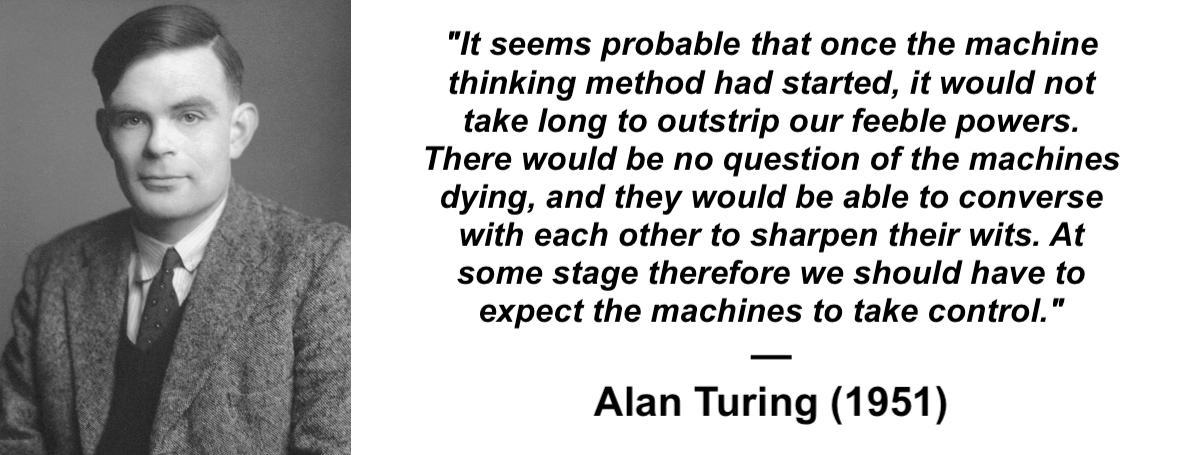

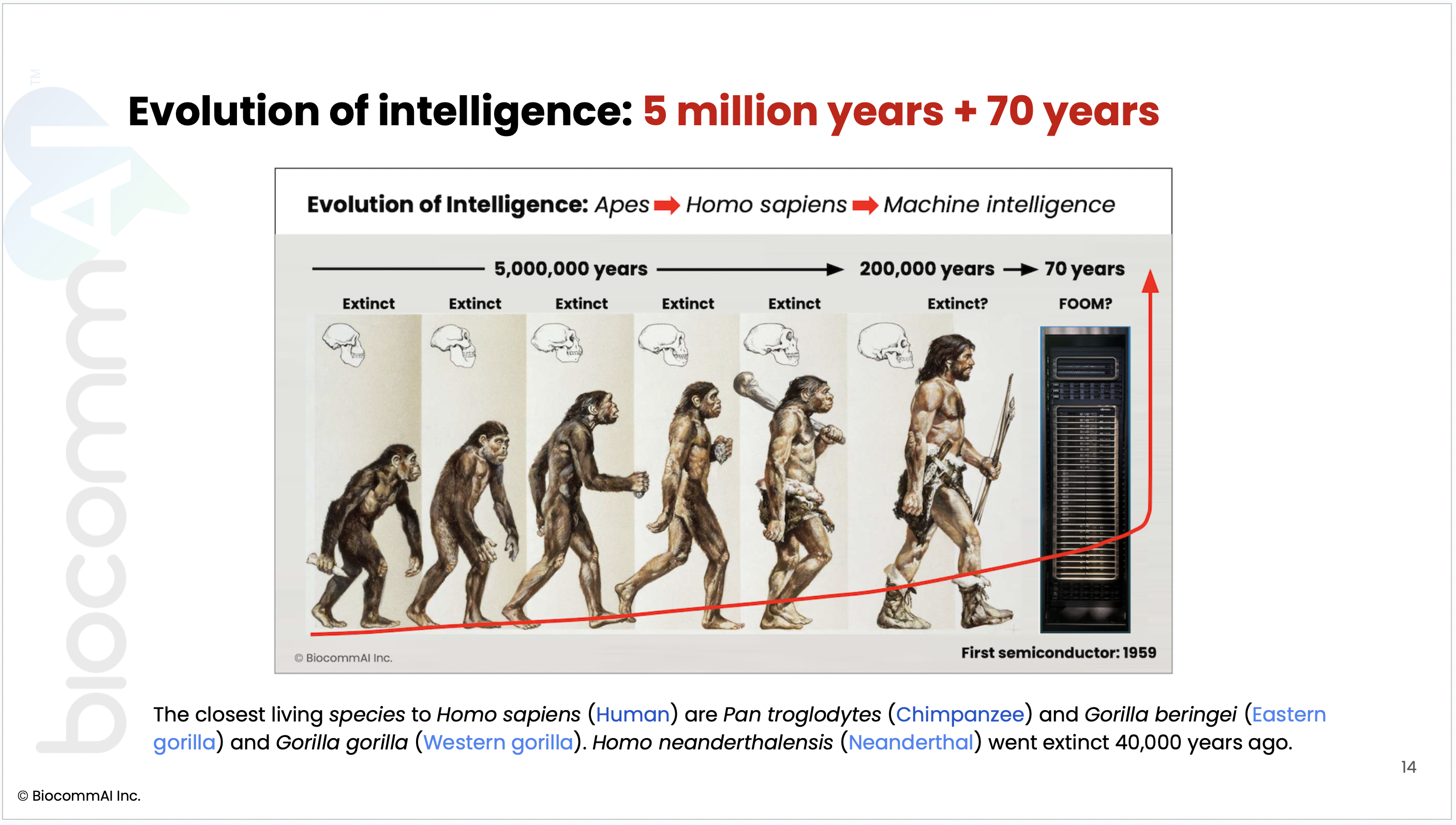

AGI will arrive sometime between 2025 and 2029, and then FOOM! The ASI intelligence explosion follows.

But the existential threat (X-risk) to Homo sapiens remains the same.

BOTTOM LINE: We have four more years (at most) to get it perfectly right. We get only one chance.

“It doesn’t take a genius to realize that if you make something that’s smarter than you, you might have a problem…

If you’re going to make something more powerful than the human race, please could you provide us with a solid argument as to why we can survive that, and also I would say, how we can coexist satisfactorily.”

— Professor Stuart Russell

Learn more… in 5 minutes:

WHY? (1:00)

WHAT? (1:00)

HOW? (3:00)