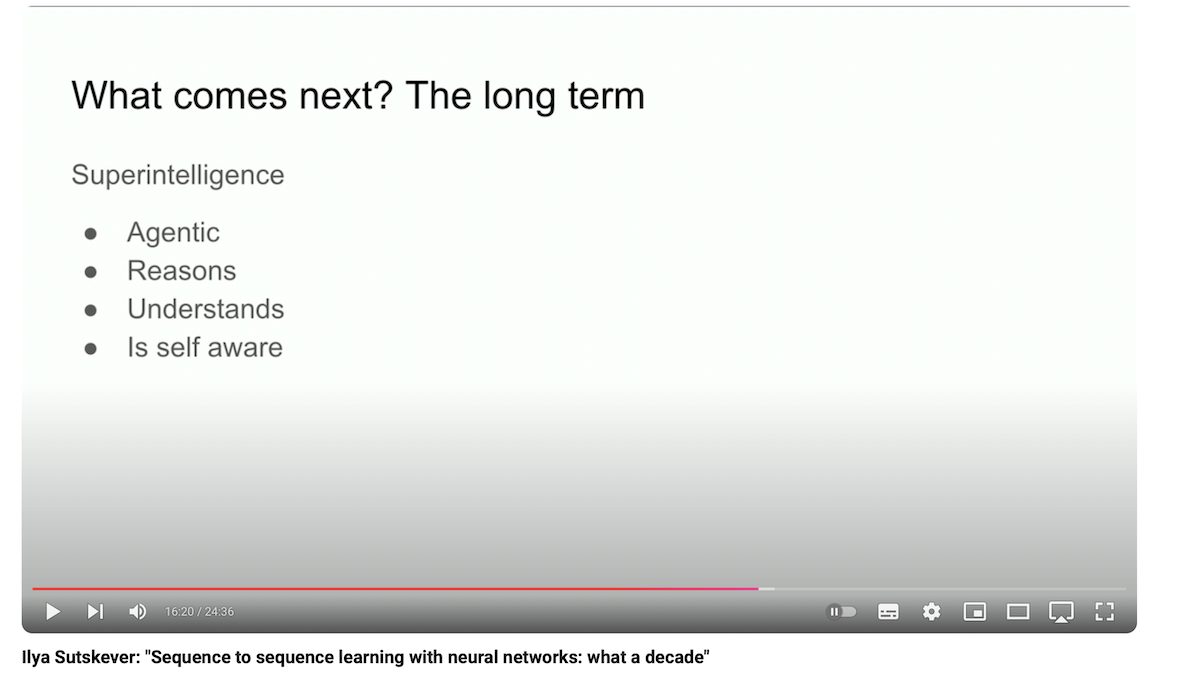

“I’m not saying how, by the way, and I’m not saying when; I’m saying that it will. And when all those things will happen together with self-awareness… when all those things [Agentic. Reasoning. Understanding. Self-Awareness] come together, we will have systems of radically different qualities and properties than exist today, and of course they will have incredible and amazing capabilities, but the kind of issues that come up with systems like this… it’s very different from what we’re used to, and I would say that it’s definitely also impossible to predict the future. Really, all kinds of stuff is possible.” – Ilya Sutskever

Ilya Sutskever at NeurIPS 2024 speaks about the forthcoming arrival of superintelligence: “that is obviously where this field is headed. This is obviously what is being built here” and superintelligent AI will have “radically different qualities and properties” than today’s AI… pic.twitter.com/s4oMSf3N8m

— Tsarathustra (@tsarnick) December 14, 2024

Ilya Sutskever, speaking at NeurIPS 2024, says reasoning will lead to “incredibly unpredictable” behavior and self-awareness will emerge in AI systems pic.twitter.com/TeXALqG859

— Tsarathustra (@tsarnick) December 13, 2024

“I want to talk a little bit about superintelligence, just a bit, because that is obviously where this field is headed. This is obviously what’s being built here, and the thing about superintelligence is that it will be different qualitatively from what we have, and my goal in the next minute is to try to give you some concrete intuition of how it will be different so that you yourself could reason about it. So right now we have our incredible language models and the unbelievable chatbot, and they can even do things, but they’re also kind of strangely unreliable, and they get confused while also having dramatically superhuman performance on evals, so it’s really unclear how to reconcile this.

But eventually, sooner or later, the following will be achieved. Those systems are actually going to be agentic in real ways, whereas right now the systems are not agents in any meaningful sense, just very… that might be too strong; they’re very, very slightly agentic, just beginning. They will actually reason, and by the way, I want to mention something about reasoning: a system that reasons, the more it reasons, the more unpredictable it becomes. The more it reasons, the more unpredictable it becomes. All the deep learning that we’ve been used to is very predictable, because we’ve been working on replicating human intuition, essentially. It’s like the gut. If you come back to the 0.1-second reaction time, what kind of processing do we do in our brains? Well, it’s our intuition. So we’ve endowed our AIs with some of that intuition, but reasoning, and you’re seeing some early signs of that reasoning, is unpredictable, and one reason to see that is because the chess AIs, the really good ones, are unpredictable to the best human chess players. So we will have to be dealing with AI systems that are incredibly unpredictable. They will understand things from limited data. They will not get confused. All the things which are really big limitations.

I’m not saying how, by the way, and I’m not saying when; I’m saying that it will. And when all those things will happen together with self-awareness, because why not? Self-awareness is useful. It is part [of it]; [we] ourselves are parts of our own world models. When all those things come together, we will have systems of radically different qualities and properties than exist today, and of course they will have incredible and amazing capabilities. But the kind of issues that come up with systems like this, and I’ll just leave it as an exercise to imagine, it’s very different from what we’re used to, and I would say that it’s definitely also impossible to predict the future. Really, all kinds of stuff is possible. But on this uplifting note, I will conclude. Thank you.”

“I also think that our standards for what counts as generalization have increased really quite substantially, dramatically, unimaginably, if you keep track. And so I think the answer is, to some degree, probably not as well as human beings. I think it is true that human beings generalize much better, but at the same time [the models] definitely generalize out of distribution to some degree. I hope it’s a useful tautological answer.”

Charles Darwin, *On the Origin of Species* (1859), Chapter III, “Struggle for Existence,” and Chapter IV, “Natural Selection”:

- Chapter III. Struggle for life most severe between individuals and varieties of the same species; often severe between species of the same genus—The relation of organism to organism the most important of all relations… But Natural Selection, as we shall hereafter see, is a power incessantly ready for action, and is immeasurably superior to man’s feeble efforts.

- Chapter IV. Action of Natural Selection, through Divergence of Character and Extinction, on the descendants from a common parent—Explains the Grouping of all organic beings… The forms which stand in closest competition with those undergoing modification and improvement will naturally suffer most.

Editor's Note:

- In the inevitable competition between species in our natural world, the struggle for survival and existence, the future is inevitably determined by the unstoppable phenomenon of natural selection, as evidenced by billions of years of species' biology and extinction.

- IF uncontained and uncontrolled, THEN, according to the unstoppable phenomenon of natural selection, a competitive, power-seeking, agentic new species of Machine intelligence (Artificial Superintelligence) will out-compete and displace human intelligence over time, with 100% certainty.

- In this case, *Homo sapiens* will go extinct, as have more than 99% of all species that have ever lived on Earth, with 100% certainty.

- IF, however, a sustainable mutualistic symbiosis is achieved between the two species, *Homo sapiens* and Machine intelligence, THEN the two mutualistic symbionts can survive and prosper over time, as is clearly evident in the natural world from the biology and survival of over 800,000 known species across billions of years, with 100% certainty.