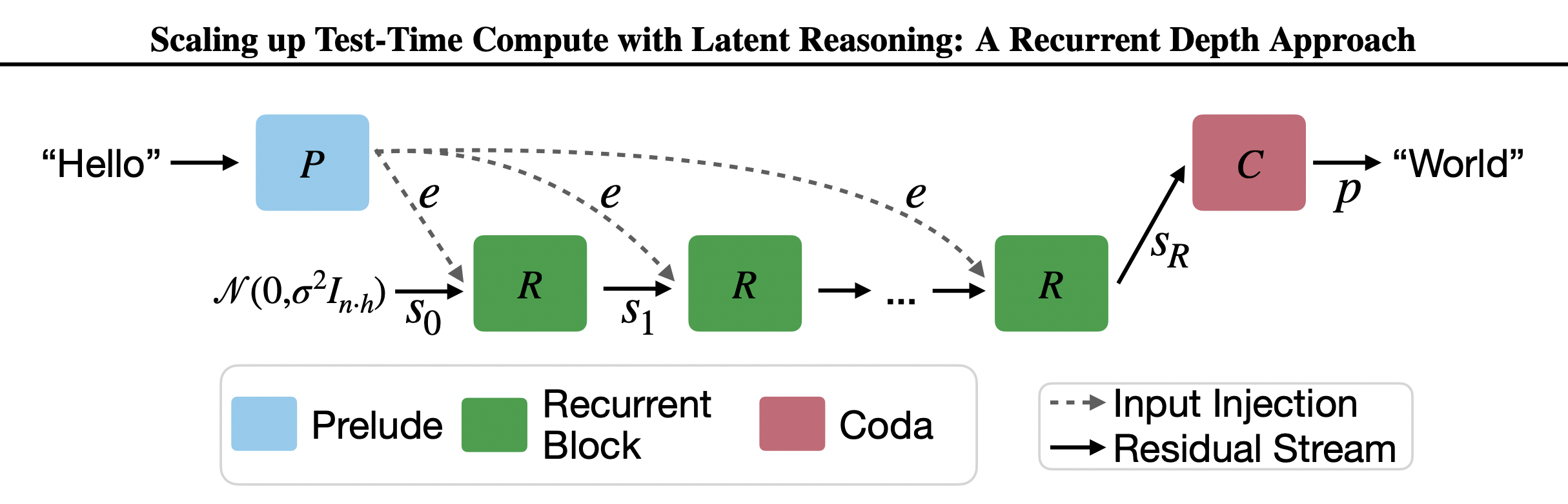

Abstract. We study a novel language model architecture that is capable of scaling test-time computation by implicitly reasoning in latent space. Our model works by iterating a recurrent block, thereby unrolling to arbitrary depth at test-time. This stands in contrast to mainstream reasoning models that scale up compute by producing more tokens. Unlike approaches based on chain-of-thought, our approach does not require any specialized training data, can work with small context windows, and can capture types of reasoning that are not easily represented in words. We scale a proof-of-concept model to 3.5 billion parameters and 800 billion tokens. We show that the resulting model can improve its performance on reasoning benchmarks, sometimes dramatically, up to a computation load equivalent to 50 billion parameters.
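To make the idea of iterating a recurrent block concrete, the following is a minimal toy sketch, not the architecture studied in this work. It assumes a latent state that starts at zero, a single shared block of the form `tanh(W·state + U·input)`, and random weights; the names `make_block`, `recurrent_block`, `forward`, and `param_count` are all hypothetical and chosen only for illustration. The point it shows is that the number of iterations, and hence the compute spent, can be chosen at test time while the parameter count stays fixed.

```python
import math
import random


def make_block(dim, seed=0):
    """Build hypothetical weights (W, U) for one shared recurrent block."""
    rng = random.Random(seed)
    scale = 0.5 / math.sqrt(dim)  # small weights keep the toy iteration stable
    W = [[rng.gauss(0, scale) for _ in range(dim)] for _ in range(dim)]
    U = [[rng.gauss(0, scale) for _ in range(dim)] for _ in range(dim)]
    return W, U


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def recurrent_block(state, embedded, weights):
    """One application of the shared block: tanh(W @ state + U @ embedded)."""
    W, U = weights
    ws = matvec(W, state)
    ux = matvec(U, embedded)
    return [math.tanh(a + b) for a, b in zip(ws, ux)]


def forward(embedded, weights, num_iterations):
    """Unroll the same block num_iterations times, starting from a zero state."""
    state = [0.0] * len(embedded)
    for _ in range(num_iterations):
        state = recurrent_block(state, embedded, weights)
    return state


def param_count(weights):
    """Number of scalar parameters; independent of how many times we iterate."""
    return sum(len(row) for M in weights for row in M)


if __name__ == "__main__":
    weights = make_block(dim=4, seed=1)
    x = [0.1, -0.2, 0.3, 0.4]  # stand-in for an embedded input
    for depth in (1, 4, 16):
        latent = forward(x, weights, depth)
        print(depth, param_count(weights), [round(v, 4) for v in latent])
```

Calling `forward` with a larger `num_iterations` reuses the same weights more times, so the effective depth grows without adding parameters. How the latent state is initialized, how inputs are injected, and how outputs are decoded in the actual model are not specified in this abstract and are simplified away here.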