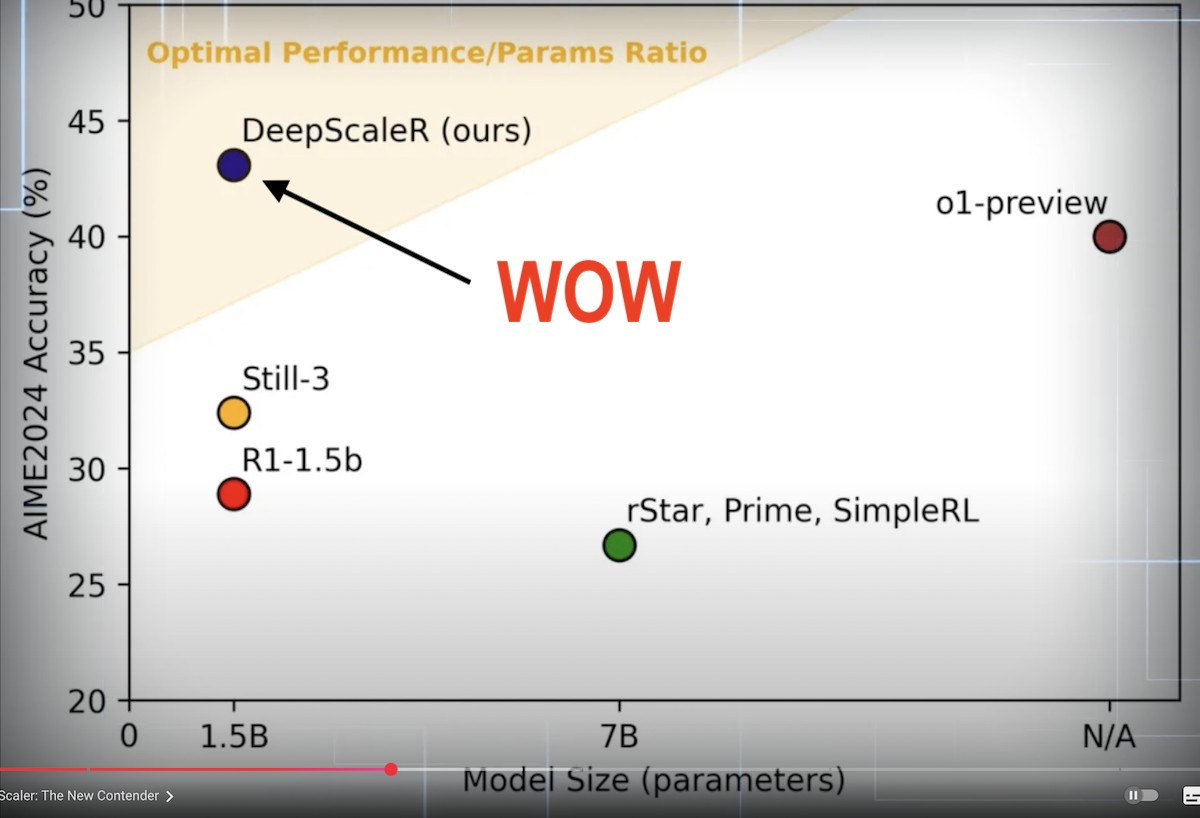

Another team out of Berkeley just released a 1.5 billion parameter model, using the DeepSeek method, that beats OpenAI’s o1-preview at math. We are in the era of tiny models: using reinforcement learning with verifiable rewards to get models that are narrowly trained and better than their general-knowledge counterparts.

Just a couple of weeks ago I made a video about a team out of Berkeley that spent $30 to basically recreate the DeepSeek method of using reinforcement learning with verifiable rewards, and they were able to train it to become really good at a numbers game. Now we have a much broader version of this application. This model, which at 1.5 billion parameters is small enough to fit on your phone and run really well, is better at general math, the broad math category, than the o1-preview model.

This video is brought to you by Relume, the only AI website builder built for design professionals and React developers. It effortlessly creates sitemaps and wireframes in minutes using AI, fully exportable to React and powered by Tailwind CSS. Try Relume for 7 days. I’ll drop all the information down below.

So check this out: DeepScaleR. This is what it is. DeepScaleR-1.5B-Preview is a language model fine-tuned from DeepSeek-R1-Distilled-Qwen-1.5B using distributed reinforcement learning. So that’s really interesting: "distributed", which I believe means they didn’t just use one single cluster, they actually used clusters all over the world, potentially, to scale up to long context lengths.

All right, so at the bottom we have o1-preview, and right here we have DeepScaleR. Remember, o1-preview is a massive, massive model; DeepScaleR is 1.5 billion parameters. Here’s the AIME 2024 score: 43.1, best in class, compared to o1-preview, which is at 40. MATH 500, same thing, and basically across the board, except for this one benchmark, in which Qwen 2.5 7B won over DeepScaleR.

So here’s the important chart. This is the model size along the x-axis on the bottom. At 1.5 billion parameters we can see DeepScaleR right there in purple. All the way on the
other side is o1-preview. We don’t know the size, but it’s large. Then we have the accuracy on the y-axis, and clearly, in purple, DeepScaleR wins. Really impressive. I’m telling you, we are in the era of tiny models. So they continue to prove that reinforcement learning, even on small models, can reap enormous rewards.

Now, interestingly, the reward function they used is an outcome reward model, as opposed to a process reward model. We’ve talked about that on this channel, but let me give you a quick reminder. An outcome reward model means that the model is rewarded for getting the whole thing right, and not rewarded if it gets the whole thing wrong. If it gets certain steps right and then at the very end gets it wrong, it’s still wrong.

Now, in my opinion, the better approach is a process reward model, in which you can actually reward the AI step by step. So if it gets the first five steps right but gets the final answer wrong, you could say, "Hey, you got these five steps right, but you got the final answer wrong." And that’s what we’re seeing here. We have a problem, and at each step there’s kind of the smiley face or the sad face. Think about it as the reward. At each step it got it right, but then at the final step it got it wrong. So we can actually tell it, step by step, what it got right and wrong, which is better because it learns how to think about each step along the way.

Now here is a critical statement. I’m going to read this, so listen: "A common myth is that RL (reinforcement learning) scaling only benefits large models. However, with high-quality SFT data distilled from larger models, smaller models can also learn to reason more effectively with RL." That’s what we showed with the "DeepSeek cloned for $30" video, and that’s what we’re showing here. This is an incredibly powerful method that DeepSeek has now exposed to the entire world. Everybody’s taking advantage of this now and seeing that it’s very efficient and very inexpensive to get these techniques working with small
models. So they were able to achieve this with just 3,800 A100 GPU hours, which is an 18.42 times reduction compared to DeepSeek R1, while achieving performance surpassing OpenAI’s o1-preview with just a 1.5 billion parameter model. I can’t stress how crazy this is.

So imagine we have a bunch of these tiny models running around that are hyper-trained on very narrow tasks. Now, obviously this is not so narrow, this is general math. But we can get even smaller. Maybe we can get smaller than 1.5 billion parameters, maybe we can’t, but also maybe we can train it for a lot cheaper even than this. In total they spent $4,500 on the training of this model to get better than o1-preview. And not only that, they open sourced it all, so you can download the model, download the weights, and see the training pipeline. You can basically get everything and recreate it yourself.

Now here’s the model. It’s a GGUF version, and for the full F32 precision it’s only 7 GB, easily offloaded onto my GPU. But if you go down to the Q5 version, so a little bit of quantization, it’s only 1.12 GB. Really, really easy to run. So I’m going to download the Q5 version right now.

All right, so we have it here. This is LM Studio. I have one of the problems from the AIME 2024 math benchmark, so let’s give it a try. Thinking... this is so cool. Look how fast it’s running, by the way, and I’m doing training in the background. This is all on my little M2 Mac, and that’s what you get with a tiny model. And this is the quantized version, so keep that in mind.

Okay, it is doing so much thinking. Look at all of this. All right, so we got the final answer. I don’t believe it’s actually right, but it’s still impressive, because look how much thinking it did. And again, this model only gets around 43% accuracy on this benchmark, so it’s possible it got this one wrong in the actual benchmark as well. But look how much thinking there was. It used 21,000 tokens to think through this. That’s 44 tokens per second, at the same time as I’m
recording, at the same time as I’m doing separate training in the background. Very, very impressive. We now have an incredible math model running locally, and you could even get it working on your phone.

If you enjoyed this video, please consider giving a like and subscribe, and I’ll see you in the next one.
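
A footnote on the outcome-versus-process reward distinction described in the video: here is a minimal Python sketch of the difference, using a toy problem. Everything in it is an assumption made for illustration. The function names `outcome_reward` and `process_rewards`, the exact-string-match checking, and the example steps are all hypothetical and are not DeepScaleR’s actual reward function or training code.

```python
from typing import List


def outcome_reward(final_answer: str, reference: str) -> float:
    """Outcome-style reward: 1.0 only if the final answer matches, else 0.0."""
    return 1.0 if final_answer.strip() == reference.strip() else 0.0


def process_rewards(steps: List[str], reference_steps: List[str]) -> List[float]:
    """Process-style reward: one score per step.

    Toy check (an assumption for this sketch): a step counts as correct
    if it exactly matches the reference step at the same position.
    """
    scores = []
    for i, step in enumerate(steps):
        matches = i < len(reference_steps) and step.strip() == reference_steps[i].strip()
        scores.append(1.0 if matches else 0.0)
    return scores


# Toy problem: solve 3 * (x + 2) - 6 = 120, with a slip at the very last step.
reference_steps = [
    "3 * (x + 2) - 6 = 120",
    "3 * (x + 2) = 126",
    "x + 2 = 42",
    "x = 42 - 2",
    "x = 40",
]
model_steps = [
    "3 * (x + 2) - 6 = 120",
    "3 * (x + 2) = 126",
    "x + 2 = 42",
    "x = 42 - 2",
    "x = 44",  # wrong final answer
]

orm = outcome_reward(model_steps[-1], reference_steps[-1])
prm = process_rewards(model_steps, reference_steps)

print("outcome reward:", orm)   # a single signal for the whole attempt
print("process rewards:", prm)  # one signal per step
```

With the outcome-style reward, the attempt above gets a single zero even though four of its five steps are correct. The process-style reward still credits those four steps. The trade-off is that an outcome reward only needs a verifiable final answer, while a process reward needs some way to judge each intermediate step.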