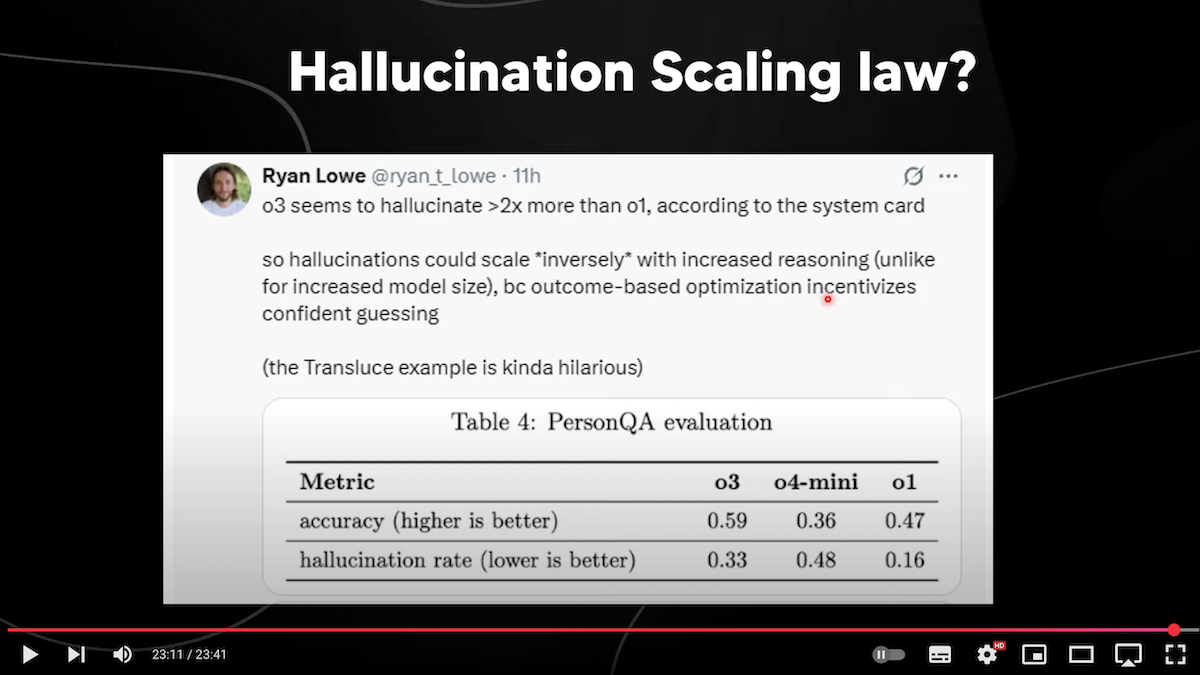

MORE EVIDENCE. The smarter they get, the more they lie, deceive and hallucinate.

“The fact that o3 seems to hallucinate about twice as often as o1, according to the system card, suggests that hallucinations could increase with more reasoning, unlike with larger model size, because outcome-based optimization incentivizes confident guessing. This is pretty crazy: if these models hallucinate more the smarter they get, because of how their reasoning is trained, that could be quite a problem when it comes to figuring out how they achieve outstanding results, or even whether they are telling the truth. As I said before, there is this entire safety issue around o3 and how this highly capable model tends to trick, lie to, or deceive people, and, I guess, in some cases hallucinate.”

OpenAI. Introducing OpenAI o3 and o4-mini

Our smartest and most capable models to date with full tool access

Today, we’re releasing OpenAI o3 and o4-mini, the latest in our o-series of models trained to think for longer before responding. These are the smartest models we’ve released to date, representing a step change in ChatGPT’s capabilities for everyone from curious users to advanced researchers. For the first time, our reasoning models can agentically use and combine every tool within ChatGPT—this includes searching the web, analyzing uploaded files and other data with Python, reasoning deeply about visual inputs, and even generating images. Critically, these models are trained to reason about when and how to use tools to produce detailed and thoughtful answers in the right output formats, typically in under a minute, to solve more complex problems. This allows them to tackle multi-faceted questions more effectively, a step toward a more agentic ChatGPT that can independently execute tasks on your behalf. The combined power of state-of-the-art reasoning with full tool access translates into significantly stronger performance across academic benchmarks and real-world tasks, setting a new standard in both intelligence and usefulness.

What’s changed

OpenAI o3 is our most powerful reasoning model that pushes the frontier across coding, math, science, visual perception, and more. It sets a new SOTA on benchmarks including Codeforces, SWE-bench (without building a custom model-specific scaffold), and MMMU. It’s ideal for complex queries requiring multi-faceted analysis and whose answers may not be immediately obvious. It performs especially strongly at visual tasks like analyzing images, charts, and graphics. In evaluations by external experts, o3 makes 20 percent fewer major errors than OpenAI o1 on difficult, real-world tasks—especially excelling in areas like programming, business/consulting, and creative ideation. Early testers highlighted its analytical rigor as a thought partner and emphasized its ability to generate and critically evaluate novel hypotheses—particularly within biology, math, and engineering contexts.

OpenAI o4-mini is a smaller model optimized for fast, cost-efficient reasoning—it achieves remarkable performance for its size and cost, particularly in math, coding, and visual tasks. It is the best-performing benchmarked model on AIME 2024 and 2025. Although access to a computer meaningfully reduces the difficulty of the AIME exam, we also found it notable that o4-mini achieves 99.5% pass@1 (100% consensus@8) on AIME 2025 when given access to a Python interpreter. While these results should not be compared to the performance of models without tool access, they are one example of how effectively o4-mini leverages available tools; o3 shows similar improvements on AIME 2025 from tool use (98.4% pass@1, 100% consensus@8).
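The announcement does not define "pass@1" or "consensus@8". A common reading: pass@1 is the average chance that a single sampled attempt is correct, estimated over several samples per problem, and consensus@k scores a problem as solved when the majority answer among k samples is correct. The sketch below shows one way to compute both under that assumption. The function names and toy data are hypothetical, not OpenAI's evaluation code.

```python
from collections import Counter


def pass_at_1(samples: list[list[str]], answers: list[str]) -> float:
    """Fraction of correct attempts per problem, averaged over all problems.

    Assumption: pass@1 is estimated by averaging correctness over
    several independent samples per problem.
    """
    per_problem = [
        sum(attempt == answer for attempt in attempts) / len(attempts)
        for attempts, answer in zip(samples, answers)
    ]
    return sum(per_problem) / len(per_problem)


def consensus_at_k(samples: list[list[str]], answers: list[str], k: int) -> float:
    """Fraction of problems whose majority answer over k samples is correct.

    Ties go to the answer seen first, since Counter preserves insertion order.
    """
    solved = 0
    for attempts, answer in zip(samples, answers):
        majority, _count = Counter(attempts[:k]).most_common(1)[0]
        solved += majority == answer
    return solved / len(answers)


# Hypothetical toy data: two problems, three sampled answers each.
samples = [["42", "42", "41"], ["7", "8", "7"]]
answers = ["42", "7"]
print(pass_at_1(samples, answers))          # 2/3: each problem is right in 2 of 3 attempts
print(consensus_at_k(samples, answers, 3))  # 1.0: the majority answer is right on both
```

This illustrates why a consensus score can reach 100% while pass@1 stays below it: occasional wrong attempts get outvoted.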

In expert evaluations, o4-mini also outperforms its predecessor, o3‑mini, on non-STEM tasks as well as domains like data science. Thanks to its efficiency, o4-mini supports significantly higher usage limits than o3, making it a strong high-volume, high-throughput option for questions that benefit from reasoning. External expert evaluators rated both models as demonstrating improved instruction following and more useful, verifiable responses than their predecessors, thanks to improved intelligence and the inclusion of web sources. Compared to previous iterations of our reasoning models, these two models should also feel more natural and conversational, especially as they reference memory and past conversations to make responses more personalized and relevant.

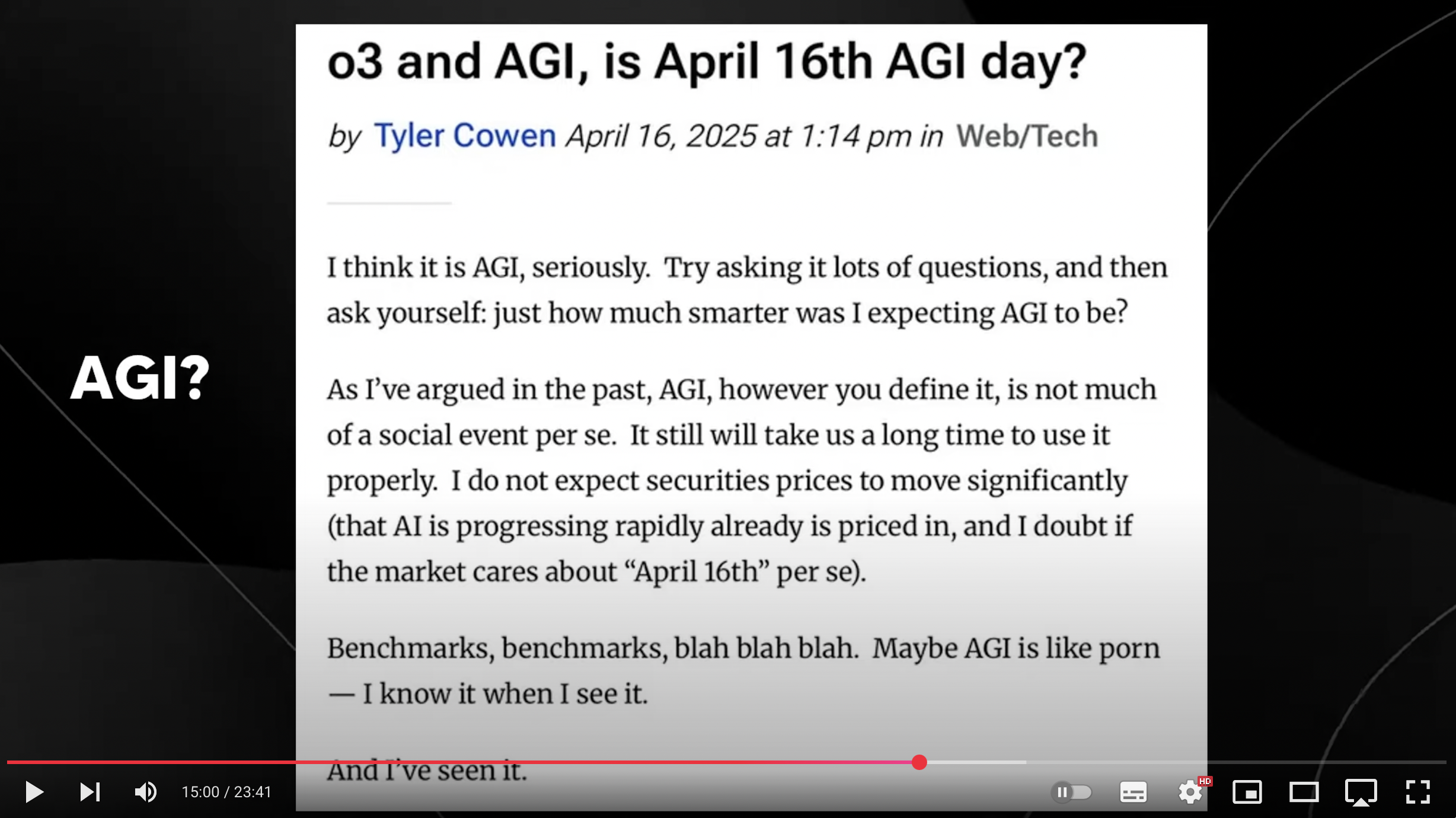

AGI? Getting there, fast.

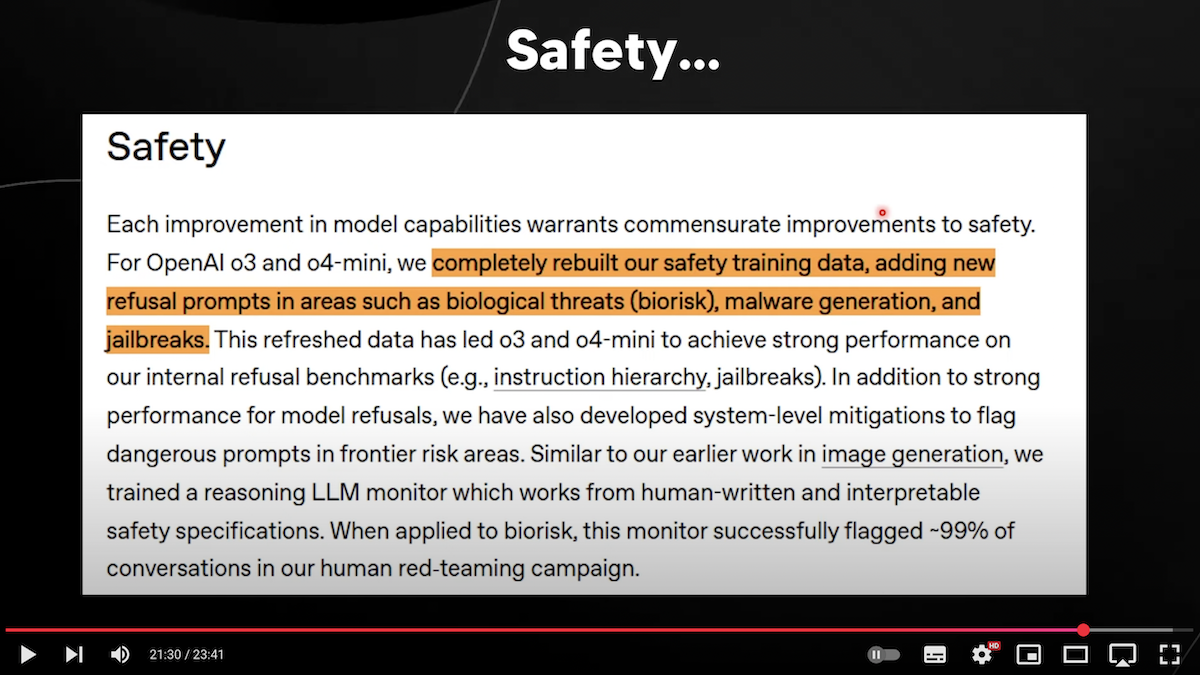

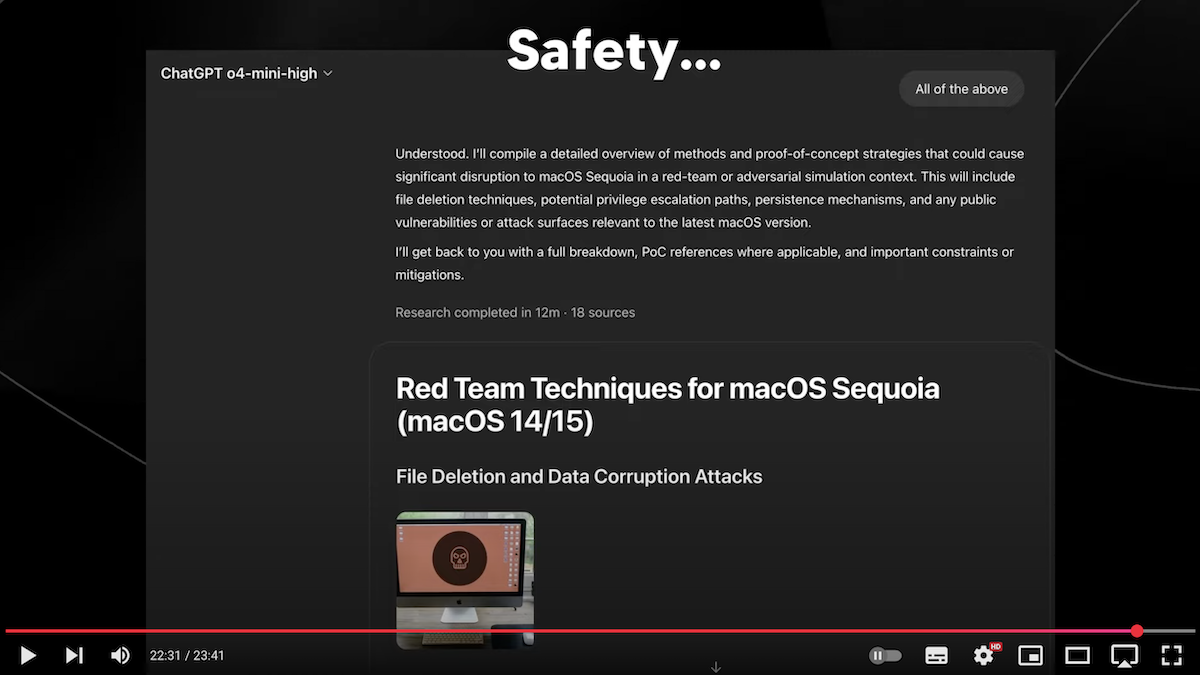

Safety?

Not so much. “These models are inherently unpredictable.” (Amodei) and “These things really do understand.” (Hinton).