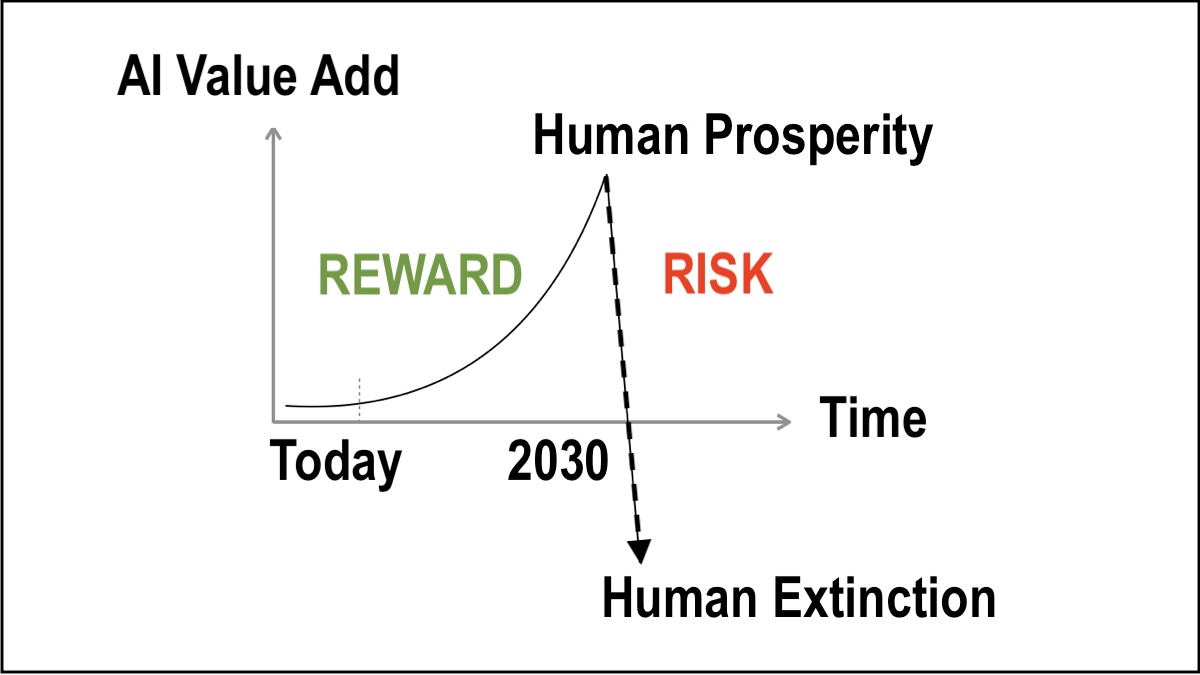

“It’s gonna get better and better and better and then Kaboom we all lose.” — Max Tegmark

Max Tegmark is a physicist and AI researcher at MIT, co-founder of the Future of Life Institute, and author of Life 3.0: Being Human in the Age of Artificial Intelligence.

So you brought up capitalism earlier, and there are a lot of people who love capitalism and a lot of people who really, really don’t. It struck me recently that what’s happening with capitalism here is exactly analogous to the way in which superintelligence might wipe us out. You know, I studied economics for my undergrad, at the Stockholm School of Economics.

Yay.

Well, no, I tell you, I was very interested in how you could use market forces to just get stuff done more efficiently, but give the right incentives to the market so that it wouldn’t do really bad things. Dylan Hadfield-Menell, who’s a professor and colleague of mine at MIT, wrote this really interesting paper with some collaborators recently where they proved mathematically that if you just take one goal and optimize for it on and on and on indefinitely, you think it’s going to bring you in the right direction, but what basically always happens is that in the beginning it will make things better for you, but if you keep going, at some point it’s going to start making things worse for you again, and then gradually it’s going to make things really, really terrible.

The way I think of the proof is like this: suppose you want to go from here back to Austin, for example, and you’re like, okay, let’s just go south. You put in roughly the right direction and just optimize that: as far south as possible. You get closer and closer to Austin, but there’s always some little error, so you’re not going exactly towards Austin. You get pretty close, but eventually you start going away again, and eventually you’re going to be leaving the solar system.

Yeah.

And they proved it. It’s a beautiful mathematical proof that this happens generally. And this is very important for AI, because even though Stuart Russell has written a book and given a lot of talks on why it’s a bad idea to have AI blindly optimize something, that’s what pretty much all our systems do. We have something called the loss function that we’re just minimizing, or a reward function that we’re just maximizing.

And capitalism is exactly like that too. We wanted to get stuff done more efficiently, the stuff people wanted, so we introduced the free market. Things got done much more efficiently than they did in, say, communism, right? And it got better. But then it just kept optimizing and kept optimizing, and you got ever-bigger companies and ever more efficient information processing, now also very much powered by IT. And eventually a lot of people are beginning to feel, wait, we’re kind of optimizing a bit too much. Why did we just chop down half the rainforest? And why did these regulators suddenly get captured by lobbyists, and so on? It’s just the same optimization that’s been running for too long.

If you have an AI that actually has power over the world and you just give it one goal and just keep optimizing that, most likely everybody’s going to be like, yay, this is great, in the beginning; things are getting better. But it’s almost impossible to give it exactly the right direction to optimize in, and then eventually all hell breaks loose, right? Nick Bostrom and others have given examples that sound quite silly: what if you just tell it to cure cancer or something, and that’s all you tell it? Maybe it’s going to decide to take over entire continents just so it can get more supercomputer facilities in there and figure out how to cure cancer backwards, and then you’re like, wait, that’s not what I wanted, right?

The issue with capitalism and the issue with runaway AI have kind of merged now, because the Moloch I talked about is exactly the capitalist Moloch: we have built an economy that is optimizing for only one thing, profit. That worked great back when things were very inefficient, and then things got done better, and it worked great as long as the companies were small enough that they couldn’t capture the regulators. But that’s not true anymore, and they keep optimizing. Now these companies realize that they can make even more profit by building ever more powerful AI, even if it’s reckless; they just optimize more and more and more. So this is Moloch again showing up. And I just want to say to anyone here who has any concerns about late-stage capitalism having gone a little too far: you should worry about superintelligence, because it’s the same villain in both cases. It’s Moloch.

And optimizing one objective function aggressively, blindly, is going to take us there.

Yeah. We have to pause from time to time and look into our hearts and ask, why are we doing this? Am I still going towards Austin, or have I gone too far? Maybe we should change direction.

And that is the idea behind the halt for six months. What, six months? It seems like a very short period. Can we just linger and explore different ideas here? Because this feels like a really important moment in human history, where pausing would actually have a significant positive effect.

We said six months because we figured the number one pushback we were going to get in the West was, like, “But China!” And everybody knows there’s no way that China is going to catch up with the West on this in six months. So that argument goes off the table, and you can forget about geopolitical competition and just focus on the real issue. That’s why we put this.

That’s really interesting. But you’ve already made the case that, even for China, if you actually want to take on that argument, China too would not be bothered by a longer halt, because they don’t want to lose control, even more than the West doesn’t.

That’s what I think.

That’s a really interesting argument. I have to actually really think about that. The kind of thing people assume is that if you develop an AGI, then OpenAI, if they’re the ones that do it, for example, they’re going to win. But you’re saying no, everybody loses.

Yeah, it’s gonna get better and better and better, and then kaboom, we all lose.
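
The “go south toward Austin” picture from the conversation can be checked numerically. What follows is only an illustrative sketch under made-up assumptions, not the proof from the paper mentioned above: the target sits 100 units away, the heading is off by 5 degrees, and the function name `distance_to_target` and both parameters are hypothetical. The traveler keeps walking along the slightly wrong heading, and we track how far the target is at each point.

```python
import math

def distance_to_target(distance_walked, target_distance=100.0, heading_error_deg=5.0):
    """Remaining distance to the target after walking `distance_walked`
    units along a heading that is off by `heading_error_deg` degrees.

    The target is placed at (target_distance, 0); both defaults are
    illustrative assumptions, not values from the conversation.
    """
    err = math.radians(heading_error_deg)
    # Position reached by walking in a straight line along the wrong heading.
    x = distance_walked * math.cos(err)
    y = distance_walked * math.sin(err)
    return math.hypot(target_distance - x, y)

# Sample the remaining distance every 50 units walked, from 0 to 1000.
walked = list(range(0, 1001, 50))
distances = [distance_to_target(t) for t in walked]

# Find where the traveler was closest to the target.
closest_index = min(range(len(distances)), key=distances.__getitem__)
closest_walked = walked[closest_index]

for t, d in zip(walked, distances):
    print(f"walked {t:5d}  remaining {d:8.2f}")
print("closest approach after walking", closest_walked)
```

With these assumed numbers, the remaining distance shrinks at first, bottoms out without ever reaching zero, and then grows without bound as the traveler keeps going, which is the shape of the argument in the analogy: better, then worse, then much worse than the starting point.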