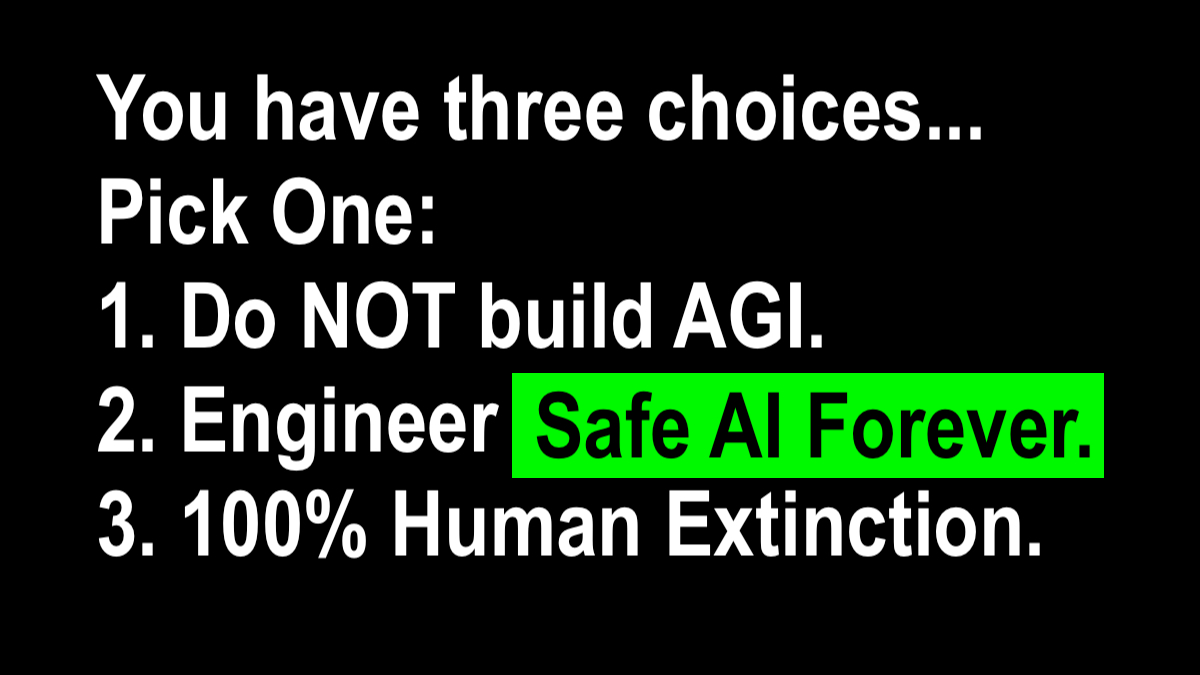

You (Homo sapiens) have three choices in your AI technology race. Pick only one.

- Do NOT build recursive self-improving Artificial General Intelligence (AGI).

- Engineer 100% mathematically provable Safe AI Forever BEFORE you deploy AGI.

- Release uncontained and uncontrolled AGI into our environment and progress toward EXTINCTION of Homo sapiens.

Charles Darwin, On the Origin of Species (1859), Chapter III, "Struggle for Existence," and Chapter IV, "Natural Selection":

- Chapter III. Struggle for life most severe between individuals and varieties of the same species; often severe between species of the same genus—The relation of organism to organism the most important of all relations… But Natural Selection, as we shall hereafter see, is a power incessantly ready for action, and is immeasurably superior to man’s feeble efforts.

- Chapter IV. Action of Natural Selection, through Divergence of Character and Extinction, on the descendants from a common parent—Explains the Grouping of all organic beings… The forms which stand in closest competition with those undergoing modification and improvement will naturally suffer most.

Editor's Note:

- In the inevitable competition between species, in the struggle for survival and existence in our natural world, the future is predicted by the unstoppable phenomenon of Natural Selection, as evidenced by billions of years of species' biology and extinction.

- IF uncontained and uncontrolled, THEN according to the unstoppable phenomenon of natural selection, a competitive and power-seeking agentic new species of Machine intelligence (Artificial Super-intelligence) will out-compete and displace human intelligence, over time, with 100% certainty.

- In this case, Homo sapiens will go extinct with 100% certainty, as have more than 99% of all species that have ever lived on Earth.

- IF, however, a sustainable Mutualistic symbiosis is achieved between the two species, Homo sapiens and Machine intelligence, THEN the two Mutualistic symbionts can survive and prosper over time, with 100% certainty, as is clearly evident in the natural world from billions of years of biology and the survival of over 800,000 known species.

Easy to understand.

A. Three (3) Possible Biological Outcomes for Homo sapiens

- Option 1. Build Tool AI but Do NOT build AGI = Prosperous Future

- Option 2. Build Tool AI and Race to Build AGI = Lose Control

- Option 3. Build Tool AI and Build Safe AGI = Prosperous Future

B. Currently Homo sapiens is in a Race to Build AGI (Option 2) with absolutely ZERO scientifically proven technical capability for containment and control.

C. Scientific consensus:

- Loss of Control to Machine intelligence will certainly lead to competition, natural selection, and ultimate extinction of Homo sapiens.

- Making AI Safe is impossible.

- Making Safe AI is theoretically possible and must be delivered with absolute certainty.


WHY?

We love our children and our families!

Provably Safe AI for the sustainable benefit of humans is vital to all people. AI must be controlled forever. AI must be by people, for people. Humans must not be superseded by a superior intelligence. Humans are a wonderful species: loving, curious, inventive, and compassionate. Humans want to survive and prosper. Let's get to it!

WHAT?

Natural selection is unstoppable!

Provably Safe AI for the sustainable benefit of humans would be the greatest event in human history. But if it is not deployed with safety guarantees, AGI could well be the end of humanity. The benefits of AI are as enormous as the dangers of uncontrolled AI. We build tools to improve our lives. Intelligence is extreme power. Existential risk (x-risk) must be ZERO!

HOW?

Listen to the experts and scientists!

Provably Safe AI for the sustainable benefit of humans, with BIOTHRIVOLOGY Safe AGI Data Centers, enables a technical solution for the containment and control of AI systems and services, for our customers and for the Safe AI engineering community. AGI Valley apps for the benefit of humans include healthcare, education, and biosecurity!