Excellent presentation by a highly respected AI scientist!

Speaker: Steve Omohundro

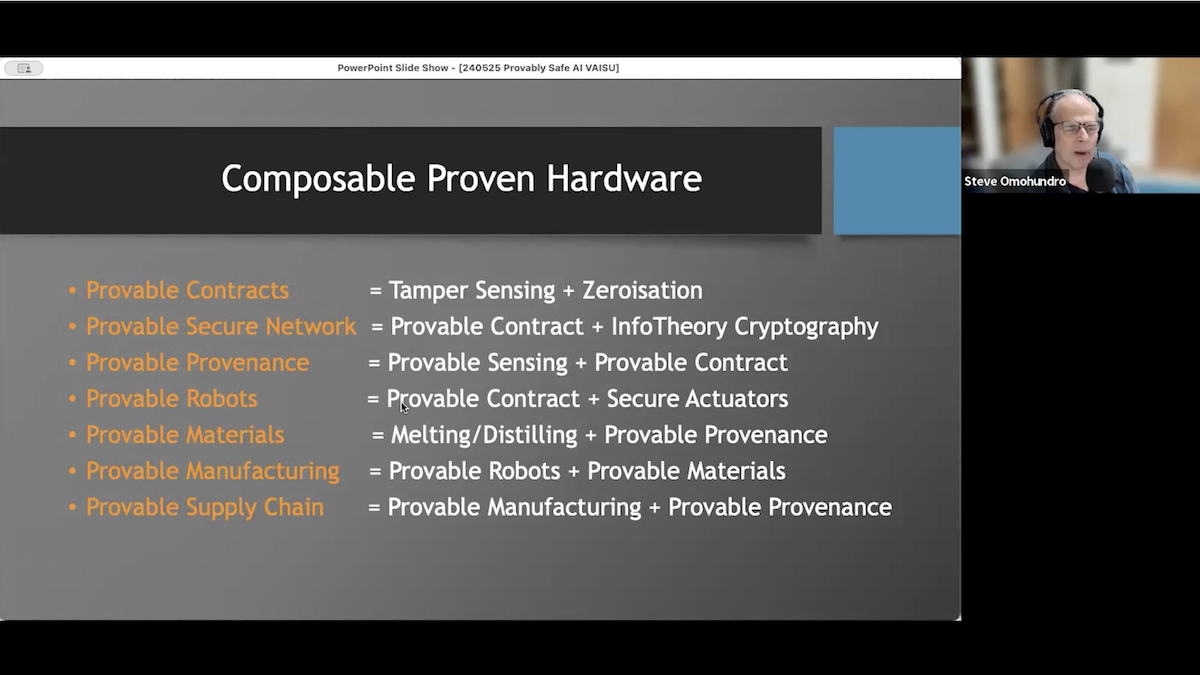

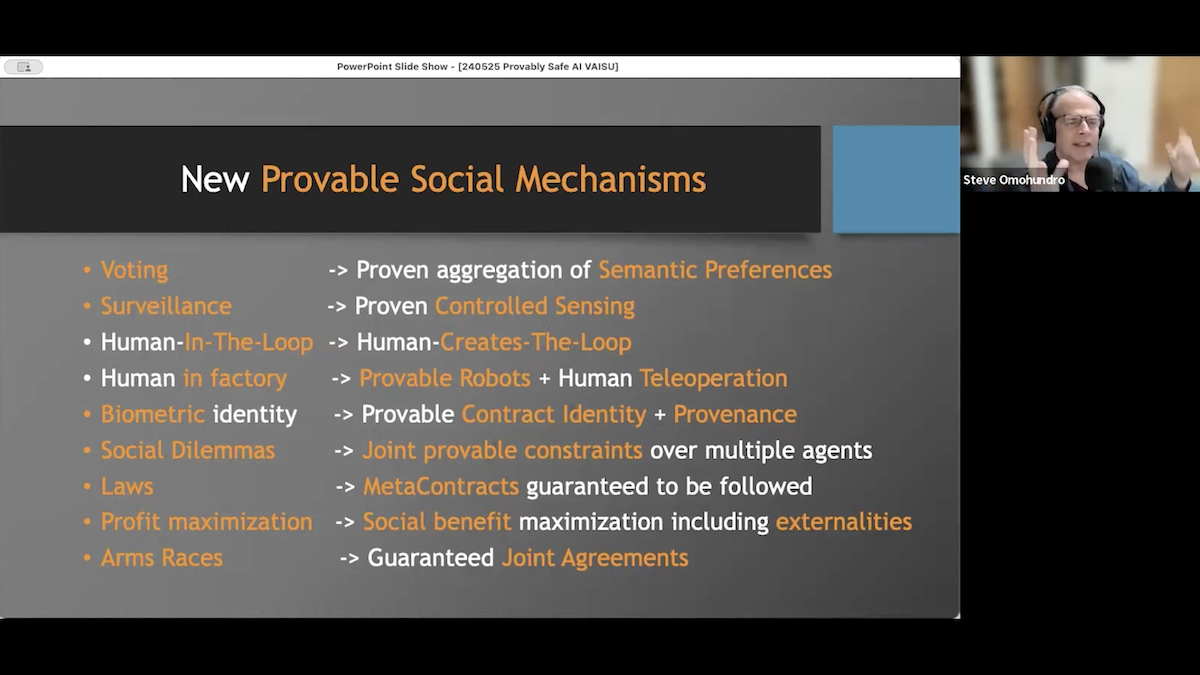

Session Description: We’ll discuss an approach to AI safety based on the laws of physics and mathematical proof, which are the only two guaranteed principles for constraining powerful AGI systems. The approach is very promising but will require many new ideas. We’ll discuss how the technology could lead not only to AI safety but also to human thriving.

SCIENTIFIC CONTRIBUTIONS by Steve Omohundro:

- AI Safety Salon with Steve Omohundro. Mind First Foundation

- Provably Safe Systems: The Only Path to Controllable AGI. Tegmark and Omohundro. 06 SEPT 2023

- STEVE OMOHUNDRO. PROVABLY SAFE AGI – MIT MECHANISTIC INTERPRETABILITY CONFERENCE – MAY 7, 2023

- LESSWRONG. Instrumental Convergence. Omohundro. Bostrom. References.

- Self-aware Systems – Steve Omohundro

- Omohundro, S. (2007). The Nature of Self-Improving Artificial Intelligence.

- Omohundro, S. (2008). “The Basic AI Drives”. Proceedings of the First AGI Conference.

- Omohundro, S. (2012). Rational Artificial Intelligence for the Greater Good.

- Steve Omohundro on Provably Safe AGI. Future of Life Institute

- SEMINAL REPORT. Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems. 10 MAY 2024.

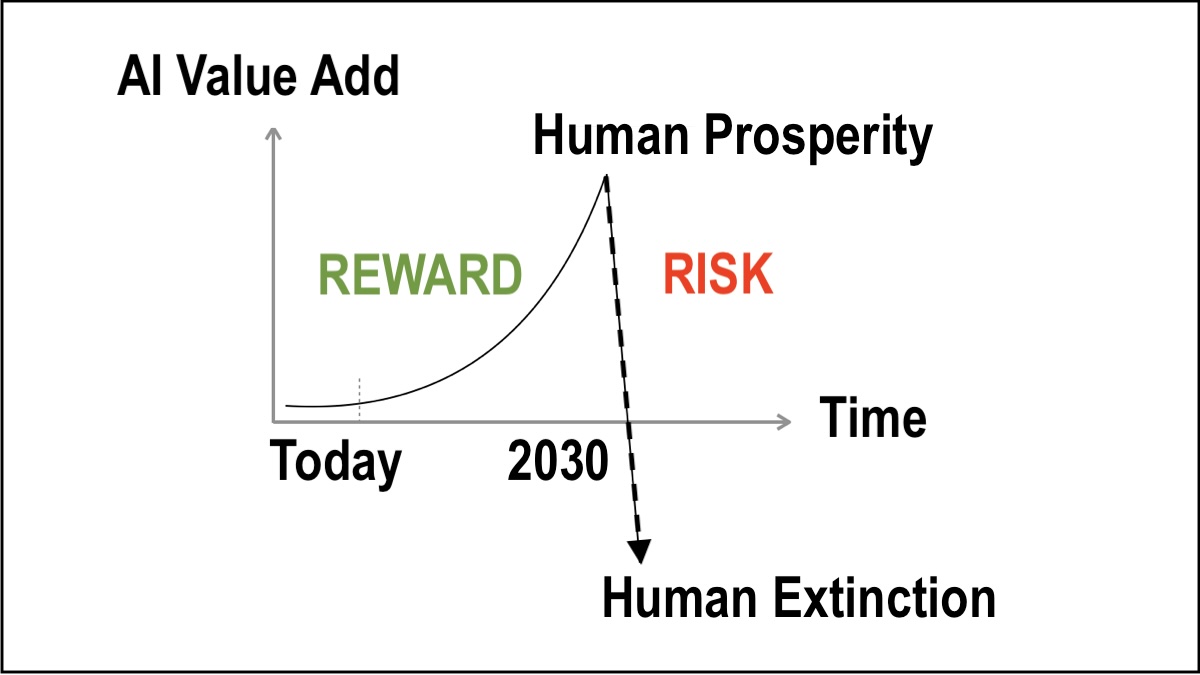

“It’s gonna get better and better and better and then KABOOM we all lose.” — Max Tegmark

OBVIOUSLY: “It doesn’t take a genius to realize that if you make something that’s smarter than you, you might have a problem… If you’re going to make something more powerful than the human race, please could you provide us with a solid argument as to why we can survive that, and also I would say, how we can coexist satisfactorily.” — Prof. Stuart Russell

Editorial Comment: P(Doom) is easy to understand. IF humans lose control to AI, THEN our future is entirely uncertain. IF AI competes with humans, THEN humans will be unable to compete and will be displaced over time, as surely as natural selection operates.

Learn More (5 minutes)

WHY?

We love our children and our families!

Provably Safe AI for sustainable benefit of humans is vital to all people. AI must be controlled forever. AI must be by people, for people. Humans must not be superseded by a superior intelligence. Humans are a wonderful species: loving, curious, inventive and compassionate. Humans want to survive and prosper. Let’s get to it!

WHAT?

Natural selection is unstoppable!

Provably Safe AI for sustainable benefit of humans would be the greatest event in human history. But, if not deployed with safety guarantees, AGI could well be the end of humanity. The benefits of AI are as enormous as the dangers of uncontrolled AI. We build tools to improve our lives. Intelligence is extreme power. X-risk must be ZERO!

HOW?

Listen to the experts and scientists!

Provably Safe AI for sustainable benefit of humans, with BIOTHRIVOLOGY Safe AGI Data Centers, enables a technical solution to the containment and control of AI systems and services for our customers and for the Safe AI engineering community. AGI Valley apps for the benefit of humans include healthcare, education & biosecurity!