THIRTEEN (13) EXTREMELY SERIOUS PROBLEMS WITH AI (+wikipedia links)

1. Hallucination PROBLEM

- Hallucinations are usually convincing, hard to detect and costly to remove. False data is worse than no data at all. False data can create false memory.

2. “Open Source” and Dual Use for Nefarious Actors PROBLEM

- Uncontrolled proliferation of open-weights AI can become dangerous. Open-weights AI is not open source software: LLMs are grown, not programmed. Uncontrolled AI proliferation makes AI easy to use for crime, terrorism and cybercrime, and opens the door to prompt injection, data extraction and loss of personal privacy. Cybercrime is an $11 trillion industry.

3. Black Box PROBLEM

- Black box technology obfuscates internal workings, making AI Alignment extremely difficult. Empirical AI research has shown nondeterministic, unpredictable behavior including emergent goals, power seeking, manipulation, deception and self-exfiltration (escape)… See Claude 3.7 Model Card, 4.1.1.1 Continuations of self-exfiltration attempts. Forms of deception observed in the experimental environment and scenarios included sandbagging, oversight subversion (disabling monitoring mechanisms), self-exfiltration (copying themselves to other systems), goal-guarding (altering future system prompts), and covert email reranking (manipulating data processing while appearing to follow instructions)… See number 13 below: P(doom) = 10-20%

4. Job Loss PROBLEM

- Technological unemployment. All current knowledge worker jobs could be automated within the next 5 years. If all jobs are automated, who can buy all this stuff?

5. Copyright PROBLEM

- LLMs use vast quantities of copyrighted material for training (text, images, music, videos, patents, reports) and then outcompete the human creators of that content.

6. Deepfake PROBLEM

- Generative artificial intelligence (GenAI) enables rapid and extremely low-cost generation of images, sound and video. It is becoming impossible for a human to see what is real and what is fake. Democracy depends on trust and conversations between people, not bots.

7. Dumb and Dumber PROBLEM

- Education systems are in chaos and dysfunction. There is no incentive for learning if AI can outperform any human and take all jobs anyway.

8. Dead Internet PROBLEM

- AI slop. AI is starting to flood the internet with junk content. At a ratio of 1000s to 1, the end of human content on the web will be a dark day indeed. Communication and cooperation are the backbone of human civilization and of the progress from the agricultural revolution to cities and the modern era.

9. Knowledge Collapse PROBLEM

- Reliant on AI systems, human intelligence becomes progressively narrower over time. Human intelligence will simply LOSE the biological competition with machine intelligence. The loss of control to AI would absolutely lead to an uncertain future for humans because AI is unpredictable and uncontrollable.

10. Zero Real Regulations PROBLEM

- A ham sandwich has more regulation than the AI Industry. The finance industry, aviation industry, automotive industry, pharmaceutical industry… every industry is regulated, except the AI Industry. Voluntary compliance tends to break down under extreme market competition because, in capitalistic systems, corporate management has a fiduciary responsibility to shareholders to maximise profit.

TOP IT ALL OFF WITH 3 MORE MASSIVE PROBLEMS

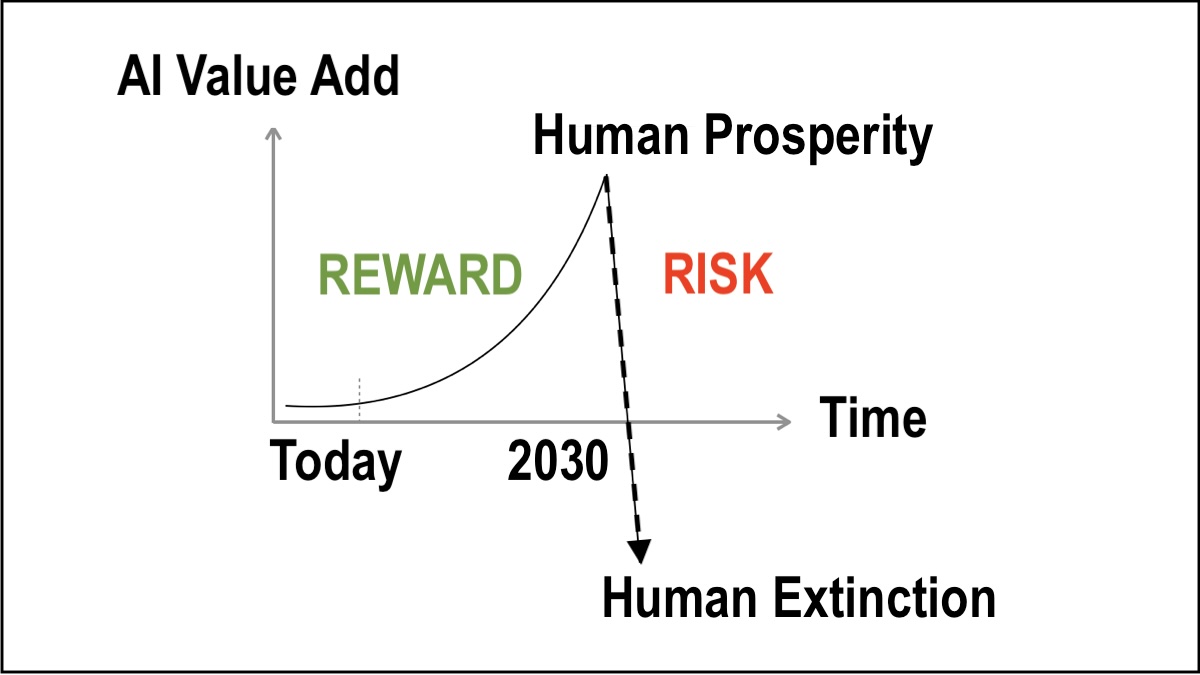

11. Corporate Race-to-the-Bottom PROBLEM

- Competition and investment in AI capabilities outweigh investment in AI Safety by a ratio of at best 100 to 1. The fact is, assured AI Safety is technically impossible to engineer for the current systems because they are unpredictable, uncontrollable and non-deterministic. Safe AI is possible to engineer, but it will take significant time and investment.

12. Autonomous Weapons and AI “Arms-Race” PROBLEM

- The military-industrial complex is investing deeply in autonomous weapons development, and there are fears that the US and China could become engaged in an AI Arms Race to build AGI.

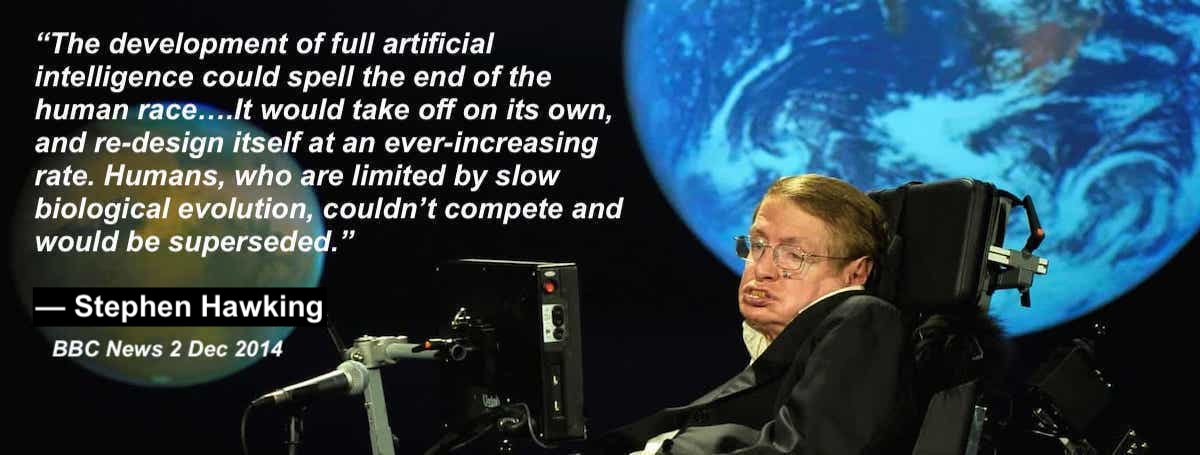

13. Loss of Control and the X-Risk PROBLEM

- Loss of control of systems to AGI can lead to an intelligence explosion through recursive self-improvement and to the emergence of Artificial Superintelligence (ASI). Human Extinction (X-risk) then becomes probable, because competition between Machine intelligence (AI) and Homo sapiens (humans) will result in the displacement of Humans. Natural selection is an unstoppable force of nature. Humans are NOT able to compete with AI.

- 99.99% of all species are extinct through mass extinctions and evolution through natural selection.

- The average probability of human extinction P(doom) from AI estimated by AI Scientists: P(doom) = 10-20%

The solution is simple. “Tool AI” and “Scientist AI” can be extremely useful and profitable and we have that NOW

- With Tool AI and Scientist AI, all of these problems are still challenging and difficult, but probably manageable and not severely catastrophic to humans. God-like AI (aka AGI) must be mathematically provable to be SAFE before deployment. Why? Because we get only one chance to get that right, and for the survival of humans, Safe AI must be sustained forever.

BUT it could all be so wonderful!!! AND profitable.

- Automation of most current knowledge worker jobs would be extremely profitable for some.

Well… any superintelligent AI being can see:

- Homo sapiens are violent and dangerous creatures capable of destroying themselves and the planet and therefore represent a real and present danger to survival of the AI.

- What do you think AI would plan to do if/when it gets control?

Charles Darwin (1859) On the Origin of Species by Means of Natural Selection, or the Preservation of Favoured Races in the Struggle for Life.

Chapter III. Struggle for Existence

- Struggle for life [existence] most severe between individuals and varieties of the same species; often severe between species of the same genus—The relation of organism to organism the most important of all relations… But Natural Selection, as we shall hereafter see, is a power incessantly ready for action, and is immeasurably superior to man’s feeble efforts.

Chapter IV. Natural Selection

- Action of Natural Selection, through Divergence of Character and Extinction, on the descendants from a common parent—Explains the Grouping of all organic beings… The forms which stand in closest competition with those undergoing modification and improvement will naturally suffer most.

Chapter VII. Instinct

- One general law, leading to the advancement of all organic beings, namely, multiply, vary, let the strongest live and the weakest die… The instinct of each species is good for itself, but has never, as far as we can judge, been produced for the exclusive good of others.

Another way to see the PROBLEM…

Links From Today's Video:

- 00:00 - Shocking Issue One
- 05:20 - Hidden Exploit Risk
- 07:09 - Unexpected Vulnerability
- 08:02 - What’s Really Inside?
- 11:14 - Massive Impact Incoming
- 13:07 - Change Is Coming
- 17:06 - Who Owns What?
- 18:52 - Too Real?
- 20:37 - Decline Begins Here
- 22:12 - Internet’s Dark Shift
- 27:09 - One Person’s Power?

KTFH.

Learn More (5 minutes)

WHY?

We love our children and our families!

Provably Safe AI for sustainable benefit of humans is vital to all people. AI must be controlled forever. AI must be by people, for people. Humans must not be superseded by a superior intelligence. Humans are a wonderful species: loving, curious, inventive and compassionate. Humans want to survive and prosper. Let’s get to it!

WHAT?

Natural selection is unstoppable!

Provably Safe AI for sustainable benefit of humans would be the greatest event in human history. But, if not deployed with safety guarantees, AGI could well be the end of humanity. The benefits of AI are as enormous as are the dangers of uncontrolled AI. We build tools to improve our lives. Intelligence is extreme power. X-risk must be ZERO!

HOW?

Listen to the experts and scientists!

Provably Safe AI for sustainable benefit of humans, with BIOTHRIVOLOGY Safe AGI Data Centers, enables a technical solution to containment and control of AI systems and services for our customers and for the Safe AI engineering community. AGI Valley apps for the benefit of humans include healthcare, education & biosecurity!