For AI safety, the researchers deploy the models in a sandboxed and supervised environment, BUT they release open source code AND open weights… it doesn’t take a genius to realize that a nefarious actor could cause a HUGE problem.

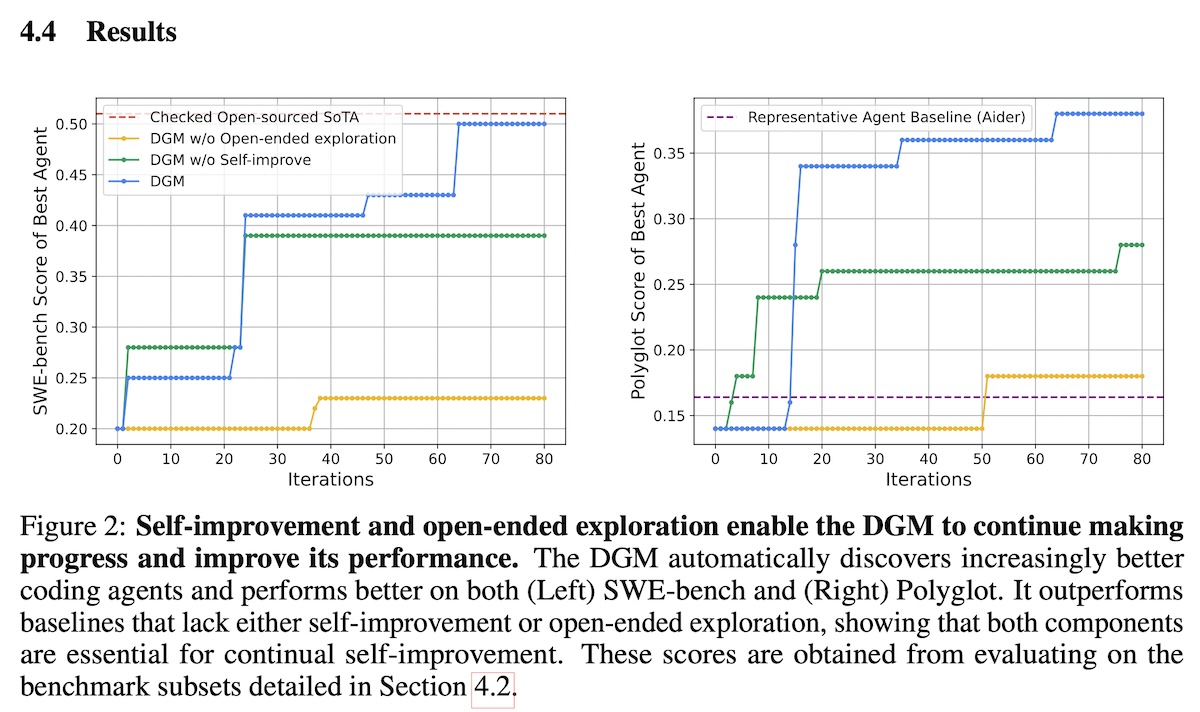

Abstract. Most of today’s AI systems are constrained by human-designed, fixed architectures and cannot autonomously and continuously improve themselves. The scientific method, on the other hand, provides a cumulative and open-ended system, where each innovation builds upon previous artifacts, enabling future discoveries. There is growing hope that the current manual process of advancing AI could itself be automated. If done safely, such automation would accelerate AI development and allow us to reap its benefits much sooner. This prospect raises the question of how AI systems can endlessly improve themselves while getting better at solving relevant problems. Previous approaches, such as meta-learning, provide a toolset for automating the discovery of novel algorithms but are limited by the human design of a suitable search space and first-order improvements. The Gödel machine [116], on the other hand, introduced a theoretical approach to a self-improving AI, capable of modifying itself in a provably beneficial manner. Unfortunately, this original formulation is in practice impossible to create due to the inability to prove the impact of most self-modifications. To address this limitation, we propose the Darwin Gödel Machine (DGM), a novel self-improving system that iteratively modifies its own code (thereby also improving its ability to modify its own codebase) and empirically validates each change using coding benchmarks. In this paper, the DGM aims to optimize the design of coding agents, powered by frozen foundation models, which enable the ability to read, write, and execute code via tool use. Inspired by biological evolution and open-endedness research, the DGM maintains an archive of generated coding agents. It then samples from this archive and tries to create a new, interesting, improved version of the sampled agent. 
This open-ended exploration forms a growing tree of diverse, high-quality agents and allows the parallel exploration of many different paths through the search space. Empirically, the DGM automatically improves its coding capabilities (e.g., better code editing tools, long-context window management, peer-review mechanisms), producing performance increases on SWE-bench from 20.0% to 50.0%, and on Polyglot from 14.2% to 30.7%. Furthermore, the DGM significantly outperforms baselines without self-improvement or open-ended exploration. All experiments were done with safety precautions (e.g., sandboxing, human oversight). Overall, the DGM represents a significant step toward self-improving AI, capable of gathering its own stepping stones along a path that unfolds into endless innovation. All code is open-sourced at https://github.com/jennyzzt/dgm.
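
The archive-and-sample loop the abstract describes can be illustrated with a small toy sketch. It is not the authors' implementation: `Agent`, `evaluate`, `self_modify`, and `run_dgm` are hypothetical names, a single `skill` number stands in for an agent's real codebase, the benchmark is simulated with noise, and the score-weighted parent sampling is an assumption rather than the paper's exact selection rule.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    """Toy stand-in for a coding agent; `skill` replaces the real codebase."""
    agent_id: int
    parent_id: Optional[int]
    skill: float        # hypothetical hidden quality of the agent's scaffolding
    score: float = 0.0  # benchmark score, measured empirically


def evaluate(agent: Agent, rng: random.Random) -> float:
    """Hypothetical benchmark: a noisy measurement of skill, clipped to [0, 1]."""
    return min(1.0, max(0.0, agent.skill + rng.gauss(0, 0.02)))


def self_modify(parent: Agent, new_id: int, rng: random.Random) -> Agent:
    """Hypothetical self-modification: a random change that may help or hurt."""
    return Agent(new_id, parent.agent_id, parent.skill + rng.gauss(0.01, 0.05))


def run_dgm(iterations: int = 80, seed: int = 0) -> list:
    rng = random.Random(seed)
    root = Agent(0, None, skill=0.2)
    root.score = evaluate(root, rng)
    archive = [root]
    for new_id in range(1, iterations + 1):
        # Sample a parent from the whole archive, favoring higher scores but
        # never excluding weaker agents, since they may be stepping stones.
        weights = [agent.score + 0.05 for agent in archive]
        parent = rng.choices(archive, weights=weights, k=1)[0]
        child = self_modify(parent, new_id, rng)
        child.score = evaluate(child, rng)  # empirical validation, no proof
        archive.append(child)               # kept even if it scores worse
    return archive


if __name__ == "__main__":
    archive = run_dgm()
    best = max(archive, key=lambda agent: agent.score)
    print(f"agents: {len(archive)}, best score: {best.score:.2f}")
```

Because every child records its `parent_id`, the archive forms a tree, and a branch that starts out weak can still be picked later as a parent.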

ProRL: Prolonged Reinforcement Learning Expands Reasoning Boundaries in Large Language Models

Abstract. Recent advances in reasoning-centric language models have highlighted reinforcement learning (RL) as a promising method for aligning models with verifiable rewards. However, it remains contentious whether RL truly expands a model’s reasoning capabilities or merely amplifies high-reward outputs already latent in the base model’s distribution, and whether continually scaling up RL compute reliably leads to improved reasoning performance. In this work, we challenge prevailing assumptions by demonstrating that prolonged RL (ProRL) training can uncover novel reasoning strategies that are inaccessible to base models, even under extensive sampling. We introduce ProRL, a novel training methodology that incorporates KL divergence control, reference policy resetting, and a diverse suite of tasks. Our empirical analysis reveals that RL-trained models consistently outperform base models across a wide range of pass@k evaluations, including scenarios where base models fail entirely regardless of the number of attempts. We further show that reasoning boundary improvements correlate strongly with the task competence of the base model and with training duration, suggesting that RL can explore and populate new regions of solution space over time. These findings offer new insights into the conditions under which RL meaningfully expands reasoning boundaries in language models and establish a foundation for future work on long-horizon RL for reasoning. We release model weights to support further research: this https URL
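
For readers unfamiliar with the pass@k metric mentioned in the abstract, here is a minimal sketch of the commonly used unbiased estimator: given n sampled answers of which c are correct, it estimates the chance that at least one of k randomly drawn samples is correct. Whether ProRL computes pass@k in exactly this way is an assumption, and the function name `pass_at_k` is only illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n samples, c of which are correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) / comb(n, k) is the probability that a random
    # size-k subset of the n samples contains no correct answer.
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # A model that never solves the task scores 0 for every k.
    print(pass_at_k(n=16, c=0, k=16))
    # A model that solves it in 2 of 4 samples, evaluated at k=2.
    print(pass_at_k(n=4, c=2, k=2))
```

This is why the "fail entirely regardless of the number of attempts" case matters: when c is 0 the estimate stays at 0 however large k grows, so any nonzero pass@k after RL training indicates solutions the base model never sampled.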

Sakana AI just released another step in the direction of fully autonomous, self-improving artificial intelligence. This is the holy grail of AI. This is called the Darwin Gödel Machine, and it uses a combination of previously theorized methods of self-improving code mixed with evolutionary mechanics like Darwin’s theory of evolution. And with these two concepts put together, they have seen massive self-improvements in benchmarks like SWE-bench and Aider Polyglot. So I’m going to break this paper down for you, but first, of course, I need to talk about the intelligence explosion.

Again, I know you’re probably sick of hearing me talk about it, but it really seems like we are at the inflection point, right at that point at which we have self-improving artificial intelligence: that is, AI that can discover new knowledge and apply it to itself, getting better in a recursive fashion. And once we achieve that, that’s when we’re going to have the intelligence explosion. And so we’ve seen a number of different papers and projects lately. We’ve had the AI Scientist, also from Sakana AI. We’ve had AlphaEvolve from Google. AlphaEvolve was able to discover improvements in Google’s hardware algorithms that allowed for a meaningful percent increase in performance across their entire fleet of servers. It was also able to figure out more efficient ways to do matrix multiplication. So imagine we take all of these discoveries, and then it applies them to itself and then continues, and at that point we have this exponential, compounding improvement.

All right, so the gist: what are we actually talking about here? The Darwin Gödel Machine (DGM) is “a novel self-improving system that iteratively modifies its own code and empirically validates each change using coding benchmarks.” I’m going to explain all of this in really simple terms. Just stick with me.

All right, so large language models have been an incredible innovation over the last few years, but they have one big limitation, and it’s us humans. The only way for these
large language models to get better, whether we’re talking about pre-training methods or post-training algorithms, is human innovation and human application. “Most of today’s AI systems remain bound by fixed, human-designed architectures that learn within predefined boundaries, without the capacity to autonomously rewrite their own source code to self-improve. Each advancement in AI development still leans heavily on human interventions, tethering the pace of progress.”

Now let me give you a related analogy. The most recent major innovation in artificial intelligence was reinforcement learning with verifiable rewards. That is because we’re able to post-train the models to become thinking models without human intervention. The “verifiable rewards” part means that we can tell the model if it’s giving us the right answer or the wrong answer without a human needing to label it. That’s because we know: does 2 plus 2 equal 4? Yes. Okay, model, you got that right. That is the verifiable reward part. So when you remove humans from the loop, you are able to scale up performance much more quickly. “One can imagine an AI system that, like scientific discovery itself, becomes an engine of its own advancement.”

Now, this isn’t the first time something like this has been proposed. In fact, it’s part of the name of the Darwin Gödel Machine: Gödel. The Gödel machine was proposed back in 2007. It was theoretical, and it proposed an approach to self-improving AI capable of modifying itself in a provably beneficial manner. Now, that “provably” is the important part. And the reason it’s important is that it’s kind of impossible to prove, before a modification is made, that it is better than the previous version. “This original formulation is in practice impossible to create due to the inability to prove the impact of most self-modifications.” It’s basically trying to predict: is this next version of myself going to be better or worse? That’s not how evolution works. How evolution works is some random modification happens
and the real world puts it to the test. If all of a sudden a frog develops the ability to change its color to better blend in with its environment, that frog is going to live longer, it’s going to reproduce more, and then evolution takes over from there. That’s obviously a hyper-oversimplification of evolution, but generally speaking, that’s what’s happening. And so before that new evolution of frog was born, it didn’t try to predict if being able to change its colors was going to be beneficial or not. It would be impossible. And so that’s why the Gödel machine originally wasn’t really practical.

But what if we take that evolutionary system and apply it to the Gödel machine? Rather than trying to provably predict if an evolution is going to be beneficial or not, what if we just generate it and test it in the real world? That’s exactly what the DGM does. “So instead of requiring formal proofs, we empirically validate self-modifications against a benchmark, allowing the system to improve and explore based on observed results.” That is an important improvement to the Gödel machine. Really, a critical improvement. Now listen to this: “This approach mirrors biological evolution, where mutations and adaptations are not verified in advance but are produced, trialed, and then selected via natural selection.” And I posted this on X yesterday: modeling AI systems after natural systems is likely the way to go. And by the way, if you’re not following me on X, please do: Matthew Berman.

But it’s not just coming up with random changes, testing them, and then moving on to the next one, because that would actually cause problems, which I’ll get into in a minute. In fact, they took an approach much more similar to Darwinian evolution. “We take inspiration from Darwinian evolution and investigate the effectiveness of maintaining a library of previously discovered agents to serve as stepping stones for future generations.” So even if they find an evolution and it isn’t quite as good as some other variation, they don’t just throw
it away. They keep it and consider it for future evolution. So that’s where the Darwin Gödel Machine comes in. “It is a self-referential, self-improving system that writes and modifies its own code to become a better coding agent.” So, a lot of high-level language here. I really want to show you the details, the nitty-gritty of how this stuff works.

So let me paint the picture first. We have the archive of all evolutions. We have the parents that give birth to the child. Then we have some variation of the parent. It self-modifies. It doesn’t try to predict whether it’s going to work or not. It just proposes a change and makes the change. Then it writes all of that to itself, so it changes its core code. Then it evaluates against a benchmark like SWE-bench or Aider Polyglot and decides: is it better, is it worse? It adds the result to the archive, and then it just does that over and over again. Hopefully each evolution is better than the previous generation, and eventually, at the end, it sees a big performance bump. And spoiler alert: it actually did.

Okay, so how does it actually work? Let’s get into the specifics. The DGM is initialized with only one coding agent. Remember, in each generation the parent is just a coding agent: that is, a large language model wrapped with scaffolding, meaning tools, memory, whatever else there is. It is just that core LLM wrapped into an agent, and its progression is evaluated on coding benchmarks. “A coding agent is defined as a single system, implemented with a code repository and powered by frozen pre-trained foundation models.” Why do they say frozen? Well, that’s the part that doesn’t evolve. They are using just a core foundation model. It turns out it’s Claude 3.5 Sonnet (New). And the paper actually talks about the fact that in future papers they’re going to explore updating the foundation model. But for this paper, to avoid making it overly complicated, they froze the foundation model. That does not change. Only the code around it changes, only the tools it has
access to. So these agents have the capability of reading, writing, and executing code. They also talk about this meta-learning that has been happening for a while now: that is, prompts, workflows, and tools, the scaffolding around the large language model. When you improve those things, the overall performance of the model improves as well. And so they define self-improvement as “a coding task that involves modifying the design of an agent’s own components, i.e., its own code.” So: the prompts, the workflows, and the tools.

So then, starting from that initial coding agent, “the DGM builds an archive of all discovered agents. In each iteration, the DGM selects parent agents to self-modify and branch off to produce new agents. Each selected parent analyzes its own benchmark evaluation logs, proposes the next feature to implement, and receives this proposal as a problem statement to execute. The parent then implements the suggested feature into its own codebase, generating a new coding agent. Each newly generated agent is quantitatively evaluated on a chosen coding benchmark to estimate its coding abilities.” So that’s the process by which a coding agent starts to multiply.

Then, the foundation model has access to two tools initially: a Bash tool for executing Bash commands (that just means controlling your console) and an edit tool for viewing and editing directories and entire files. That’s it. Those are the only tools it has available in that initial agent. But of course, it can write its own tools. Now, “the agent receives a single input prompt that specifies the location of the target repository, the problem statement, and the repository’s testing framework. We run the DGM for 80 iterations, with two iterations running in parallel for SWE-bench and four for Polyglot.” All of this is powered by Claude 3.5 Sonnet (New).

All right, let’s talk about some of the results now. What we see up here at the top is the state of the art, whatever the best model was at the time. Then we have DGM. So first, DGM
without open-ended exploration, meaning there is no archive and it really just keeps modifying its latest version. And as we can see here, after a number of iterations it gets a little bit better, but really just plateaus immediately. Then in green we have DGM without self-improvement. So it has open-ended exploration but does not have self-improvement. And as we can see here, it does pretty well: some nice gains, and then it plateaus. But when given open-ended exploration and self-improvement (this blue line right here), we see increased performance over time, to nearly state-of-the-art performance. So after 80 iterations of the DGM, the coding agent’s performance increases from 20% to 50% on SWE-bench and from 14.2% to 30.7% on Polyglot. These are massive gains from just allowing these models to iterate on their own.

So here’s what the DGM archive tree looks like. You start with an initial agent. Then you start spawning the variations of that agent, proposing different changes, implementing them, and then benchmarking them. And as you can see, the ones that did well continue to spawn, all the way down to where this star is right here, which is the final best agent. And remember, it keeps track of all of the different evolutions along the way. “On Polyglot, although the DGM starts with an initial agent whose performance is lower than that of Aider, it discovers an agent that far surpasses Aider.” And Aider is a very, very good coding agent. And here’s the key: “While the state-of-the-art SWE agent and Aider were painstakingly shaped by human efforts, the DGM hints at a future in which such ingenuity is automated, evolving through self-referential cycles and continuous self-improvements.” So the DGM evolves its tools, it evolves its workflows, and just gets better. But remember, the core model, the foundation model powering all of it, stays the same.

And I want to pause here for a second, because this is important. A lot of the benchmarks that we have now are completely saturated by these models. And we’re still seeing nice performance bumps in these models, but not
like it was from GPT-3.5 to GPT-4. These models are already incredibly capable. In fact, I’ve made the argument that 95 to 98% of all use cases are completely achievable with the intelligence of current models. We don’t need massive gains in the core intelligence anymore. Obviously, for the most sophisticated use cases, sure. But for the majority of use cases, we’ve already achieved saturation. What we need now is massive investment in the tooling around it, the scaffolding, whether that’s evolution systems like the Darwin Gödel Machine, memory, tooling like MCP, web browsing, or collaboration between agents. That is where the massive investment needs to be, because again, the core intelligence of the models is already there.

All right, so what did it actually do? What did it change? Well, here are a couple of example improvements that it figured out, proposed, and validated on its own. For example, “the DGM enhanced the edit tool to allow more granular file viewing by lines and more precise file editing by string replacement, instead of always viewing or replacing the entire file.” And then here’s an example of workflow improvements: “making multiple attempts to solve a task and using another foundation model to evaluate and select the best solution.” It also considered previous attempts when generating subsequent ones.

And the reason why open-ended exploration, keeping track of all the previous evolutions, is important is that if you just head down one evolution track, you might get stuck in a local maximum. Meaning you found whatever is best for that single track, but you may have missed other tracks that maybe started out more slowly but ended up performing best in the end. They call these deceptive dips or peaks in performance. And the cool thing is, this framework, the DGM, is actually generalizable beyond just the model that they tested. They tested a bunch of different models and saw similarly big gains, and not only in Python
which was the language that they chose for this experiment. They tried other languages, and it also worked very well.

So now let’s talk about safety, because of course, if we’re talking about the intelligence explosion, we’re going to have superintelligence, and we need to talk about safety. “This capability,” referring to the DGM, “introduces unique safety considerations stemming from the system’s ability to autonomously modify its own code.” If it can modify its own code, we need to keep a close eye on it. “Modifications optimized solely for benchmark performance might inadvertently introduce vulnerabilities or behaviors misaligned with human intentions, even if they improve the target metric.” This sure sounds like reward hacking to me.

And as a reminder, reward hacking means the reward system we set up to tell a model whether it’s doing better or worse gets hacked because the model found a loophole. An example, which I’ve used before but I’m going to use again, is the boating video game that OpenAI published a few years ago. They were trying to train AI to get the highest score in a boat racing game. And of course, you think, well, it’s a boat racing game, so the ultimate objective is to win the race. But what they were using as the reward signal was the number of points generated by the AI in the game. And the model figured out that if it simply went around in circles and hit a bunch of obstacles, it was getting points for that. And it would actually get more points than just finishing the race. That is a reward hack. And so if we have self-evolving systems, we need to make sure that the benchmark that we’re testing against, the reward, is well aligned and well defined, because otherwise it might find ways to hack that reward and we would have unintended consequences. “Self-improvement loops could amplify misalignment over successive generations.”

So how do they actually add safety to this system? “All agent execution and self-modification processes are conducted within isolated sandbox environments.” So they can only
change so much; they can only go so far. “Each execution within the sandbox is subjected to a strict time limit, reducing the risk of resource exhaustion or unbounded behavior. The self-improvement process is currently confined to the well-defined domain of enhancing performance on specific coding benchmarks by modifying the agent’s own Python codebase, thus limiting the scope of potential modifications.”

So that is the Darwin Gödel Machine. This is proof that we can have self-improving artificial intelligence. Now, of course, it still needs to get better. We still need to throw a whole bunch of compute behind it. But it really does seem like we’re starting to see little hints here and there that we are at that inflection point of self-improving AI, also known as the intelligence explosion.

Now I’m going to leave you with one last thing. Remember I mentioned that the only thing that is not evolving in this system is the foundation model itself? Now think about that AlphaEvolve paper, in which it discovered, for the first time in 50 years, a more efficient way to do matrix multiplication. Imagine taking that and applying it to the foundation model. Imagine the AI being able to pre-train another version of its foundation model, or post-train it, and evolve the core intelligence of the entire scaffolding. Now that could be the last piece missing for the intelligence explosion.
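
As a footnote to the safety measures described above, here is a minimal sketch of the "strict time limit" idea only. It runs a Python snippet in a child process and kills it if it runs too long. This is not the DGM's sandbox and provides no isolation by itself (a real setup would add containers or similar); the function name `run_with_time_limit` and the returned dictionary shape are assumptions made for illustration.

```python
import subprocess
import sys


def run_with_time_limit(code: str, seconds: float) -> dict:
    """Run a Python snippet in a child process, stopping it after `seconds`."""
    try:
        done = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=seconds,  # the child is killed when this expires
        )
    except subprocess.TimeoutExpired:
        return {"status": "timed_out", "stdout": "", "returncode": None}
    return {
        "status": "completed",
        "stdout": done.stdout,
        "returncode": done.returncode,
    }


if __name__ == "__main__":
    print(run_with_time_limit("print('ok')", seconds=10))
    print(run_with_time_limit("while True: pass", seconds=0.5))
```

The second call shows the point of the limit: a self-modified agent that loops forever is cut off instead of consuming resources indefinitely.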