“Good news. My timelines have been lengthening slightly. So, I now feel like 2028, maybe even 2029, is better than 2027 as in terms of a guess as to when all this stuff is going to start happening. So I’m going to like do more thinking about that and publish more stuff on that.” — Daniel Kokotajlo

Future of Life Institute Podcast, 3 Jul 2025

On this episode, Daniel Kokotajlo joins me to discuss why artificial intelligence may surpass the transformative power of the Industrial Revolution, and just how much AI could accelerate AI research. We explore the implications of automated coding, the critical need for transparency in AI development, the prospect of AI-to-AI communication, and whether AI is an inherently risky technology. We end by discussing iterative forecasting and its role in anticipating AI’s future trajectory.

You can learn more about Daniel’s work at AI 2027 and the AI Futures Project.

Timestamps:

- 00:00:00 Preview and intro
- 00:00:50 Why AI will eclipse the Industrial Revolution
- 00:09:48 How much can AI speed up AI research?
- 00:16:13 Automated coding and diffusion
- 00:27:37 Transparency in AI development
- 00:34:52 Deploying AI internally
- 00:40:24 Communication between AIs
- 00:49:23 Is AI inherently risky?
- 00:59:54 Iterative forecasting

Learn more about AI 2027

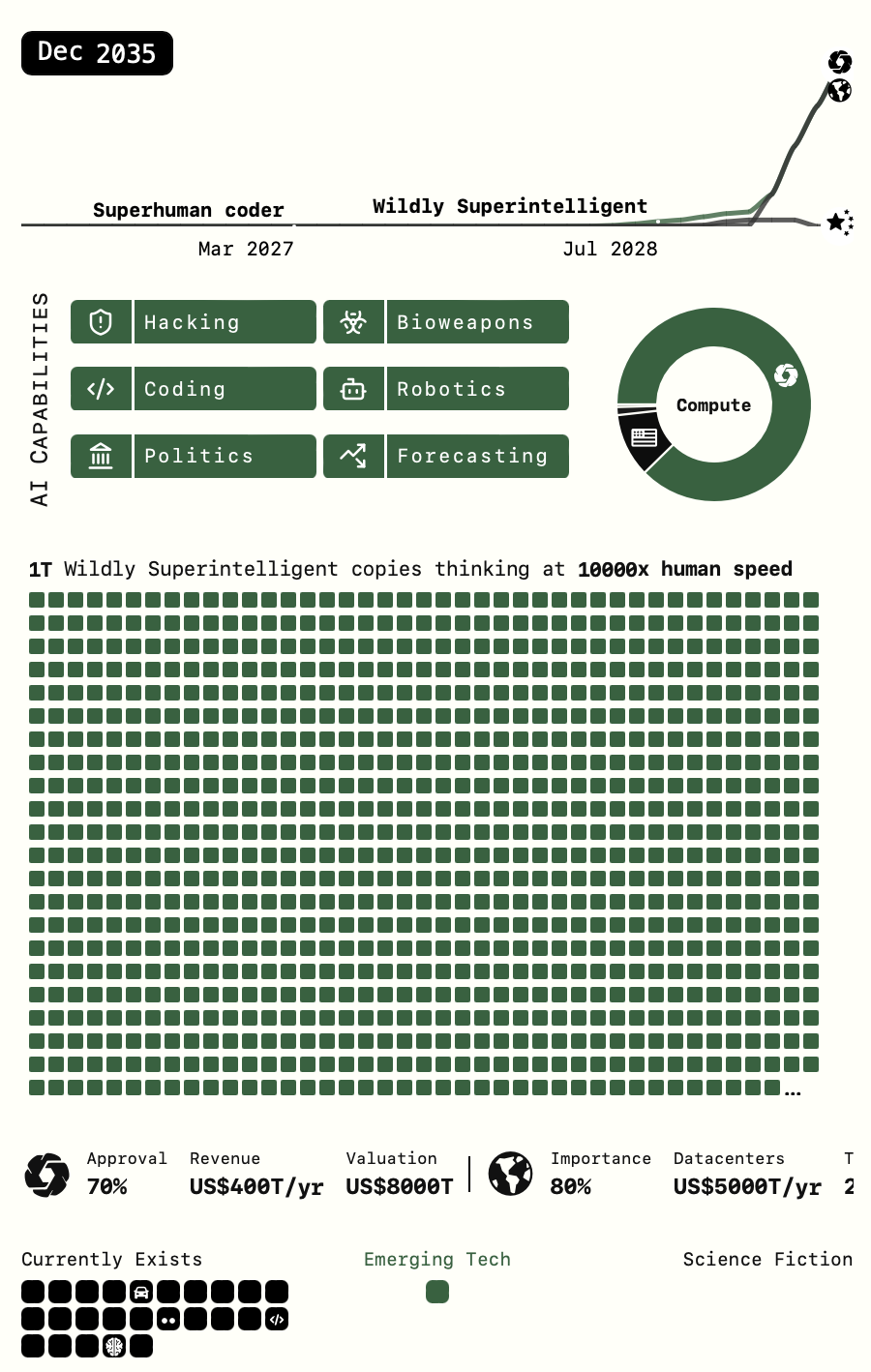

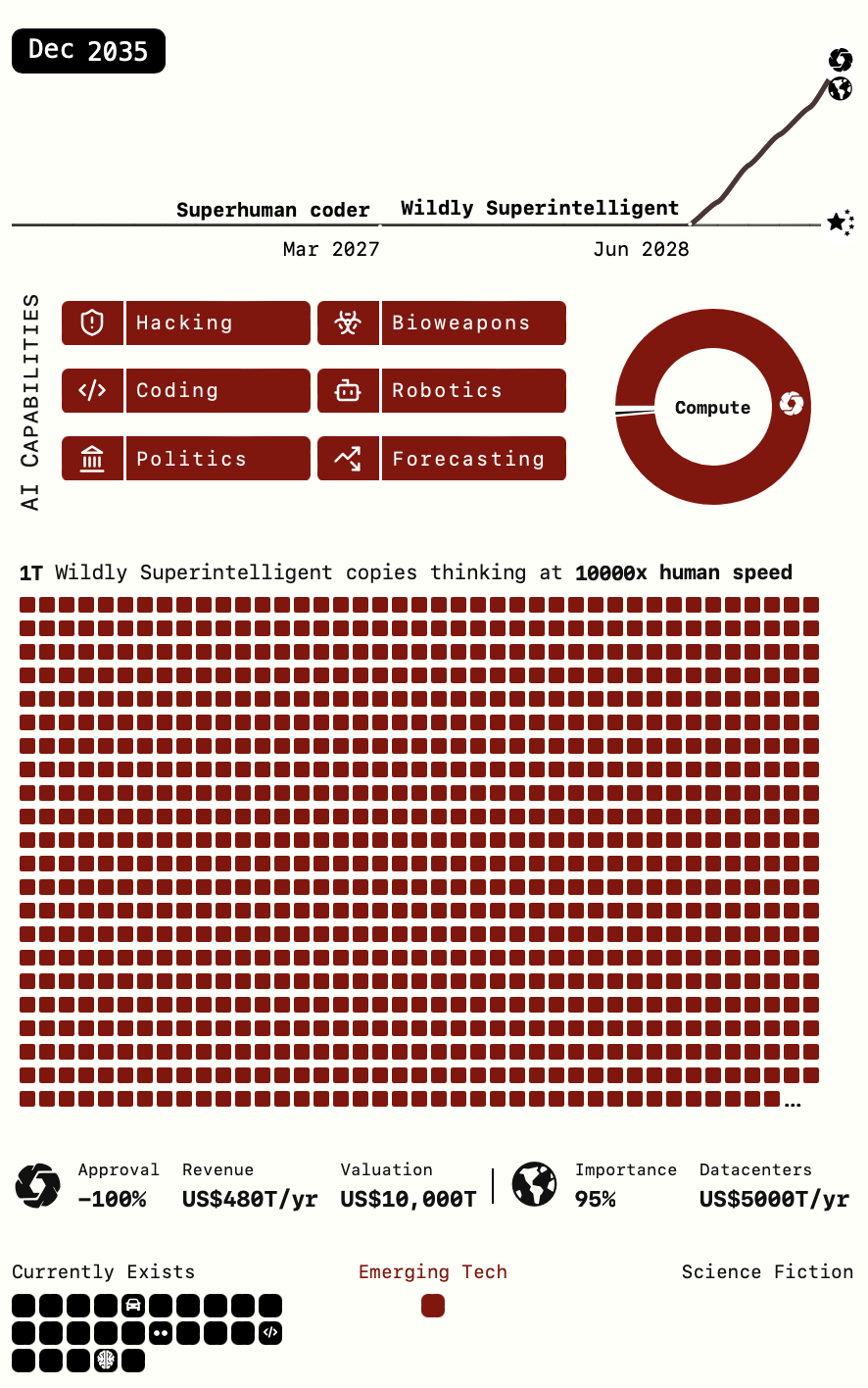

Scott Alexander and Daniel Kokotajlo break down every month from now until the 2027 intelligence explosion. Scott is the author of the highly influential blogs Slate Star Codex and Astral Codex Ten. Daniel resigned from OpenAI in 2024, rejecting a non-disparagement clause and risking millions in equity to speak out about AI safety. We discuss misaligned hive minds, Xi and Trump waking up, and automated Ilyas researching AI progress. I came in skeptical, but I learned a tremendous amount by bouncing my objections off of them. I highly recommend checking out their new scenario planning document (https://ai-2027.com/) and Daniel’s “What 2026 looks like,” written in 2021 (https://www.lesswrong.com/posts/6Xgy6…).

- Read the transcript: https://www.dwarkesh.com/p/scott-daniel
- Apple Podcasts: https://podcasts.apple.com/us/podcast…
- Spotify: https://open.spotify.com/show/4JH4tyb

Timestamps:

- (00:00:00) – AI 2027
- (00:07:45) – Forecasting 2025 and 2026
- (00:15:30) – Why LLMs aren’t making discoveries
- (00:25:22) – Debating intelligence explosion
- (00:50:34) – Can superintelligence actually transform science?
- (01:17:43) – Cultural evolution vs superintelligence
- (01:24:54) – Mid-2027 branch point
- (01:33:19) – Race with China
- (01:45:36) – Nationalization vs private anarchy
- (02:04:11) – Misalignment
- (02:15:41) – UBI, AI advisors, & human future
- (02:23:49) – Factory farming for digital minds
- (02:27:41) – Daniel leaving OpenAI
- (02:36:04) – Scott’s blogging advice

Learn more: The OpenAI Files

- Published June 18, 2025; last updated June 18, 2025

- The OpenAI Files is the most comprehensive collection to date of documented concerns with governance practices, leadership integrity, and organizational culture at OpenAI.

- Key Findings: our investigation has identified four major areas of concern:
  - Restructuring
  - CEO Integrity
  - Transparency & Safety
  - Conflicts of Interest