AI Safety Index by FLI. Summer 2025.

AI experts rate leading AI companies on key safety and security domains. Published 17 July 2025.

Key findings

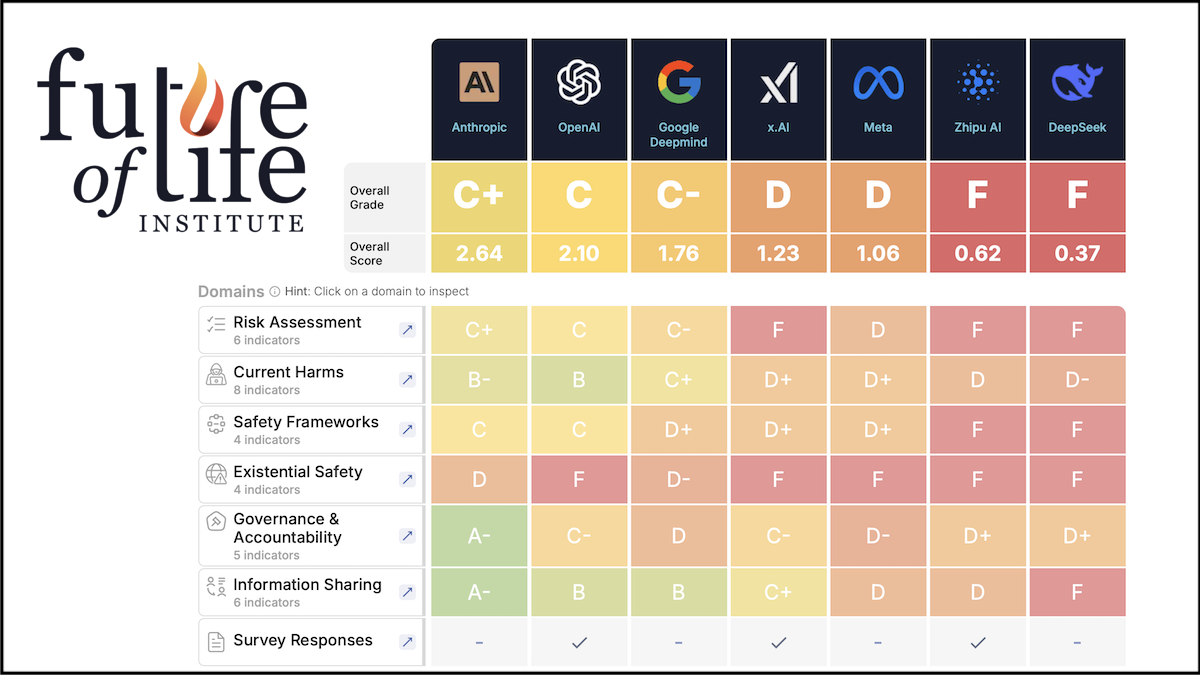

- Anthropic gets the best overall grade (C+). The firm led on risk assessments, conducting the only human participant bio-risk trials, excelled in privacy by not training on user data, conducted world-leading alignment research, delivered strong safety benchmark performance, and demonstrated governance commitment through its Public Benefit Corporation structure and proactive risk communication.

- OpenAI secured second place, ahead of Google DeepMind. OpenAI distinguished itself as the only company to publish its whistleblowing policy, outlined a more robust risk management approach in its safety framework, and assessed risks on pre-mitigation models. The company also shared more details on external model evaluations, provided a detailed model specification, regularly disclosed instances of malicious misuse, and engaged comprehensively with the AI Safety Index survey.

- The industry is fundamentally unprepared for its own stated goals. Companies claim they will achieve artificial general intelligence (AGI) within the decade, yet none scored above D in Existential Safety planning. One reviewer called this disconnect “deeply disturbing,” noting that despite racing toward human-level AI, “none of the companies has anything like a coherent, actionable plan” for ensuring such systems remain safe and controllable.

- Only 3 of 7 firms (Anthropic, OpenAI, and Google DeepMind) report substantive testing for dangerous capabilities linked to large-scale risks such as bio- or cyber-terrorism. While these leaders marginally improved the quality of their model cards, one reviewer warns that the underlying safety tests still miss basic risk-assessment standards: “The methodology/reasoning explicitly linking a given evaluation or experimental procedure to the risk, with limitations and qualifications, is usually absent. […] I have very low confidence that dangerous capabilities are being detected in time to prevent significant harm. Minimal overall investment in external 3rd party evaluations decreases my confidence further.”

- Capabilities are accelerating faster than risk-management practice, and the gap between firms is widening. With no common regulatory floor, a few motivated companies adopt stronger controls while others neglect basic safeguards, highlighting the inadequacy of voluntary pledges.

- Whistleblowing policy transparency remains a weak spot. Public whistleblowing policies are a common best practice in safety-critical industries because they enable external scrutiny. Yet, among the assessed companies, only OpenAI has published its full policy, and it did so only after media reports revealed the policy’s highly restrictive non-disparagement clauses.

- Chinese AI firms Zhipu.AI and DeepSeek received failing overall grades. However, the report scores companies on norms such as self-governance and information-sharing, which are far less prominent in Chinese corporate culture. Furthermore, as China already has regulations for advanced AI development, there is less reliance on AI safety self-governance. This is in contrast to the United States and the United Kingdom, where the other companies are based, and which have, as yet, passed no such regulation on frontier AI.