AWS activates Project Rainier: One of the world’s largest AI compute clusters comes online

The collaborative infrastructure innovation delivers nearly half a million Trainium2 chips in record time, with Anthropic scaling to more than one million chips by the end of 2025. AWS Artificial Intelligence Innovation Amazon Data Centers

Last updated: October 29, 2025

6 min read

- Project Rainier is now in use, featuring one of the world’s largest AI compute clusters with nearly half a million Trainium2 chips.

- AWS deployed this massive AI infrastructure project less than one year after it was first announced, with partner Anthropic already running workloads.

- Anthropic is actively using Project Rainier to build and deploy its industry-leading AI model, Claude, which AWS expects to be on more than 1 million Trainium2 chips by the end of 2025.

A mountain of compute

Chips chips chips

To deliver on this bold vision, Project Rainier is designed as a massive “EC2 UltraCluster of Trainium2 UltraServers.” The first part refers to Amazon Elastic Compute Cloud (EC2), an AWS service that lets customers rent virtual computers in the cloud rather than buying and maintaining their own physical servers.

From traditional to ultra

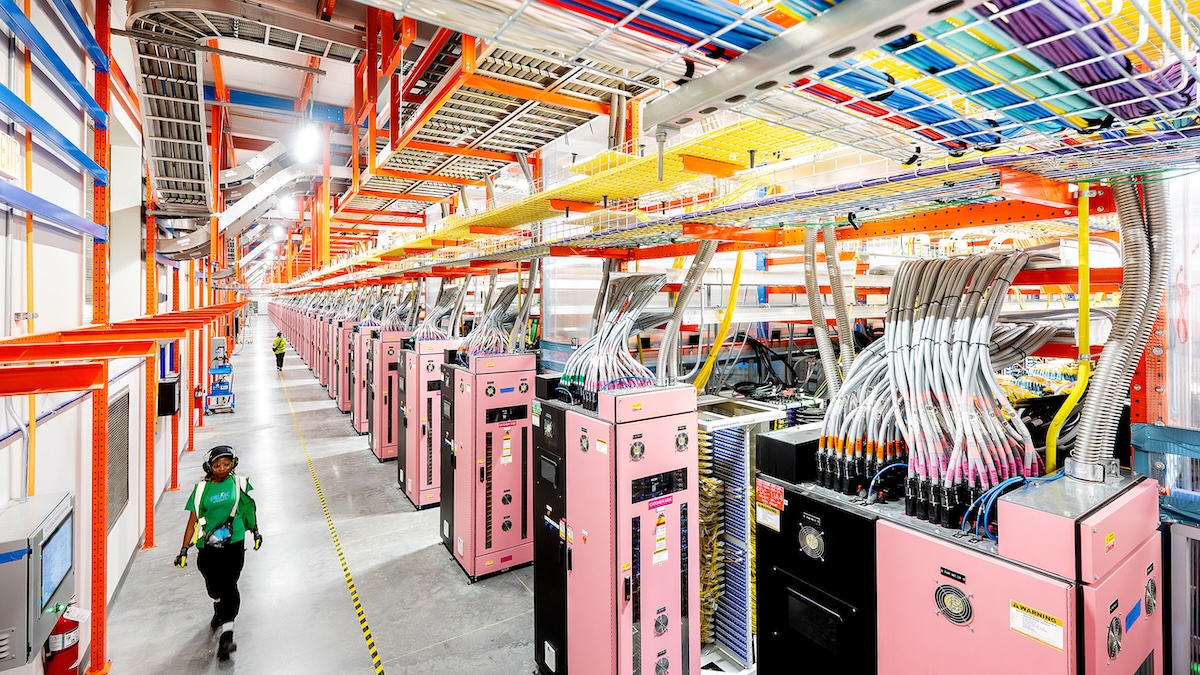

Traditionally, servers in a data center operate independently. If and when they need to share information, that data has to travel through external network switches. This introduces latency, which is not ideal at such large scale. AWS’s answer to this problem is the UltraServer. A new type of compute solution, an UltraServer combines four physical Trainium2 servers, each with 16 Trainium2 chips. They communicate via specialized high-speed connections called “NeuronLinks.” Identifiable by their distinctive blue cables, NeuronLinks are like dedicated express lanes, allowing data to move much faster within the system and significantly accelerating complex calculations across all 64 chips. When you connect tens of thousands of these UltraServers and point them all at the same problem, you get Project Rainier—a mega “UltraCluster.”

No room for failure

Controlling the stack

This vertical integration gives AWS a significant advantage in accelerating machine learning and reducing cost barriers to AI accessibility. With visibility across the entire stack—from chip design to software implementation to server architecture—AWS can optimize at precisely the right points in the system. Sometimes the solution lies in redesigning power delivery systems, sometimes in rewriting the software that coordinates the entire operation, and oftentimes in doing all of these solutions simultaneously. By maintaining comprehensive oversight of every component and system level, AWS can troubleshoot and innovate at pace.

Sustainability at scale

As the teams that run AWS’s data centers innovate quickly, they are also focused on improving energy efficiency—whether from rack layouts to electrical distribution to cooling techniques. When it comes to carbon-free energy use in data centers: all of the electricity consumed by Amazon’s operations, including its data centers, was matched 100% renewable energy resources in 2023 and 2024. The company is investing billions of dollars in nuclear power and battery storage, and in financing large-scale renewable energy projects around the world to power its operations. In fact, for the past five years Amazon has been the largest corporate purchaser of renewable energy in the world. The company is still on a path to be net-zero carbon by 2040. This goal remains unchanged by the addition of Project Rainier, and its continued worldwide growth in general. Last year AWS announced it would be rolling out new data center components that combine advances in power, cooling, and hardware, not only for data centers it’s currently building, but also in existing facilities. New data center components are projected to reduce mechanical energy consumption by up to 46% and reduce embodied carbon in the concrete used by 35%. The new sites the company is constructing to support Project Rainier and beyond will include a variety of upgrades for energy efficiency and sustainability. Some of these will have a strong focus on water stewardship. AWS engineers its facilities to use as little water as possible, and where possible none at all. One way it does this is by eliminating cooling water use in many of its facilities for most of the year, instead relying on outside air. For example, data centers in St. Joseph County, Indiana—one of the Project Rainier sites—will maximize the use of outside air for cooling. From October to March the data centers won’t use any water for cooling at all, while on an average day from April to September they’ll only use cooling water for a few hours per day. Thanks to engineering innovations like this, AWS leads the industry in water efficiency. Based on findings from a recent Lawrence Berkeley National Laboratory (LBNL) report on the data center industry’s water usage efficiency (WUE), the industry standard measure of how efficiently water is used inside data centers is 0.375 liters of water per kilowatt-hour. At 0.15 liters of water per kilowatt hour, AWS’s WUE is more than twice as good as than the industry average. It’s also a 40% improvement since 2021.

The future of AI

More about AWS innovation

- Look inside Annapurna Labs, where AWS designs custom chips, and explore the intricate world of AWS chips.

- Discover how Amazon is making its data centers more sustainable, through hardware and liquid cooling efficiency, reducing carbon emissions, and optimizing workloads.

- Learn about how AWS is already more than 50% of the way to being ‘water positive’ by 2030, meaning it will return more water to communities and the environment than it uses in its data center operations.

1 million Trainium2 chips = 1,300 EFLOPS in 15,625 racks

- Trainium2 Architecture 1,299 FP8 TFLOPS = 1.3 PFLOPS per chip

- 1 million chips / 64 chips per rack = 15,625 racks

- 1 million chips x 1.3 PFLOPS/chip = 1,300 EFLOPS (FP8)

- 473x more powerful than El Capitan at LBNL 2.746 exaFLOPS (Rpeak)