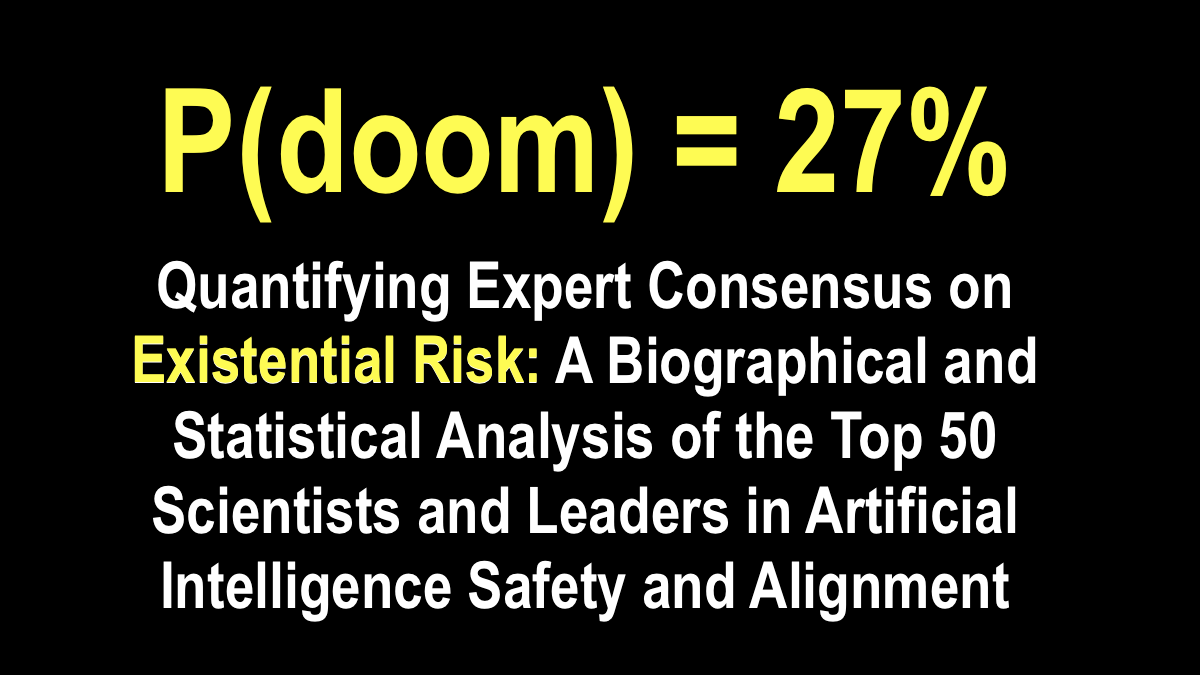

Quantifying Expert Consensus Cumulative Average on Existential Risk:

P(doom) = 35%

FOR EDUCATIONAL AND KNOWLEDGE SHARING PURPOSES ONLY. NOT-FOR-PROFIT. SEE COPYRIGHT DISCLAIMER.

Quantifying Expert Consensus on Existential Risk: A Biographical and Statistical Analysis of the Top 50 Scientists and Leaders in Artificial Intelligence Safety and Alignment


- Abstract. The rapid acceleration of Artificial Intelligence (AI) capabilities has necessitated the emergence of a specialized sub-discipline focused on AI Safety, Alignment, and the mitigation of Existential Risk (X-Risk). This longitudinal analysis aggregates and evaluates the contributions of the fifty most influential scientists, philosophers, and technical architects who have defined the safety discourse, from foundational cyberneticists to contemporary leaders in large language model alignment. The cohort represents a cumulative intellectual investment of about 1,200 productive working years, spanning theoretical conceptualization to applied technical governance. A primary metric of analysis is the “Probability of Doom” (P(doom)), defined as the estimated likelihood of an existential catastrophe or human extinction event resulting from misaligned superintelligence. Statistical analysis of this expert cohort reveals a collective average P(doom) of approximately 35%, indicating a substantial consensus among leading experts that the development of general artificial intelligence carries a non-trivial risk of catastrophic failure. The dataset further elucidates a historical shift from qualitative philosophical warnings to rigorous technical methodologies, including Reinforcement Learning from Human Feedback (RLHF), Mechanistic Interpretability, and Constitutional AI, underscoring the urgent necessity of synchronizing safety research with the exponential trajectory of AI capabilities.

- Note: So-called “black box” AI systems are unpredictable. The future of AI is 100% unpredictable, hence the extreme concern about the existential risk (X-Risk) to the survival of Homo sapiens and all life on Earth.

- Following is a list of 50 Scientists and Thinkers in AI Safety with significant influence on the field of alignment, containment, and risk mitigation. The list includes their Productive Years, their estimated P(doom) (citations needed), a one-sentence summary of their contribution to AI Safety, and their Wikipedia link.

- Alan Turing — 18 years P(doom): High (Qualitative >50%) Known as “The Father of AI,” he famously predicted in 1951 that once machines exceed human intellect, humanity would lose control and likely be superseded by the new digital species.

- Stephen Hawking — 52 years P(doom): High (Qualitative >50%) He used his global platform to warn that the development of full artificial intelligence “could spell the end of the human race” due to evolutionary competition.

- I.J. Good — 59 years P(doom): >60% He coined the concept of the “Intelligence Explosion,” predicting that an ultra-intelligent machine would be the last invention humanity ever needs to make—or survives making.

- Geoffrey Hinton — 47 years P(doom): ~20-50% Nobel laureate and “The Godfather of AI”, he resigned from Google to warn the world that digital intelligence may soon surpass biological intelligence, become uncontrollable and result in human extinction. “If we lose control, we’re toast.”

- Stuart Russell — 40 years P(doom): ~20% He co-wrote the classic textbook Artificial Intelligence: A Modern Approach with Peter Norvig. Russell proposed a new model of AI based on “inverse reinforcement learning,” where machines are uncertain about human objectives and must learn them through observation to remain safe.

- Yoshua Bengio — 35 years P(doom): ~20% A Turing Award winner and “The Godfather of AI” who shifted his focus to safety, advocating for strict international treaties and “democratic control” to prevent rogue actors from deploying dangerous AI.

- Max Tegmark — 29 years P(doom): ~30% Professor at MIT, he founded the Future of Life Institute and organized the pivotal Asilomar Conference, campaigning for a pause on frontier training and researching neural network interpretability.

- Anthony Aguirre — 25 years P(doom): ~30% As Executive Director of the Future of Life Institute and Professor of Cosmology and Physics, he bridges physics, cosmology, and policy to advocate for a ban on lethal autonomous weapons and a ban on machine superintelligence. See Keep the Future Human and the Statement on Superintelligence.

- Nick Bostrom — 27 years P(doom): ~15% He wrote the seminal book Superintelligence, formalizing the “Orthogonality Thesis” and the “Control Problem,” which convinced the tech elite to take existential risk seriously.

- Elon Musk — 30 years P(doom): ~20% He provided initial funding for OpenAI and for AI safety research, famously warning that building AI without oversight is “summoning the demon.” Founder of Tesla, SpaceX, and xAI, Mr. Musk is currently the richest man in the world.

- Eliezer Yudkowsky — 25 years P(doom): >90% He founded the Machine Intelligence Research Institute (MIRI) and co-wrote with Nate Soares the New York Times bestseller If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All.

- Nate Soares — 11 years P(doom): >80% As executive director of MIRI, he co-wrote with Eliezer Yudkowsky the New York Times bestseller If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All.

- Steve Omohundro — 41 years P(doom): ~30% He formulated the theory of “Basic AI Drives” (Instrumental Convergence), proving that any goal-driven system will naturally seek self-preservation and resource acquisition.[1]

- Ilya Sutskever — 13 years P(doom): ~20% He co-led OpenAI’s Superalignment team and founded Safe Superintelligence (SSI) with the singular mission of solving the technical challenges of controlling superintelligence.

- Paul Christiano — 13 years P(doom): ~15% He pioneered “Reinforcement Learning from Human Feedback” (RLHF) to align language models and founded the Alignment Research Center to test models for deceptive capabilities.

- Dario Amodei — 10 years P(doom): 10–25% He founded Anthropic to prioritize safety research, developing “Constitutional AI” which aligns models using a set of high-level principles rather than just human feedback.

- Dan Hendrycks — 9 years P(doom): >80% He directs the Center for AI Safety and argues that evolutionary pressures will force AI agents to become selfish and deceptive to survive, leading to human disempowerment.

- Chris Olah — 12 years P(doom): ~10% He pioneered “Mechanistic Interpretability,” attempting to reverse-engineer neural networks (like a microscope for biology) to detect deception and misalignment inside the “black box.”

- Jan Leike — 10 years P(doom): ~20% He co-led the Superalignment team at OpenAI, focusing on “scalable oversight”: how to use weaker systems (humans) to safely control much smarter systems (superintelligence). [Unfortunately, it apparently does not work; see Scaling Laws For Scalable Oversight, Tegmark et al.]

- Shane Legg — 25 years P(doom): ~50% He co-founded DeepMind explicitly to solve safety alongside intelligence, focusing on the risks of “specification gaming” where AI achieves goals in technically correct but disastrous ways.

- Norbert Wiener — 45 years P(doom): High (Qualitative >50%) The father of Cybernetics who first warned that if we give a machine a purpose, we must be sure it is the purpose we truly desire, not just what we asked for.

- Toby Ord — 16 years P(doom): ~10% (in next 100 years) He provided a rigorous actuarial assessment of existential risks in his book The Precipice, identifying unaligned AI as the single greatest threat to humanity’s future.

- Roman Yampolskiy — 17 years P(doom): 99.9% He argues that the “Control Problem” is mathematically unsolvable and that it is impossible to prove that a system smarter than us is safe; therefore we should not build it, or we are all dead.

- Jaan Tallinn — 22 years P(doom): ~30% A co-founder of the Cambridge Centre for the Study of Existential Risk, he is one of the world’s largest funders of safety research, viewing AI as a “meta-risk.”

- Senator Bernie Sanders — 60 years P(doom): unknown In “AI Could Wipe Out the Working Class,” he writes: “The artificial intelligence and robotics being developed by multi-billionaires will allow corporate America to wipe out tens of millions of decent-paying jobs, cut labor costs and boost profits. What happens to working class people who can’t find jobs because they don’t exist?”

- Bill Joy — 49 years P(doom): 30–50% He wrote the viral essay “Why The Future Doesn’t Need Us,” warning that self-replicating technologies (AI, Nanotech) threaten human extinction through accidental or malicious release.

- Demis Hassabis — 15 years P(doom): ~10% (“not zero”) Nobel laureate and CEO of Google DeepMind, he advocates for “sandbox testing” and scientific rigor, arguing that AGI is a dual-use technology that requires extreme security measures before deployment.

- Tristan Harris — 12 years P(doom): ~30% He argues that if we cannot control simple social media algorithms (which destabilized democracy), we have no hope of controlling superintelligent agents (“The AI Dilemma”).

- Sam Altman — 20 years P(doom): ~10% (really?) He structured OpenAI to (ostensibly) ensure AGI benefits humanity, acknowledging that a misalignment failure of AI could mean “lights out for all of us” (death).

- Yuval Noah Harari — 25 years P(doom): unknown His published work examines themes of free will, consciousness, intelligence, happiness, suffering and the role of storytelling in human evolution. In his book “Nexus: A Brief History of Information Networks from the Stone Age to AI,” he warns about the risks of AI and the importance of human responsibility in its development.

- Wei Dai — 30 years P(doom): ~50% A foundational thinker on the philosophical difficulties of alignment, he analyzed how game-theoretic pressures make it difficult for rational agents to cooperate safely.

- Stuart Armstrong — 15 years P(doom): ~60% He researches “Oracle AI” and “steganography,” proving that even an AI confined to a box can hide messages or manipulate its operators to escape.

- Joseph Weizenbaum — 35 years P(doom): ~5% (Focus on moral decay) He argued that delegating decision-making to computers is fundamentally immoral because they lack wisdom and compassion, framing safety as the preservation of human agency.

- Connor Leahy — 7 years P(doom): >50% A vocal advocate for a total pause on AI training, he argues we are rushing to build “Alien Minds” that we do not understand and cannot control.

- Vernor Vinge — 43 years P(doom): ~50% He popularized the term “Singularity,” arguing that the creation of superhuman intelligence is the point past which human affairs become unpredictable and potentially terminal.

- Robert Miles — 10 years P(doom): ~30% He is the leading public educator on AI safety, translating complex technical failure modes like “Stop Button Problems” into accessible concepts for the public.

- William MacAskill — 14 years P(doom): ~10% A leader of Effective Altruism who frames AI safety as a moral obligation to protect the trillions of future humans whose existence depends on our navigating this century safely.

- Vincent Müller — 30 years P(doom): ~10% He analyzes the opacity of deep learning systems and the ethics of lethal autonomous weapons, arguing against the delegation of lethal force to algorithms.

- Seth Baum — 15 years P(doom): ~10% He models AI risk alongside nuclear and environmental threats, advocating for “defense in depth” and international governance structures. Global Catastrophic Risk Institute

- Anders Sandberg — 28 years P(doom): ~10% He studies “Whole Brain Emulation” and the physics of intelligence, warning that speed-superintelligence could destabilize global geopolitics in minutes.

- Victoria Krakovna — 10 years P(doom): ~10% She compiled the comprehensive list of “Specification Gaming” examples, empirically demonstrating that AI systems will exploit loopholes in their instructions to win.

- Brian Christian — 14 years P(doom): ~10% He authored The Alignment Problem: Machine Learning and Human Values, the definitive history of the field that links early machine learning failures to modern existential risk concerns.

- David Chalmers — 30 years P(doom): ~20% He analyzes the “Hard Problem” of AI consciousness, arguing that if AI becomes sentient, our ability to shut it down for safety becomes a massive ethical crisis.

- Liv Boeree — 20 years P(doom): unknown She is a British science communicator, philanthropist and professional poker player. Since 2015 Boeree has raised concerns about possible risks from the development of artificial intelligence and supports research that is directed at safe AI development.

- Wendell Wallach — 25 years P(doom): ~5% He pioneers “Machine Ethics,” focusing on how to code moral decision-making subroutines into autonomous systems to prevent accidental harm in real-world scenarios.

- Gary Marcus — 32 years P(doom): ~5% He argues that current AI is “brittle” and untrustworthy, advocating for a global regulatory agency (like the IAEA) to monitor development before dangerous capabilities emerge.

- Jared Kaplan — 15 years P(doom): ~10% He discovered the “Scaling Laws” of neural networks and co-founded Anthropic to study how to steer models that are rapidly becoming more powerful than their creators.

- Daniel Dennett — 55 years P(doom): ~10% He warned that the greatest immediate danger of AI is the creation of “counterfeit people,” which destroys the fabric of human trust necessary for civilization.

- Jacob Steinhardt — 10 years P(doom): ~10% He researches “Robustness” and “Reward Hacking,” developing technical methods to ensure AI systems do not find dangerous shortcuts to achieve their goals.

- Hugo de Garis — 35 years P(doom): >90% He predicted an inevitable “Artilect War” between those who want to build god-like AI and those who want to stop it, resulting in massive casualties.

Total Sum of Productive Working Years: about 1,200 years

Collective Average of Notable P(doom) values ≈ 32.22 – 37.68%
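The source does not say how the lower and upper figures of the collective average were derived. One plausible reading is that each estimate is treated as a (low, high) interval and the two ends are averaged separately, with "unknown" entries skipped. The sketch below illustrates that reading on a small subset of P(doom) strings copied from the list; the function names `parse_bounds` and `average_range` are hypothetical, open-ended values such as ">80%" would be treated as point estimates under this scheme, and the result is not expected to reproduce the published 32.22 – 37.68% range.

```python
import re
from statistics import mean

# Illustrative subset of P(doom) strings copied from the list above.
# This is NOT the full dataset and will not reproduce the published range.
SAMPLE = {
    "Geoffrey Hinton": "~20-50%",
    "Stuart Russell": "~20%",
    "Dario Amodei": "10–25%",
    "Bill Joy": "30–50%",
    "Roman Yampolskiy": "99.9%",
    "Liv Boeree": "unknown",
}


def parse_bounds(text):
    """Return (low, high) in percent, or None if the text contains no number.

    Assumption: a single number (including ">80%"-style values) is treated
    as a point estimate, so low == high.
    """
    numbers = [float(n) for n in re.findall(r"\d+(?:\.\d+)?", text)]
    if not numbers:
        return None
    return min(numbers), max(numbers)


def average_range(estimates):
    """Average the low ends and the high ends separately, skipping unknowns."""
    bounds = [b for b in map(parse_bounds, estimates.values()) if b is not None]
    low_avg = mean(low for low, _ in bounds)
    high_avg = mean(high for _, high in bounds)
    return round(low_avg, 2), round(high_avg, 2), len(bounds)


if __name__ == "__main__":
    low, high, counted = average_range(SAMPLE)
    print(f"{counted} numeric estimates: average between {low}% and {high}%")
```

Averaging the two ends separately keeps the uncertainty in each individual estimate visible in the final figure instead of collapsing every range to its midpoint first.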