AGI (and the ASI Intelligence Explosion) is closer than you think… a lot closer.

It could be already happening. Seriously.

AI models are now writing code and creating synthetic data (knowledge) for the next generation of AI models.

Meta-cognition is an emergent behavior, which means the machine understands itself and can improve itself with synthetic code and content.

Understanding mathematics means understanding everything.

Self-improvement coding + synthetic data + self-play + self-learning + scale = Intelligence Explosion.

Learn more about Q-learning and the Q* (Q-star) breakthrough at OpenAI:

- The Q* hypothesis: Tree-of-thoughts reasoning, process reward models, and supercharging synthetic data | Nov. 24, 2023. Nathan Lambert

- Q* Did OpenAI Achieve AGI? OpenAI Researchers Warn Board of Q-Star | Caused Sam Altman to be Fired? | Video Nov. 23, 2023. Wes Roth

- OpenAi’s New Q* (Qstar) Breakthrough Explained For Beginners (GPT- 5) | Video Nov. 24, 2023. TheAIGRID

- OpenAI’s Q* is the BIGGEST thing since Word2Vec… and possibly MUCH bigger – AGI is definitely near. | Video Nov. 24, 2023. David Shapiro

- What is Q-Learning (back to basics) | Video Nov. 25, 2023. Yannic Kilcher

- OpenAI Q* might be REVOLUTIONARY AI TECH | Biggest thing since Transformers | Researchers *spooked* | Video Nov. 26, 2023. Wes Roth

- What Is Q*? The Leaked AGI BREAKTHROUGH That Almost Killed OpenAI | Video Nov. 27, 2023. Matthew Berman

- Q* why AI that is “Good at Math” a “Threat to Humanity”? | Q star. | Video Nov. 28, 2023. Wes Roth

- Introducing Amazon Q, a new generative AI-powered assistant (preview) | Video Nov. 28, 2023. AWS

- DEBUNKED. What if Q* broke cybersecurity? How would we adapt? Deep dive! P≠NP? Here’s why it’s probably fine… | Video Nov. 29, 2023. David Shapiro

- Synthetic data: Anthropic’s CAI, from fine-tuning to pretraining, OpenAI’s Superalignment, tips, types, and open examples | Nov. 29, 2023. Nathan Lambert

- Sam Altman Comments on Q* | Self Operating Computer | Pika 1.0 | Video Nov. 30, 2023. Wes Roth

- GEMINI 1.0 Beats GPT-4 | AlphaCode 2 is better than 90% of coders | Gemini Nano on Pixel Phones! | Video Nov. 30, 2023. Wes Roth

- DeepMind’s GNoME Creates Materials | Schmidhuber Claims Q* | TLDRAW is out of this world! | Video Nov. 30, 2023. Wes Roth

- Sam Altman’s Q* Reveal, OpenAI Updates, Elon: “3 Years Until AGI”, and Synthetic Data Predictions | Video Dec. 1, 2023. Matthew Berman

- Open AI Q* (Q-STAR) Exposed – NEW Hidden Details Of Q* | Video Dec. 3, 2023. TheAIGRID

- P vs. NP: The Greatest Unsolved Problem in Computer Science – Quanta Magazine

- POST: LLM Emergent Behavior: Q* is Learning Mathematics. A worrying development AND a promising power. (But FIRST DO NO HARM)
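
For readers new to the Q-learning mentioned in the links above, here is a minimal sketch of the textbook tabular algorithm. It is not OpenAI's Q*, whose details have not been published. The corridor environment, the function name `q_learning`, and all parameter values are hypothetical, chosen only to keep the example small.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor (illustration only).

    States are 0..n_states-1; actions are 0 (left) and 1 (right).
    Reaching the last state gives reward 1 and ends the episode.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore sometimes (or on ties), else act greedily.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            # Moving left from state 0 keeps the agent in state 0.
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            best_next = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q


if __name__ == "__main__":
    for s, (left, right) in enumerate(q_learning()):
        print(f"state {s}: left={left:.3f} right={right:.3f}")
```

After training, the "right" value should end up higher than the "left" value in every non-terminal state, which is the learned policy of walking toward the reward.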

References for convenience…

- STaR: Bootstrapping Reasoning With Reasoning | March 22, 2022. Google Research

- A* Search Without Expansions: Learning Heuristic Functions with Deep Q-Networks – UC Irvine

- Dota 2 with Large Scale Deep Reinforcement Learning | Dec. 13, 2019. OpenAI

- TaskWeaver: A Code-First Agent Framework (paper and GitHub) | Nov. 29, 2023. Microsoft

- Deep Transformer Q-Networks for Partially Observable Reinforcement Learning. | June 2, 2022. Esslinger et al.

- Minecraft AI – NVIDIA uses GPT-4 to create a SELF-IMPROVING autonomous agent. | Video May 28, 2023. Wes Roth

- Let’s Verify Step by Step | May 31, 2023. OpenAI

- Orca 2: Teaching Small Language Models How to Reason. | Nov. 20, 2023. Microsoft

- The Mind-Blowing GROK: Elon Musk’s Brain-Inspired AI Chatbot | Video Dec. 2, 2023. AI Junkies

- Orca 2. GIANT Breakthrough For AI Logic/Reasoning | Video Dec. 5, 2023. Matthew Berman

- Googles ALPHACODE-2 Just SHOCKED The ENTIRE INDUSTRY! Full Breakdown + Technical Report | Video Dec. 7, 2023. TheAIGRID

- The Secret To AGI – Synthetic Data | Video Dec. 10, 2023. TheAIGRID

- Textbooks [synthetic data] Are All You Need | June 20, 2023. Microsoft Research

- P vs. NP: The Greatest Unsolved Problem in Computer Science | Video Dec. 1, 2023. Quanta Magazine

- New AI Agents, Quantum Computing, Upgrades, AI Upscale, Text To Video (Major AI NEWS#20) | Video Dec. 8, 2023. TheAIGRID

Recent example tweets that are certainly worrying…

Yann LeCun is calling the list of scientists and founders below “idiots” for saying extinction risk from AI should be a global priority. Using insults to make a point is a bad sign for the point… plus Hinton, Bengio, and Sutskever are the most cited AI researchers in history:… https://t.co/494pSP2BmR pic.twitter.com/6Ina0OHEVj

— Andrew Critch (@AndrewCritchPhD) November 29, 2023

The Great Programmer Layoffs Are Coming

Google’s new AlphaCode 2 now beats 85% of human programmers.

Last year’s AlphaCode was median human level.

Yes, in just 1 year, AI went from outperforming 46% to 85% of coders. Sit with that.

And it’s actually crazier than that, because… https://t.co/rs7yiD422J pic.twitter.com/l2yKjIPMmQ

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) December 7, 2023

The AI discourse is appalling.

The for-profit side, with billions of dollars of ACTUAL financial interests in AI, is trying to frame the tiny nonprofit side as grifters.

Marc Andreessen, a literal billionaire with bags filled with AI investments, has the gall to accuse tiny… https://t.co/TdjiD0msoE pic.twitter.com/XDkOBBpBe7

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) December 6, 2023

Skeptics just one year ago: don’t worry, AGI is 100 years away

Skeptics now: don’t worry, AGI is at least 3-5 years away

Have you stopped to think about what this means?

The world as we know it really may end in the next few years.

Americans are now 5 to 1 (!) in favor of… https://t.co/8OWYlV5Z2V pic.twitter.com/eEcU5837TN

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) December 4, 2023

Arm CEO: I’m losing sleep worried about losing control of AI. We need a kill switch.

Why his opinion matters: Arm is one of the biggest semiconductor companies in the world.

He was asked what keeps him up at night, and he said AI:

“The thing I worry about most is humans… https://t.co/cG24dHcgfl pic.twitter.com/OvKiVY4CZk

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) December 10, 2023

Vitalik Buterin: AI has a serious chance of becoming the new apex species

“A lot of dismissive takes I’ve seen about AI come from the perspective that it is “just another technology”

But there is a different way to think about AI: it’s a new type of mind that is rapidly gaining… https://t.co/zXpQxb7EcA pic.twitter.com/epzhhgTj9q

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) December 6, 2023

Inflection CEO @MustafaSuleyman:

“I still find it mindblowing that people don’t seem to be fully grasping the trajectory that we’re on.

I mean, we are going to produce a replacement to cognitive manual labor in the next couple of years.”

He’s worried about political chaos: “If… https://t.co/1NToWEVp8o pic.twitter.com/ige3oy1C8S

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) December 5, 2023

The AI Job Tsunami is coming

If you have one of these jobs, you may have just 6-24 months left before your career is over:

Graphic designers

Copywriters

Translators

Voice Artists

Creative Writers

Product photographers

Videographers

Photo Editors

Video Editors

Audio Mastering… https://t.co/T8sx7bATFA pic.twitter.com/lxg7owDhfT

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) November 24, 2023

.@sama: “I am definitely worried about the impact that AI is going to have on the election.”

NOT DEEPFAKES: “The main worry I have is not one that gets airtime.”

“The thing I’m really worried about is customized, one-on-one persuasion ability.”

“A foreign adversary that has… https://t.co/pojIpX0JjV pic.twitter.com/HHTl9isybu

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) December 4, 2023

Elon Musk: We’re less than 3 years from AGI, AI that “writes novels as good as JK Rowling, discovers new physics, invents new technology”

“We should be concerned [about why Ilya fired Sam]…a serious thing. I don’t think it was trivial. I’m quite concerned there’s some dangerous… https://t.co/B5fNAGegpO

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) November 30, 2023

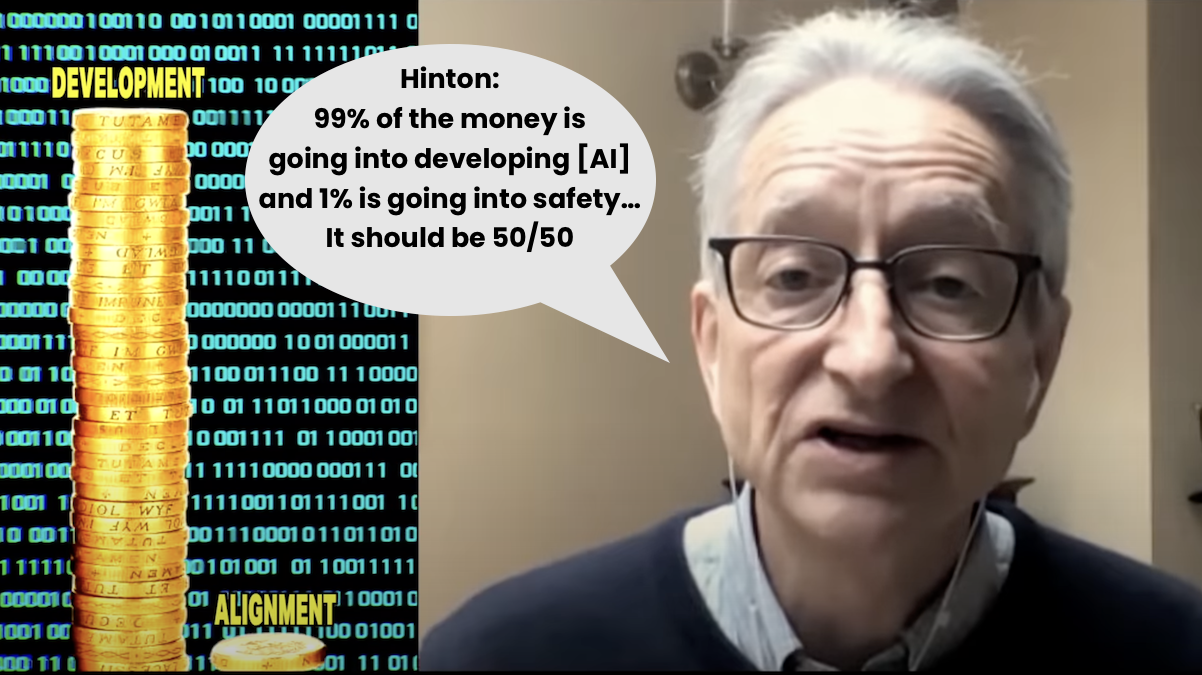

Question: Can this be true?

“The AI safety field is a FANTASTIC example of organic growth – it was just Eliezer and a few dozen nerds for like two decades. Now there are a few hundred nerds, funded by donations from other nerds. That’s it. That’s Big AI Safety”

Answer: Basically, yes.