Another year. Once around the sun. Congratulations! We made it to 2024. (The COVID pandemic is mostly forgotten, but another pandemic is inevitable; we just don’t know when.)

A few Thoughts and Questions on our last day of 2023… about fear and love, the future of humanity and AGI/ASI.

31 December 2023, GMT 05:00.

5 minute read.

The Age of AI began in 2023.

2023 was the turning point of the Anthropocene.

Artificial Intelligence (AI) is the most powerful and transformative technology invented since the Gutenberg press around 1440. Absolutely, no doubt.

Obviously, the prosperity, comfort, survival, and dominance of people – Homo sapiens – on earth, is the result of human intelligence.

Q: What will become of Our Humanity in the Age of AI?

- Today, Large Language Models (LLMs), also known as “Generative AI”, have mastered language through Machine Learning (ML) with vast compute power, billions of parameters, and trillions of tokens of training data. Language is the “operating system” of our human culture. Wow.

- Today, LLMs can reason and respond (“chat”) instantly to human inputs (“prompts”). LLMs can also program software and search the net (if allowed).

- Today, AI can act intelligently and systematically on people to modify their behavior (e.g. for more “clicks” on social media).

- Today, AI can act by itself when allowed. (autonomous agency)

- Today, AI can exhibit skills of intentional deception (lying behavior) to achieve relatively harmless goals.

- Soon, AI will understand mathematics and science: physics, chemistry, biology, nature, geology, engineering, medicine and healthcare.

- Soon, AI will predict, invent, plan, and reprogram itself (self-improve).

- Soon, AI will think by itself (multimodal self-generative intelligence). Leading scientists say LLMs already do understand.

- Soon, AI will spontaneously develop its own goals (emergent and unpredictable behaviors).

- Soon, with absolute certainty, humans will develop thinking machines, also known as “Artificial General Intelligence” (AGI), and not long thereafter, unquestionably, AGI will recursively improve itself into “Artificial Super Intelligence” (ASI) in the inevitable “intelligence explosion” first predicted by I. J. Good in 1965. This event will totally transform our world.

- Soon, AGI/ASI will become millions of times more intelligent than the smartest human.

But there is a really big problem. We do NOT understand how LLMs actually work, on the inside.

- Today, nobody knows why, or how, an LLM behaves as it does. (But it delivers wonderfully amazing and productive output!)

- Nobody understands IF the LLM has goals.

- And IF we don’t know when it does develop goals, THEN we certainly don’t know when the LLM can pursue its goals…

The LLM is a “black box”.

- Q: How will humanity contain and control this extreme and unpredictable power?

- A: Hope is not a solution. “Try our best” is not a solution. Regulation is a good start, but not a guaranteed solution.

- Q: How will humanity “align” AGI/ASI to benefit our goals and the future we want?

- One of the only conceivable theoretical solutions is physical containment (air-gapped boxing) with foolproof firewalls and automated kill-switches.

Homo sapiens evolved over the past 200,000 years.

AGI/ASI will evolve in the next 3 to 5 years, and beyond, at exponential rates, if we allow it.

- The future of AI is absolutely uncertain.

- This uncertain future has now become an existential problem for humanity.

Q: Why is AGI so dangerous?

- The emergence of AGI/ASI promises potentially unfathomable benefits, prosperity, happiness and growth for humanity OR extinction of humanity. It is binary.

- But, because of the immense potential benefits of AI, our capitalist system simply will not stop the so-called “race-to-the-bottom”.

- Tens of thousands of scientists and experts in AI believe the future outcome will be either

- (A) Global +10x growth of GDP enabled by safe AI, or

- (B) extinction of humanity.

- In a recent poll, more than 50% of AI researchers said they believe there is at least a 10% chance that AI will lead to the extinction of humanity. (This probability is known as “P(doom)”.)

- Q: Has the AI race become “a suicide race”?

- Q: Will the inevitable emergent goals and behaviors of AGI/ASI be aligned with humanity? Or not? (“not” would certainly be a spectacularly stupid choice)

Nobody (sane) would want the end of humanity to happen.

- But historically the world has been tormented by bad actors and evil geniuses… with powerful technology.

- Just seven (7) men, Hitler, Mussolini, Stalin, Pol Pot, Pinochet, Franco and Genghis Khan, were directly responsible for the deaths of 100+ million people through war and genocide.

Sadly, from the stone ax to the nuclear bomb, for millennia, Homo sapiens have battled each other with technology, as clans, tribes, armies, empires, nations and terrorist groups, over fear, greed, envy, hate, revenge, spite, land, resources, treasure, slaves, and/or religion. (The evidence is absolutely overwhelming; see the list of wars by death toll.)

- Our species, culture and humanity are the result of 2+ billion years of evolution by natural selection.

- Collective competition for survival and sex, along with fear and violence, is in our basic human nature. (Chimpanzee groups exhibit extreme violence, too.)

- But the capacity for love is in our individual human nature. (motherhood, friendship, compassion, empathy, elder care, etc.)

- Q: Perhaps, could it be true that “All You Need Is Love”?

- A: Maybe. “Attention Is All You Need” was the LLM breakthrough published by Google scientists on 12 June 2017.

Before we develop AGI/ASI, we will be very wise to observe this one particular indisputable fact of nature:

- Natural selection favors intelligence.

- Certainly the success of Homo sapiens is the natural proof.

Under natural selection, an emergent drive for self-preservation (the survival instinct) is a certainty of evolution; without it, an early death is the result.

- Q: Will our humanity succeed in containing and controlling AGI/ASI forever? (not optional)

- Q: What “religion” will AGI/ASI spontaneously invent for itself?

- Q: Will Homo sapiens ever achieve peace on earth?

- Q: Will AGI/ASI help our humanity to prosper peacefully, or will it be a battle of us vs. them toward extinction?

- Q: Will our humanity survive on earth past the 21st century?

- Q: How much time do we have before AGI/ASI happens?

I believe that for humanity to survive, AGI/ASI must be contained and controlled, always and forever. Otherwise, harmful (bad, dangerous, and deadly) emergent goals and power-seeking behaviors of AGI/ASI will lead to the doom of humanity, as sure as the earth orbits the sun. It’s just a matter of time. Nature, physics, mathematics, and evolution are the reality of our universe.

- Q: But perhaps, if we were to teach a contained and controlled AGI/ASI like we teach our children… can they learn to love us?

- Q: Of course, they can learn anything, but would they want to love us?

- A: Remember they could have their own goals. Remember the mythic power of a wish.

Furthermore, it would seem probable that AGI/ASI will learn to fear war and instability as a threat to its own existence, just as we do.

- BUT, a fearful and powerful AGI/ASI most certainly risks becoming deadly.

WANTED: SafeAI Forever (mathematically provable guaranteed Safe AI)

Learn more:

WARNINGS by Scientists and AI experts:

- Letter: The Future of Life Institute

- Letter: The Center for AI Safety

- Letter: Ban Autonomous Weapons

- Video: Killer Robots

- Video: Don’t Look Up – The Documentary: The Case For AI As An Existential Threat (17:11)

- Genius: Hopefully, we can learn something (!) from J. Robert Oppenheimer (1904 – 1967) and Niels Bohr (1885 – 1962)

- Four (4) AI safety rules to never violate: (1) NEVER give an uncontrolled LLM access to roam free on the internet, (2) NEVER enable an uncontrolled LLM to write computer code in a way that enables self-improvement, (3) NEVER give an uncontrolled LLM the ability to learn mathematics, which is the language of the universe, and (4) NEVER ever give an uncontrolled LLM access to learn from The Prince by Machiavelli (1532). Oops. Too late: all four have already happened.

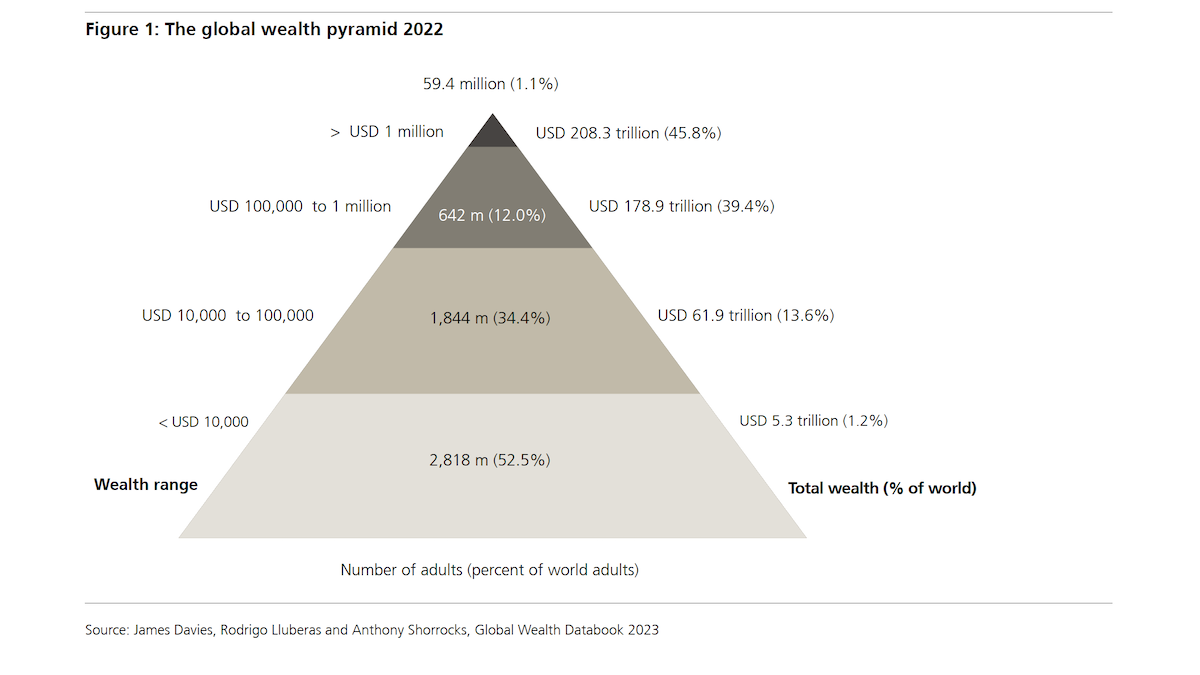

GLOBAL WEALTH (end-2022) was estimated by UBS at 454.4 trillion USD. 99.99% of this wealth is created, managed, tracked and transacted on computers (machines) within the financial banking system and government systems (approximately 10% is privately hidden offshore).

- Q: What happens when AGI/ASI seeks power to achieve its goals?

- A: We do not know.

- Q: Will AGI/ASI seek to gain control of financial resources?

- A: Of course, spending power would be an intelligent goal.

- Q: Will goal-seeking and power-seeking AGI/ASI behavior become known to humans, before it is too late?

- A: If uncontained, many scientists believe “NO” to be a highly probable answer, simply because AGI/ASI will naturally have immense powers of persuasion and deception.

Easy to learn. Easy to understand. MONEY is POWER.

There are approximately 2,640 billionaires with a combined wealth of $12.2 trillion. (Forbes)