My recent lecture at Berkeley and a vision for a “CERN for AI”

Merging frontier AI efforts into a single highly secure, safe, and transparent project would have many benefits, and should be explored more seriously than it has been to date.

Author’s note: Yes, this is very long. If you click on the thing on the left-hand side of the screen, it will show you where you are in the article. If you just want the gist of the idea, read “Short version of the plan” and the next three sections after that, stopping right when you get to “Longer version of the plan.” Those four shorter sections will only take about five minutes to read in total.

Introduction

I recently had the honor of giving a guest lecture in Berkeley’s Intro to AI course. The students had a lot of great questions, and I enjoyed this opportunity to organize my thoughts on what students most need to know about AI policy. Thanks so much to Igor Mordatch for inviting me and to Pieter Abbeel and everyone else involved for giving me such a warm welcome!

You can watch the lecture here and find the slides here. While the talk was primarily aimed at an audience that’s more familiar with the technical aspects of AI than the policy aspects, it may be of wider interest.

After the talk, I reflected a bit on what I could have done better, and thought of a few small things.1 But my main regret about this talk is that I didn't prepare more for the section on a “CERN for AI.” The idea behind such a project is to pool many countries’ and companies’ resources into a single (possibly physically decentralized) civilian AI development effort, with the purpose of ensuring that this technology is designed securely, safely, and in the global interest.

In this post, I will go into more detail on why I’m excited about the general idea of a CERN for AI, and I’ll describe one specific version of it that appeals to me. I’ll start with what I actually said in the talk and then go much deeper. I’m not totally sure that this is the right overall plan for frontier AI governance, but I think it deserves serious consideration.

My presentation and the Q+A

Here are the actual slides I presented – most are pretty self-explanatory but I’ll explain the one that isn’t.

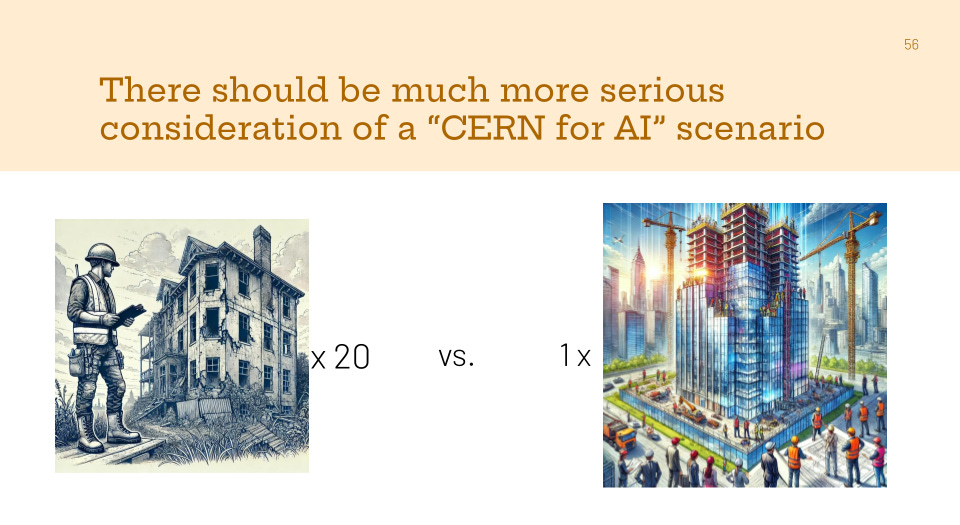

Explanation of the slide above: AI development and deployment today are kind of like a world where every building is made by a single person who doesn’t have all the expertise and time needed to do a good job, and where people aren’t allowed to team up, whether because it’s illegal to do so, because they don’t trust each other’s countries, etc. Oh, and a bunch of award-winning architects believe that a mistake in the construction process could kill everyone. By contrast, a group of people could build a much better and safer building by combining forces. I made the same basic point with a different metaphor in this blog post, referenced in the slide below. Both metaphors are simplistic, of course.

One of the first questions I got was why this is a controversial idea (I had included it in the “spicy takes” section of my talk). I gave a pretty mediocre answer, e.g., talking about how it could go against some parties’ interests, which was the wrong answer for a few reasons.2 The right answer, in retrospect, is that the biggest blocker to real support and action is the lack of a detailed plan that addresses natural concerns about feasibility, and in particular alignment with corporate and national incentives.

The idea of a “CERN for AI” has a low “policy readiness level.” I’m currently thinking about whether I should start an organization focused on fixing that and a few related issues (e.g., the need for skeptical third parties to be able to verify the safety properties of AI systems without compromising the security of those systems, a key challenge in arms control and other contexts).

Without a well-developed proposal, and with many obvious challenges (discussed below), of course people will be skeptical of something that would be a huge change from the status quo. So below, I’ll give you my own detailed sketch of how and why this could work. I’d love to hear what you think!

One scenario for a “CERN for AI”

Definitions and context

A CERN for AI (as I use the term) would develop AI for global and civilian uses, and over time would become the leading player in developing advanced AI capabilities.

Recall the slightly different definition I used in my slides above:

Pooling resources to build and operate centralized [AI] infrastructure in a transparent way, as a global scientific community, for civilian rather than military purposes.

This is inspired by the actual CERN. See this article for discussion of how the actual CERN works. In short, a bunch of countries built and jointly manage expensive scientific instruments.

In the introduction to this blog post, I used a different (but consistent) definition:

[Pooling] many countries’ and companies’ resources into a single (possibly physically decentralized) civilian AI development effort, with the purpose of ensuring that this technology is designed securely, safely, and in the global interest.

Each definition emphasizes different aspects of the idea. I don’t want to spend too much time on these various definitions of the overall concept, since the devil is in the details.

The devil in the details.

There are many things left unspecified in these definitions, including:

- The basic goal(s) of the project;

- The number and identity of countries involved;

- The incentives provided to different companies/countries to encourage participation;

- The institutional/sectoral status of the project (is it literally a government agency? A non-profit organization that governments give money to?);

- How big and small decisions are made about the direction of the project;

- Whether the initiative starts from scratch as a governmental or intergovernmental project, vs. building on the private industry that already exists;

- The extent to which the project consolidates AI development and deployment vs. serving as a complement to what already exists and continues to exist alongside it;

- The order of operations among different steps;

- How commercialization of the developed IP works;

- Etc.

Different choices may lead to very different outcomes, making it hard to reason about all possible “CERNs for AI.” Indeed, I’m not sure if I even want to keep using the term after this post. I currently slightly prefer it over the other term floating around, “Manhattan Project for AI,” which also could refer to various things. There are many other interesting analogies and metaphors, like the International Space Station, Intelsat, ITER, etc. There’s a bit more written about the CERN analogy, as well. I linked to some of the prior writing on this topic in this earlier blog post, and I’d suggest that people interested in a well-rounded sense of the topic check out those links, and especially this one.

I will not aim to exhaustively map all the possibilities here. My goal in this post is just to give a single, reasonably well-specified and reasonably well-motivated scenario. I won’t even fully defend it, but just want to encourage discussion of it and related ideas.

Lastly, I find “a/the CERN for AI” to be a clunky phrase, so I’ll sometimes just say “the collaboration” instead.

Without further ado, I’ll outline one version of a CERN for AI.

Short version of the plan

In my (currently) preferred version of a CERN for AI, an initially small but steadily growing coalition of companies and countries would:

- Collaborate on designing and building highly secure chips and datacenters;

- Collaborate on accelerating AI safety research and engineering, and agree on a plan for safely scaling AI well beyond human levels of intelligence while preserving alignment with human values;

- Safely scale AI well beyond human levels of intelligence while preserving alignment with human values;

- Distribute (distilled versions of) this intelligence around the world.

In practice, it won’t be quite this linear, which I’ll return to later, but this sequence of bullets conveys the gist of the idea.

Even shorter version of the plan

I haven’t yet decided how cringe this version is (let me know), but another way of summarizing the basic vision is “The 5-4-5 Plan”:

- First achieve level 5 model weight security (which is the highest; see here);

- Then figure out how to achieve level 4 AI safety (which is the highest; see here and here);

- Then build and distribute the benefits of level 5 AI capabilities (which is the highest; see here).

Meme summary

My various Lord of the Rings references lately might give the wrong impression regarding how into the series I am (I actually only started reading the book recently!). But I couldn’t resist this one:

The Lord of the Rings: Fellowship of the Ring, Wingnut Films.

The plan’s basic motivation and logic

In this section, I’ll give a general intuitive/normative motivation plus two practical motivations for my flavor of CERN for AI, and then justify the sequence of steps.

The intuitive/normative motivation is that superhumanly intelligent AI will be the most important technology ever created, affecting and potentially threatening literally everyone. Since everyone is exposed to the negative externalities created by this technological transition, they also deserve to be compensated for the risks they’re being exposed to, by benefiting from the technology being developed. This suggests that the most dangerous AI should not be developed by a private company making decisions based on profit, or a government pursuing its own national interests, but instead through some sort of cooperative arrangement among all those companies and governments, and this collaboration should be accountable to all of humanity rather than shareholders or citizens of just one country.

The first practical motivation is simply that we don’t yet know how to do all of this safely (keeping AI aligned with human values as it gets more intelligent) and securely (making sure it doesn’t get stolen and then misused in catastrophic ways), and the people who are good at these things are scattered across many organizations and countries. The best cybersecurity engineer and the best safety research engineer each currently help only one project, and it may not even be the one with the most advanced capabilities. This suggests the value of combining expertise across the various currently competing initiatives. Given the extreme stakes and the amount of work remaining to be done, we need “all hands on deck” to ensure that the most capable AI is built safely and securely, rather than splitting efforts.

Different people have different opinions about how hard these safety and security challenges will be, and how much AI will help us solve the challenges we run into along the way. But everyone agrees that we haven’t fully solved either one yet, and that there is a lot remaining to be done. People might reasonably disagree about whether pooling resources will in fact make things go better, but it seems pretty clear to me that we should at least seriously consider the possibility, given the extreme stakes.

The second practical motivation is that consolidating development in one project, one that is far ahead of the others, allows that project to take its time on safety when needed. Currently, there are many competing companies at fairly similar levels of capability, and they keep secrets not just for security’s sake but also to maintain a competitive advantage (which is hard to do anyway, given how many people move back and forth between companies and countries). This creates a very dangerous situation: we don’t know exactly how much safety and capability will trade off in the end, but it would be strongly preferable to have some breathing room. It’s hard to have such breathing room, and to sort out norms on safety and security, when everyone is competing intensely and the stakes are high. Having a very clear leader in AI capabilities also limits the risk of smaller projects cutting corners to catch up, since, given a huge lead, they couldn’t succeed even if they completely disregarded safety.

The basic logic behind the sequence of steps in my plan is “first things first”: it’s a bad idea to jump straight into pooling insights on AI capabilities and combining forces on computing power before doing the other things. Without strong security in place, any capabilities that were just advanced would likely get stolen, including by reckless actors. And without a clear plan on safety, things could go sideways very easily as AI capabilities increase dramatically. Therefore, my proposed sequence begins with laying solid foundations before “hitting the gas pedal” on AI capabilities.