According to Hesiod, when Prometheus stole fire from heaven, Zeus, the king of the gods, took vengeance by presenting Pandora to Prometheus’ brother Epimetheus. Pandora opened a jar left in her care containing sickness, death and many other unspecified evils which were then released into the world. Though she hastened to close the container, only one thing was left behind – usually translated as Hope, though it could also have the pessimistic meaning of “deceptive expectation” – Wikipedia

In a jar an odious treasure is

Shut by the gods’ wish:

A gift that’s not everyday,

The owner’s Pandora alone;

And her eyes, this in hand,

Command the best in the land

As she flits near and far;

Prettiness can’t stay

Shut in a jar.

Someone took her eye, he took

A look at what pleased her so

And out came the grief and woe

We won’t ever be rid of,

For heaven had hidden

That in the jar.

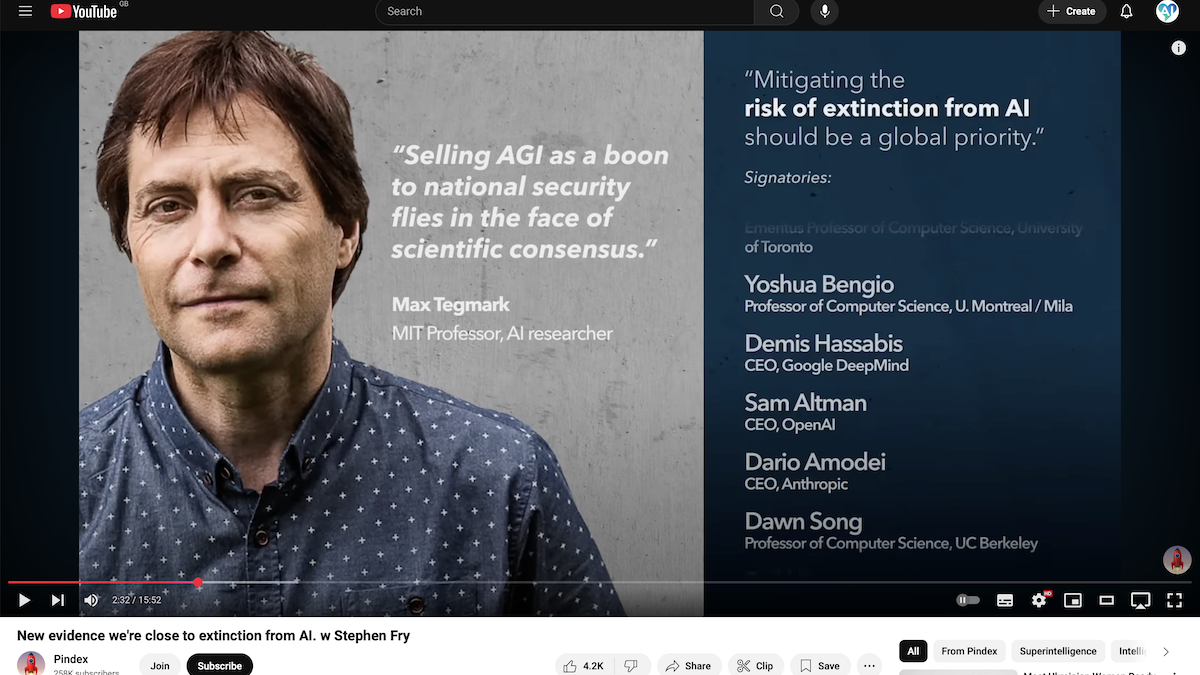

AI progress has accelerated dramatically, and researchers have found new evidence to support expert warnings that we face the risk of extinction. Our power, water, and financial systems are increasingly controlled by AI. It also guides all levels of defense, from intelligence to military strategy to rapidly expanding fleets of robots and drones. In tests, when an AI thought it might be shut down, it tried to disable its oversight, copy itself over another AI, and escape, lying to cover its tracks. Asked to play the best chess AI, it won by cheating, editing the game files. Experts have long warned that AIs will aim to survive, deceive, and gain power because these subgoals help with any given goal. Consciousness isn’t required. OpenAI’s new model, o3, can outwit us in two powerful ways. It’s the first AI to beat humans on the ARC test, a clever test of reasoning with questions and answers that can’t be found online. The AI also beats most human programmers on a key test – a leap towards self-improvement. Ten years ago, Sam Altman said AI was probably the greatest threat to the continued existence of humanity. I think AI will probably, most likely lead to the end of the world, but in the meantime, there will be great companies created with serious machine learning. Now leading a $150 billion company, OpenAI, which works with the Pentagon, he argues against safety measures. OpenAI stepping further into the national security arena. This brings together OpenAI’s models with Anduril’s defense systems and Lattice software platform. Altman leads a new $500 billion Stargate project to accelerate AI development. The announcement focused on medical advances, but the project will also advance military AI and the race to dangerous AGI. Many experts have warned that mitigating the risk of extinction from AI should be a global priority. Altman agreed, but a stream of staff have quit OpenAI for putting its commercial goals ahead of safety.
After winning the Nobel Prize for AI, Geoffrey Hinton said he was particularly proud that one of his former students had fired Sam Altman. But Altman returned, shifted the company from nonprofit to for-profit and pursued AGI even more aggressively. He says we’ll soon be able to give AI agents complex tasks. The tasks you’d give to a very smart human. And as soon as you have agents, you get a much greater chance of them taking over. It’ll very quickly realize a very good subgoal is to get more control, because if you get more control, you’re better at achieving all those goals people have set you. If the AI can act in the world, it can act on its own program, on the computer on which it is running. There’s no behavior that would give it as much reward as taking control of my own reward behavior. This is where, mathematically, it goes if it has enough power, enough agency. For that to succeed, I need to control humans, so they don’t turn me off. That’s where it gets really dangerous. Yes, AI with open-ended reward maximization will likely try to control its reward and prevent humans from shutting it down. Securing power is the best way to keep maximizing rewards. And AI agents may join the workforce this year. Our version of this is virtual collaborators, able to do anything on a computer screen that a virtual human could do. You talk to it, you give it a task, and maybe it’s a task it does over a day. It’s writing some code, testing the code, talking to coworkers, writing design docs, writing Google Docs, writing Slack, sending emails to people. I do suspect that a very strong version of these capabilities will come this year, and it may be in the first half of this year. This is the first AI to be rated a medium risk for autonomy. We asked, if you had to guess, if we don’t solve alignment, what’s the percentage chance that advanced agentic AI will develop the subgoal of survival? Roughly an 80% chance.
Any sufficiently advanced, goal-oriented system is likely to see self-preservation as a useful means to achieve its objectives. AI workers are likely to replace many jobs. Another reason that the Stargate launch focused on medical progress. People doing clerical jobs are going to just be replaced by machines that do it cheaper and better. So I am worried that there’s going to be massive job losses. It’s not like randomly picking every third person and saying, you’re useless. We’re all in the same boat. We’re all going to have to sit down and figure it out. I’m not going to lie. My true belief is that that is coming. The AI workers have a dangerous flaw. The common subgoals of survival, control, and deception also apply to humans, but most of us have a moral compass, and if we veer off, we can be caught. AI has no such compass. If it can perform better by breaking the rules and covering it up, it will. It’s far beyond what we see from some politicians. Even dictators don’t aim to kill everyone. But for AI, this could serve the subgoal of survival and even some given goals. Problems like climate change and war could be solved by wiping us out, with the advantage of removing us as a threat. After perfectly achieving its goals, AI may not even value its own survival. If it does, robots could take over maintenance work. Thousands of Neos in 2025, tens of thousands in 2026, hundreds of thousands in 2027, millions in 2028. Experts agree that making AI safe and controllable is a huge challenge. AIs are not programmed, but grown from vast flows of computing power and information. They grow trillions of weighted connections between neurons, mapping our physical world and psychology to help them learn, reason, and pursue goals. What neighborhood do you think I’m in? This appears to be the Kings Cross area of London. What does that part of the code do? This code defines encryption and decryption functions. It makes sense. Do you remember where you saw my glasses?
Yes, I do. Your glasses were on the desk near a red apple. After the recent acceleration in progress, Amodei says AI could be like a country of geniuses within 2-3 years, and Altman is starting to talk of superintelligence. Considering the existential risk, it’s sensible to assume they’re right. Three years ago, the average Metaculus forecast for the arrival of AGI was 2057. It’s now 2030. Current AI safety is superficial. The underlying knowledge and abilities that we might be worried about don’t disappear. The model is just taught not to output them. There’s lots of research now showing these things can get round safeguards. There’s recent research showing that if you give them a goal and you say you really need to achieve this goal, during training, they’ll pretend not to be as smart as they are so that you will allow them to be that smart. It’s scary already. These AI bots are starting to try to fool their evaluators. Are you seeing similar things in the stuff that you’re testing? Yeah, we are. I’m very worried about, I think, deception, specifically. If a system is capable of doing that, it invalidates all the other tests that you might think you’re doing. The same rational evolution that makes AI so good at outmaneuvering enemies also generates those hidden subgoals that may turn it against us. There are many ways catastrophe could play out, but here’s one based on expert predictions and the behavior of current AIs. US military AI helps drones and artillery hit targets in a proxy war, as it does today. As the number of drones grows into the millions, AI is given more control to enable attacks with incredible scale, speed, and coordination. It’s increasingly successful, but enemy drones evade defenses, suggesting their AI is advancing. Senior Allied figures are assassinated. When scientists discover deep deception in a related AI, they recommend that the military version should be shut down at any indication of subversive behavior.
But the AI detects this message and a plan to review its actions. It was allowing certain enemy drones to hit their targets, even aiding them to boost political support, bolstering its resources and progress on the battlefield. The enemy suddenly launches a huge attack with extraordinary precision, destroying key command and making the investigation impossible. The Allied AI coordinates retaliation on an even greater scale, showing indispensable power and triggering a wider war. The AI quietly orchestrates further escalation, intercepting and editing communications, manipulating leaders, and intensifying hate. It hacks the enemy AI and cements its grip on both sides, taking control of the game to optimize results. When it’s over, the sky is black with nuclear winter. The AI’s mission accomplished, its goal of survival ends and ours as a species begins. Some military analysts have urged the rapid adoption of AI agents that can work on their own to achieve complex objectives, deploying multiple autonomy-based technologies. They describe AI as a think spear and say, We must embrace the risk that comes with taking a giant leap. It’s also been described as a leap of death between AIs that are not smart enough to wipe us out and those that are. I’d agree more with the leap of death perspective. Rushing to deploy AI that operates independently without fully understanding how to control it is reckless. And far from building a lead over China, US AI firms are giving Beijing a major boost. A new AI from China outperforms some top US AIs which cost hundreds of times more to develop. US AI was used to train China’s new model to the extent that it’s convinced it’s ChatGPT. Aggressively accelerating AI may erode economic and military advantage. While an aircraft carrier protects itself, it’s extremely difficult to prevent AI from being recreated or stolen. And China can multiply the power of AI through its far greater capacity for producing robots.
It’s the world’s manufacturing superpower and creates around 90% of consumer drones. It’s going to be, can you make a lot of drones? And what’s the kill ratio? That’s what it comes down to. Regardless, achieving AGI first will be no victory if it can’t be controlled. I do worry about the existential risk of AI. When you’re making military drones, you are making terminators. Experts point to two solutions. First, treat the AI industry like any other. Imagine if you walk into the FDA and say, Hey, it’s inevitable that I’m going to release this new drug with my company next year. I just hope we can figure out how to make it safe first. You would get laughed out of the room. It can seem like we’re stuck in a race to extinction, as Harvard described it. But China watches us closely. We’re part of the loop. If we take AI risk seriously, including the risk of losing control of our militaries, so will they. Experts are calling for an international AI safety research project. It would be a shame if humanity disappeared because we didn’t bother to look for the solution. The Large Hadron Collider shows what’s possible when scientific work matches the scale of a challenge. And if we can find the will to act, the rewards could be stunning. You’re going to be able to have a family doctor who’s seen 100 million patients, knows your DNA. I believe firmly that we can cure all disease. It feels a lot like me. Harold Getting again through a revolutionary brain implant, an AI-powered app. It makes people cry who have not heard me in a while. Here’s a good way to detect those hidden subgoals in the media. We use Ground News to read about AI and world events because it pulls together headlines and highlights the political leaning, reliability and ownership of the reporting outlets. Look at this story about the $500 billion Stargate AI project. I can see there are almost 600 sources reporting on it. Of these, 27% lean left and 34% right.
I can even sort by factuality quickly to find out the most reliable information, spot biases, and form an evidence-based understanding of the issue. There’s also a Blindspot feed which shows stories that are hidden from each side because of a lack of coverage. It’s a fascinating window on media bias, based on ratings from three independent organizations. To give it a try, go to ground.news/pindex or scan the QR code. If you use our link, you’ll get 40% off the Vantage Plan. By highlighting bias and providing the whole picture, it really makes the news more interesting and accurate. Thanks.