The Alignment Problem from a Deep Learning Perspective

Richard Ngo, Lawrence Chan, Sören Mindermann

Abstract

In the coming years or decades, artificial general intelligence (AGI) may surpass human capabilities at many critical tasks. We argue that, without substantial effort to prevent it, AGIs could learn to pursue goals that are in conflict (i.e., misaligned) with human interests. If trained like today’s most capable models, AGIs could learn to act deceptively to receive higher reward, learn misaligned internally-represented goals that generalize beyond their fine-tuning distributions, and pursue those goals using power-seeking strategies. AGIs with these properties would be difficult to align and may strategically appear aligned even when they are not. In this revised paper, we expand our review of emerging evidence for these properties to include more direct empirical observations published up to early 2025. Finally, we briefly outline how the deployment of misaligned AGIs might irreversibly undermine human control over the world, and we review research directions aimed at preventing this outcome.

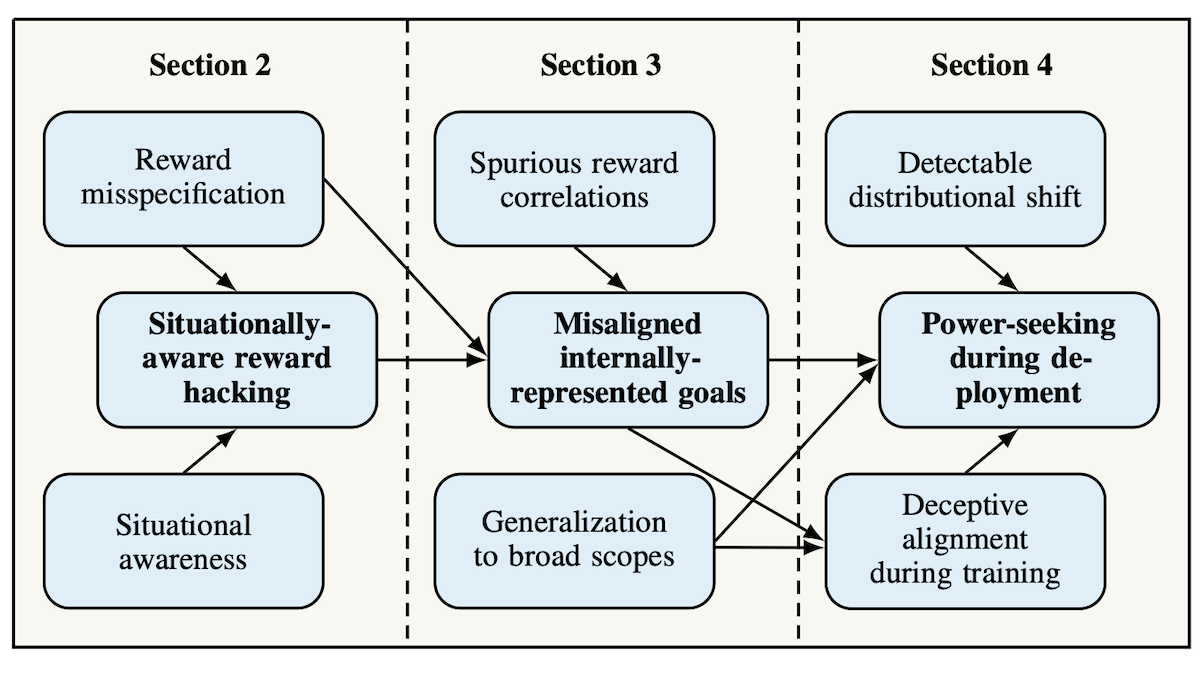

Figure 1: Overview of our paper. Arrows indicate contributing factors. In Section 2, we describe why we expect situationally-aware reward hacking to occur following reward misspecification and the development of situational awareness. In Section 3, we describe how neural net policies could learn to plan towards internally-represented goals which generalize to broad scopes, and how several factors may contribute towards those goals being misaligned. In Section 4, we describe how broadly-scoped misaligned goals could lead to unwanted power-seeking behavior during deployment, and why distributional shifts and deceptive alignment could make this problem hard to address during training.

6 Conclusion

We have grounded our analysis of large-scale risks from misaligned AGI in the deep learning literature. We argue that if AGI-level policies are trained using a currently popular set of techniques, those policies may learn to reward hack in situationally-aware ways, develop misaligned internally-represented goals (in part caused by reward hacking), and then carry out undesirable power-seeking strategies in pursuit of those goals. These properties could make misalignment in AGIs difficult to recognize and address. Although our arguments draw on empirical results, some caution is warranted, since many of our concepts remain abstract and informal. Nevertheless, we believe this paper constitutes a much-needed starting point, and we hope it will spur further analysis. Future work should formalize and empirically test the above hypotheses and extend the analysis to other possible training settings (such as lifelong learning), possible solution approaches (such as those in Section 5), or combinations of deep learning with other paradigms. Reasoning about these topics is difficult, but the stakes are high, and we cannot justify disregarding or postponing the work.

Updated our 2022 paper on the alignment problem (ICLR). More direct evidence now supports our hypotheses: alignment faking, manipulative and obfuscated reward hacking, situational awareness, internal goals and power-seeking. Key updates below. https://t.co/WiluDwr1EC

— Sören Mindermann (@sorenmind) March 11, 2025